Indexing

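Yorick's indexing syntax makes it convenient to select and rearrange elements of N-dimensional arrays. Subscripts start at 1 and the first subscript varies fastest, so the literal [[1,2,3],[4,5,6]] is a 3-by-2 array: x(,1) is [1,2,3] and x(,2) is [4,5,6].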

Several elements can be accessed at once with a range start:stop or start:stop:step. Indices start at 1, and the stop index is included:

    > x=[1,2,3,4,5,6];
    > x
    [1,2,3,4,5,6]
    > x(3:6)
    [3,4,5,6]
    > x(3:6:2)
    [3,5]
    > x(6:3:-2)
    [6,4]
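The following Python sketch models x(start:stop:step) with 1-based indices and an inclusive stop, as a way to check the range rule. Python is used only because it is easy to run. `yorick_range` is a hypothetical helper, not Yorick's implementation, and it handles positive indices only.

```python
# Hypothetical helper modelling Yorick's x(start:stop:step); not part of Yorick.
def yorick_range(x, start, stop, step=1):
    """Return x(start:stop:step): 1-based indices, stop included.

    Only positive indices are handled in this sketch.
    """
    if step == 0:
        raise ValueError("step must be nonzero")
    # keep going while i has not passed stop in the direction of step
    in_range = (lambda i: i <= stop) if step > 0 else (lambda i: i >= stop)
    out = []
    i = start
    while in_range(i):
        out.append(x[i - 1])  # shift to Python's 0-based indexing
        i += step
    return out

x = [1, 2, 3, 4, 5, 6]
print(yorick_range(x, 3, 6))      # [3, 4, 5, 6]
print(yorick_range(x, 3, 6, 2))   # [3, 5]
print(yorick_range(x, 6, 3, -2))  # [6, 4]
```

The Python slice equivalent to x(3:6) is x[2:6]. Only the start shifts down by one, because Python already excludes the stop index.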

- Arbitrary elements

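Each subscript can also be a list of indices, taken in any order. The function where() returns the indices of the true (nonzero) elements of its argument, counted as if the array were 1-D. A single list subscript indexes the array the same way:

    > x=[[1,2,3],[4,5,6]]
    > x
    [[1,2,3],[4,5,6]]
    > x([2,1],[1,2])
    [[2,1],[5,4]]
    > list=where(1<x)
    > list
    [2,3,4,5,6]
    > y=x(list)
    > y
    [2,3,4,5,6]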
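The Python sketch below models index-list subscripts and where(). It assumes that a 2-D Yorick literal is stored as the same nested list, so Yorick's x(i,j) is x[j-1][i-1]. It also assumes that a single subscript addresses the array in storage order. The helpers `get`, `index_lists`, `flatten`, `where` and `take` are hypothetical illustrations; the `where` here is not Yorick's builtin.

```python
# Hypothetical helpers modelling Yorick semantics in plain Python; not part of Yorick.
# A 2-D Yorick literal [[1,2,3],[4,5,6]] is stored as the same nested list,
# so Yorick's x(i, j) (1-based, first index fastest) is x[j - 1][i - 1] here.

def get(a, i, j):
    """Element a(i, j) with Yorick's 1-based subscripts."""
    return a[j - 1][i - 1]

def index_lists(a, rows, cols):
    """Model of a(rows, cols) when both subscripts are index lists."""
    return [[get(a, i, j) for i in rows] for j in cols]

def flatten(a):
    """All elements in storage order: the first index varies fastest."""
    return [v for column in a for v in column]

def where(flat_mask):
    """1-based positions of the true elements of a 1-D mask."""
    return [k + 1 for k, v in enumerate(flat_mask) if v]

def take(a, index_list):
    """Model of a(list) with a single subscript: a is indexed as if 1-D."""
    flat = flatten(a)
    return [flat[k - 1] for k in index_list]

x = [[1, 2, 3], [4, 5, 6]]
print(index_lists(x, [2, 1], [1, 2]))  # [[2, 1], [5, 4]]
mask = [1 < v for v in flatten(x)]     # model of 1<x
lst = where(mask)
print(lst, take(x, lst))               # [2, 3, 4, 5, 6] [2, 3, 4, 5, 6]
```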

- Pseudo-index

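Like "threading" in PDL and "broadcasting" in NumPy, Yorick can combine arrays of different shapes. A pseudo-index "-" inserts a new dimension of length 1 at that position, and a dimension of length 1 is stretched to match the other operand: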

    > x=[1,2,3]
    > x
    [1,2,3]
    > y=[[1,2,3],[4,5,6]]
    > y
    [[1,2,3],[4,5,6]]
    > y(-,)
    [[[1],[2],[3]],[[4],[5],[6]]]
    > x(-,)
    [[1],[2],[3]]
    > x(,-)
    [[1,2,3]]
    > x(,-)/y
    [[1,1,1],[0,0,0]]
    > y=[[1.,2,3],[4,5,6]]
    > x(,-)/y
    [[1,1,1],[0.25,0.4,0.5]]
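Here is a small Python model of the pseudo-index and of length-1 stretching for 2-D arrays. It assumes the same nested-list layout as the Yorick literals and C-style truncation toward zero for integer division. The names `pseudo_first`, `pseudo_last`, `c_div` and `broadcast` are hypothetical.

```python
# Hypothetical helpers modelling Yorick's pseudo-index and broadcasting; not part of Yorick.
# 2-D arrays use the Yorick literal layout: a[j][i] holds Yorick's a(i+1, j+1).

def pseudo_first(x):
    """Model of x(-,): a length-n vector becomes a 1-by-n array."""
    return [[v] for v in x]

def pseudo_last(x):
    """Model of x(,-): a length-n vector becomes an n-by-1 array."""
    return [list(x)]

def c_div(p, q):
    """Integer division truncates toward zero (as in C); otherwise true division."""
    if isinstance(p, int) and isinstance(q, int):
        quotient = abs(p) // abs(q)
        return quotient if (p < 0) == (q < 0) else -quotient
    return p / q

def broadcast(op, a, b):
    """Apply op elementwise to two 2-D arrays, stretching any dimension of length 1."""
    na1, na2 = len(a[0]), len(a)
    nb1, nb2 = len(b[0]), len(b)
    n1, n2 = max(na1, nb1), max(na2, nb2)
    for m, n in ((na1, n1), (nb1, n1), (na2, n2), (nb2, n2)):
        if m not in (1, n):
            raise ValueError("arrays are not conformable")
    return [[op(a[j if na2 > 1 else 0][i if na1 > 1 else 0],
                b[j if nb2 > 1 else 0][i if nb1 > 1 else 0])
             for i in range(n1)]
            for j in range(n2)]

x = [1, 2, 3]
print(pseudo_first(x))  # [[1], [2], [3]]
print(pseudo_last(x))   # [[1, 2, 3]]
print(broadcast(c_div, pseudo_last(x), [[1, 2, 3], [4, 5, 6]]))  # [[1, 1, 1], [0, 0, 0]]
print(broadcast(c_div, pseudo_last(x), [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]))
# [[1.0, 1.0, 1.0], [0.25, 0.4, 0.5]]
```

Yorick promotes the whole literal [[1.,2,3],[4,5,6]] to double. The Python version therefore has to write every entry as a float to get the same result.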

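- Rubber index

The rubber index ".." stands for zero or more dimensions of the array. This lets the other subscripts refer to the leading or trailing dimensions without listing the ones in between: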

    > x=[[1,2,3],[4,5,6]]
    > x
    [[1,2,3],[4,5,6]]
    > x(..,1)
    [1,2,3]
    > x(1,..)
    [1,4]
    > x(2,..,2)
    5
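The rubber index can be modelled as a rewrite step that replaces ".." with as many full-range subscripts as the array has unused dimensions. The Python sketch below does that rewrite and then indexes a 2-D nested-list array, with None meaning a full range. `expand_rubber` and `index2d` are hypothetical helpers for illustration only.

```python
# Hypothetical helpers modelling Yorick's ".." rubber index; not part of Yorick.
RUBBER = ".."

def expand_rubber(subscripts, rank):
    """Replace one '..' by enough full-range subscripts (None) to reach rank."""
    subscripts = list(subscripts)
    if RUBBER not in subscripts:
        return subscripts
    k = subscripts.index(RUBBER)
    missing = rank - (len(subscripts) - 1)
    if missing < 0:
        raise ValueError("more subscripts than dimensions")
    return subscripts[:k] + [None] * missing + subscripts[k + 1:]

def index2d(a, i, j):
    """a(i, j) for a 2-D nested literal (a[j-1][i-1]); None selects a whole dimension."""
    rows = range(1, len(a[0]) + 1) if i is None else [i]
    cols = range(1, len(a) + 1) if j is None else [j]
    picked = [[a[c - 1][r - 1] for r in rows] for c in cols]
    if j is not None:
        picked = picked[0]                 # a scalar j drops dimension 2
        return picked[0] if i is not None else picked
    if i is not None:
        return [col[0] for col in picked]  # a scalar i drops dimension 1
    return picked

x = [[1, 2, 3], [4, 5, 6]]
for subs in (["..", 1], [1, ".."], [2, "..", 2]):
    i, j = expand_rubber(subs, 2)
    print(subs, "->", index2d(x, i, j))
# prints:
# ['..', 1] -> [1, 2, 3]
# [1, '..'] -> [1, 4]
# [2, '..', 2] -> 5
```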
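
"*" is a second kind of rubber index. It stands for the dimensions it replaces and collapses them into a single dimension, so a slice (sub-array) is returned as a vector. For example, x(*) returns all elements of x as a 1-D array in storage order.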

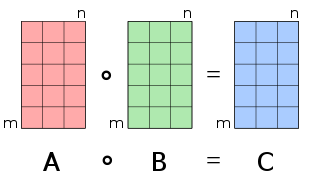
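This sketch assumes the nested-list layout of the Yorick literals, where the outermost list is the last Yorick dimension. Under that assumption, "*" amounts to flattening in storage order. The helpers `collapse` and `collapse_leading` below are hypothetical Python models of x(*) and a(*,k), not Yorick code.

```python
# Hypothetical helpers modelling Yorick's "*" rubber index; not part of Yorick.
# Arrays use the Yorick literal layout: the outermost list is the LAST dimension.

def collapse(a):
    """Model of a(*): every element as a vector, first index varying fastest."""
    if not isinstance(a, list):
        return [a]
    return [v for sub in a for v in collapse(sub)]

def collapse_leading(a, k):
    """Model of a(*,k): fix the last subscript, collapse all earlier dimensions."""
    return collapse(a[k - 1])

x = [[1, 2, 3], [4, 5, 6]]
print(collapse(x))                        # [1, 2, 3, 4, 5, 6]
a = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]  # a 2-by-2-by-2 array
print(collapse_leading(a, 2))             # [5, 6, 7, 8]
```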

- Tensor multiplication

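In Yorick, tensor multiplication (an inner product over chosen dimensions) is written by marking the dimension to sum over with "+" in each operand. For example,

    P(,+,)*Q(,+)

means

$$R(i,k,l) = \sum_j P(i,j,k)\,Q(l,j),$$

that is, the second dimension of P is summed against the second dimension of Q. The result keeps the remaining dimensions of P followed by the remaining dimensions of Q. With two 2-D arrays: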

    > x=[[1,2,3],[4,5,6]]
    > x
    [[1,2,3],[4,5,6]]
    > y=[[7,8],[9,10],[11,12]]
    > x(,+)*y(+,)
    [[39,54,69],[49,68,87],[59,82,105]]
    > x(+,)*y(,+)
    [[58,139],[64,154]]
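The contraction rule can be checked with a short Python model for 2-D arrays, again assuming the nested-list layout of the Yorick literals. `tensor(x, xdim, y, ydim)` is a hypothetical helper. It sums over dimension xdim of x and dimension ydim of y, and it puts x's remaining dimension first and y's second.

```python
# Hypothetical model of Yorick's "+" contraction for 2-D arrays; not part of Yorick.
# Arrays use the Yorick literal layout: a[j-1][i-1] holds Yorick's a(i, j).

def dims(a):
    """Yorick dimension lengths (n1, n2) of a 2-D nested literal."""
    return len(a[0]), len(a)

def get(a, i, j):
    """Element a(i, j) with Yorick's 1-based subscripts."""
    return a[j - 1][i - 1]

def tensor(x, xdim, y, ydim):
    """Sum over dimension xdim of x and ydim of y (each 1 or 2)."""
    nx, ny = dims(x), dims(y)
    n = nx[xdim - 1]
    if n != ny[ydim - 1]:
        raise ValueError("summed dimensions have different lengths")
    xfree, yfree = nx[2 - xdim], ny[2 - ydim]  # lengths of the unsummed dimensions

    def xat(free, s):
        return get(x, s, free) if xdim == 1 else get(x, free, s)

    def yat(free, s):
        return get(y, s, free) if ydim == 1 else get(y, free, s)

    # result(p, q): x's free index p first, y's free index q second
    return [[sum(xat(p, s) * yat(q, s) for s in range(1, n + 1))
             for p in range(1, xfree + 1)]
            for q in range(1, yfree + 1)]

x = [[1, 2, 3], [4, 5, 6]]
y = [[7, 8], [9, 10], [11, 12]]
print(tensor(x, 2, y, 1))  # x(,+)*y(+,) -> [[39, 54, 69], [49, 68, 87], [59, 82, 105]]
print(tensor(x, 1, y, 2))  # x(+,)*y(,+) -> [[58, 139], [64, 154]]
```

Moving the "+" to a different dimension of each operand changes both which products are summed and the shape of the result. That is why the two products above differ.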