Related Research Articles

The Carl-Gustaf Rossby Research Medal is the highest award for atmospheric science of the American Meteorological Society. It is presented to individual scientists, who receive a medal. It is named in honor of meteorology and oceanography pioneer Carl-Gustaf Rossby, who was also its second (1953) recipient.

Desert Research Institute (DRI) is the nonprofit research campus of the Nevada System of Higher Education (NSHE), the organization that oversees all publicly supported higher education in the U.S. state of Nevada, and a sister property of the University of Nevada, Reno (UNR). At DRI, approximately 500 research faculty and support staff conduct more than $50 million in environmental research each year. DRI's environmental research programs are divided into three core divisions and two interdisciplinary centers. The institute's Nevada Medal, established in 1988 and sponsored by AT&T, recognizes "outstanding achievement in science and engineering".

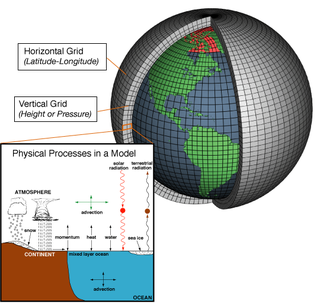

Numerical weather prediction (NWP) uses mathematical models of the atmosphere and oceans to predict the weather based on current weather conditions. Though first attempted in the 1920s, it was not until the advent of computer simulation in the 1950s that numerical weather predictions produced realistic results. A number of global and regional forecast models are run in different countries worldwide, using current weather observations relayed from radiosondes, weather satellites and other observing systems as inputs.

This is a list of meteorology topics. The terms relate to meteorology, the interdisciplinary scientific study of the atmosphere that focuses on weather processes and forecasting.

The Air Resources Laboratory (ARL) is an air quality and climate laboratory in the Office of Oceanic and Atmospheric Research (OAR) which is an operating unit within the National Oceanic and Atmospheric Administration (NOAA) in the United States. It is one of seven NOAA Research Laboratories (RLs). In October 2005, the Surface Radiation Research Branch of the ARL was merged with five other NOAA labs to form the Earth System Research Laboratory.

The National Severe Storms Laboratory (NSSL) is a National Oceanic and Atmospheric Administration (NOAA) weather research laboratory under the Office of Oceanic and Atmospheric Research. It is one of seven NOAA Research Laboratories (RLs).

Atmospheric dispersion modeling is the mathematical simulation of how air pollutants disperse in the ambient atmosphere. It is performed with computer programs that include algorithms to solve the mathematical equations that govern the pollutant dispersion. The dispersion models are used to estimate the downwind ambient concentration of air pollutants or toxins emitted from sources such as industrial plants, vehicular traffic or accidental chemical releases. They can also be used to predict future concentrations under specific scenarios. Therefore, they are the dominant type of model used in air quality policy making. They are most useful for pollutants that are dispersed over large distances and that may react in the atmosphere. For pollutants with very high spatio-temporal variability, and for epidemiological studies, statistical land-use regression models are also used.
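The summary above does not say which equations a given model solves. As an illustration only, one classic steady-state formulation is the Gaussian plume for a continuous point source. The sketch below assumes that formulation, with total reflection at the ground; the function name and the spread parameters `sigma_y` and `sigma_z` are hypothetical inputs that a real model would derive from downwind distance and atmospheric stability.

```python
import math

def gaussian_plume(q, u, y, z, h, sigma_y, sigma_z):
    """Steady-state concentration (g/m^3) at crosswind offset y and height z.

    q: emission rate (g/s); u: wind speed (m/s); h: effective release height (m);
    sigma_y, sigma_z: plume spread (m) at the downwind distance of interest.
    All names are illustrative assumptions, not taken from any specific model.
    """
    crosswind = math.exp(-y**2 / (2 * sigma_y**2))
    # The second exponential is an image source that models ground reflection.
    vertical = (math.exp(-(z - h)**2 / (2 * sigma_z**2))
                + math.exp(-(z + h)**2 / (2 * sigma_z**2)))
    return q / (2 * math.pi * u * sigma_y * sigma_z) * crosswind * vertical

# Ground-level concentration on the plume centerline for a 50 m release height.
c = gaussian_plume(q=100.0, u=5.0, y=0.0, z=0.0, h=50.0, sigma_y=80.0, sigma_z=40.0)
print(f"{c:.3e} g/m^3")
```

Because the spread parameters are passed in directly, the sketch stays independent of any particular stability scheme.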

CLaMS is a modular chemistry transport model (CTM) system developed at Forschungszentrum Jülich, Germany. CLaMS was first described by McKenna et al. (2000a,b) and was expanded into three dimensions by Konopka et al. (2004). CLaMS has been employed in the European field campaigns THESEO, EUPLEX, TROCCINOX, SCOUT-O3, and RECONCILE, with a focus on simulating ozone depletion and water vapour transport.

CALPUFF is an advanced, integrated Lagrangian puff modeling system for the simulation of atmospheric pollution dispersion. It is distributed by the Atmospheric Studies Group at TRC Solutions.

The NAME atmospheric pollution dispersion model was first developed by the UK's Met Office in 1986 after the nuclear accident at Chernobyl, which demonstrated the need for a method that could predict the spread and deposition of radioactive gases or material released into the atmosphere.

The Weather Research and Forecasting (WRF) model is a numerical weather prediction (NWP) system designed to serve both atmospheric research and operational forecasting needs. NWP refers to the simulation and prediction of the atmosphere with a computer model, and WRF is a set of software for this. WRF features two dynamical (computational) cores, a data assimilation system, and a software architecture allowing for parallel computation and system extensibility. The model serves a wide range of meteorological applications across scales ranging from meters to thousands of kilometers.

Air pollution dispersion is the distribution of air pollution into the atmosphere. Air pollution is the introduction of particulates, biological molecules, or other harmful materials into Earth's atmosphere, causing disease or death in humans and damage to other living organisms such as food crops, or to the natural or built environment. Air pollution may come from anthropogenic or natural sources. Dispersion refers to what happens to the pollution during and after its introduction; understanding this may help in identifying and controlling it. Air pollution dispersion has become a focus for environmental conservationists and government environmental protection agencies in many countries working on air pollution control.

Eddy diffusion, eddy dispersion, multipath, or turbulent diffusion is any diffusion process by which substances are mixed in the atmosphere or in any fluid system due to eddy motion. In other words, it is mixing caused by eddies that can vary in size from the small Kolmogorov microscales to subtropical gyres. The size of eddies decreases as kinetic energy is lost, until they become small enough for viscosity to take over, at which point the kinetic energy dissipates into heat. Turbulence, or turbulent flow, is what causes eddy diffusion to occur. The theory of eddy diffusion was developed by Sir Geoffrey Ingram Taylor.
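A common simplification, assumed here rather than taken from the summary above, treats eddy mixing like molecular diffusion with a much larger coefficient, the eddy diffusivity K, so that a concentration profile obeys dc/dt = K d²c/dz². The sketch below steps that equation forward with an explicit finite-difference scheme; the function name, grid spacing, and value of K are hypothetical.

```python
def diffuse(c, k, dz, dt, steps):
    """Advance dc/dt = K * d2c/dz2 with an explicit scheme and zero-flux boundaries.

    c: concentrations on a uniform grid; k: eddy diffusivity (m^2/s);
    dz: grid spacing (m); dt: time step (s). All values are illustrative.
    """
    r = k * dt / dz**2
    if r > 0.5:
        raise ValueError("unstable step: need K*dt/dz^2 <= 0.5")
    c = list(c)
    for _ in range(steps):
        # Mirror the edge values so that nothing diffuses through the boundaries.
        padded = [c[0]] + c + [c[-1]]
        c = [padded[i + 1] + r * (padded[i] - 2 * padded[i + 1] + padded[i + 2])
             for i in range(len(c))]
    return c

# A tracer released in the middle layer spreads to its neighbours over time.
profile = diffuse([0.0, 0.0, 1.0, 0.0, 0.0], k=10.0, dz=100.0, dt=100.0, steps=20)
print([round(v, 3) for v in profile])
```

The stability check reflects the usual limit for this explicit scheme; a larger time step would need an implicit method instead.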

David J. Lary is a British-American atmospheric scientist interested in applying computational and information systems to facilitate discovery and decision support in Earth system science. His main contributions have been to highlight the role of carbonaceous aerosols in atmospheric chemistry and of heterogeneous bromine reactions, to employ chemical data assimilation for satellite validation, and to use machine learning for remote sensing applications. He is the author of AutoChem, NASA release software that constitutes an automatic computer code generator and documenter for chemically reactive systems. It was designed primarily for modeling atmospheric chemistry, and in particular, for chemical data assimilation. He is the author of more than 150 publications, which have received more than 3,850 citations.

The Global Environmental Multiscale Model (GEM), often known as the CMC model in North America, is an integrated forecasting and data assimilation system developed in the Recherche en Prévision Numérique (RPN), Meteorological Research Branch (MRB), and the Canadian Meteorological Centre (CMC). Along with the NWS's Global Forecast System (GFS), which runs out to 16 days, the ECMWF's Integrated Forecast System (IFS), which runs out to 10 days, the Naval Research Laboratory's Navy Global Environmental Model (NAVGEM), which runs out to eight days, and the UK Met Office's Unified Model, which runs out to six days, it is one of the five predominant synoptic scale medium-range models in general use.

RIMPUFF is a local-scale puff diffusion model developed by Risø DTU National Laboratory for Sustainable Energy, Denmark. It is an emergency response model to help emergency management organisations deal with chemical, nuclear, biological and radiological releases to the atmosphere.

ARGOS is a Decision Support System (DSS) for crisis and emergency management for incidents with chemical, biological, radiological, and nuclear (CBRN) releases.

The history of numerical weather prediction considers how the use of current weather conditions as input into mathematical models of the atmosphere and oceans, to predict the weather and future sea state, has changed over the years. Though first attempted manually in the 1920s, it was not until the advent of the computer and computer simulation that computation time was reduced to less than the forecast period itself. ENIAC was used to create the first forecasts via computer in 1950, and over the years more powerful computers have been used to increase the size of initial datasets as well as include more complicated versions of the equations of motion. The development of global forecasting models led to the first climate models. The development of limited area (regional) models facilitated advances in forecasting the tracks of tropical cyclones as well as air quality in the 1970s and 1980s.

A prognostic chart is a map displaying the likely weather forecast for a future time. Such charts are generated by atmospheric models as output from numerical weather prediction and contain a variety of information such as temperature, wind, precipitation and weather fronts. They can also indicate derived atmospheric fields such as vorticity, stability indices, or frontogenesis. Forecast errors need to be taken into account and can be determined either via absolute error, or by considering persistence and absolute error combined.
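The two error measures mentioned above can be illustrated with a small sketch. It assumes one plausible reading of "persistence and absolute error combined": a skill score that compares the forecast's mean absolute error with that of a persistence forecast, which simply carries the initial conditions forward. The function names and sample temperatures are hypothetical.

```python
def mean_absolute_error(forecast, observed):
    """Average magnitude of the forecast-minus-observation differences."""
    return sum(abs(f - o) for f, o in zip(forecast, observed)) / len(observed)

def skill_vs_persistence(forecast, observed, initial):
    """1 - MAE(forecast) / MAE(persistence); positive means the forecast wins.

    `initial` holds the values at forecast start, used unchanged as the
    persistence forecast. Undefined if persistence happens to be perfect.
    """
    mae_forecast = mean_absolute_error(forecast, observed)
    mae_persistence = mean_absolute_error(initial, observed)
    return 1 - mae_forecast / mae_persistence

# Hypothetical temperatures (deg C) at three stations.
observed = [10.0, 12.0, 15.0]
forecast = [11.0, 12.0, 13.0]
initial = [8.0, 8.0, 8.0]
print(mean_absolute_error(forecast, observed))            # 1.0
print(skill_vs_persistence(forecast, observed, initial))  # about 0.77
```

A score near zero means the model adds little over assuming that the weather does not change.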
