Examples

The examples here illustrate different aspects of dfns. Additional examples are found in the cited articles. [8] [9] [10]

Default left argument

The function {⍺+0j1×⍵} adds ⍺ to 0j1 (i or $\sqrt{-1}$) times ⍵.

          3 {⍺+0j1×⍵} 4
    3J4
          ∘.{⍺+0j1×⍵}⍨ ¯2+⍳5
    ¯2J¯2 ¯2J¯1 ¯2 ¯2J1 ¯2J2
    ¯1J¯2 ¯1J¯1 ¯1 ¯1J1 ¯1J2
     0J¯2  0J¯1  0  0J1  0J2
     1J¯2  1J¯1  1  1J1  1J2
     2J¯2  2J¯1  2  2J1  2J2

The significance of this function can be seen as follows:

Complex numbers can be constructed as ordered pairs of real numbers, similar to how integers can be constructed as ordered pairs of natural numbers and rational numbers as ordered pairs of integers. For complex numbers, {⍺+0j1×⍵} plays the same role as - for integers and ÷ for rational numbers. [11] : §8

Moreover, just as monadic -⍵ ⇔ 0-⍵ (negate) and monadic ÷⍵ ⇔ 1÷⍵ (reciprocal), a monadic definition of the function is useful. It is effected by specifying a default value of 0 for ⍺: if j←{⍺←0 ⋄ ⍺+0j1×⍵}, then j ⍵ ⇔ 0 j ⍵ ⇔ 0+0j1×⍵.

          j←{⍺←0 ⋄ ⍺+0j1×⍵}

          3 j 4 ¯5.6 7.89
    3J4 3J¯5.6 3J7.89

          j 4 ¯5.6 7.89
    0J4 0J¯5.6 0J7.89

          sin← 1∘○
          cos← 2∘○
          Euler← {(*j ⍵) = (cos ⍵) j (sin ⍵)}

          Euler (¯0.5+?10⍴0) j (¯0.5+?10⍴0)
    1 1 1 1 1 1 1 1 1 1

The last expression illustrates Euler's formula on ten random numbers with real and imaginary parts in the interval $(-0.5, 0.5)$.
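For readers more familiar with other languages, the idea of a default left argument can be sketched in Python. This is only an illustration and not part of the cited sources: the optional parameter `a` stands in for ⍺, the names `j` and `euler` are chosen to mirror the dfns, and `cmath.isclose` stands in for APL's tolerant equality.

```python
import cmath
import random

def j(w, a=0):
    """Return a + i*w; `a` plays the role of the defaulted left argument."""
    return a + 1j * w

def euler(z):
    """Check Euler's formula exp(i z) = cos z + i sin z at a complex z."""
    return cmath.isclose(cmath.exp(j(z)), j(cmath.sin(z), cmath.cos(z)))

# Ten random complex numbers with real and imaginary parts in [-0.5, 0.5).
samples = [j(random.random() - 0.5, random.random() - 0.5) for _ in range(10)]

print(j(4, 3))                          # two-argument use
print(j(4))                             # one-argument use, `a` defaults to 0
print(all(euler(z) for z in samples))
```

Calling `j` with one argument corresponds to the monadic use of the dfn, and with two arguments to the dyadic use.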

Single recursion

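The ternary construction of the Cantor set starts with the interval [0,1] and at each stage removes the middle third from each remaining subinterval:

$$[0,1] \to \left[0,\tfrac{1}{3}\right] \cup \left[\tfrac{2}{3},1\right] \to \left[0,\tfrac{1}{9}\right] \cup \left[\tfrac{2}{9},\tfrac{1}{3}\right] \cup \left[\tfrac{2}{3},\tfrac{7}{9}\right] \cup \left[\tfrac{8}{9},1\right] \to \cdots$$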

The Cantor set of order ⍵ defined as a dfn: [11] : §2.5

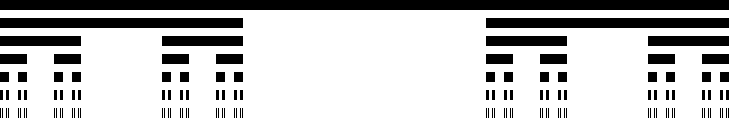

Cantor←{0=⍵:,1⋄,101∘.∧∇⍵-1}Cantor01Cantor1101Cantor2101000101Cantor3101000101000000000101000101Cantor 0 to Cantor 6 depicted as black bars:
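The single recursion in Cantor can be mirrored in Python as a rough sketch. The function name `cantor` and the list-of-bits representation are assumptions made for illustration; the nested comprehension plays the part of the flattened outer product of 1 0 1 with the previous stage.

```python
def cantor(n):
    """Bit list of length 3**n; 1 marks a subinterval that survives stage n."""
    if n == 0:
        return [1]
    prev = cantor(n - 1)
    # Outer "and" of (1, 0, 1) with the previous stage, flattened row by row.
    return [a & b for a in (1, 0, 1) for b in prev]

for n in range(4):
    print(" ".join(map(str, cantor(n))))
```

Each stage triples the length of the bit list and keeps the pattern of the previous stage in the first and last thirds only.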

The function sieve ⍵ computes a bit vector of length ⍵ so that bit i (for 0≤i and i<⍵) is 1 if and only if i is a prime. [10] : §46

    sieve←{
      4≥⍵:⍵⍴0 0 1 1
      r←⌊0.5*⍨n←⍵
      p←2 3 5 7 11 13 17 19 23 29 31 37 41 43
      p←(1+(n≤×⍀p)⍳1)↑p
      b← 0@1 ⊃ {(m⍴⍵)>m⍴⍺↑1 ⊣ m←n⌊⍺×≢⍵}⌿ ⊖1,p
      {r<q←b⍳1:b⊣b[⍵]←1 ⋄ b[q,q×⍸b↑⍨⌈n÷q]←0 ⋄ ∇ ⍵,q}p
    }

          10 10 ⍴ sieve 100
    0 0 1 1 0 1 0 1 0 0
    0 1 0 1 0 0 0 1 0 1
    0 0 0 1 0 0 0 0 0 1
    0 1 0 0 0 0 0 1 0 0
    0 1 0 1 0 0 0 1 0 0
    0 0 0 1 0 0 0 0 0 1
    0 1 0 0 0 0 0 1 0 0
    0 1 0 1 0 0 0 0 0 1
    0 0 0 1 0 0 0 0 0 1
    0 0 0 0 0 0 0 1 0 0

          b←sieve 1e9
          ≢b
    1000000000
          (10*⍳10) (+⌿↑)⍤0 1 ⊢b
    0 4 25 168 1229 9592 78498 664579 5761455 50847534

The last sequence, the number of primes less than powers of 10, is an initial segment of OEIS: A006880. The last number, 50847534, is the number of primes less than $10^9$. It is called Bertelsen's number, memorably described by MathWorld as "an erroneous name erroneously given the erroneous value of $\pi(10^9) = 50847478$". [12]

sieve uses two different methods to mark composites with 0s, both effected using local anonymous dfns: The first uses the sieve of Eratosthenes on an initial mask of 1 and a prefix of the primes 2 3 … 43, using the insert operator ⌿ (right fold). (The length of the prefix is obtained by comparison with the primorial function ×⍀p.) The second finds the smallest new prime q remaining in b (q←b⍳1), and sets to 0 bit q itself and bits at q times the numbers at remaining 1 bits in an initial segment of b (⍸b↑⍨⌈n÷q). This second dfn uses tail recursion.
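For comparison, the contract of sieve (a bit vector whose bit i is 1 exactly when i is prime) can be sketched in Python with a plain sieve of Eratosthenes. This is deliberately not the two-phase method described above, only a simple stand-in with the same result; the name `sieve` is reused for convenience and the bound of 10**6 is chosen only to keep the run short.

```python
def sieve(n):
    """Return a list b of n bits where b[i] == 1 exactly when i is prime."""
    b = [1] * n
    for i in range(min(n, 2)):
        b[i] = 0                      # 0 and 1 are not prime
    q = 2
    while q * q < n:
        if b[q]:
            for m in range(q * q, n, q):
                b[m] = 0              # strike out multiples of the prime q
        q += 1
    return b

b = sieve(10**6)
# Number of primes below each power of 10 from 10**0 to 10**6.
print([sum(b[:10**k]) for k in range(7)])
```

The printed counts can be checked against the first seven entries of the sequence shown in the APL session.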

Tail recursion

Typically, the factorial function is defined recursively (as above), but it can be coded to exploit tail recursion by using an accumulator left argument: [13]

    fac←{⍺←1 ⋄ ⍵=0:⍺ ⋄ (⍺×⍵) ∇ ⍵-1}

Similarly, the determinant of a square complex matrix using Gaussian elimination can be computed with tail recursion: [14]

    det←{                  ⍝ determinant of a square complex matrix
      ⍺←1                  ⍝ product of co-factor coefficients so far
      0=≢⍵:⍺               ⍝ result for 0-by-0
      (i j)←(⍴⍵)⊤⊃⍒|,⍵     ⍝ row and column index of the maximal element
      k←⍳≢⍵
      (⍺×⍵[i;j]ׯ1*i+j) ∇ ⍵[k~i;k~j] - ⍵[k~i;j] ∘.× ⍵[i;k~j]÷⍵[i;j]
    }

Multiple recursion

A partition of a non-negative integer $n$ is a vector $v$ of positive integers such that n = +⌿v, where the order in $v$ is not significant. For example, 2 2 and 2 1 1 are partitions of 4, and 2 1 1 and 1 2 1 and 1 1 2 are considered to be the same partition.

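The partition function $P(n)$ counts the number of partitions of $n$. The function is of interest in number theory, studied by Euler, Hardy, Ramanujan, Erdős, and others. The recurrence relation

$$P(n) = \sum_{k=1}^{n} (-1)^{k+1} \left[ P\left(n - \tfrac{1}{2}k(3k-1)\right) + P\left(n - \tfrac{1}{2}k(3k+1)\right) \right]$$

is derived from Euler's pentagonal number theorem. [15] Written as a dfn: [10] : §16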

          pn ← {1≥⍵:0≤⍵ ⋄ -⌿+⌿∇¨rec ⍵}
          rec ← {⍵ - (÷∘2 (×⍤1) ¯1 1 ∘.+ 3∘×) 1+⍳⌈0.5*⍨⍵×2÷3}

          pn 10
    42
          pn¨ ⍳13    ⍝ OEIS A000041
    1 1 2 3 5 7 11 15 22 30 42 56 77

The basis step 1≥⍵:0≤⍵ states that for 1≥⍵, the result of the function is 0≤⍵, that is, 1 if ⍵ is 0 or 1 and 0 otherwise. The recursive step is highly multiply recursive. For example, pn 200 would result in the function being applied to each element of rec 200, which are:

          rec 200
    199 195 188 178 165 149 130 108 83 55 24 ¯10
    198 193 185 174 160 143 123 100 74 45 13 ¯22

and pn 200 requires longer than the age of the universe to compute, owing to the enormous number of function calls to itself. [10] : §16 The compute time can be reduced by memoization, here implemented as the direct operator (higher-order function) M:

    M←{
      f←⍺⍺
      i←2+'⋄'⍳⍨t←2↓,⎕cr 'f'
      ⍎'{T←(1+⍵)⍴¯1 ⋄ ',(i↑t),'¯1≢T[⍵]:⊃T[⍵] ⋄ ⊃T[⍵]←⊂',(i↓t),'⍵}⍵'
    }

          pn M 200
    3.973E12

          0 ⍕ pn M 200    ⍝ format to 0 decimal places
    3972999029388

This value of pn M 200 agrees with that computed by Hardy and Ramanujan in 1918. [16]
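The effect of memoization on this recurrence can also be sketched outside APL. The following Python version is an illustration only and is not the operator M: it assumes the pentagonal-number recurrence on which pn is based, and it swaps M's generated cache T for the standard-library decorator `functools.lru_cache`. The name `pn` is reused purely for comparison.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def pn(n):
    """Number of partitions of n via the pentagonal-number recurrence."""
    if n <= 1:
        return 1 if n >= 0 else 0     # basis step: 1 for n in {0, 1}, else 0
    total = 0
    k = 1
    while k * (3 * k - 1) // 2 <= n:
        sign = 1 if k % 2 else -1     # terms alternate +, -, +, ...
        total += sign * (pn(n - k * (3 * k - 1) // 2)
                         + pn(n - k * (3 * k + 1) // 2))
        k += 1
    return total

print([pn(i) for i in range(13)])
print(pn(200))
```

Because each value is computed once and then served from the cache, the number of distinct calls grows with n rather than exponentially.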

The memo operator M defines a variant of its operand function ⍺⍺ to use a cache T and then evaluates it. With the operand pn the variant is:

    {T←(1+⍵)⍴¯1 ⋄ {1≥⍵:0≤⍵ ⋄ ¯1≢T[⍵]:⊃T[⍵] ⋄ ⊃T[⍵]←⊂-⌿+⌿∇¨rec ⍵}⍵}

Direct operator (dop)

Quicksort on an array ⍵ works by choosing a "pivot" at random among its major cells, then catenating the sorted major cells which strictly precede the pivot, the major cells equal to the pivot, and the sorted major cells which strictly follow the pivot, as determined by a comparison function ⍺⍺. Defined as a direct operator (dop) Q:

          Q←{1≥≢⍵:⍵ ⋄ (∇ ⍵⌿⍨0>s)⍪(⍵⌿⍨0=s)⍪∇ ⍵⌿⍨0<s←⍵ ⍺⍺ ⍵⌷⍨?≢⍵}

          ⍝ precedes            ⍝ follows            ⍝ equals
          2 (×-) 8              8 (×-) 2              8 (×-) 8
    ¯1                    1                     0

          x← 2 19 3 8 3 6 9 4 19 7 0 10 15 14

          (×-) Q x
    0 2 3 3 4 6 7 8 9 10 14 15 19 19

Q3 is a variant that catenates the three parts enclosed by the function ⊂ instead of the parts per se. The three parts generated at each recursive step are apparent in the structure of the final result. Applying the function derived from Q3 to the same argument multiple times gives different results because the pivots are chosen at random. In-order traversal of the results does yield the same sorted array.

          Q3←{1≥≢⍵:⍵ ⋄ (⊂∇ ⍵⌿⍨0>s)⍪(⊂⍵⌿⍨0=s)⍪⊂∇ ⍵⌿⍨0<s←⍵ ⍺⍺ ⍵⌷⍨?≢⍵}

          (×-) Q3 x
    ┌────────────────────────────────────────────┬─────┬┐
    │┌──────────────┬─┬─────────────────────────┐│19 19││
    ││┌──────┬───┬─┐│6│┌──────┬─┬──────────────┐││     ││
    │││┌┬─┬─┐│3 3│4││ ││┌┬─┬─┐│9│┌┬──┬────────┐│││     ││
    │││││0│2││   │ ││ ││││7│8││ │││10│┌──┬──┬┐││││     ││
    │││└┴─┴─┘│   │ ││ ││└┴─┴─┘│ │││  ││14│15││││││     ││
    ││└──────┴───┴─┘│ ││      │ │││  │└──┴──┴┘││││     ││
    ││              │ ││      │ │└┴──┴────────┘│││     ││
    ││              │ │└──────┴─┴──────────────┘││     ││
    │└──────────────┴─┴─────────────────────────┘│     ││
    └────────────────────────────────────────────┴─────┴┘

          (×-) Q3 x
    ┌───────────────────────────┬─┬─────────────────────────────┐
    │┌┬─┬──────────────────────┐│7│┌────────────────────┬─────┬┐│
    │││0│┌┬─┬─────────────────┐││ ││┌──────┬──┬────────┐│19 19│││
    │││ │││2│┌────────────┬─┬┐│││ │││┌┬─┬─┐│10│┌──┬──┬┐││     │││
    │││ │││ ││┌───────┬─┬┐│6│││││ │││││8│9││  ││14│15││││     │││
    │││ │││ │││┌┬───┬┐│4│││ │││││ │││└┴─┴─┘│  │└──┴──┴┘││     │││
    │││ │││ │││││3 3│││ │││ │││││ ││└──────┴──┴────────┘│     │││
    │││ │││ │││└┴───┴┘│ │││ │││││ │└────────────────────┴─────┴┘│
    │││ │││ ││└───────┴─┴┘│ │││││ │                             │
    │││ │││ │└────────────┴─┴┘│││ │                             │
    │││ │└┴─┴─────────────────┘││ │                             │
    │└┴─┴──────────────────────┘│ │                             │
    └───────────────────────────┴─┴─────────────────────────────┘

The above formulation is not new; see for example Figure 3.7 of the classic The Design and Analysis of Computer Algorithms. [17] However, unlike the pidgin ALGOL program in Figure 3.7, Q is executable, and the partial order used in the sorting is an operand, the (×-) in the examples above. [9]
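The shape of Q, a sort parameterized by a comparison function, can be sketched in Python for readers who do not read APL. This is a hypothetical counterpart, not a translation endorsed by the sources: `quicksort` takes the comparison as an ordinary argument where Q takes it as the operand ⍺⍺, and `sign_of_difference` is an assumed stand-in for the train (×-).

```python
import random

def quicksort(xs, cmp):
    """Sort the list xs; cmp(a, b) is negative, zero or positive."""
    if len(xs) <= 1:
        return xs
    pivot = random.choice(xs)                 # pivot chosen at random
    s = [cmp(x, pivot) for x in xs]           # compare every item with the pivot
    before = [x for x, c in zip(xs, s) if c < 0]
    equal = [x for x, c in zip(xs, s) if c == 0]
    after = [x for x, c in zip(xs, s) if c > 0]
    return quicksort(before, cmp) + equal + quicksort(after, cmp)

def sign_of_difference(a, b):
    """Counterpart of the train (×-): the sign of a - b."""
    return (a > b) - (a < b)

x = [2, 19, 3, 8, 3, 6, 9, 4, 19, 7, 0, 10, 15, 14]
print(quicksort(x, sign_of_difference))
```

Passing a different comparison, for example one with its arguments swapped, sorts in the opposite order without changing `quicksort` itself.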

Dfns with operators and trains

Dfns, especially anonymous dfns, work well with operators and trains. The following snippet solves a "Programming Pearls" puzzle: [18] given a dictionary of English words, here represented as the character matrix a, find all sets of anagrams.

          a            {⍵[⍋⍵]}⍤1 ⊢a      ({⍵[⍋⍵]}⍤1 {⊂⍵}⌸ ⊢) a
    pats         apst              ┌────┬────┬────┐
    spat         apst              │pats│teas│star│
    teas         aest              │spat│sate│    │
    sate         aest              │taps│etas│    │
    taps         apst              │past│seat│    │
    etas         aest              │    │eats│    │
    past         apst              │    │tase│    │
    seat         aest              │    │east│    │
    eats         aest              │    │seta│    │
    tase         aest              └────┴────┴────┘
    star         arst
    east         aest
    seta         aest

The algorithm works by sorting the rows individually ({⍵[⍋⍵]}⍤1 ⊢a), and these sorted rows are used as keys ("signature" in the Programming Pearls description) to the key operator ⌸ to group the rows of the matrix. [9] : §3.3 The expression on the right is a train, a syntactic form employed by APL to achieve tacit programming. Here, it is an isolated sequence of three functions such that (f g h)⍵ ⇔ (f ⍵) g (h ⍵), whence the expression on the right is equivalent to ({⍵[⍋⍵]}⍤1 ⊢a) {⊂⍵}⌸ a.
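The signature-and-group idea can be sketched in Python as follows. This is an illustrative counterpart only: a dictionary keyed by the sorted letters plays the part of the key operator ⌸, and the function name `anagram_sets` is an assumption.

```python
from collections import defaultdict

def anagram_sets(words):
    """Group words by signature (their letters in sorted order)."""
    groups = defaultdict(list)
    for w in words:
        groups["".join(sorted(w))].append(w)
    return list(groups.values())

words = ["pats", "spat", "teas", "sate", "taps", "etas", "past",
         "seat", "eats", "tase", "star", "east", "seta"]
for group in anagram_sets(words):
    print(group)
```

Groups appear in order of the first occurrence of each signature, which matches the left-to-right order of the boxes in the APL result.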

Lexical scope

When an inner (nested) dfn refers to a name, it is sought by looking outward through enclosing dfns rather than down the call stack. This regime is said to employ lexical scope instead of APL's usual dynamic scope. The distinction becomes apparent only if a call is made to a function defined at an outer level. For the more usual inward calls, the two regimes are indistinguishable. [19] : p.137

For example, in the following function which, the variable ty is defined both in which itself and in the inner function f1. When f1 calls outward to f2 and f2 refers to ty, it finds the outer one (with value 'lexical') rather than the one defined in f1 (with value 'dynamic'):

    which←{
        ty←'lexical'
        f1←{ty←'dynamic' ⋄ f2 ⍵}
        f2←{ty,⍵}
        f1 ⍵
    }

          which ' scope'
    lexical scope

Error-guard

The following function illustrates use of error guards: [19] : p.139

    plus←{
        tx←'catch all' ⋄ 0::tx
        tx←'domain'    ⋄ 11::tx
        tx←'length'    ⋄ 5::tx
        ⍺+⍵
    }

          2 plus 3              ⍝ no errors
    5
          2 3 4 5 plus 'three'  ⍝ argument lengths don't match
    length
          2 3 4 5 plus 'four'   ⍝ can't add characters
    domain
          2 3 plus 3 4⍴5        ⍝ can't add vector to matrix
    catch all

In APL, error number 5 is "length error"; error number 11 is "domain error"; and error number 0 is a "catch all" for error numbers 1 to 999.

The example shows the unwinding of the local environment before an error-guard's expression is evaluated. The local name tx is set to describe the purview of its following error-guard. When an error occurs, the environment is unwound to expose tx's statically correct value.