In mathematics and computer programming, exponentiating by squaring is a general method for fast computation of large positive integer powers of a number, or more generally of an element of a semigroup, like a polynomial or a square matrix. Some variants are commonly referred to as square-and-multiply algorithms or binary exponentiation. These can be of quite general use, for example in modular arithmetic or powering of matrices. For semigroups for which additive notation is commonly used, like elliptic curves used in cryptography, this method is also referred to as double-and-add.

First-order logic—also called predicate logic, predicate calculus, quantificational logic—is a collection of formal systems used in mathematics, philosophy, linguistics, and computer science. First-order logic uses quantified variables over non-logical objects, and allows the use of sentences that contain variables. Rather than propositions such as "all men are mortal", in first-order logic one can have expressions in the form "for all x, if x is a man, then x is mortal"; where "for all x" is a quantifier, x is a variable, and "... is a man" and "... is mortal" are predicates. This distinguishes it from propositional logic, which does not use quantifiers or relations; in this sense, propositional logic is the foundation of first-order logic.

In computability theory, a primitive recursive function is, roughly speaking, a function that can be computed by a computer program whose loops are all "for" loops. Primitive recursive functions form a strict subset of those general recursive functions that are also total functions.

In mathematical logic and computer science, a general recursive function, partial recursive function, or μ-recursive function is a partial function from natural numbers to natural numbers that is "computable" in an intuitive sense – as well as in a formal one. If the function is total, it is also called a total recursive function. In computability theory, it is shown that the μ-recursive functions are precisely the functions that can be computed by Turing machines. The μ-recursive functions are closely related to primitive recursive functions, and their inductive definition (below) builds upon that of the primitive recursive functions. However, not every total recursive function is a primitive recursive function—the most famous example is the Ackermann function.

In mathematics, the floor function is the function that takes as input a real number x, and gives as output the greatest integer less than or equal to x, denoted ⌊x⌋ or floor(x). Similarly, the ceiling function maps x to the least integer greater than or equal to x, denoted ⌈x⌉ or ceil(x).

In computational complexity theory, the time hierarchy theorems are important statements about time-bounded computation on Turing machines. Informally, these theorems say that given more time, a Turing machine can solve more problems. For example, there are problems that can be solved with n2 time but not n time, where n is the input length.

In mathematical logic, the arithmetical hierarchy, arithmetic hierarchy or Kleene–Mostowski hierarchy classifies certain sets based on the complexity of formulas that define them. Any set that receives a classification is called arithmetical. The arithmetical hierarchy was invented independently by Kleene (1943) and Mostowski (1946).

In mathematical logic, a formula of first-order logic is in Skolem normal form if it is in prenex normal form with only universal first-order quantifiers.

In number theory, a formula for primes is a formula generating the prime numbers, exactly and without exception. Formulas for calculating primes do exist; however, they are computationally very slow. A number of constraints are known, showing what such a "formula" can and cannot be.

In linear algebra and functional analysis, a projection is a linear transformation from a vector space to itself such that . That is, whenever is applied twice to any vector, it gives the same result as if it were applied once. It leaves its image unchanged. This definition of "projection" formalizes and generalizes the idea of graphical projection. One can also consider the effect of a projection on a geometrical object by examining the effect of the projection on points in the object.

In number theory, the integer square root (isqrt) of a non-negative integer n is the non-negative integer m which is the greatest integer less than or equal to the square root of n,

In mathematics, a pairing function is a process to uniquely encode two natural numbers into a single natural number.

Primitive recursive arithmetic (PRA) is a quantifier-free formalization of the natural numbers. It was first proposed by Norwegian mathematician Skolem (1923), as a formalization of his finitistic conception of the foundations of arithmetic, and it is widely agreed that all reasoning of PRA is finitistic. Many also believe that all of finitism is captured by PRA, but others believe finitism can be extended to forms of recursion beyond primitive recursion, up to ε0, which is the proof-theoretic ordinal of Peano arithmetic. PRA's proof theoretic ordinal is ωω, where ω is the smallest transfinite ordinal. PRA is sometimes called Skolem arithmetic, although that has another meaning, see Skolem arithmetic.

TC0 is a complexity class used in circuit complexity. It is the first class in the hierarchy of TC classes.

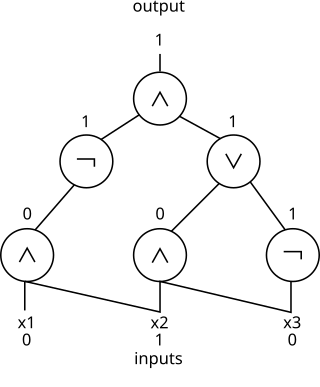

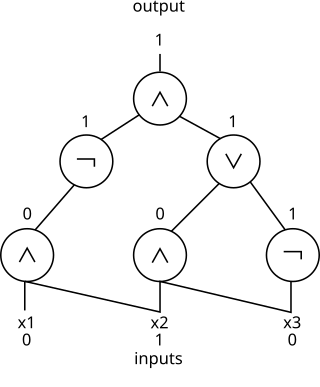

In theoretical computer science, circuit complexity is a branch of computational complexity theory in which Boolean functions are classified according to the size or depth of the Boolean circuits that compute them. A related notion is the circuit complexity of a recursive language that is decided by a uniform family of circuits .

The Grzegorczyk hierarchy, named after the Polish logician Andrzej Grzegorczyk, is a hierarchy of functions used in computability theory. Every function in the Grzegorczyk hierarchy is a primitive recursive function, and every primitive recursive function appears in the hierarchy at some level. The hierarchy deals with the rate at which the values of the functions grow; intuitively, functions in lower levels of the hierarchy grow slower than functions in the higher levels.

In proof theory, a branch of mathematical logic, elementary function arithmetic (EFA), also called elementary arithmetic and exponential function arithmetic, is the system of arithmetic with the usual elementary properties of 0, 1, +, ×, , together with induction for formulas with bounded quantifiers.

In stochastic analysis, a rough path is a generalization of the notion of smooth path allowing to construct a robust solution theory for controlled differential equations driven by classically irregular signals, for example a Wiener process. The theory was developed in the 1990s by Terry Lyons. Several accounts of the theory are available.

The purpose of this page is to catalog new, interesting, and useful identities related to number-theoretic divisor sums, i.e., sums of an arithmetic function over the divisors of a natural number , or equivalently the Dirichlet convolution of an arithmetic function with one:

In computer science, merge-insertion sort or the Ford–Johnson algorithm is a comparison sorting algorithm published in 1959 by L. R. Ford Jr. and Selmer M. Johnson. It uses fewer comparisons in the worst case than the best previously known algorithms, binary insertion sort and merge sort, and for 20 years it was the sorting algorithm with the fewest known comparisons. Although not of practical significance, it remains of theoretical interest in connection with the problem of sorting with a minimum number of comparisons. The same algorithm may have also been independently discovered by Stanisław Trybuła and Czen Ping.