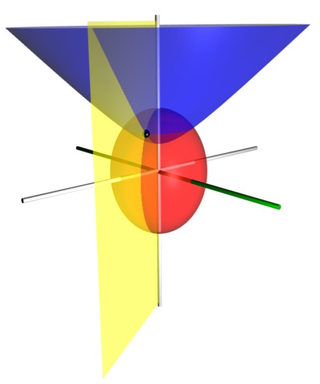

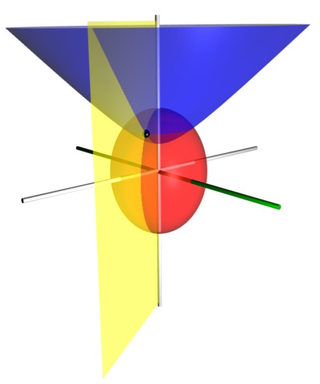

In vector calculus, the gradient of a scalar-valued differentiable function f of several variables is the vector field ∇f whose value at a point p gives the direction and the rate of fastest increase. The gradient transforms like a vector under change of basis of the space of variables of f. If the gradient of a function is non-zero at a point p, the direction of the gradient is the direction in which the function increases most quickly from p, and the magnitude of the gradient is the rate of increase in that direction, the greatest absolute directional derivative. Further, a point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to minimize a function by gradient descent. In coordinate-free terms, the gradient of a function f(r) may be defined by df = ∇f · dr, where df is the total infinitesimal change in f for an infinitesimal displacement dr.
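As a rough numerical illustration of the gradient and of gradient descent, the sketch below approximates the gradient by central differences and follows its negative toward a stationary point. The function names (`numerical_gradient`, `gradient_descent`, `bowl`), the step size, and the iteration count are all assumptions chosen for this example and do not come from the text.

```python
def numerical_gradient(f, p, h=1e-6):
    """Central-difference approximation of the gradient of f at the point p."""
    grad = []
    for i in range(len(p)):
        forward = list(p)
        backward = list(p)
        forward[i] += h
        backward[i] -= h
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad


def gradient_descent(f, start, step=0.1, iterations=200):
    """Repeatedly step against the gradient (illustrative fixed step size)."""
    p = list(start)
    for _ in range(iterations):
        g = numerical_gradient(f, p)
        p = [x - step * gi for x, gi in zip(p, g)]
    return p


def bowl(p):
    """Hypothetical test function; its only stationary point is (1, -3)."""
    x, y = p
    return (x - 1) ** 2 + 2 * (y + 3) ** 2


# The exact gradient of bowl is (2(x - 1), 4(y + 3)), which is (-2, 12) at the origin.
grad_at_origin = numerical_gradient(bowl, [0.0, 0.0])

# Starting from the origin, the iterates approach the stationary point (1, -3).
minimum = gradient_descent(bowl, [0.0, 0.0])
```

A fixed step size is used only to keep the sketch short; practical optimizers usually adapt the step or use a line search.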

The Navier–Stokes equations are partial differential equations which describe the motion of viscous fluid substances. They were named after French engineer and physicist Claude-Louis Navier and the Irish physicist and mathematician George Gabriel Stokes. They were developed over several decades of progressively building the theories, from 1822 (Navier) to 1842–1850 (Stokes).

In mathematics, the Laplace operator or Laplacian is a differential operator given by the divergence of the gradient of a scalar function on Euclidean space. It is usually denoted by the symbols ∇·∇, ∇² (where ∇ is the nabla operator), or Δ. In a Cartesian coordinate system, the Laplacian is given by the sum of second partial derivatives of the function with respect to each independent variable. In other coordinate systems, such as cylindrical and spherical coordinates, the Laplacian also has a useful form. Informally, the Laplacian Δf(p) of a function f at a point p measures by how much the average value of f over small spheres or balls centered at p deviates from f(p).
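The "average over small spheres" reading of the Laplacian can be checked numerically. The sketch below, restricted to two dimensions, compares a five-point finite-difference Laplacian with an estimate from the average of f over a small circle. It assumes the standard small-radius relation that, in the plane, the circle average exceeds f(p) by approximately (r²/4)·Δf(p). All function names and the test function are hypothetical.

```python
import math


def laplacian_2d(f, x, y, h=1e-3):
    """Five-point finite-difference approximation of the 2D Laplacian."""
    return (f(x + h, y) + f(x - h, y) + f(x, y + h) + f(x, y - h)
            - 4 * f(x, y)) / h ** 2


def circle_average(f, x, y, r, samples=360):
    """Average of f over equally spaced points on the circle of radius r."""
    total = 0.0
    for k in range(samples):
        theta = 2 * math.pi * k / samples
        total += f(x + r * math.cos(theta), y + r * math.sin(theta))
    return total / samples


def f(x, y):
    """Hypothetical test function with exact Laplacian 2 + 6 = 8 everywhere."""
    return x ** 2 + 3 * y ** 2


lap = laplacian_2d(f, 1.0, 2.0)

# Deviation of the circle average from the centre value, rescaled by 4 / r^2
# (the two-dimensional factor), gives a second estimate of the Laplacian.
r = 0.01
deviation = circle_average(f, 1.0, 2.0, r) - f(1.0, 2.0)
lap_from_average = 4 * deviation / r ** 2
```

Both estimates should be close to 8 for this quadratic; for general functions the agreement holds only as the step h and the radius r become small.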

In mathematics, Jensen's inequality, named after the Danish mathematician Johan Jensen, relates the value of a convex function of an integral to the integral of the convex function. It was proved by Jensen in 1906, building on an earlier proof of the same inequality for doubly-differentiable functions by Otto Hölder in 1889. Given its generality, the inequality appears in many forms depending on the context, some of which are presented below. In its simplest form the inequality states that the convex transformation of a mean is less than or equal to the mean applied after convex transformation; it is a simple corollary that the opposite is true of concave transformations.
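The simplest form of the inequality can be checked on a small finite sample. The sketch below uses the exponential as a convex function and the logarithm as a concave one; the sample values and variable names are arbitrary choices made for illustration.

```python
import math

# Arbitrary positive sample; the mean is taken with equal weights.
samples = [0.5, 1.0, 2.0, 4.0]
mean = sum(samples) / len(samples)

# Convex case: exp(mean) should not exceed the mean of exp.
convex_of_mean = math.exp(mean)
mean_of_convex = sum(math.exp(x) for x in samples) / len(samples)

# Concave case: the inequality reverses, so log(mean) is at least the mean of log.
concave_of_mean = math.log(mean)
mean_of_concave = sum(math.log(x) for x in samples) / len(samples)
```

Equality would hold only if all sample values coincided, since both functions are strictly convex or strictly concave.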

In mathematics, the covariant derivative is a way of specifying a derivative along tangent vectors of a manifold. Alternatively, the covariant derivative is a way of introducing and working with a connection on a manifold by means of a differential operator, to be contrasted with the approach given by a principal connection on the frame bundle – see affine connection. In the special case of a manifold isometrically embedded into a higher-dimensional Euclidean space, the covariant derivative can be viewed as the orthogonal projection of the Euclidean directional derivative onto the manifold's tangent space. In this case the Euclidean derivative is broken into two parts, the extrinsic normal component and the intrinsic covariant derivative component.

In probability theory, the Borel–Kolmogorov paradox is a paradox relating to conditional probability with respect to an event of probability zero. It is named after Émile Borel and Andrey Kolmogorov.

In mathematics, theta functions are special functions of several complex variables. They show up in many topics, including Abelian varieties, moduli spaces, quadratic forms, and solitons. As Grassmann algebras, they appear in quantum field theory.

A directional derivative is a concept in multivariable calculus that measures the rate at which a function changes in a particular direction at a given point.
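As a minimal sketch of this idea, the code below estimates a directional derivative by a central difference along a unit vector and compares it with the dot product of the gradient and that vector, which is the standard formula for differentiable functions. The function `g`, the point, and the direction are assumptions chosen for the example.

```python
import math


def directional_derivative(f, p, v, h=1e-5):
    """Central-difference rate of change of f at p along the unit vector of v."""
    norm = math.sqrt(sum(c * c for c in v))
    u = [c / norm for c in v]
    forward = [x + h * c for x, c in zip(p, u)]
    backward = [x - h * c for x, c in zip(p, u)]
    return (f(forward) - f(backward)) / (2 * h)


def g(p):
    """Hypothetical test function with gradient (2xy, x^2)."""
    x, y = p
    return x ** 2 * y


point = [1.0, 2.0]

# At (1, 2) the gradient is (4, 1); along (3, 4) / 5 the dot product is 3.2.
along_v = directional_derivative(g, point, [3.0, 4.0])

# Along the gradient itself the rate equals the gradient's magnitude, sqrt(17),
# which is the largest directional derivative at this point.
along_gradient = directional_derivative(g, point, [4.0, 1.0])
```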

The representation theory of groups is a part of mathematics which examines how groups act on given structures.

In physics, the Hamilton–Jacobi equation, named after William Rowan Hamilton and Carl Gustav Jacob Jacobi, is an alternative formulation of classical mechanics, equivalent to other formulations such as Newton's laws of motion, Lagrangian mechanics and Hamiltonian mechanics.

In general relativity, Schwarzschild geodesics describe the motion of test particles in the gravitational field of a central fixed mass, that is, motion in the Schwarzschild metric. Schwarzschild geodesics have been pivotal in the validation of Einstein's theory of general relativity. For example, they provide accurate predictions of the anomalous precession of the planets in the Solar System and of the deflection of light by gravity.

In quantum field theory, the Wightman distributions can be analytically continued to analytic functions in Euclidean space with the domain restricted to the ordered set of points in Euclidean space with no coinciding points. These functions are called the Schwinger functions and they are real-analytic, symmetric under the permutation of arguments, Euclidean covariant and satisfy a property known as reflection positivity. Properties of Schwinger functions are known as Osterwalder–Schrader axioms. Schwinger functions are also referred to as Euclidean correlation functions.

In mathematics, the derivative is a fundamental construction of differential calculus and admits many possible generalizations within the fields of mathematical analysis, combinatorics, algebra, geometry, etc.

In mathematics, the Gateaux differential or Gateaux derivative is a generalization of the concept of directional derivative in differential calculus. Named after René Gateaux, it is defined for functions between locally convex topological vector spaces such as Banach spaces. Like the Fréchet derivative on a Banach space, the Gateaux differential is often used to formalize the functional derivative commonly used in the calculus of variations and physics.

In mathematics, the Fréchet derivative is a derivative defined on normed spaces. Named after Maurice Fréchet, it is commonly used to generalize the derivative of a real-valued function of a single real variable to the case of a vector-valued function of multiple real variables, and to define the functional derivative used widely in the calculus of variations.

In mathematics, subharmonic and superharmonic functions are important classes of functions used extensively in partial differential equations, complex analysis and potential theory.

Prolate spheroidal coordinates are a three-dimensional orthogonal coordinate system that results from rotating the two-dimensional elliptic coordinate system about the focal axis of the ellipse, i.e., the symmetry axis on which the foci are located. Rotation about the other axis produces oblate spheroidal coordinates. Prolate spheroidal coordinates can also be considered as a limiting case of ellipsoidal coordinates in which the two smallest principal axes are equal in length.

The concept of an abstract Wiener space is a mathematical construction developed by Leonard Gross to understand the structure of Gaussian measures on infinite-dimensional spaces. The construction emphasizes the fundamental role played by the Cameron–Martin space. The classical Wiener space is the prototypical example.

In mathematics, the spectral theory of ordinary differential equations is the part of spectral theory concerned with the determination of the spectrum and eigenfunction expansion associated with a linear ordinary differential equation. In his dissertation, Hermann Weyl generalized the classical Sturm–Liouville theory on a finite closed interval to second order differential operators with singularities at the endpoints of the interval, possibly semi-infinite or infinite. Unlike the classical case, the spectrum may no longer consist of just a countable set of eigenvalues, but may also contain a continuous part. In this case the eigenfunction expansion involves an integral over the continuous part with respect to a spectral measure, given by the Titchmarsh–Kodaira formula. The theory was put in its final simplified form for singular differential equations of even degree by Kodaira and others, using von Neumann's spectral theorem. It has had important applications in quantum mechanics, operator theory and harmonic analysis on semisimple Lie groups.

In mathematics, the reduced derivative is a generalization of the notion of derivative that is well-suited to the study of functions of bounded variation. Although functions of bounded variation have derivatives in the sense of Radon measures, it is desirable to have a derivative that takes values in the same space as the functions themselves. Although the precise definition of the reduced derivative is quite involved, its key properties are quite easy to remember: