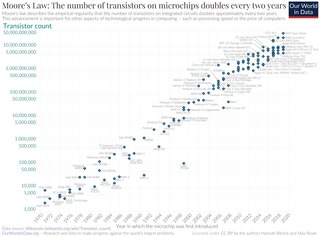

Moore's law is the observation that the number of transistors in an integrated circuit (IC) doubles about every two years. It is an observation and projection of a historical trend: an empirical relationship rather than a law of physics. Specifically, it is an experience-curve law, a type of law that quantifies efficiency gains from experience in production.
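To illustrate the doubling arithmetic, the sketch below projects a transistor count forward under a fixed doubling period, `N(t) = N0 * 2^(t / T)`. The function name and the starting count are hypothetical. Real transistor counts follow this curve only approximately.

```python
def projected_transistors(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Project a transistor count forward assuming a constant doubling period.

    n0: starting transistor count (hypothetical input)
    years: elapsed time in years
    doubling_period: years per doubling; 2.0 matches the rule of thumb above
    """
    return n0 * 2 ** (years / doubling_period)


# A hypothetical chip with one million transistors, projected ten years ahead:
print(projected_transistors(1_000_000, 10))  # 32000000.0 (five doublings)
```

Ten years at one doubling per two years is five doublings, a 32-fold increase.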

Processor power dissipation, or processing unit power dissipation, is the process by which computer processors consume electrical energy and dissipate it as heat because of the resistance in their electronic circuits.
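A common first-order model for the switching (dynamic) part of CMOS processor power is `P = a * C * V^2 * f`. In this model, `a` is the activity factor, `C` the switched capacitance, `V` the supply voltage, and `f` the clock frequency. The sketch below assumes that model and ignores leakage. All input values are hypothetical. It shows why lowering the supply voltage is so effective: power falls with the square of `V`.

```python
def dynamic_power(activity: float, capacitance_f: float, voltage_v: float, freq_hz: float) -> float:
    """First-order CMOS switching power in watts: P = a * C * V^2 * f (leakage ignored)."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


# Hypothetical operating point: 10% activity, 1 nF switched capacitance, 1.0 V, 3 GHz
p_full = dynamic_power(0.1, 1e-9, 1.0, 3e9)  # roughly 0.3 W
# Halving the supply voltage at the same frequency cuts switching power to a quarter
p_half_v = dynamic_power(0.1, 1e-9, 0.5, 3e9)
print(p_full, p_half_v / p_full)
```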

Power management is a feature of some electrical appliances, especially copiers, computers, CPUs, GPUs, and computer peripherals such as monitors and printers, that turns off the power or switches the system to a low-power state when it is inactive. In computing, this is known as PC power management and is built around a standard called ACPI, which superseded APM. All recent computers support ACPI.
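The basic "switch to a low-power state when inactive" behaviour can be sketched as an inactivity-timer policy. The sketch below is a generic illustration, not ACPI's interface. The state names and the timeout thresholds are hypothetical, and real systems typically let the user configure them.

```python
from enum import Enum


class PowerState(Enum):
    ACTIVE = "active"
    LOW_POWER = "low_power"  # e.g. display dimmed or device in standby
    OFF = "off"


# Hypothetical thresholds in seconds of inactivity
LOW_POWER_AFTER_S = 300
OFF_AFTER_S = 1800


def state_for_idle_time(idle_s: float) -> PowerState:
    """Choose a power state from how long the device has been inactive."""
    if idle_s >= OFF_AFTER_S:
        return PowerState.OFF
    if idle_s >= LOW_POWER_AFTER_S:
        return PowerState.LOW_POWER
    return PowerState.ACTIVE


print(state_for_idle_time(10), state_for_idle_time(600), state_for_idle_time(3600))
```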

Robert Heath Dennard was an American electrical engineer and inventor.

Miniaturization is the trend to manufacture ever-smaller mechanical, optical, and electronic products and devices. Examples include the miniaturization of mobile phones and computers, and the downsizing of vehicle engines. In electronics, the exponential scaling and miniaturization of silicon MOSFETs has led to the number of transistors on an integrated circuit chip doubling about every two years, an observation known as Moore's law. As a result, MOS integrated circuits such as microprocessors and memory chips are built with increasing transistor density, faster performance, and lower power consumption, enabling the miniaturization of electronic devices.

Reversible computing is any model of computation in which the computational process is, to some extent, time-reversible. In a model of computation that uses deterministic transitions from one state of the abstract machine to another, a necessary condition for reversibility is that the mapping from states to their successors be one-to-one. Reversible computing is a form of unconventional computing.
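The one-to-one condition can be checked directly on a finite state space. The sketch below tests whether a deterministic transition function maps distinct states to distinct successors. It uses the Toffoli gate, a standard reversible gate, and a hypothetical AND step that overwrites its inputs and so discards information. The helper names are illustrative.

```python
from itertools import product
from typing import Callable, Iterable, Tuple

State = Tuple[int, ...]


def is_reversible(step: Callable[[State], State], states: Iterable[State]) -> bool:
    """True if `step` is one-to-one on the given (duplicate-free) finite set of states."""
    seen = set()
    for s in states:
        t = step(s)
        if t in seen:
            return False  # two states share a successor, so the predecessor cannot be recovered
        seen.add(t)
    return True


def toffoli(s: State) -> State:
    """Flip the target bit c only when both control bits a and b are 1."""
    a, b, c = s
    return (a, b, c ^ (a & b))


def and_step(s: State) -> State:
    """Hypothetical irreversible step: keep a AND b, overwrite everything else with 0."""
    a, b, _ = s
    return (a & b, 0, 0)


bits3 = list(product((0, 1), repeat=3))
print(is_reversible(toffoli, bits3))   # True
print(is_reversible(and_step, bits3))  # False
```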

Wirth's law is an adage on computer performance which states that software is getting slower more rapidly than hardware is becoming faster.

Edge computing is a distributed computing model that brings computation and data storage closer to the sources of data. More broadly, it refers to any design that pushes computation physically closer to the user in order to reduce latency relative to running the application in a centralized data centre.
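Part of the latency benefit comes from plain signal propagation time. The sketch below computes a lower bound on round-trip delay over optical fiber. It assumes signals travel at about 2/3 the speed of light in vacuum (roughly 2 × 10^8 m/s) and ignores queuing, routing, and processing delays. Both distances are hypothetical.

```python
# Assumption: propagation speed in optical fiber is roughly 2/3 of c
SPEED_IN_FIBER_M_PER_S = 2.0e8


def round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay in milliseconds (no queuing or processing)."""
    # 2 * distance_m / speed gives seconds; multiply by 1000 for ms (1e3 * 1e3 = 1e6 for km -> m, s -> ms)
    return 2 * distance_km * 1e6 / SPEED_IN_FIBER_M_PER_S


print(round_trip_ms(2000))  # distant data centre (hypothetical): 20.0
print(round_trip_ms(20))    # nearby edge site (hypothetical): 0.2
```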

Edholm's law, proposed by and named after Phil Edholm, refers to the observation that the three categories of telecommunication, namely wireless (mobile), nomadic, and wired (fixed) networks, are in lockstep and gradually converging. Edholm's law also holds that data rates for these categories increase on similar exponential curves, with the slower rates trailing the faster ones by a predictable time lag. It predicts that bandwidth and data rates double every 18 months, a trend that has held since the 1970s. The trend is evident in the cases of the Internet, cellular (mobile) networks, wireless LANs, and wireless personal area networks.
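The "predictable time lag" follows from the shared exponential curve. Suppose two categories both double every 18 months and one is currently `k` times faster than the other. Then the slower one reaches the faster one's present rate after `18 * log2(k)` months, and that lag stays constant over time. The sketch below assumes exactly that model. The two link rates are hypothetical.

```python
import math

DOUBLING_MONTHS = 18  # doubling period stated by Edholm's law


def rate_after(rate_now: float, months: float) -> float:
    """Data rate after `months`, assuming doubling every DOUBLING_MONTHS."""
    return rate_now * 2 ** (months / DOUBLING_MONTHS)


def lag_months(fast_rate: float, slow_rate: float) -> float:
    """Months until the slower category reaches the faster one's current rate."""
    return DOUBLING_MONTHS * math.log2(fast_rate / slow_rate)


# Hypothetical: wired at 1000 Mbit/s vs wireless at 125 Mbit/s (8x slower)
print(lag_months(1000, 125))  # three doublings, i.e. 54 months
# Both grow by the same factor, so the lag is unchanged three years later
print(lag_months(rate_after(1000, 36), rate_after(125, 36)))
```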

In computing, performance per watt is a measure of the energy efficiency of a particular computer architecture or computer hardware. Literally, it measures the rate of computation that a computer can deliver for every watt of power consumed. When computing systems are compared, this rate is typically measured by performance on the LINPACK benchmark; the Green500 list of supercomputers, for example, uses this approach. Performance per watt has been suggested as a more sustainable measure of computing than Moore's law.
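A Green500-style comparison divides sustained LINPACK performance by the power drawn during the run and ranks systems by the result. The sketch below assumes that simple ratio. The two systems and their numbers are hypothetical, not real list entries.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    rmax_gflops: float  # sustained LINPACK performance (hypothetical)
    power_kw: float     # average power during the run (hypothetical)

    @property
    def gflops_per_watt(self) -> float:
        return self.rmax_gflops / (self.power_kw * 1000)


systems = [
    System("A", rmax_gflops=2.0e6, power_kw=100.0),
    System("B", rmax_gflops=5.0e6, power_kw=500.0),
]

# Rank by efficiency, most efficient first
ranked = sorted(systems, key=lambda s: s.gflops_per_watt, reverse=True)
for s in ranked:
    print(s.name, s.gflops_per_watt)
```

Here B delivers more raw performance, but A ranks first because it does more work per watt (20 vs 10 GFLOPS/W).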

Mark D. Papermaster is an American business executive who is the chief technology officer (CTO) and executive vice president for technology and engineering at Advanced Micro Devices (AMD). He was promoted to executive vice president at AMD on January 25, 2019.

Jonathan Koomey is a researcher who identified a long-term trend in the energy efficiency of computing that has come to be known as Koomey's law. From 1984 to 2003, Dr. Koomey worked at Lawrence Berkeley National Laboratory, where he founded and led the End-Use Forecasting group. He has been a visiting professor at Stanford University, Yale University, and the University of California, Berkeley; a lecturer and consulting professor at Stanford; and a lecturer at UC Berkeley. He is a graduate of Harvard University (A.B.) and the University of California, Berkeley. His research focuses on the economics of greenhouse gas emissions and the effects of information technology on resource use. He has also published extensively on critical thinking skills and business analytics.

The IEEE International Electron Devices Meeting (IEDM) is an annual micro- and nanoelectronics conference held each December that serves as a forum for reporting technological breakthroughs in the areas of semiconductor and related device technologies, design, manufacturing, physics, modeling and circuit-device interaction.

In semiconductor electronics, Dennard scaling, also known as MOSFET scaling, is a scaling law stating, roughly, that as transistors get smaller their power density stays constant, so that power use stays proportional to area: both voltage and current scale downward with length. The law, originally formulated for MOSFETs, is based on a 1974 paper co-authored by Robert H. Dennard, after whom it is named.
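The constant-power-density claim can be checked with a first-order scaling argument. The derivation below assumes that every linear dimension (channel length L, width W, oxide thickness t_ox), the voltage V, and the current I all shrink by the same factor κ > 1. These symbols are introduced here for illustration, and leakage and threshold-voltage effects are ignored.

```latex
\begin{aligned}
L,\ W,\ t_{\mathrm{ox}} &\;\to\; L/\kappa,\ W/\kappa,\ t_{\mathrm{ox}}/\kappa \\
V \;\to\; V/\kappa, &\qquad I \;\to\; I/\kappa \\
C \propto \frac{WL}{t_{\mathrm{ox}}} &\;\to\; \frac{1/\kappa^{2}}{1/\kappa}\,C = C/\kappa \\
\tau \propto \frac{CV}{I} &\;\to\; \frac{(1/\kappa)(1/\kappa)}{1/\kappa}\,\tau = \tau/\kappa
  \quad\Rightarrow\quad f \;\to\; \kappa f \\
P = VI &\;\to\; P/\kappa^{2} \\
A \propto WL &\;\to\; A/\kappa^{2} \\
\frac{P}{A} &\;\to\; \frac{P/\kappa^{2}}{A/\kappa^{2}} = \frac{P}{A}
\end{aligned}
```

As a consistency check, the switching-power form P = CV²f gives the same result, scaling as (1/κ)(1/κ²)(κ) = 1/κ². Each transistor therefore uses 1/κ² of the power in 1/κ² of the area, so power per unit area is unchanged.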

Heterogeneous computing refers to systems that use more than one kind of processor or core. These systems gain performance or energy efficiency not just by adding the same type of processors, but by adding dissimilar coprocessors, usually incorporating specialized processing capabilities to handle particular tasks.
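One way to picture how dissimilar coprocessors improve energy efficiency is a dispatcher that sends each task to the device expected to spend the least energy on it. The sketch below assumes a simple model in which energy is power × (operations / throughput). The device profiles are hypothetical, and the model ignores data-transfer and scheduling costs that real systems must account for.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Device:
    name: str
    watts: float  # assumed average power while busy
    ops_per_s: Dict[str, float] = field(default_factory=dict)  # task kind -> throughput


def energy_joules(dev: Device, kind: str, ops: float) -> float:
    """Energy to run `ops` operations of `kind` on `dev`; infinite if unsupported."""
    rate = dev.ops_per_s.get(kind, 0.0)
    if rate <= 0:
        return float("inf")
    return dev.watts * ops / rate


def pick_device(devices: List[Device], kind: str, ops: float) -> Device:
    """Route the task to whichever device would spend the least energy on it."""
    return min(devices, key=lambda d: energy_joules(d, kind, ops))


# Hypothetical profiles: a general-purpose CPU and a specialized GPU-like coprocessor
cpu = Device("cpu", watts=100.0, ops_per_s={"general": 1e9, "matmul": 1e10})
gpu = Device("gpu", watts=300.0, ops_per_s={"matmul": 1e12})

print(pick_device([cpu, gpu], "matmul", 1e12).name)  # gpu
print(pick_device([cpu, gpu], "general", 1e9).name)  # cpu
```

The coprocessor draws more power, but it finishes matrix work so much faster that its total energy is lower. General tasks it cannot run stay on the CPU.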

Superconducting logic refers to a class of logic circuits or logic gates that use the unique properties of superconductors, including zero-resistance wires, ultrafast Josephson junction switches, and quantization of magnetic flux (fluxoid). As of 2023, superconducting computing is a form of cryogenic computing, because superconductive electronic circuits must be cooled to cryogenic temperatures, typically below 10 kelvin, to operate. Superconducting circuits are also widely used for quantum computing, an important application known as superconducting quantum computing.
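The flux quantization mentioned above has a fixed unit, the magnetic flux quantum Φ0 = h / (2e). The factor 2 appears because the superconducting charge carriers (Cooper pairs) carry charge 2e. The sketch below computes Φ0 from the exact SI values of the Planck constant and the elementary charge. The helper `flux_quanta` is a hypothetical illustration.

```python
# Exact SI values since the 2019 redefinition
PLANCK_H = 6.62607015e-34            # J*s
ELEMENTARY_CHARGE = 1.602176634e-19  # C

# Magnetic flux quantum in webers; Cooper pairs carry charge 2e
PHI_0 = PLANCK_H / (2 * ELEMENTARY_CHARGE)  # about 2.068e-15 Wb


def flux_quanta(flux_wb: float) -> float:
    """Express a magnetic flux in units of the flux quantum."""
    return flux_wb / PHI_0


print(PHI_0)
```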

Beyond CMOS refers to possible future digital logic technologies that go beyond the scaling limits of CMOS technology, which limit device density and speed because of heating effects.

The Task Force on Rebooting Computing (TFRC), housed within the IEEE Computer Society, is the new home for the IEEE Rebooting Computing Initiative. Founded in 2013 by the IEEE Future Directions Committee, Rebooting Computing has provided an international, interdisciplinary environment where experts from a wide variety of computer-related fields can come together to explore novel approaches to future computing. IEEE Rebooting Computing began as a global IEEE initiative to rethink the concept of computing through a holistic look at all of its aspects, from the device itself to the user interface. As part of its work, IEEE Rebooting Computing provides access to resources such as conferences and educational events, feature and scholarly articles, reports, and videos.

Samuel Naffziger is an American electrical engineer who has been employed at Advanced Micro Devices in Fort Collins, Colorado, since 2006. He was named a Fellow of the Institute of Electrical and Electronics Engineers (IEEE) in 2014 for his leadership in the development of power management and low-power processor technologies. He is also the Senior Vice President and Product Technology Architect at AMD.

Huang's law is the observation in computer science and engineering that the performance of graphics processing units (GPUs) is advancing much faster than that of traditional central processing units (CPUs). This contrasts with Moore's law, which predicts that the number of transistors in a dense integrated circuit (IC) doubles about every two years. Huang's law states that GPU performance will more than double every two years. The validity of the hypothesis has been questioned.