Athlon is the brand name applied to a series of x86-compatible microprocessors designed and manufactured by AMD. The original Athlon was the first seventh-generation x86 processor and the first desktop processor to reach speeds of one gigahertz (GHz). It made its debut as AMD's high-end processor brand on June 23, 1999. Over the years AMD has used the Athlon name for the 64-bit Athlon 64 architecture, the Athlon II, Accelerated Processing Unit (APU) chips targeting the Socket AM1 desktop SoC architecture, and Socket AM4 chips based on the Zen microarchitecture. The modern Zen-based Athlon with a Radeon Graphics processor was introduced in 2019 as AMD's highest-performance entry-level processor.

The Cyrix 6x86 is a line of sixth-generation, 32-bit x86 microprocessors designed and released by Cyrix in 1995. Cyrix, being a fabless company, had the chips manufactured by IBM and SGS-Thomson. The 6x86 was made as a direct competitor to Intel's Pentium microprocessor line and was pin-compatible with it. During the 6x86's development, the majority of applications performed almost entirely integer operations, and the designers expected future applications to keep this instruction focus. To optimize the chip for what they believed to be its most likely workload, they gave the integer execution resources most of the transistor budget. This later proved to be a strategic mistake, as the popularity of the P5 Pentium led many software developers to hand-optimize code in assembly language to take advantage of the P5 Pentium's tightly pipelined, lower-latency FPU. For example, the highly anticipated first-person shooter Quake used highly optimized assembly code designed almost entirely around the P5 Pentium's FPU. As a result, the P5 Pentium significantly outperformed other CPUs in the game.

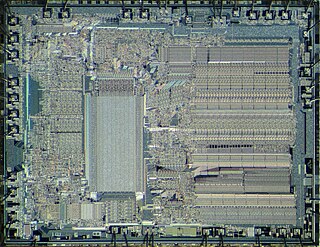

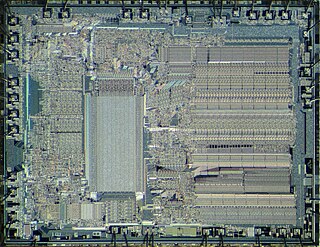

The Intel 80286 is a 16-bit microprocessor that was introduced on February 1, 1982. It was the first 8086-based CPU with separate, non-multiplexed address and data buses and also the first with memory management and wide protection abilities. The 80286 used approximately 134,000 transistors in its original nMOS (HMOS) incarnation and, just like the contemporary 80186, it can correctly execute most software written for the earlier Intel 8086 and 8088 processors.

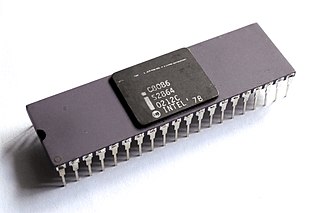

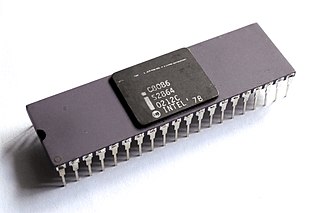

The 8086 is a 16-bit microprocessor chip designed by Intel between early 1976 and June 8, 1978, when it was released. The Intel 8088, released June 1, 1979, is a slightly modified chip with an external 8-bit data bus, and is notable as the processor used in the original IBM PC design.

The Intel 8088 microprocessor is a variant of the Intel 8086. Introduced on June 1, 1979, the 8088 has an eight-bit external data bus instead of the 16-bit bus of the 8086. The 16-bit registers and the one megabyte address range are unchanged, however. In fact, according to the Intel documentation, the 8086 and 8088 have the same execution unit (EU)—only the bus interface unit (BIU) is different. The 8088 was used in the original IBM PC and in IBM PC compatible clones.
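The text above does not say how 16-bit registers reach a one-megabyte address range. As a minimal sketch of the well-known segment:offset scheme used by the 8086 and 8088, the physical address is the 16-bit segment value shifted left by four bits plus a 16-bit offset, truncated to 20 bits. The function and constant names below are illustrative and do not come from Intel documentation.

```python
ADDRESS_BITS = 20                      # 2**20 bytes = 1 MiB of address space
ADDRESS_SPACE = 1 << ADDRESS_BITS


def physical_address(segment: int, offset: int) -> int:
    """Combine two 16-bit values into a 20-bit physical address."""
    for value in (segment, offset):
        if not 0 <= value <= 0xFFFF:
            raise ValueError("segment and offset must fit in 16 bits")
    # Shift the segment left by 4 bits (multiply by 16), add the offset,
    # and wrap at 1 MiB because only 20 address bits are available.
    return ((segment << 4) + offset) % ADDRESS_SPACE


print(ADDRESS_SPACE)                           # 1048576
print(hex(physical_address(0xF000, 0xFFF0)))   # 0xffff0
```

Because the calculation happens in the shared execution unit, the same arithmetic applies to both chips; the 8088's narrower external bus only changes how many bus cycles a 16-bit transfer takes.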

The Intel 486, officially named i486 and also known as 80486, is a microprocessor. It is a higher-performance follow-up to the Intel 386. The i486 was introduced in 1989. It represents the fourth generation of binary compatible CPUs following the 8086 of 1978, the Intel 80286 of 1982, and 1985's i386.

The Pentium is an x86 microprocessor introduced by Intel on March 22, 1993. It is the first CPU using the Pentium brand. Considered the fifth generation in the 8086-compatible line of processors, its implementation and microarchitecture were internally called P5.

Celeron is a discontinued series of low-end IA-32 and x86-64 computer microprocessor models targeted at low-cost personal computers, manufactured by Intel. The first Celeron-branded CPU was introduced on April 15, 1998, and was based on the Pentium II.

Cyrix Corporation was a microprocessor developer that was founded in 1988 in Richardson, Texas, as a specialist supplier of floating point units for 286 and 386 microprocessors. The company was founded by Tom Brightman and Jerry Rogers.

Pentium 4 is a series of single-core CPUs for desktops, laptops and entry-level servers manufactured by Intel. The processors were shipped from November 20, 2000 until August 8, 2008. They were removed from the official price lists starting in 2010, having been replaced by Pentium Dual-Core.

The Pentium III brand refers to Intel's 32-bit x86 desktop and mobile CPUs based on the sixth-generation P6 microarchitecture introduced on February 28, 1999. The brand's initial processors were very similar to the earlier Pentium II-branded processors. The most notable differences were the addition of the Streaming SIMD Extensions (SSE) instruction set, and the introduction of a controversial serial number embedded in the chip during manufacturing.

The PR system was a figure of merit developed by AMD, Cyrix, IBM Microelectronics and SGS-Thomson in the mid-1990s as a method of comparing their x86 processors to those of rival Intel. The idea was to consider instructions per cycle (IPC) in addition to the clock speed, so that the processors could be compared with Intel's Pentium, which had a higher clock speed but overall lower IPC.
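The reasoning behind such a rating can be sketched with the approximation that throughput scales as IPC times clock speed. The snippet below is a simplification under that assumption, not the procedure the vendors used to assign ratings; the function name, the 133 MHz clock and the 1.25 IPC ratio are hypothetical values chosen for illustration.

```python
def equivalent_clock_mhz(clock_mhz: float, ipc_ratio: float) -> float:
    """Clock a reference CPU would need to match a chip's throughput.

    ipc_ratio is the chip's IPC divided by the reference CPU's IPC
    (hypothetical input; assumes throughput = IPC * clock).
    """
    if clock_mhz <= 0 or ipc_ratio <= 0:
        raise ValueError("clock and IPC ratio must be positive")
    return clock_mhz * ipc_ratio


# A chip at 133 MHz doing 25% more work per cycle than the reference
# would be rated like a reference part running at about 166 MHz.
print(equivalent_clock_mhz(133, 1.25))   # 166.25
```

A single IPC ratio hides the fact that IPC varies by workload, so a rating derived from integer-heavy code need not hold for floating-point-heavy code.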

In computing, the clock rate or clock speed typically refers to the frequency at which the clock generator of a processor can generate pulses, which are used to synchronize the operations of its components. It is used as an indicator of the processor's speed and is measured in the SI unit of frequency, the hertz (Hz).
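Since frequency is the reciprocal of period, a clock rate converts directly into the duration of one cycle. The short sketch below shows that arithmetic; the function names are illustrative.

```python
def cycle_time_ns(clock_hz: float) -> float:
    """Duration of one clock cycle in nanoseconds (period = 1 / frequency)."""
    if clock_hz <= 0:
        raise ValueError("clock rate must be positive")
    return 1e9 / clock_hz


def cycles_elapsed(clock_hz: float, seconds: float) -> float:
    """Number of clock pulses generated in the given time span."""
    return clock_hz * seconds


print(cycle_time_ns(1e9))          # 1.0  (a 1 GHz clock ticks once per nanosecond)
print(cycles_elapsed(1e9, 0.001))  # 1000000.0
```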

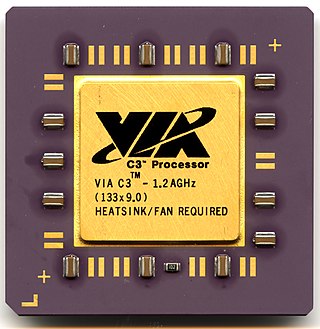

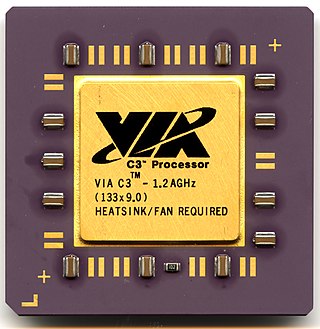

The VIA C3 is a family of x86 central processing units for personal computers designed by Centaur Technology and sold by VIA Technologies. The different CPU cores are built following the design methodology of Centaur Technology.

The NetBurst microarchitecture, called P68 inside Intel, was the successor to the P6 microarchitecture in the x86 family of central processing units (CPUs) made by Intel. The first CPU to use this architecture was the Willamette-core Pentium 4, the first of the Pentium 4 CPUs, released on November 20, 2000; all subsequent Pentium 4 and Pentium D variants were also based on NetBurst. In mid-2001, Intel released the Foster core, which was also based on NetBurst, thus switching the Xeon CPUs to the new architecture as well. Pentium 4-based Celeron CPUs also use the NetBurst architecture.

The Intel 8087, announced in 1980, was the first floating-point coprocessor for the 8086 line of microprocessors. The purpose of the chip was to speed up floating-point arithmetic operations, such as addition, subtraction, multiplication, division, and square root. It also computes transcendental functions such as exponential, logarithmic and trigonometric functions. Performance gains ranged from approximately 20% to over 500%, depending on the specific application. The 8087 could perform about 50,000 FLOPS using around 2.4 watts.
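The figures quoted for the 8087 can be turned into an energy-efficiency number and into speedup factors with simple arithmetic. The sketch below assumes that a gain of N% means the task runs (1 + N/100) times as fast, which is one common reading of such figures and is not stated in the text; the function names are illustrative.

```python
def flops_per_watt(flops: float, watts: float) -> float:
    """Floating-point operations per second delivered per watt consumed."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return flops / watts


def speedup_factor(gain_percent: float) -> float:
    """Convert a percentage gain into a multiplicative speedup (assumed reading)."""
    return 1 + gain_percent / 100


print(round(flops_per_watt(50_000, 2.4)))   # 20833
print(speedup_factor(500))                  # 6.0
```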

The VIA C7 is an x86 central processing unit designed by Centaur Technology and sold by VIA Technologies.

Enhanced SpeedStep (EIST) is a series of dynamic frequency scaling technologies built into some Intel microprocessors that allow the clock speed of the processor to be dynamically changed by software. This allows the processor to meet the instantaneous performance needs of the operation being performed, while minimizing power draw and heat generation. EIST was introduced in several Prescott 6-series processors in the first quarter of 2005, among them the Pentium 4 660. Intel Speed Shift Technology (SST) was introduced with Intel's Skylake processors.

Yonah is the code name of Intel's first-generation 65 nm process CPU cores, based on cores of the earlier Banias / Dothan Pentium M microarchitecture. Yonah CPU cores were used within Intel's Core Solo and Core Duo mobile microprocessor products. SIMD performance on Yonah improved through the addition of SSE3 instructions and improvements to the SSE and SSE2 implementations; integer performance decreased slightly due to a higher-latency cache. Additionally, Yonah included support for the NX bit.

The history of general-purpose CPUs is a continuation of the earlier history of computing hardware.