Related Research Articles

Additive synthesis is a sound synthesis technique that creates timbre by adding sine waves together.
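
The idea can be illustrated with a minimal sketch that sums harmonically related sine waves. The function name `additive_tone`, its parameters, and the 1/k amplitude recipe are illustrative assumptions, not taken from any particular synthesizer.

```python
import math

def additive_tone(f0, amplitudes, sample_rate=44100, duration=0.01):
    """Sum sine waves at integer multiples of f0.

    amplitudes[k] scales harmonic number k + 1 (hypothetical convention).
    """
    n = round(sample_rate * duration)
    out = []
    for i in range(n):
        t = i / sample_rate
        out.append(sum(a * math.sin(2 * math.pi * f0 * (k + 1) * t)
                       for k, a in enumerate(amplitudes)))
    return out

# Eight harmonics with amplitude 1/k give a rough sawtooth-like timbre.
samples = additive_tone(220.0, [1 / k for k in range(1, 9)])
```

Changing the amplitude list changes the timbre while the pitch stays at `f0`.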

A digital synthesizer is a synthesizer that uses digital signal processing (DSP) techniques to make musical sounds, in contrast to older analog synthesizers, which produce music using analog electronics, and samplers, which play back digital recordings of acoustic, electric, or electronic instruments. Some digital synthesizers emulate analog synthesizers, while others include sampling capability in addition to digital synthesis.

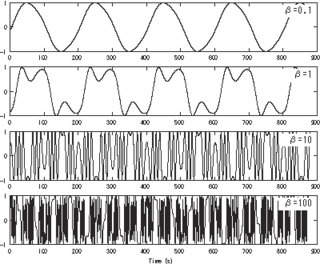

Frequency modulation synthesis is a form of sound synthesis in which the frequency of a waveform is changed by a modulating signal: the instantaneous frequency of an oscillator is altered in accordance with the amplitude of the modulator.
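
One common way to write this is the phase-modulation form y(t) = sin(2π·f_c·t + I·sin(2π·f_m·t)), whose instantaneous frequency is f_c + I·f_m·cos(2π·f_m·t). The sketch below assumes that form; the names `fm_tone`, `carrier_hz`, `modulator_hz`, and `index` are illustrative.

```python
import math

def fm_tone(carrier_hz, modulator_hz, index, sample_rate=44100, duration=0.01):
    """Sine carrier whose phase is offset by a scaled sine modulator."""
    n = round(sample_rate * duration)
    out = []
    for i in range(n):
        t = i / sample_rate
        mod = math.sin(2 * math.pi * modulator_hz * t)
        # index controls how far the modulator pushes the carrier's phase
        out.append(math.sin(2 * math.pi * carrier_hz * t + index * mod))
    return out

bell_like = fm_tone(440.0, 616.0, index=3.0)
```

With `index=0` the output is a plain sine at the carrier frequency; larger values spread energy into sidebands around it.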

Subtractive synthesis is a method of sound synthesis in which overtones of an audio signal are attenuated by a filter to alter the timbre of the sound.
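
As a rough sketch of the principle, the code below generates an overtone-rich sawtooth and passes it through a one-pole low-pass filter. The filter design and the names `sawtooth` and `one_pole_lowpass` are assumptions chosen for brevity; real instruments typically use steeper, resonant filters.

```python
import math

def sawtooth(f0, n, sample_rate=44100):
    """Naive (non-band-limited) sawtooth in [-1, 1), rich in overtones."""
    return [2.0 * ((f0 * i / sample_rate) % 1.0) - 1.0 for i in range(n)]

def one_pole_lowpass(signal, cutoff_hz, sample_rate=44100):
    """y[n] = y[n-1] + a * (x[n] - y[n-1]); attenuates content above the cutoff."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y, out = 0.0, []
    for x in signal:
        y += a * (x - y)
        out.append(y)
    return out

raw = sawtooth(220.0, 441)
mellow = one_pole_lowpass(raw, cutoff_hz=500.0)
```

Lowering `cutoff_hz` removes more of the upper overtones, which is heard as a duller timbre.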

Karplus–Strong string synthesis is a method of physical modelling synthesis that loops a short waveform through a filtered delay line to simulate the sound of a hammered or plucked string or some types of percussion.
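
A simplified variant of the loop can be sketched as follows: a burst of noise fills a delay line one period long, and each sample is replaced by a damped average of itself and its neighbour as it is read out. The name `karplus_strong` and the `decay` and `seed` parameters are illustrative assumptions.

```python
import random

def karplus_strong(frequency, n_samples, sample_rate=44100, decay=0.996, seed=0):
    """Plucked-string sketch: noise burst recirculated through an averaging filter."""
    period = max(2, round(sample_rate / frequency))   # delay-line length in samples
    rng = random.Random(seed)
    buf = [rng.uniform(-1.0, 1.0) for _ in range(period)]  # initial excitation
    out = []
    for i in range(n_samples):
        cur = buf[i % period]
        nxt = buf[(i + 1) % period]
        out.append(cur)
        # Two-point average acts as a gentle low-pass filter in the feedback path.
        buf[i % period] = decay * 0.5 * (cur + nxt)
    return out

pluck = karplus_strong(441.0, 4410)
```

The delay-line length sets the pitch, and the averaging step makes high frequencies die away faster than low ones, as on a real string.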

Digital music technology encompasses the use of digital instruments to produce, perform or record music. These instruments include computers, electronic effects units, software, and digital audio equipment. Digital music technology is used in the performance, playback, recording, composition, mixing, analysis and editing of music by professionals in all parts of the music industry.

Digital waveguide synthesis is the synthesis of audio using a digital waveguide. Digital waveguides are efficient computational models for physical media through which acoustic waves propagate. For this reason, digital waveguides constitute a major part of most modern physical modeling synthesizers.
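
A minimal sketch of an ideal string modelled this way uses two delay lines, one for the right-going and one for the left-going travelling wave, with inverting reflections at both ends. The function `waveguide_string`, its parameters, and the triangular pluck shape are assumptions for illustration; practical models add filters at the terminations.

```python
def waveguide_string(n_steps, length=50, pickup=10, loss=0.995):
    """Two-rail digital waveguide; assumes length >= 4 and 0 <= pickup < length."""
    pluck = length // 3
    # Triangular initial displacement, split equally between the two rails.
    shape = [i / pluck if i <= pluck else (length - 1 - i) / (length - 1 - pluck)
             for i in range(length)]
    right = [0.5 * s for s in shape]   # wave travelling toward higher indices
    left = [0.5 * s for s in shape]    # wave travelling toward lower indices
    out = []
    for _ in range(n_steps):
        # Physical displacement is the sum of the two travelling waves.
        out.append(right[pickup] + left[pickup])
        at_far_end, at_near_end = right[-1], left[0]
        # Shift each rail one sample; reflections invert, one end also damps.
        right = [-at_near_end] + right[:-1]
        left = left[1:] + [-loss * at_far_end]
    return out

string = waveguide_string(400)
```

Each sample makes a full round trip in `2 * length` steps, so the output repeats with that period, scaled by `loss` on every pass.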

The Yamaha DX7 is a synthesizer manufactured by Yamaha Corporation from 1983 to 1989. It was the first successful digital synthesizer and is one of the best-selling synthesizers in history, selling more than 200,000 units.

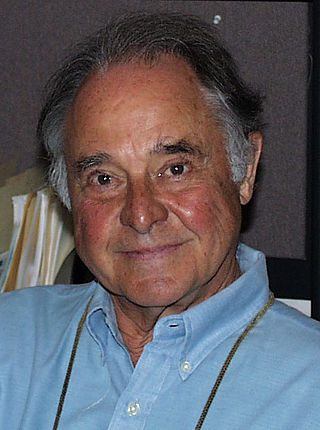

John M. Chowning is an American composer, musician, discoverer, and professor best known for his work at Stanford University, where he founded the Center for Computer Research in Music and Acoustics (CCRMA) in 1975 and developed the digital implementation of FM synthesis and digital sound spatialization.

The Sound Blaster 16 is a series of sound cards by Creative Technology, first released in June 1992 for PCs with an ISA or PCI slot. It was the successor to the Sound Blaster Pro series of sound cards and introduced CD-quality digital audio to the Sound Blaster line. For optional wavetable synthesis, the Sound Blaster 16 also added an expansion header for add-on MIDI daughterboards, called a Wave Blaster connector, and a game port for optional connection to external MIDI sound modules.

A synthesizer is an electronic musical instrument that generates audio signals. Synthesizers typically create sounds by generating waveforms through methods including subtractive synthesis, additive synthesis and frequency modulation synthesis. These sounds may be altered by components such as filters, which cut or boost frequencies; envelopes, which control articulation, or how notes begin and end; and low-frequency oscillators, which modulate parameters such as pitch, volume, or filter characteristics affecting timbre. Synthesizers are typically played with keyboards or controlled by sequencers, software or other instruments, and may be synchronized to other equipment via MIDI.

Banded waveguide synthesis is a physical modeling synthesis method for efficiently simulating the sounds of dispersive objects, or objects with strongly inharmonic resonant frequencies. It can be used to model the sound of instruments based on elastic solids, such as vibraphone and marimba bars, singing bowls and bells. It can also be used for other instruments with inharmonic partials, such as membranes or plates. For example, simulations of tabla drums and cymbals have been implemented using this method. Because banded waveguides retain the dynamics of the system, complex non-linear excitations can be implemented. The method was invented in 1999 by Georg Essl and Perry Cook to synthesize the sound of bowed vibraphone bars.

Gnuspeech is an extensible text-to-speech computer software package that produces artificial speech output based on real-time articulatory speech synthesis by rules. That is, it converts text strings into phonetic descriptions, aided by a pronouncing dictionary, letter-to-sound rules, and rhythm and intonation models; transforms the phonetic descriptions into parameters for a low-level articulatory speech synthesizer; uses these to drive an articulatory model of the human vocal tract, producing an output suitable for the normal sound output devices used by various computer operating systems; and, for adult speech, does this at least as fast as the speech is spoken.

David Aaron Jaffe is an American composer who has written over ninety works for orchestra, chorus, chamber ensembles, and electronics. He is best known for his use of technology as an electronic and computer music composer in works such as Silicon Valley Breakdown. He is also known for his algorithmic innovations in computer music, such as the physical modeling of plucked and bowed strings, and for his development of music software such as the NeXT Music Kit and the Universal Audio UAD-2/Apollo/LUNA Recording System.

The Kronos is a music workstation manufactured by Korg that combines nine different synthesizer sound engines with a sequencer, digital recorder, effects, a color touchscreen display and a keyboard. Korg's flagship synthesizer series at the time, the Kronos was announced at the winter NAMM Show in Anaheim, California in January 2011.

The Korg Z1 is a digital synthesizer released by Korg in 1997. The Z1 built upon the foundation set by the monophonic Prophecy released two years prior by offering 12-note polyphony and featuring expanded oscillator options, a polyphonic arpeggiator and an XY touchpad for enhanced performance interaction. It was the world's first multitimbral physical modelling synthesizer.

The Korg OASYS PCI is a DSP-based PCI card for PC and Mac released in 1999. It offers many synthesizer engines, from sampling and subtractive to FM and physical modelling. Because of its high market price and low polyphony, production was stopped in 2001. About 2000 cards were produced.

The MicroFreak is a synthesizer manufactured by French music technology company Arturia and released in 2019. Described as a "Hybrid Experimental Synthesizer", it uses 18 digital sound engines (algorithms) to synthesize raw tones. The output of this digital oscillator is then fed into a multi-mode analog filter, giving the MicroFreak its hybrid sound.

The Nautilus is a music workstation manufactured by Korg, a successor to Kronos 2, which comes with Kronos' nine different synthesizer sound engines and other similar features. It was announced in November 2020 with availability in January 2021.
