Related Research Articles

Software testing is the act of checking whether software satisfies expectations.

In computer networking, a thin client, sometimes called slim client or lean client, is a simple (low-performance) computer that has been optimized for establishing a remote connection with a server-based computing environment. They are sometimes known as network computers, or in their simplest form as zero clients. The server does most of the work, which can include launching software programs, performing calculations, and storing data. This contrasts with a rich client or a conventional personal computer; the former is also intended for working in a client–server model but has significant local processing power, while the latter aims to perform its function mostly locally.

In software quality assurance, performance testing is in general a testing practice performed to determine how a system performs in terms of responsiveness and stability under a particular workload. It can also serve to investigate, measure, validate or verify other quality attributes of the system, such as scalability, reliability and resource usage.

In computing, overclocking is the practice of increasing the clock rate of a computer to exceed that certified by the manufacturer. Commonly, operating voltage is also increased to maintain a component's operational stability at accelerated speeds. Semiconductor devices operated at higher frequencies and voltages increase power consumption and heat. An overclocked device may be unreliable or fail completely if the additional heat load is not removed or power delivery components cannot meet increased power demands. Many device warranties state that overclocking or over-specification voids any warranty, but some manufacturers allow overclocking as long as it is done (relatively) safely.

In engineering, a factor of safety (FoS) or safety factor (SF) expresses how much stronger a system is than it needs to be for an intended load. Safety factors are often calculated using detailed analysis because comprehensive testing is impractical on many projects, such as bridges and buildings, but the structure's ability to carry a load must be determined to a reasonable accuracy. Many systems are intentionally built much stronger than needed for normal usage to allow for emergency situations, unexpected loads, misuse, or degradation (reliability). Margin of safety is a related measure, expressed as a relative change.

Load testing is the process of putting demand on a structure or system and measuring its response.

Failure mode and effects analysis is the process of reviewing as many components, assemblies, and subsystems as possible to identify potential failure modes in a system and their causes and effects. For each component, the failure modes and their resulting effects on the rest of the system are recorded in a specific FMEA worksheet. There are numerous variations of such worksheets. A FMEA can be a qualitative analysis, but may be put on a quantitative basis when mathematical failure rate models are combined with a statistical failure mode ratio database. It was one of the first highly structured, systematic techniques for failure analysis. It was developed by reliability engineers in the late 1950s to study problems that might arise from malfunctions of military systems. An FMEA is often the first step of a system reliability study.

Reliability engineering is a sub-discipline of systems engineering that emphasizes the ability of equipment to function without failure. Reliability is defined as the probability that a product, system, or service will perform its intended function adequately for a specified period of time, OR will operate in a defined environment without failure. Reliability is closely related to availability, which is typically described as the ability of a component or system to function at a specified moment or interval of time.

Fault tolerance is the ability of a system to maintain proper operation despite failures or faults in one or more of its components. This capability is essential for high-availability, mission-critical, or even life-critical systems.

In computer science, fault injection is a testing technique for understanding how computing systems behave when stressed in unusual ways. This can be achieved using physical- or software-based means, or using a hybrid approach. Widely studied physical fault injections include the application of high voltages, extreme temperatures and electromagnetic pulses on electronic components, such as computer memory and central processing units. By exposing components to conditions beyond their intended operating limits, computing systems can be coerced into mis-executing instructions and corrupting critical data.

Stress testing is a software testing activity that determines the robustness of software by testing beyond the limits of normal operation. Stress testing is particularly important for "mission critical" software, but is used for all types of software. Stress tests commonly put a greater emphasis on robustness, availability, and error handling under a heavy load, than on what would be considered correct behavior under normal circumstances.

A highly accelerated life test (HALT) is a stress testing methodology for enhancing product reliability in which prototypes are stressed to a much higher degree than expected from actual use in order to identify weaknesses in the design or manufacture of the product. Manufacturing and research and development organizations in the electronics, computer, medical, and military industries use HALT to improve product reliability.

API testing is a type of software testing that involves testing application programming interfaces (APIs) directly and as part of integration testing to determine if they meet expectations for functionality, reliability, performance, and security. Since APIs lack a GUI, API testing is performed at the message layer. API testing is now considered critical for automating testing because APIs serve as the primary interface to application logic and because GUI tests are difficult to maintain with the short release cycles and frequent changes commonly used with Agile software development and DevOps.

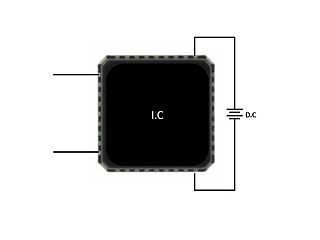

High-temperature operating life (HTOL) is a reliability test applied to integrated circuits (ICs) to determine their intrinsic reliability. This test stresses the IC at an elevated temperature, high voltage and dynamic operation for a predefined period of time. The IC is usually monitored under stress and tested at intermediate intervals. This reliability stress test is sometimes referred to as a lifetime test, device life test or extended burn in test and is used to trigger potential failure modes and assess IC lifetime.

A stress test of hardware is a form of deliberately intense and thorough testing used to determine the stability of a given system or entity. It involves testing beyond normal operational capacity, often to a breaking point, in order to observe the results.

Research and literature on concurrency testing and concurrent testing typically focuses on testing software and systems that use concurrent computing. The purpose is, as with most software testing, to understand the behaviour and performance of a software system that uses concurrent computing, particularly assessing the stability of a system or application during normal activity.

This article discusses a set of tactics useful in software testing. It is intended as a comprehensive list of tactical approaches to software quality assurance and general application of the test method.

For the American musician, see Wayne Nelson.

Stress testing is a form of deliberately intense or thorough testing, used to determine the stability of a given system, critical infrastructure or entity. It involves testing beyond normal operational capacity, often to a breaking point, in order to observe the results.

The term load testing or stress testing is used in different ways in the professional software testing community. Load testing generally refers to the practice of modeling the expected usage of a software program by simulating multiple users accessing the program concurrently. As such, this testing is most relevant for multi-user systems; often one built using a client/server model, such as web servers. However, other types of software systems can also be load tested. For example, a word processor or graphics editor can be forced to read an extremely large document; or a financial package can be forced to generate a report based on several years' worth of data. The most accurate load testing simulates actual use, as opposed to testing using theoretical or analytical modeling.

References

- ↑ "Keep it stable, stupid! How to stress-test your PC hardware". PCWorld. Retrieved 2023-03-11.

- ↑ Nelson, Wayne B., (2004), Accelerated Testing - Statistical Models, Test Plans, and Data Analysis, John Wiley & Sons, New York, ISBN 0-471-69736-2