In computing, extract, transform, load (ETL) is a three-phase process where data is first extracted then transformed and finally loaded into an output data container. The data can be collated from one or more sources and it can also be outputted to one or more destinations. ETL processing is typically executed using software applications but it can be also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on reoccurring schedules either as single jobs or aggregated into a batch of jobs.

A domain-specific language (DSL) is a computer language specialized to a particular application domain. This is in contrast to a general-purpose language (GPL), which is broadly applicable across domains. There are a wide variety of DSLs, ranging from widely used languages for common domains, such as HTML for web pages, down to languages used by only one or a few pieces of software, such as MUSH soft code. DSLs can be further subdivided by the kind of language, and include domain-specific markup languages, domain-specific modeling languages, and domain-specific programming languages. Special-purpose computer languages have always existed in the computer age, but the term "domain-specific language" has become more popular due to the rise of domain-specific modeling. Simpler DSLs, particularly ones used by a single application, are sometimes informally called mini-languages.

Apache Cocoon, usually abbreviated as Cocoon, is a web application framework built around the concepts of Pipeline, separation of concerns, and component-based web development. The framework focuses on XML and XSLT publishing and is built using the Java programming language. The flexibility afforded by relying heavily on XML allows rapid content publishing in a variety of formats including HTML, PDF, and WML. The content management systems Apache Lenya and Daisy have been created on top of the framework. Cocoon is also commonly used as a data warehousing ETL tool or as middleware for transporting data between systems.

Data Transformation Services, or DTS, is a set of objects and utilities to allow the automation of extract, transform and load operations to or from a database. The objects are DTS packages and their components, and the utilities are called DTS tools. DTS was included with earlier versions of Microsoft SQL Server, and was almost always used with SQL Server databases, although it could be used independently with other databases.

In computing and data management, data mapping is the process of creating data element mappings between two distinct data models. Data mapping is used as a first step for a wide variety of data integration tasks, including:

Analysis of Functional NeuroImages (AFNI) is an open-source environment for processing and displaying functional MRI data—a technique for mapping human brain activity.

SQL Server Integration Services (SSIS) is a component of the Microsoft SQL Server database software that can be used to perform a broad range of data migration tasks.

Data cleansing or data cleaning is the process of detecting and correcting corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data. Data cleansing may be performed interactively with data wrangling tools, or as batch processing through scripting.

A semantic mapper is tool or service that aids in the transformation of data elements from one namespace into another namespace. A semantic mapper is an essential component of a semantic broker and one tool that is enabled by the Semantic Web technologies.

Sunopsis is a software company based near Lyon, France. It also has a United States headquarters in Burlington, Massachusetts. The company was bought by Oracle in October 2006. The new name of Sunopsis is Oracle Data Integrator - ODI.

Data integration involves combining data residing in different sources and providing users with a unified view of them. This process becomes significant in a variety of situations, which include both commercial and scientific domains. Data integration appears with increasing frequency as the volume and the need to share existing data explodes. It has become the focus of extensive theoretical work, and numerous open problems remain unsolved. Data integration encourages collaboration between internal as well as external users. The data being integrated must be received from a heterogeneous database system and transformed to a single coherent data store that provides synchronous data across a network of files for clients. A common use of data integration is in data mining when analyzing and extracting information from existing databases that can be useful for Business information.

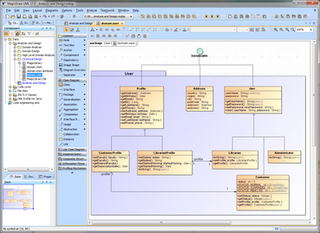

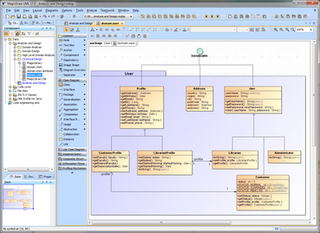

MagicDraw is a visual UML, SysML, BPMN, and UPDM modeling tool with team collaboration support. Designed for business analysts, software analysts, programmers, and QA engineers, this dynamic and versatile development tool facilitates analysis and design of object oriented (OO) systems and databases. It provides the code engineering mechanism, as well as database schema modeling, DDL generation and reverse engineering facilities.

Sandcastle is a documentation generator from Microsoft. It automatically produces MSDN-style code documentation out of reflection information of .NET assemblies and XML documentation comments found in the source code of these assemblies. It can also be used to produce user documentation from Microsoft Assistance Markup Language (MAML) with the same look and feel as reference documentation.

IBM App Connect Enterprise is IBM's integration broker from the WebSphere product family that allows business information to flow between disparate applications across multiple hardware and software platforms. Rules can be applied to the data flowing through the message broker to route and transform the information. The product is an Enterprise Service Bus supplying a communication channel between applications and services in a service-oriented architecture.

Data wrangling, sometimes referred to as data munging, is the process of transforming and mapping data from one "raw" data form into another format with the intent of making it more appropriate and valuable for a variety of downstream purposes such as analytics. The goal of data wrangling is to assure quality and useful data. Data analysts typically spend the majority of their time in the process of data wrangling compared to the actual analysis of the data.

Domain-specific multimodeling is a software development paradigm where each view is made explicit as a separate domain-specific language (DSL).

An integration platform is software which integrates different applications and services. It differentiates itself from the enterprise application integration which has a focus on supply chain management. It uses the idea of system integration to create an environment for engineers.

KNIME, the Konstanz Information Miner, is a free and open-source data analytics, reporting and integration platform. KNIME integrates various components for machine learning and data mining through its modular data pipelining "Building Blocks of Analytics" concept. A graphical user interface and use of JDBC allows assembly of nodes blending different data sources, including preprocessing, for modeling, data analysis and visualization without, or with only minimal, programming.

Guaraná DSL is a domain-specific language (DSL) to design enterprise application integration (EAI) solutions at a high level of abstraction. The resulting models are platform-independent, so engineers do not need to have skills on a low-level integration technology when designing their solutions. Furthermore, this design can be re-used to automatically generate executable EAI solutions for different target technologies.

Oracle TopLink is a mapping and persistence framework for Java developers. TopLink is produced by Oracle and is a part of Oracle's OracleAS, WebLogic, and OC4J servers. It is an object-persistence and object-transformation framework. TopLink provides development tools and run-time functionalities that ease the development process and help increase functionality. Persistent object-oriented data is stored in relational databases which helps build high-performance applications. Storing data in either XML or relational databases is made possible by transforming it from object-oriented data.