Related Research Articles

In computing, a database is an organized collection of data or a type of data store based on the use of a database management system (DBMS), the software that interacts with end users, applications, and the database itself to capture and analyze the data. The DBMS additionally encompasses the core facilities provided to administer the database. The sum total of the database, the DBMS and the associated applications can be referred to as a database system. Often the term "database" is also used loosely to refer to any of the DBMS, the database system or an application associated with the database.

Enterprise resource planning (ERP) is the integrated management of main business processes, often in real time and mediated by software and technology. ERP is usually referred to as a category of business management software (typically a suite of integrated applications) that an organization can use to collect, store, manage and interpret data from many business activities. ERP systems can be deployed on premises or in the cloud. Cloud-based applications have grown in recent years because of the efficiencies gained when information is readily available from any location with Internet access.

In computing, a legacy system is an old method, technology, computer system, or application program, "of, relating to, or being a previous or outdated computer system", yet still in use. Often referencing a system as "legacy" means that it paved the way for the standards that would follow it. This can also imply that the system is out of date or in need of replacement.

In computing, extract, transform, load (ETL) is a three-phase process where data is extracted from an input source, transformed, and loaded into an output data container. The data can be collated from one or more sources and it can also be output to one or more destinations. ETL processing is typically executed using software applications but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on recurring schedules either as single jobs or aggregated into a batch of jobs.
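The three phases can be illustrated with a minimal sketch, assuming a small hypothetical CSV source (`SOURCE_CSV`) and an in-memory SQLite database as the output container. The function names `extract`, `transform`, `load` and the `payments` table are illustrative only, not part of any ETL product's API.

```python
import csv
import io
import sqlite3

# Hypothetical source data standing in for an input file or upstream system.
SOURCE_CSV = """id,name,amount
1, Alice ,10.50
2,Bob,7.25
3, carol,3
"""


def extract(text):
    """Extract: read raw rows from the CSV source (every value is a string)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim and normalize names, convert id/amount to numeric types."""
    return [
        (int(r["id"]), r["name"].strip().title(), float(r["amount"]))
        for r in rows
    ]


def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(text, conn):
    """Run the three phases in order, as a single job."""
    load(transform(extract(text)), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(SOURCE_CSV, conn)
    print(conn.execute("SELECT name, amount FROM payments ORDER BY id").fetchall())
```

Keeping each phase in its own function makes it straightforward to schedule `run_etl` as a recurring job or to chain several such jobs into a batch.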

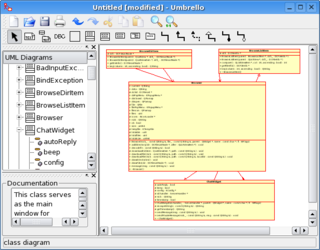

Computer-aided software engineering (CASE) is a domain of software tools used to design and implement applications. CASE tools are similar to and are partly inspired by computer-aided design (CAD) tools used for designing hardware products. CASE tools are intended to help develop high-quality, defect-free, and maintainable software. CASE software was often associated with methods for the development of information systems together with automated tools that could be used in the software development process.

Digital obsolescence is the risk of data loss caused by the inability to access digital assets when the hardware or software needed to retrieve them is repeatedly replaced by newer devices and systems, leaving older formats increasingly incompatible. The threat of an eventual "digital dark age" attracted little concern until the 1990s. Since then, digital preservation efforts in the information and archival fields have adopted protocols and strategies such as data migration and technical audits, while the salvage and emulation of antiquated hardware and software help limit the potential damage to long-term information access.

Enterprise content management (ECM) extends the concept of content management by adding a timeline for each content item and, possibly, enforcing processes for its creation, approval, and distribution. Systems using ECM generally provide a secure repository for managed items, analog or digital. They also include one or more methods for importing content to manage new items, and several presentation methods to make items available for use. Although ECM content may be protected by digital rights management (DRM), it is not required. ECM is distinguished from general content management by its cognizance of the processes and procedures of the enterprise for which it is created.

Enterprise software, also known as enterprise application software (EAS), is computer software used to satisfy the needs of an organization rather than its individual users. Enterprise software is an integral part of a computer-based information system, handling a number of business operations, for example to enhance business and management reporting tasks, or support production operations and back office functions. Enterprise systems must process information at a relatively high speed.

IBM InfoSphere DataStage is an ETL tool and part of the IBM Information Platforms Solutions suite and IBM InfoSphere. It uses a graphical notation to construct data integration solutions and is available in various versions such as the Server Edition, the Enterprise Edition, and the MVS Edition. It uses a client-server architecture, and the servers can be deployed on both Unix and Windows.

Business intelligence software is a type of application software designed to retrieve, analyze, transform and report data for business intelligence (BI). The applications generally read data that has been previously stored, often, though not necessarily, in a data warehouse or data mart.

In computing, data transformation is the process of converting data from one format or structure into another format or structure. It is a fundamental aspect of most data integration and data management tasks, such as data wrangling, data warehousing, and application integration.
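A change of both structure and format can be shown with a minimal sketch, assuming hypothetical flat order rows such as might be exported from a relational table. The rows are regrouped into one nested record per order (a structural change) and then serialized as JSON (a format change). The names `flat_rows`, `to_nested` and `to_json` are illustrative only.

```python
import json
from collections import defaultdict

# Hypothetical flat records, e.g. rows exported from a relational table.
flat_rows = [
    {"order_id": 1, "item": "pen", "qty": 2},
    {"order_id": 1, "item": "ink", "qty": 1},
    {"order_id": 2, "item": "pad", "qty": 5},
]


def to_nested(rows):
    """Structural change: one record per order, with a nested list of items."""
    grouped = defaultdict(list)  # preserves first-seen order of order_id
    for row in rows:
        grouped[row["order_id"]].append({"item": row["item"], "qty": row["qty"]})
    return [{"order_id": oid, "items": items} for oid, items in grouped.items()]


def to_json(rows):
    """Format change: serialize the nested structure as JSON text."""
    return json.dumps(to_nested(rows), sort_keys=True)


print(to_json(flat_rows))
```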

Legacy modernization, also known as software modernization or platform modernization, refers to the conversion, rewriting or porting of a legacy system to modern computer programming languages, architectures, software libraries, protocols or hardware platforms. Legacy transformation aims to retain and extend the value of the legacy investment by migrating it to new platforms so that it can benefit from newer technologies.

In information systems, applications architecture or application architecture is one of several architecture domains that form the pillars of an enterprise architecture (EA).

RTTS is a professional services organization that provides software quality outsourcing, training, and resources for business applications. With offices in New York City, Philadelphia, Atlanta, and Phoenix, RTTS serves mid-sized to large corporations throughout North America. RTTS uses the software quality and test solutions from IBM, Hewlett Packard Enterprise, Microsoft and other vendors and open source tools to perform software performance testing, functional test automation, big data testing, data warehouse/ETL testing, mobile application testing, security testing and service virtualization.

Database preservation usually involves converting the information stored in a database to a form likely to be accessible in the long term as technology changes, without losing the initial characteristics of the data.
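One possible approach is to export each table to plain-text CSV alongside the schema's `CREATE TABLE` statements, since plain text tends to stay readable as software changes. The sketch below assumes an SQLite source. The function name `export_for_preservation` is hypothetical, and CSV plus DDL is only one choice of long-term format, not a preservation standard.

```python
import csv
import io
import sqlite3


def export_for_preservation(conn):
    """Return ({table_name: csv_text}, schema_ddl) as plain text (SQLite source assumed)."""
    tables = [name for (name,) in conn.execute(
        "SELECT name FROM sqlite_master WHERE type='table' ORDER BY name")]
    exports = {}
    for table in tables:
        quoted = table.replace('"', '""')  # escape the identifier for SQL
        cur = conn.execute(f'SELECT * FROM "{quoted}"')
        buf = io.StringIO()
        writer = csv.writer(buf)
        writer.writerow([d[0] for d in cur.description])  # column names as header row
        writer.writerows(cur.fetchall())
        exports[table] = buf.getvalue()
    # Keep the original table definitions so column types and constraints are documented.
    schema = "\n".join(sql for (sql,) in conn.execute(
        "SELECT sql FROM sqlite_master WHERE type='table' ORDER BY name"))
    return exports, schema


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE books (id INTEGER, title TEXT)")
conn.execute("INSERT INTO books VALUES (1, 'Dune')")
tables, schema = export_for_preservation(conn)
print(schema)
print(tables["books"])
```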

Innovative Routines International (IRI), Inc. is an American software company first known for bringing mainframe sort merge functionality into open systems. IRI was the first vendor to develop a commercial replacement for the Unix sort command, and combine data transformation and reporting in Unix batch processing environments. In 2007, IRI's coroutine sort ("CoSort") became the first product to collate and convert multi-gigabyte XML and LDIF files, join and lookup across multiple files, and apply role-based data privacy functions for fields within sensitive files.

Linoma Software was a developer of secure managed file transfer and IBM i software solutions. The company was acquired by HelpSystems in June 2016. Mid-sized companies, large enterprises and government entities use Linoma's software products to protect sensitive data and comply with data security regulations such as PCI DSS, HIPAA/HITECH, SOX, GLBA and state privacy laws. Linoma's software runs on a variety of platforms including Windows, Linux, UNIX, IBM i, AIX, Solaris, HP-UX and Mac OS X.

Data virtualization is an approach to data management that allows an application to retrieve and manipulate data without requiring technical details about the data, such as how it is formatted at source, or where it is physically located, and can provide a single customer view of the overall data.
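The idea can be sketched as a thin layer that answers queries by reading the underlying sources at request time, without copying their data. The example assumes two hypothetical sources: a relational CRM table in SQLite and a billing service represented by a plain dictionary. The class `VirtualCustomerView` and its method `get_customer` are illustrative names, not a product API.

```python
import sqlite3


class VirtualCustomerView:
    """Hypothetical virtualization layer: callers ask for a customer by id and
    never see where or how each attribute is stored. Nothing is copied; every
    lookup queries the underlying sources when it is made."""

    def __init__(self, crm_conn, billing_api):
        self._crm = crm_conn          # relational source (SQLite here)
        self._billing = billing_api   # non-relational source (a dict standing in for a remote API)

    def get_customer(self, customer_id):
        row = self._crm.execute(
            "SELECT name, email FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        if row is None:
            return None
        billing = self._billing.get(customer_id, {})
        # Merge both sources into one uniform record for the caller.
        return {"id": customer_id, "name": row[0], "email": row[1],
                "balance": billing.get("balance", 0.0)}


crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
crm.execute("INSERT INTO customers VALUES (1, 'Ada', 'ada@example.com')")
billing = {1: {"balance": 42.0}}

view = VirtualCustomerView(crm, billing)
print(view.get_customer(1))
```

Because the view reads from the sources on every call, a change in either source is visible immediately, which is the main difference from copying the data into a warehouse first.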

The following is provided as an overview of and topical guide to databases:

SAP HANA is an in-memory, column-oriented, relational database management system developed and marketed by SAP SE. Its primary function as the software running a database server is to store and retrieve data as requested by the applications. In addition, it performs advanced analytics and includes extract, transform, load (ETL) capabilities as well as an application server.
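The difference between row-oriented and column-oriented layouts can be illustrated with a toy in-memory table. This is a conceptual sketch only, using hypothetical sales data and a hypothetical `to_columns` helper. It does not describe how SAP HANA actually stores data.

```python
# The same three-row table, first in a row-oriented layout (hypothetical sales data).
rows = [  # each record's fields are stored together
    (1, "north", 120.0),
    (2, "south", 80.0),
    (3, "north", 50.0),
]


def to_columns(rows, names):
    """Pivot row tuples into a dict of per-column lists (column-oriented layout)."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


columns = to_columns(rows, ["id", "region", "amount"])

# An aggregate over one attribute reads a single list in the columnar layout,
# instead of visiting every field of every record as the row layout requires.
total_amount = sum(columns["amount"])
print(total_amount)
```

Analytic queries that touch a few attributes across many records favor the columnar layout, which is one reason column stores are often paired with analytics workloads.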
