Related Research Articles

Control theory deals with the control of dynamical systems in engineered processes and machines. The objective is to develop a model or algorithm governing the application of system inputs to drive the system to a desired state, while minimizing any delay, overshoot, or steady-state error and ensuring a level of control stability, often with the aim of achieving a degree of optimality.

Artificial neural networks (ANNs), usually called neural networks (NNs) or simply neural nets, are computing systems inspired by the biological neural networks that constitute animal brains.

Systems theory is the interdisciplinary study of systems, i.e. cohesive groups of interrelated, interdependent components that can be natural or human-made. Every system has causal boundaries, is influenced by its context, defined by its structure, function and role, and expressed through its relations with other systems. A system is "more than the sum of its parts" by expressing synergy or emergent behavior.

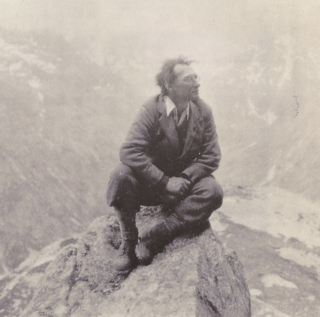

Ivor Armstrong Richards CH, known as I. A. Richards, was an English educator, literary critic, poet, and rhetorician. His work contributed to the foundations of the New Criticism, a formalist movement in literary theory which emphasized the close reading of a literary text, especially poetry, in an effort to discover how a work of literature functions as a self-contained and self-referential æsthetic object.

Instantaneously trained neural networks are feedforward artificial neural networks that create a new hidden neuron node for each novel training sample. The weights to this hidden neuron separate out not only this training sample but others that are near it, thus providing generalization. This separation is done using the nearest hyperplane that can be written down instantaneously. In the two most important implementations the neighborhood of generalization either varies with the training sample or remains constant. These networks use unary coding for an effective representation of the data sets.
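The one-pass idea can be sketched in a few lines. The summary does not give the weight rule, so the Hamming-ball rule below (weights of +1 and -1 plus a bias of `radius - ones + 1`) is an assumption, as are the names `unary_encode`, `train_instantly`, and `classify`. Returning the label of the first neuron that fires is also a simplification of a full output layer.

```python
def unary_encode(value, length):
    """Unary code: `value` ones followed by zeros, padded to `length` bits."""
    if not 0 <= value <= length:
        raise ValueError("value must lie between 0 and length")
    return [1] * value + [0] * (length - value)


def train_instantly(samples, radius):
    """Create one hidden neuron per training sample in a single pass."""
    hidden = []
    for bits, label in samples:
        # Assumed rule: +1 where the sample has a 1, -1 where it has a 0.
        weights = [1 if b == 1 else -1 for b in bits]
        # Assumed bias: the neuron fires for inputs within `radius` bit flips.
        bias = radius - sum(bits) + 1
        hidden.append((weights, bias, label))
    return hidden


def classify(hidden, bits, default=None):
    """Return the label of the first hidden neuron that fires, if any."""
    for weights, bias, label in hidden:
        activation = sum(w * b for w, b in zip(weights, bits)) + bias
        if activation > 0:
            return label
    return default


samples = [(unary_encode(2, 8), "low"), (unary_encode(7, 8), "high")]
network = train_instantly(samples, radius=1)
near_low = classify(network, unary_encode(3, 8))
near_high = classify(network, unary_encode(6, 8))
far_from_both = classify(network, unary_encode(4, 8))
```

With this rule, a neuron's activation equals `radius + 1 - d`, where `d` is the Hamming distance between the input and the stored sample, so `radius` plays the role of the neighborhood of generalization. Here it is held constant across samples.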

A feed forward is an element or pathway within a control system that passes a controlling signal from a source in its external environment to a load elsewhere in its external environment. This is often a command signal from an external operator.
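As a rough illustration, the sketch below models a feed-forward path as a function that passes a command to a load without ever reading the load's output. The `gain` parameter, the additive `disturbance`, and both function names are hypothetical and chosen only for this example.

```python
def feedforward_path(command, gain=1.0):
    """Pass the external command to the load without observing the result."""
    return gain * command


def load_output(drive, disturbance=0.0):
    """Hypothetical load: its output is the drive plus any disturbance."""
    return drive + disturbance


# The operator's command reaches the load unchanged.
undisturbed = load_output(feedforward_path(10.0))

# A disturbance shifts the output, and nothing in the path corrects for it.
disturbed = load_output(feedforward_path(10.0), disturbance=-2.0)
```

Because the path never looks at the output, any disturbance at the load passes through uncorrected, which is the practical difference from a feedback loop.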

A recurrent neural network (RNN) is a class of artificial neural networks where connections between nodes form a directed or undirected graph along a temporal sequence. This allows it to exhibit temporal dynamic behavior. Derived from feedforward neural networks, RNNs can use their internal state (memory) to process variable-length sequences of inputs. This makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition. Recurrent neural networks are theoretically Turing complete and can run arbitrary programs to process arbitrary sequences of inputs.
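A minimal sketch of how an internal state lets the same network handle sequences of any length. It uses a single recurrent unit with a `tanh` nonlinearity; the weights `w_in` and `w_rec` are arbitrary illustrative values, not trained ones.

```python
import math


def rnn_final_state(sequence, w_in=0.5, w_rec=0.9, h0=0.0):
    """Run a one-unit recurrent network over a sequence of any length."""
    h = h0
    for x in sequence:
        # The new state depends on the current input and the previous state.
        h = math.tanh(w_in * x + w_rec * h)
    return h


short_run = rnn_final_state([1.0])
long_run = rnn_final_state([1.0, 0.0, 0.0, 1.0, 1.0])
```

Because the state carries information forward, the order of inputs matters: `rnn_final_state([1.0, 0.0])` and `rnn_final_state([0.0, 1.0])` end in different states.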

A neural network is a network or circuit of biological neurons, or, in a modern sense, an artificial neural network, composed of artificial neurons or nodes. Thus, a neural network is either a biological neural network, made up of biological neurons, or an artificial neural network, used for solving artificial intelligence (AI) problems. The connections of the biological neuron are modeled in artificial neural networks as weights between nodes. A positive weight reflects an excitatory connection, while a negative weight reflects an inhibitory connection. All inputs are modified by a weight and summed. This activity is referred to as a linear combination. Finally, an activation function controls the amplitude of the output. For example, an acceptable range of output is usually between 0 and 1, or between −1 and 1.
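The weighted sum and activation described above can be sketched for a single artificial neuron. The `bias` term and the choice of sigmoid and `tanh` as activation functions are assumptions made for illustration; the text only states the output ranges.

```python
import math


def neuron_output(inputs, weights, bias=0.0, activation="sigmoid"):
    """Weighted sum of the inputs followed by an activation function."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Linear combination: each input is scaled by its weight, then summed.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    if activation == "sigmoid":
        return 1.0 / (1.0 + math.exp(-z))  # output lies between 0 and 1
    if activation == "tanh":
        return math.tanh(z)  # output lies between -1 and 1
    raise ValueError("unknown activation: " + activation)


# One excitatory (positive) and one inhibitory (negative) connection.
balanced = neuron_output([1.0, 1.0], [0.5, -0.5])
excited = neuron_output([1.0, 1.0], [2.0, -0.5], activation="tanh")
```

In the `balanced` case the excitatory and inhibitory contributions cancel, so the weighted sum is zero and the sigmoid sits at the midpoint of its range.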

Second-order cybernetics, also known as the cybernetics of cybernetics, is the recursive application of cybernetics to itself and the reflexive practice of cybernetics according to such a critique. It is cybernetics where "the role of the observer is appreciated and acknowledged rather than disguised, as had become traditional in western science". Second-order cybernetics was developed between the late 1960s and mid-1970s by Heinz von Foerster and others, with key inspiration coming from Margaret Mead. Von Foerster referred to it as "the control of control and the communication of communication" and differentiated first-order cybernetics as "the cybernetics of observed systems" and second-order cybernetics as "the cybernetics of observing systems". It is closely allied to radical constructivism, which was developed around the same time by Ernst von Glasersfeld. While it is sometimes considered a break from the earlier concerns of cybernetics, there is much continuity with previous work and it can be thought of as a distinct tradition within cybernetics, with origins in issues evident during the Macy conferences in which cybernetics was initially developed. Its concerns include autonomy, epistemology, ethics, language, self-consistency, self-referentiality, and self-organizing capabilities of complex systems. It has been characterised as cybernetics where "circularity is taken seriously".

Quantum neural networks are computational neural network models which are based on the principles of quantum mechanics. The first ideas on quantum neural computation were published independently in 1995 by Subhash Kak and Ron Chrisley, engaging with the theory of quantum mind, which posits that quantum effects play a role in cognitive function. However, typical research in quantum neural networks involves combining classical artificial neural network models with the advantages of quantum information in order to develop more efficient algorithms. One important motivation for these investigations is the difficulty of training classical neural networks, especially in big data applications. The hope is that features of quantum computing such as quantum parallelism or the effects of interference and entanglement can be used as resources. Since the technological implementation of a quantum computer is still at an early stage, such quantum neural network models are mostly theoretical proposals that await their full implementation in physical experiments.

The Macy Conferences were a set of meetings of scholars from various disciplines held in New York under the direction of Frank Fremont-Smith at the Josiah Macy Jr. Foundation starting in 1941 and ending in 1960. The explicit aim of the conferences was to promote meaningful communication across scientific disciplines, and restore unity to science. There were different sets of conferences designed to cover specific topics, for a total of 160 conferences over the 19 years this program was active; the phrase "Macy conference" does not apply only to those on cybernetics, although it is sometimes used that way informally by those familiar only with that set of events. Disciplinary isolation within medicine was viewed as particularly problematic by the Macy Foundation, and given that their mandate was to aid medical research, they decided to do something about it. Thus other topics covered in different sets of conferences included: aging, adrenal cortex, biological antioxidants, blood clotting, blood pressure, connective tissues, infancy and childhood, liver injury, metabolic interrelations, nerve impulse, problems of consciousness, and renal function.

An echo state network (ESN) is a type of reservoir computer that uses a recurrent neural network with a sparsely connected hidden layer. The connectivity and weights of hidden neurons are fixed and randomly assigned. The weights of output neurons can be learned so that the network can produce or reproduce specific temporal patterns. The main interest of this network is that although its behaviour is non-linear, the only weights that are modified during training are those of the synapses that connect the hidden neurons to the output neurons. Thus, the error function is quadratic with respect to the parameter vector, and setting its derivative to zero yields a linear system.
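The last point can be written out under assumed notation, none of which appears in the summary itself: let $X$ be the matrix whose rows are the hidden-layer states collected over the training sequence, $Y$ the matrix of corresponding target outputs, and $W_{\text{out}}$ the trainable output weights. Because the hidden weights are fixed, $X$ does not depend on $W_{\text{out}}$, and a squared-error objective gives:

$$
E(W_{\text{out}}) = \lVert X W_{\text{out}} - Y \rVert_F^2,
\qquad
\nabla_{W_{\text{out}}} E = 2\, X^\top \left( X W_{\text{out}} - Y \right) = 0
\;\Longrightarrow\;
X^\top X \, W_{\text{out}} = X^\top Y .
$$

The final equation is linear in $W_{\text{out}}$, so under these assumptions training reduces to an ordinary least-squares problem rather than an iterative gradient search.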

Alexey Ivakhnenko was a Soviet and Ukrainian mathematician most famous for developing the Group Method of Data Handling (GMDH), a method of inductive statistical learning, for which he is sometimes referred to as the "Father of Deep Learning".

Group method of data handling (GMDH) is a family of inductive algorithms for computer-based mathematical modeling of multi-parametric datasets that features fully automatic structural and parametric optimization of models.

In science, computing, and engineering, a black box is a system which can be viewed in terms of its inputs and outputs, without any knowledge of its internal workings. Its implementation is "opaque" (black). The term can be used to refer to many inner workings, such as those of a transistor, an engine, an algorithm, the human brain, or an institution or government.

Cybernetics is a wide-ranging field concerned with regulatory and purposive systems. The core concept of cybernetics is circular causality or feedback, where the observed outcomes of actions are taken as inputs for further action in ways that support the pursuit and maintenance of particular conditions, or their disruption. Cybernetics is named after an example of circular causality, that of steering a ship, where the helmsperson maintains a steady course in a changing environment by adjusting their steering in continual response to the effect it is observed as having. Other examples of circular causal feedback include: technological devices such as thermostats; biological examples such as the coordination of volitional movement through the nervous system; and processes of social interaction such as conversation. Cybernetics is concerned with feedback processes such as steering however they are embodied, including in ecological, technological, biological, cognitive, and social systems, and in the context of practical activities such as designing, learning, managing, conversation, and the practice of cybernetics itself. Cybernetics' transdisciplinary and "antidisciplinary" character has meant that it intersects with a number of other fields, leading to it having both wide influence and diverse interpretations.
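The thermostat example can be sketched as a small simulation in which each observed temperature feeds back into the next heating decision. The room model is hypothetical: the `heat` and `loss` rates, the switching `band`, and the function names are all illustrative assumptions.

```python
def thermostat_step(temperature, setpoint, heater_on, band=0.5):
    """Choose the heater state from the observed temperature."""
    if temperature < setpoint - band:
        return True
    if temperature > setpoint + band:
        return False
    return heater_on  # inside the band, keep the previous state


def simulate(setpoint=20.0, start=15.0, steps=50, heat=0.8, loss=0.3):
    """Hypothetical room: loses `loss` per step, gains `heat` when heated."""
    temperature, heater_on = start, False
    history = []
    for _ in range(steps):
        # Feedback: the outcome of earlier actions drives the next action.
        heater_on = thermostat_step(temperature, setpoint, heater_on)
        if heater_on:
            temperature += heat
        temperature -= loss
        history.append(temperature)
    return history


history = simulate()
```

After the initial warm-up the temperature keeps cycling in a narrow range around the setpoint, which is the "maintenance of particular conditions" that the circular loop provides.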

Feed forward in management theory is an application of the cybernetic concept of feedforward first articulated by I. A. Richards in 1951. It reflects the impact of management cybernetics on the general area of management studies.

Feedforward is the provision of context for what one wants to communicate, prior to that communication.

There are many types of artificial neural networks (ANN).

Autonomous agency theory (AAT) is a viable system theory (VST) which models autonomous social complex adaptive systems. It can be used to model the relationship between an agency and its environment(s), and these may include other interactive agencies. The nature of that interaction is determined by both the agency's external and internal attributes and constraints. Internal attributes may include immanent dynamic "self" processes that drive agency change.
