In mathematics, a dynamical system is a system in which a function describes the time dependence of a point in an ambient space, such as in a parametric curve. Examples include the mathematical models that describe the swinging of a clock pendulum, the flow of water in a pipe, the random motion of particles in the air, and the number of fish each springtime in a lake. The most general definition unifies several concepts in mathematics such as ordinary differential equations and ergodic theory by allowing different choices of the space and how time is measured. Time can be measured by integers, by real or complex numbers or can be a more general algebraic object, losing the memory of its physical origin, and the space may be a manifold or simply a set, without the need of a smooth space-time structure defined on it.
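A minimal sketch of the discrete-time case, where time is measured by integers: the snippet iterates a map and records the orbit. The logistic map $x_{n+1} = r\,x_n(1 - x_n)$ is used here only as an assumed toy model of a normalized population counted once per season. The names `iterate_map` and `logistic` are illustrative.

```python
from typing import Callable, List


def iterate_map(f: Callable[[float], float], x0: float, n: int) -> List[float]:
    """Return the orbit x0, f(x0), f(f(x0)), ... (n + 1 points in total)."""
    orbit = [x0]
    for _ in range(n):
        orbit.append(f(orbit[-1]))
    return orbit


def logistic(r: float) -> Callable[[float], float]:
    """Hypothetical toy model: normalized population in the next season."""
    return lambda x: r * x * (1.0 - x)


if __name__ == "__main__":
    orbit = iterate_map(logistic(2.5), 0.1, 50)
    print(orbit[-1])  # approaches the fixed point 1 - 1/r = 0.6
```

Here the "space" is the interval $[0, 1]$ and the "function describing time dependence" is simply repeated application of one map.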

In mathematics, a Lie group is a group that is also a differentiable manifold, such that group multiplication and taking inverses are both differentiable.
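A standard small example is the rotation group SO(2): its elements are the matrices $R(\theta)$, parameterized smoothly by an angle, with $R(\alpha)R(\beta) = R(\alpha + \beta)$ and $R(\theta)^{-1} = R(-\theta)$, so multiplication and inversion are smooth functions of the parameter. The sketch below only checks these identities numerically; the helper names are illustrative.

```python
import math
from typing import Tuple

Mat2 = Tuple[Tuple[float, float], Tuple[float, float]]


def rot(theta: float) -> Mat2:
    """Rotation matrix R(theta); every entry depends smoothly on theta."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s), (s, c))


def matmul(A: Mat2, B: Mat2) -> Mat2:
    """Group multiplication in SO(2) is ordinary 2x2 matrix multiplication."""
    return tuple(
        tuple(sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def close(A: Mat2, B: Mat2, tol: float = 1e-12) -> bool:
    """Entrywise comparison up to floating-point tolerance."""
    return all(abs(A[i][j] - B[i][j]) < tol for i in range(2) for j in range(2))


if __name__ == "__main__":
    # Multiplication corresponds to adding angles, inversion to negating them.
    print(close(matmul(rot(0.3), rot(1.1)), rot(1.4)))
    print(close(matmul(rot(0.7), rot(-0.7)), rot(0.0)))
```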

In mathematics and physics, Laplace's equation is a second-order partial differential equation named after Pierre-Simon Laplace, who first studied its properties. This is often written as $\nabla^2 f = 0$ or $\Delta f = 0$, where $\Delta = \nabla \cdot \nabla = \nabla^2$ is the Laplace operator, $\nabla \cdot$ is the divergence operator, $\nabla$ is the gradient operator, and $f(x, y, z)$ is a twice-differentiable real-valued function. The Laplace operator therefore maps a scalar function to another scalar function.
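A minimal numerical sketch, assuming the unit square with prescribed (Dirichlet) boundary values and a uniform grid: replacing $\Delta f$ by the five-point finite-difference stencil turns Laplace's equation into the condition that each interior value equals the average of its four neighbors. Jacobi iteration, chosen here for clarity rather than speed, enforces that condition repeatedly. The function name and parameters are illustrative.

```python
from typing import Callable, List

Grid = List[List[float]]


def solve_laplace_jacobi(
    g: Callable[[float, float], float],
    n: int = 10,
    tol: float = 1e-10,
    max_iter: int = 10_000,
) -> Grid:
    """Approximate f on the unit square with f = g on the boundary.

    u[i][j] approximates f(i*h, j*h) with h = 1/n; interior values start at 0.
    """
    h = 1.0 / n
    u = [[0.0] * (n + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        for j in range(n + 1):
            if i in (0, n) or j in (0, n):
                u[i][j] = g(i * h, j * h)  # boundary data

    for _ in range(max_iter):
        new = [row[:] for row in u]
        change = 0.0
        for i in range(1, n):
            for j in range(1, n):
                # Five-point stencil: average of the four neighbors from the previous sweep.
                new[i][j] = 0.25 * (u[i + 1][j] + u[i - 1][j] + u[i][j + 1] + u[i][j - 1])
                change = max(change, abs(new[i][j] - u[i][j]))
        u = new
        if change < tol:  # stop once a sweep barely changes anything
            break
    return u
```

For example, $g(x, y) = x^2 - y^2$ is harmonic and the five-point stencil reproduces it exactly, so the iterate converges to it at the grid points. Gauss–Seidel, SOR, or multigrid methods converge much faster in practice.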

In mathematics, a partial differential equation (PDE) is an equation that imposes relations between the various partial derivatives of a multivariable function.

In mathematics and physics, the heat equation is a certain partial differential equation. Solutions of the heat equation are sometimes known as caloric functions. The theory of the heat equation was first developed by Joseph Fourier in 1822 for the purpose of modeling how a quantity such as heat diffuses through a given region.
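A minimal numerical sketch, assuming the one-dimensional form $u_t = \alpha\,u_{xx}$ with fixed values at both ends: the explicit forward-time, centered-space (FTCS) scheme replaces $u_{xx}$ by a second difference and steps forward in time. It is stable only when $r = \alpha\,\Delta t / \Delta x^2 \le 1/2$, which the function checks. The name `heat_ftcs` is illustrative.

```python
import math
from typing import List


def heat_ftcs(u0: List[float], alpha: float, dx: float, dt: float, steps: int) -> List[float]:
    """Advance u_t = alpha * u_xx with the explicit FTCS scheme.

    The first and last entries are held fixed (Dirichlet boundary values).
    """
    r = alpha * dt / dx**2
    if r > 0.5:
        raise ValueError(f"unstable step: alpha*dt/dx^2 = {r:.3f} > 0.5")
    u = list(u0)
    for _ in range(steps):
        # Each new interior value is a weighted average of old neighboring values.
        interior = [u[i] + r * (u[i + 1] - 2 * u[i] + u[i - 1]) for i in range(1, len(u) - 1)]
        u = [u[0]] + interior + [u[-1]]
    return u


if __name__ == "__main__":
    n = 50
    xs = [i / n for i in range(n + 1)]
    u = heat_ftcs([math.sin(math.pi * x) for x in xs], alpha=1.0, dx=1 / n, dt=1e-4, steps=1000)
    # Compare with the exact decaying mode exp(-pi^2 t) sin(pi x) at t = 0.1.
    print(u[n // 2], math.exp(-math.pi**2 * 0.1))
```

With initial data $\sin(\pi x)$ on $[0, 1]$ and zero boundary values, the exact solution is $e^{-\pi^2 \alpha t}\sin(\pi x)$: the initial profile keeps its shape and decays, which is the diffusion behavior Fourier set out to model.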

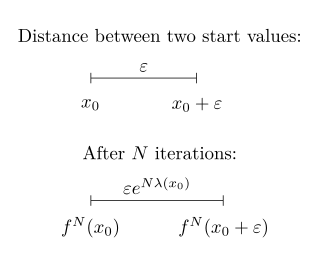

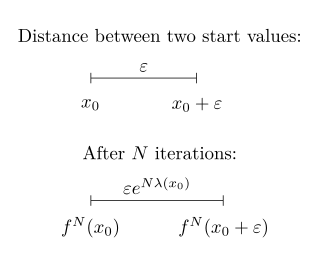

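In mathematics, the Lyapunov exponent or Lyapunov characteristic exponent of a dynamical system is a quantity that characterizes the rate of separation of infinitesimally close trajectories. Quantitatively, two trajectories in phase space with initial separation vector $\delta\mathbf{Z}_0$ diverge (provided that the divergence can be treated within the linearized approximation) at a rate given by $|\delta\mathbf{Z}(t)| \approx e^{\lambda t}\,|\delta\mathbf{Z}_0|$, where $\lambda$ is the Lyapunov exponent.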
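For a one-dimensional map $x_{k+1} = f(x_k)$, the separation rate reduces to the orbit average $\lambda = \lim_{n\to\infty} \frac{1}{n}\sum_{k=0}^{n-1} \ln|f'(x_k)|$. The sketch below estimates it for the logistic map $f(x) = r\,x(1 - x)$, which is an assumed test case, not something the text above prescribes; the starting point, orbit length, and transient length are arbitrary choices.

```python
import math


def logistic_lyapunov(r: float, x0: float = 0.1, n: int = 100_000, transient: int = 1_000) -> float:
    """Estimate the Lyapunov exponent of x -> r*x*(1-x) as the orbit average of ln|f'(x)|."""
    x = x0
    for _ in range(transient):  # discard the transient so the orbit settles on the attractor
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n):
        total += math.log(abs(r * (1.0 - 2.0 * x)))  # f'(x) = r(1 - 2x)
        x = r * x * (1.0 - x)
    return total / n


if __name__ == "__main__":
    print(logistic_lyapunov(2.5))  # stable fixed point: negative exponent
    print(logistic_lyapunov(4.0))  # chaotic regime: positive exponent
```

At $r = 2.5$ the orbit settles on the fixed point $x = 0.6$, where $|f'| = 1/2$, so $\lambda = \ln(1/2) < 0$ and nearby orbits converge. At $r = 4$ the exact value is $\ln 2 > 0$, and a floating-point estimate only approximates it.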

Various types of stability may be discussed for the solutions of differential equations or difference equations describing dynamical systems. The most important type is that concerning the stability of solutions near a point of equilibrium. This may be discussed by the theory of Aleksandr Lyapunov. In simple terms, if the solutions that start out near an equilibrium point $x_e$ stay near $x_e$ forever, then $x_e$ is Lyapunov stable. More strongly, if $x_e$ is Lyapunov stable and all solutions that start out near $x_e$ converge to $x_e$, then $x_e$ is said to be asymptotically stable. The notion of exponential stability guarantees a minimal rate of decay, i.e., an estimate of how quickly the solutions converge. The idea of Lyapunov stability can be extended to infinite-dimensional manifolds, where it is known as structural stability, which concerns the behavior of different but "nearby" solutions to differential equations. Input-to-state stability (ISS) applies Lyapunov notions to systems with inputs.
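For the linear system $\dot{x} = Ax$ with equilibrium $x_e = 0$, asymptotic stability holds exactly when every eigenvalue of $A$ has negative real part. For a real $2\times 2$ matrix this reduces to $\operatorname{tr}A < 0$ and $\det A > 0$ (the Routh–Hurwitz conditions for $\lambda^2 - (\operatorname{tr}A)\lambda + \det A$). The sketch below restricts itself to that $2\times 2$ linear case; the function name is illustrative.

```python
def is_asymptotically_stable_2x2(a: float, b: float, c: float, d: float) -> bool:
    """Return True if the origin of x' = A x, A = [[a, b], [c, d]], is asymptotically stable.

    Both eigenvalues of a real 2x2 matrix have negative real part exactly
    when trace(A) < 0 and det(A) > 0.
    """
    trace = a + d
    det = a * d - b * c
    return trace < 0 and det > 0


if __name__ == "__main__":
    print(is_asymptotically_stable_2x2(0, 1, -1, -1))  # damped oscillator x'' + x' + x = 0
    print(is_asymptotically_stable_2x2(0, 1, -1, 0))   # undamped oscillator
    print(is_asymptotically_stable_2x2(1, 0, 0, -1))   # saddle
```

The undamped oscillator illustrates the distinction drawn above: its orbits are circles, so the origin is Lyapunov stable, but solutions do not converge to it, so it is not asymptotically stable and the check returns `False`.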

In theoretical physics, the (one-dimensional) nonlinear Schrödinger equation (NLSE) is a nonlinear variation of the Schrödinger equation. It is a classical field equation whose principal applications are to the propagation of light in nonlinear optical fibers and planar waveguides and to Bose–Einstein condensates confined to highly anisotropic, cigar-shaped traps, in the mean-field regime. Additionally, the equation appears in the studies of small-amplitude gravity waves on the surface of deep inviscid (zero-viscosity) water; the Langmuir waves in hot plasmas; the propagation of plane-diffracted wave beams in the focusing regions of the ionosphere; the propagation of Davydov's alpha-helix solitons, which are responsible for energy transport along molecular chains; and many others. More generally, the NLSE appears as one of the universal equations that describe the evolution of slowly varying packets of quasi-monochromatic waves in weakly nonlinear media that have dispersion. Unlike the linear Schrödinger equation, the NLSE never describes the time evolution of a quantum state. The 1D NLSE is an example of an integrable model.
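For reference, one common normalization of the one-dimensional NLSE is shown below; scalings and sign conventions differ between sources. Here $\psi(x, t)$ is a complex field and $\kappa$ is a real constant. The cubic term $\kappa|\psi|^2\psi$ is what distinguishes the equation from the linear Schrödinger equation.

$$
i\,\partial_t \psi = -\tfrac{1}{2}\,\partial_x^2 \psi + \kappa\,|\psi|^2\,\psi
$$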

In mathematics, Mathieu functions, sometimes called angular Mathieu functions, are solutions of Mathieu's differential equation $\dfrac{d^2 y}{dx^2} + (a - 2q\cos 2x)\,y = 0$, where $a$ and $q$ are real-valued parameters.

Nonlinear control theory is the area of control theory which deals with systems that are nonlinear, time-variant, or both. Control theory is an interdisciplinary branch of engineering and mathematics that is concerned with the behavior of dynamical systems with inputs, and how to modify the output by changes in the input using feedback, feedforward, or signal filtering. The system to be controlled is called the "plant". One way to make the output of a system follow a desired reference signal is to compare the output of the plant to the desired output, and provide feedback to the plant to modify the output to bring it closer to the desired output.
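The feedback idea in the last sentence can be sketched with proportional control: the controller measures the error between reference and output and feeds back an input proportional to it. The plant $\dot{x} = -x^3 + u$ is a hypothetical nonlinear example, simulated with forward Euler. The gain values and the function name are illustrative assumptions.

```python
def simulate_p_control(kp: float, reference: float = 1.0, x0: float = 0.0,
                       dt: float = 0.01, steps: int = 2_000) -> float:
    """Forward-Euler simulation of the hypothetical plant x' = -x**3 + u
    under proportional feedback u = kp * (reference - x); returns the final x."""
    x = x0
    for _ in range(steps):
        error = reference - x      # compare the plant output with the desired output
        u = kp * error             # feedback law
        x += dt * (-x**3 + u)      # nonlinear plant dynamics
    return x


if __name__ == "__main__":
    for kp in (5.0, 20.0, 50.0):
        print(kp, simulate_p_control(kp))
```

The output settles where $-x^3 + k_p(r - x) = 0$, so with purely proportional feedback a steady-state error remains. It shrinks as the gain grows, which is one reason integral action is often added in practice.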

In mathematics, a first-order partial differential equation is a partial differential equation that involves only first derivatives of the unknown function of $n$ variables. The equation takes the form $F(x_1, \ldots, x_n, u, u_{x_1}, \ldots, u_{x_n}) = 0$.
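A simple instance, chosen here as an illustration, is the linear advection equation $u_t + c\,u_x = 0$ in the two variables $(x, t)$. Along the characteristic lines $x - ct = \text{const}$ the solution is constant, so $u(x, t) = u_0(x - ct)$ for initial data $u_0$. The sketch builds that solution and checks with central differences that it satisfies the PDE; both function names are illustrative.

```python
import math
from typing import Callable


def advection_solution(u0: Callable[[float], float], c: float) -> Callable[[float, float], float]:
    """Solution of u_t + c u_x = 0 with u(x, 0) = u0(x): u is constant along x - c t = const."""
    return lambda x, t: u0(x - c * t)


def pde_residual(u: Callable[[float, float], float], c: float,
                 x: float, t: float, h: float = 1e-4) -> float:
    """Central-difference estimate of u_t + c u_x at (x, t); close to 0 for a true solution."""
    u_t = (u(x, t + h) - u(x, t - h)) / (2 * h)
    u_x = (u(x + h, t) - u(x - h, t)) / (2 * h)
    return u_t + c * u_x


if __name__ == "__main__":
    u = advection_solution(math.sin, c=2.0)
    print(pde_residual(u, 2.0, 0.3, 0.7))
```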

In mathematics, stability theory addresses the stability of solutions of differential equations and of trajectories of dynamical systems under small perturbations of initial conditions. The heat equation, for example, is a stable partial differential equation because small perturbations of initial data lead to small variations in temperature at a later time as a result of the maximum principle. In partial differential equations one may measure the distances between functions using Lp norms or the sup norm, while in differential geometry one may measure the distance between spaces using the Gromov–Hausdorff distance.
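The maximum-principle argument can be written out as follows. Assume $u$ and $v$ solve $u_t = \alpha\,\Delta u$ on a bounded domain and share the same boundary values. Their difference $w = u - v$ then solves the heat equation with zero boundary data. Applying the maximum principle to $w$ and to $-w$ gives a sup-norm bound, so a perturbation of size $\varepsilon$ in the initial data never grows beyond $\varepsilon$:

$$
\sup_x |u(x,t) - v(x,t)| \;\le\; \sup_x |u(x,0) - v(x,0)| \qquad \text{for all } t \ge 0 .
$$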

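Numerical continuation is a method of computing approximate solutions of a system of parameterized nonlinear equations, $F(\mathbf{u}, \lambda) = 0$, where the parameter $\lambda$ is usually a real scalar and the solution $\mathbf{u}$ an $n$-vector.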
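The simplest variant, natural-parameter continuation, can be sketched as follows. Step $\lambda$ through a grid of values, and at each step use the previous solution as the initial guess for a Newton corrector at the new $\lambda$. This scalar sketch, the example $F(u, \lambda) = u^2 - \lambda$, and all function names are illustrative assumptions.

```python
from typing import Callable, List, Tuple


def newton(f: Callable[[float], float], df: Callable[[float], float], u: float,
           tol: float = 1e-12, max_iter: int = 50) -> float:
    """Scalar Newton iteration, used here as the corrector."""
    for _ in range(max_iter):
        step = f(u) / df(u)
        u -= step
        if abs(step) < tol:
            return u
    raise RuntimeError("Newton corrector did not converge")


def natural_continuation(F: Callable[[float, float], float],
                         dF_du: Callable[[float, float], float],
                         u0: float, lam0: float, lam1: float,
                         n_steps: int) -> List[Tuple[float, float]]:
    """Trace a solution branch of F(u, lam) = 0 from lam0 to lam1 in n_steps equal steps."""
    branch = []
    u = u0
    for k in range(n_steps + 1):
        lam = lam0 + (lam1 - lam0) * k / n_steps
        # Predictor: the previous solution. Corrector: Newton at the new lambda.
        u = newton(lambda v: F(v, lam), lambda v: dF_du(v, lam), u)
        branch.append((lam, u))
    return branch


if __name__ == "__main__":
    # Follow the positive branch u = sqrt(lam) of u^2 - lam = 0 from lam = 1 to lam = 4.
    for lam, u in natural_continuation(lambda u, lam: u * u - lam,
                                       lambda u, lam: 2 * u, 1.0, 1.0, 4.0, 6):
        print(lam, u)
```

Natural-parameter continuation fails at folds where $\partial F/\partial u$ becomes singular. Pseudo-arclength continuation, which parameterizes the branch by arclength instead of $\lambda$, is the usual remedy.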

The Mathieu equation is a linear second-order differential equation with periodic coefficients. The French mathematician E. Léonard Mathieu first introduced this family of differential equations, nowadays termed Mathieu equations, in his “Memoir on vibrations of an elliptic membrane” in 1868. "Mathieu functions are applicable to a wide variety of physical phenomena, e.g., diffraction, amplitude distortion, inverted pendulum, stability of a floating body, radio frequency quadrupole, and vibration in a medium with modulated density".
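Because the coefficient $a - 2q\cos 2x$ has period $\pi$, the stability of solutions (as in the inverted-pendulum application) can be read off Floquet theory. Integrate two independent solutions over one period to get the monodromy matrix $M$. Solutions stay bounded when $|\operatorname{tr} M| < 2$ and grow when $|\operatorname{tr} M| > 2$. The sketch below uses a hand-written fourth-order Runge–Kutta integrator; the step count and function names are illustrative choices.

```python
import math
from typing import List, Tuple


def mathieu_monodromy(a: float, q: float, steps: int = 2000) -> List[List[float]]:
    """Monodromy matrix over one period [0, pi] of y'' + (a - 2 q cos 2x) y = 0."""

    def rhs(x: float, y: float, v: float) -> Tuple[float, float]:
        # First-order form: y' = v, v' = -(a - 2 q cos 2x) y
        return v, -(a - 2.0 * q * math.cos(2.0 * x)) * y

    def integrate(y: float, v: float) -> Tuple[float, float]:
        h = math.pi / steps
        x = 0.0
        for _ in range(steps):  # classical RK4 step
            k1y, k1v = rhs(x, y, v)
            k2y, k2v = rhs(x + h / 2, y + h / 2 * k1y, v + h / 2 * k1v)
            k3y, k3v = rhs(x + h / 2, y + h / 2 * k2y, v + h / 2 * k2v)
            k4y, k4v = rhs(x + h, y + h * k3y, v + h * k3v)
            y += h / 6 * (k1y + 2 * k2y + 2 * k3y + k4y)
            v += h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
            x += h
        return y, v

    y1, v1 = integrate(1.0, 0.0)  # solution with y(0) = 1, y'(0) = 0
    y2, v2 = integrate(0.0, 1.0)  # solution with y(0) = 0, y'(0) = 1
    return [[y1, y2], [v1, v2]]


def is_stable(a: float, q: float) -> bool:
    """Floquet criterion: bounded solutions when |trace M| < 2."""
    M = mathieu_monodromy(a, q)
    return abs(M[0][0] + M[1][1]) < 2.0


if __name__ == "__main__":
    print(is_stable(-0.1, 0.0))  # no forcing, a < 0: exponential growth
    print(is_stable(-0.1, 0.5))  # periodic forcing can stabilize it
```

With $q = 0$ and $a = 1/4$ the solutions are $\cos(x/2)$ and $2\sin(x/2)$, so $\operatorname{tr} M = 0$ and $\det M = 1$, which gives a quick correctness check. The pair $(a, q) = (-0.1, 0.5)$ lies in the stable region just left of $a = 0$. There, periodic forcing stabilizes a configuration that is unstable without it, which is the mechanism behind the inverted-pendulum application.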

In mathematics, Liouville's formula, also known as the Abel–Jacobi–Liouville identity, is an equation that expresses the determinant of a square-matrix solution of a first-order system of homogeneous linear differential equations in terms of the sum of the diagonal coefficients of the system. The formula is named after the French mathematician Joseph Liouville. Jacobi's formula provides another representation of the same mathematical relationship.
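Concretely, let $\Phi$ be an $n \times n$ matrix solution of $\Phi'(x) = A(x)\,\Phi(x)$ with continuous coefficient matrix $A$. The formula then reads:

$$
\det \Phi(x) = \det \Phi(x_0)\,\exp\!\left(\int_{x_0}^{x} \operatorname{tr} A(\xi)\,d\xi\right),
$$

where $\operatorname{tr} A$ is the sum of the diagonal entries of $A$. For example, Mathieu's equation written as a first-order system has coefficient matrix $\begin{pmatrix} 0 & 1 \\ -(a - 2q\cos 2x) & 0 \end{pmatrix}$ with zero trace. The Wronskian of any two of its solutions is therefore constant.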

In mathematics, the spectral theory of ordinary differential equations is the part of spectral theory concerned with the determination of the spectrum and eigenfunction expansion associated with a linear ordinary differential equation. In his dissertation, Hermann Weyl generalized the classical Sturm–Liouville theory on a finite closed interval to second order differential operators with singularities at the endpoints of the interval, possibly semi-infinite or infinite. Unlike the classical case, the spectrum may no longer consist of just a countable set of eigenvalues, but may also contain a continuous part. In this case the eigenfunction expansion involves an integral over the continuous part with respect to a spectral measure, given by the Titchmarsh–Kodaira formula. The theory was put in its final simplified form for singular differential equations of even degree by Kodaira and others, using von Neumann's spectral theorem. It has had important applications in quantum mechanics, operator theory and harmonic analysis on semisimple Lie groups.

In control theory, the state-transition matrix is a matrix whose product with the state vector $x$ at an initial time $t_0$ gives $x$ at a later time $t$. The state-transition matrix can be used to obtain the general solution of linear dynamical systems.
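For the time-invariant case $\dot{x} = Ax$ with constant $A$, the state-transition matrix is the matrix exponential $\Phi(t, t_0) = e^{A(t - t_0)}$, so $x(t) = \Phi(t, t_0)\,x(t_0)$. The sketch below approximates it with a truncated Taylor series, which is adequate only when the entries of $A(t - t_0)$ are modest. Production code typically uses scaling and squaring (for example SciPy's `scipy.linalg.expm`). Helper names are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]


def mat_mul(A: Matrix, B: Matrix) -> Matrix:
    """Plain matrix product of an n x m and an m x p matrix."""
    n, m, p = len(A), len(B), len(B[0])
    return [[sum(A[i][k] * B[k][j] for k in range(m)) for j in range(p)] for i in range(n)]


def state_transition_lti(A: Matrix, dt: float, terms: int = 30) -> Matrix:
    """Phi(t, t0) = exp(A * dt), dt = t - t0, via the truncated series sum_k (A dt)^k / k!."""
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]  # identity (k = 0 term)
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = mat_mul(term, A)
        term = [[x * dt / k for x in row] for row in term]  # now term = (A dt)^k / k!
        result = [[r + s for r, s in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result


if __name__ == "__main__":
    # Harmonic oscillator p' = v, v' = -p: Phi(t, 0) is a rotation by angle t.
    A = [[0.0, 1.0], [-1.0, 0.0]]
    Phi = state_transition_lti(A, 1.0)
    print(Phi)
    print([[math.cos(1.0), math.sin(1.0)], [-math.sin(1.0), math.cos(1.0)]])
```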

In mathematical physics and the theory of partial differential equations, the solitary wave solution of the form $u(x, t) = e^{-i\omega t}\,\phi(x)$ is said to be orbitally stable if any solution with the initial data sufficiently close to $\phi(x)$ forever remains in a given small neighborhood of the trajectory of $e^{-i\omega t}\,\phi(x)$.

Multiple scattering theory (MST) is the mathematical formalism that is used to describe the propagation of a wave through a collection of scatterers. Examples are acoustical waves traveling through porous media, light scattering from water droplets in a cloud, or x-rays scattering from a crystal. A more recent application is to the propagation of quantum matter waves like electrons or neutrons through a solid.

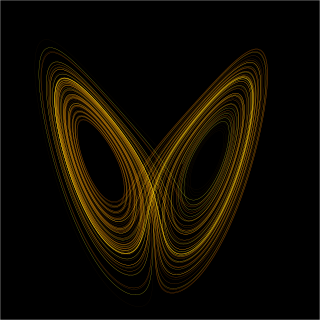

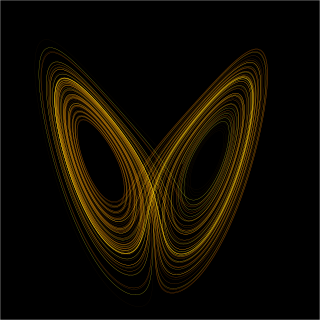

In the mathematics of dynamical systems, the concept of Lyapunov dimension was suggested by Kaplan and Yorke for estimating the Hausdorff dimension of attractors. The concept has since been developed and rigorously justified in a number of papers, and various approaches to the definition of Lyapunov dimension are in use today. Attractors with non-integer Hausdorff dimension are called strange attractors. Since the direct numerical computation of the Hausdorff dimension of attractors is often a problem of high numerical complexity, estimates via the Lyapunov dimension have become widespread. The Lyapunov dimension was named after the Russian mathematician Aleksandr Lyapunov because of its close connection with the Lyapunov exponents.
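The Kaplan–Yorke formula starts from the Lyapunov exponents sorted so that $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_n$. Let $j$ be the largest index with $\lambda_1 + \dots + \lambda_j \ge 0$; then $D_{KY} = j + (\lambda_1 + \dots + \lambda_j)/|\lambda_{j+1}|$. If every partial sum is non-negative, $D_{KY} = n$, and if $\lambda_1 < 0$, $D_{KY} = 0$. The sketch assumes the exponents are already known (for instance estimated numerically). The exponent values in the usage example are illustrative, not measured data.

```python
from typing import Sequence


def kaplan_yorke_dimension(exponents: Sequence[float]) -> float:
    """Kaplan-Yorke (Lyapunov) dimension from a list of Lyapunov exponents."""
    lams = sorted(exponents, reverse=True)
    partial = 0.0  # running sum lambda_1 + ... + lambda_j
    for j, lam in enumerate(lams):
        if partial + lam < 0:
            # j exponents have a non-negative sum; interpolate using lambda_{j+1} = lam.
            return j + partial / abs(lam)
        partial += lam
    return float(len(lams))  # every partial sum is non-negative


if __name__ == "__main__":
    # Illustrative exponents for a hypothetical three-dimensional flow.
    print(kaplan_yorke_dimension([0.9, 0.0, -14.5]))  # non-integer value between 2 and 3
```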