In mathematics, the concept of a measure is a generalization and formalization of geometrical measures (length, area, volume) and other common notions, such as magnitude, mass, and probability of events. These seemingly distinct concepts have many similarities and can often be treated together in a single mathematical context. Measures are foundational in probability theory and integration theory, and can be generalized to assume negative values, as with electrical charge. Far-reaching generalizations of measure are widely used in quantum physics and physics in general.

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is $f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}$. The parameter $\mu$ is the mean or expectation of the distribution, while the parameter $\sigma^2$ is the variance. The standard deviation of the distribution is $\sigma$ (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate.
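As a minimal sketch, the normal density can be evaluated directly from its mean and standard deviation. The function name `normal_pdf` and its default arguments are illustrative choices, not part of any particular library.

```python
import math


def normal_pdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Density of a normal distribution with mean mu and standard deviation sigma.

    Implements exp(-(x - mu)^2 / (2 sigma^2)) / sqrt(2 pi sigma^2).
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma  # standardized distance from the mean
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


# The density is symmetric about the mean and peaks there.
peak = normal_pdf(0.0)
left, right = normal_pdf(-1.5), normal_pdf(1.5)
```

Doubling `sigma` halves the peak height, since the total area under the curve must stay equal to 1.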

Fuzzy logic is a form of many-valued logic in which the truth value of variables may be any real number between 0 and 1. It is employed to handle the concept of partial truth, where the truth value may range between completely true and completely false. By contrast, in Boolean logic, the truth values of variables may only be the integer values 0 or 1.

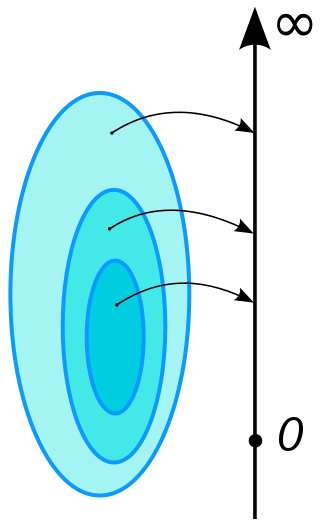

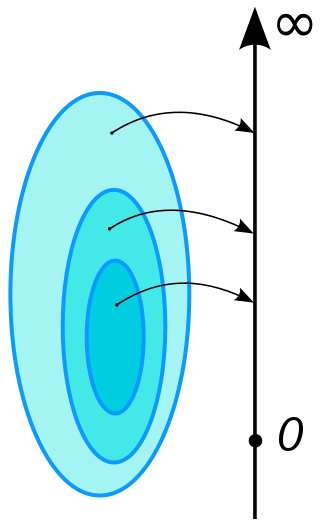

In mathematics, fuzzy sets are sets whose elements have degrees of membership. Fuzzy sets were introduced by Lotfi A. Zadeh in 1965 as an extension of the classical notion of set. At the same time, Salii (1965) defined a more general kind of structure called an "L-relation", which he studied in an abstract algebraic context; fuzzy relations are special cases of L-relations when L is the unit interval [0, 1]. Fuzzy relations are now used throughout fuzzy mathematics, with applications in areas such as linguistics, decision-making, and clustering.

In probability theory and statistics, Student's t distribution is a continuous probability distribution that generalizes the standard normal distribution. Like the latter, it is symmetric around zero and bell-shaped.

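In mathematics, the Kronecker delta is a function of two variables, usually just non-negative integers. The function is 1 if the variables are equal, and 0 otherwise: $\delta_{ij} = \begin{cases} 0 & \text{if } i \neq j, \\ 1 & \text{if } i = j, \end{cases}$ or with use of Iverson brackets: $\delta_{ij} = [i = j]$. For example, $\delta_{12} = 0$ because $1 \neq 2$, whereas $\delta_{33} = 1$ because $3 = 3$.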
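The definition translates almost literally into code. The sketch below uses a hypothetical helper `kronecker_delta` and shows two common uses: building an identity matrix entry by entry, and the "sifting" behaviour where summing against the delta picks out a single term.

```python
def kronecker_delta(i: int, j: int) -> int:
    """Return 1 if the two indices are equal and 0 otherwise."""
    return 1 if i == j else 0


# Entry (i, j) of the n-by-n identity matrix is delta_ij.
n = 3
identity = [[kronecker_delta(i, j) for j in range(n)] for i in range(n)]

# Sifting: the sum over j of delta_ij * a_j equals a_i.
a = [10, 20, 30]
i = 1
sifted = sum(kronecker_delta(i, j) * a[j] for j in range(len(a)))
```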

In mathematics, an indicator function or a characteristic function of a subset of a set is a function that maps elements of the subset to one, and all other elements to zero. That is, if A is a subset of some set X, then $\mathbf{1}_A(x) = 1$ if $x \in A$, and $\mathbf{1}_A(x) = 0$ otherwise, where $\mathbf{1}_A$ is a common notation for the indicator function. Other common notations are $I_A$ and $\chi_A$.
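A minimal sketch of an indicator function for a finite subset follows. The factory name `indicator` and the sample sets are assumptions made for illustration. Summing the indicator over the ambient set counts the elements of the subset.

```python
from typing import Callable, Hashable, Iterable


def indicator(subset: Iterable[Hashable]) -> Callable[[Hashable], int]:
    """Build the indicator function of the given subset."""
    members = frozenset(subset)

    def one_a(x: Hashable) -> int:
        # 1 for elements of the subset, 0 for everything else
        return 1 if x in members else 0

    return one_a


X = range(10)                       # ambient set (illustrative)
A = {x for x in X if x % 2 == 0}    # subset of even numbers
one_A = indicator(A)

values = [one_A(x) for x in X]
size_of_A = sum(values)             # summing the indicator counts the subset
```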

In calculus and real analysis, absolute continuity is a smoothness property of functions that is stronger than continuity and uniform continuity. The notion of absolute continuity allows one to obtain generalizations of the relationship between the two central operations of calculus, differentiation and integration. This relationship is commonly characterized in the framework of Riemann integration, but with absolute continuity it may be formulated in terms of Lebesgue integration. For real-valued functions on the real line, two interrelated notions appear: absolute continuity of functions and absolute continuity of measures. These two notions are generalized in different directions. The usual derivative of a function is related to the Radon–Nikodym derivative, or density, of a measure. We have the following chains of inclusions for functions over a compact subset of the real line: absolutely continuous ⊆ uniformly continuous = continuous and, for a compact interval, continuously differentiable ⊆ Lipschitz continuous ⊆ absolutely continuous ⊆ bounded variation ⊆ differentiable almost everywhere.

In mathematics, the Radon–Nikodym theorem is a result in measure theory that expresses the relationship between two measures defined on the same measurable space. A measure is a set function that assigns a consistent magnitude to the measurable subsets of a measurable space. Examples of a measure include area and volume, where the subsets are sets of points; or the probability of an event, which is a subset of possible outcomes within a wider probability space.

In mathematics, the total variation identifies several slightly different concepts, related to the (local or global) structure of the codomain of a function or a measure. For a real-valued continuous function f, defined on an interval [a, b] ⊂ R, its total variation on the interval of definition is a measure of the one-dimensional arclength of the curve with parametric equation x ↦ f(x), for x ∈ [a, b]. Functions whose total variation is finite are called functions of bounded variation.
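For a function of one variable, the total variation on [a, b] is the supremum, over all partitions of the interval, of the summed absolute increments of f. The sketch below evaluates that sum on a uniform partition; the helper name `variation_on_partition` and the choice of a uniform grid are assumptions. Any single partition gives a lower bound, and for a piecewise monotone function the bound becomes exact once the partition contains the turning points.

```python
import math
from typing import Callable


def variation_on_partition(f: Callable[[float], float],
                           a: float, b: float, n: int) -> float:
    """Sum of |f(x_{k+1}) - f(x_k)| over a uniform partition of [a, b] into n pieces.

    This is a lower bound for the total variation of f on [a, b].
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    xs = [a + (b - a) * k / n for k in range(n + 1)]
    ys = [f(x) for x in xs]
    return sum(abs(y1 - y0) for y0, y1 in zip(ys, ys[1:]))


# sin rises by 1, falls by 2, then rises by 1 on [0, 2*pi]: total variation 4.
coarse = variation_on_partition(math.sin, 0.0, 2.0 * math.pi, 3)
fine = variation_on_partition(math.sin, 0.0, 2.0 * math.pi, 1000)
```

Here the fine grid contains (up to rounding) the turning points of the sine, so `fine` is close to 4, while `coarse` undershoots.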

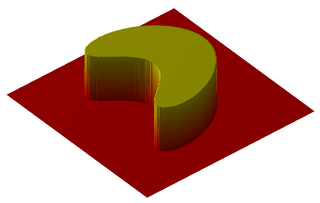

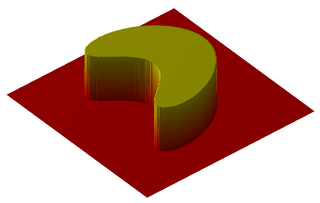

Fuzzy set operations are a generalization of crisp set operations for fuzzy sets. There is in fact more than one possible generalization. The most widely used operations are called standard fuzzy set operations; they comprise: fuzzy complements, fuzzy intersections, and fuzzy unions.
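As an illustration, the standard operations are usually taken to be the complement 1 − μ, the intersection min(μ_A, μ_B), and the union max(μ_A, μ_B) of membership degrees. The sketch below assumes a fuzzy set is stored as a dictionary from elements to degrees in [0, 1], with missing elements treated as degree 0. That representation and the function names are illustrative choices.

```python
from typing import Dict, Hashable

FuzzySet = Dict[Hashable, float]


def fuzzy_complement(a: FuzzySet) -> FuzzySet:
    """Standard complement: degree 1 - mu(x), over the elements listed in a."""
    return {x: 1.0 - m for x, m in a.items()}


def fuzzy_intersection(a: FuzzySet, b: FuzzySet) -> FuzzySet:
    """Standard intersection: pointwise minimum of membership degrees."""
    universe = set(a) | set(b)
    return {x: min(a.get(x, 0.0), b.get(x, 0.0)) for x in universe}


def fuzzy_union(a: FuzzySet, b: FuzzySet) -> FuzzySet:
    """Standard union: pointwise maximum of membership degrees."""
    universe = set(a) | set(b)
    return {x: max(a.get(x, 0.0), b.get(x, 0.0)) for x in universe}


tall = {"ann": 0.25, "bob": 0.75}
fast = {"ann": 0.5, "cy": 1.0}

both = fuzzy_intersection(tall, fast)
either = fuzzy_union(tall, fast)
not_tall = fuzzy_complement(tall)
```

When every degree is 0 or 1, these reduce to the ordinary crisp complement, intersection, and union.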

The noncentral t-distribution generalizes Student's t-distribution using a noncentrality parameter. Whereas the central probability distribution describes how a test statistic t is distributed when the difference tested is null, the noncentral distribution describes how t is distributed when the null is false. This leads to its use in statistics, especially calculating statistical power. The noncentral t-distribution is also known as the singly noncentral t-distribution, and in addition to its primary use in statistical inference, is also used in robust modeling for data.

This article discusses how information theory is related to measure theory.

In mathematics, and specifically in measure theory, equivalence is a notion of two measures being qualitatively similar. Specifically, the two measures agree on which events have measure zero.
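For measures on a finite set, given by non-negative point masses, the condition can be checked directly: a set has measure zero exactly when it avoids every point of positive mass, so two such measures are equivalent when they charge the same points. The sketch below makes that simplifying assumption; the dictionary representation and function names are illustrative.

```python
from typing import Dict, Hashable, Set

Measure = Dict[Hashable, float]  # point -> non-negative mass


def support(measure: Measure) -> Set[Hashable]:
    """Points carrying strictly positive mass."""
    return {x for x, mass in measure.items() if mass > 0}


def is_absolutely_continuous(mu: Measure, nu: Measure) -> bool:
    """True if every nu-null set is mu-null (finite discrete case)."""
    return support(mu) <= support(nu)


def are_equivalent(mu: Measure, nu: Measure) -> bool:
    """True if mu and nu have exactly the same null sets."""
    return is_absolutely_continuous(mu, nu) and is_absolutely_continuous(nu, mu)


mu = {"a": 0.5, "b": 0.5, "c": 0.0}
nu = {"a": 0.9, "b": 0.1}
lam = {"a": 1.0}
```

Here `mu` and `nu` assign very different masses but are equivalent, while `lam` is absolutely continuous with respect to `mu` without being equivalent to it.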

In mathematics, the Wasserstein distance or Kantorovich–Rubinstein metric is a distance function defined between probability distributions on a given metric space $M$. It is named after Leonid Vaseršteĭn.
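A simple special case can be computed by hand. On the real line, for two empirical distributions with the same number of equally weighted samples, the first-order Wasserstein distance is the average absolute difference between the sorted samples, because matching points in sorted order is an optimal transport plan. The sketch below covers only that case; the function name is hypothetical.

```python
from typing import Sequence


def wasserstein_1d(xs: Sequence[float], ys: Sequence[float]) -> float:
    """First-order Wasserstein distance between two equal-size empirical samples on R."""
    if len(xs) != len(ys) or len(xs) == 0:
        raise ValueError("samples must be non-empty and of equal size")
    # Pair the k-th smallest point of xs with the k-th smallest point of ys.
    paired = zip(sorted(xs), sorted(ys))
    return sum(abs(x - y) for x, y in paired) / len(xs)


# Shifting every sample by 5 moves each unit of mass a distance of 5.
shifted = wasserstein_1d([0.0, 1.0, 3.0], [5.0, 6.0, 8.0])
# The order in which samples are listed does not matter.
same = wasserstein_1d([3.0, 1.0, 2.0], [1.0, 2.0, 3.0])
```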

In mathematics, the disintegration theorem is a result in measure theory and probability theory. It rigorously defines the idea of a non-trivial "restriction" of a measure to a measure zero subset of the measure space in question. It is related to the existence of conditional probability measures. In a sense, "disintegration" is the opposite process to the construction of a product measure.

In statistics, the multivariate t-distribution is a multivariate probability distribution. It is a generalization to random vectors of the Student's t-distribution, which is a distribution applicable to univariate random variables. While the case of a random matrix could be treated within this structure, the matrix t-distribution is distinct and makes particular use of the matrix structure.

In probability theory, a random measure is a measure-valued random element. Random measures are used, for example, in the theory of random processes, where they form many important point processes such as Poisson point processes and Cox processes.

Fuzzy classification is the process of grouping elements into fuzzy sets whose membership functions are defined by the truth value of a fuzzy propositional function. A fuzzy propositional function is analogous to an expression containing one or more variables, such that when values are assigned to these variables, the expression becomes a fuzzy proposition.

In mathematics and information theory, Sanov's theorem gives a bound on the probability of observing an atypical sequence of samples from a given probability distribution. In the language of large deviations theory, Sanov's theorem identifies the rate function for large deviations of the empirical measure of a sequence of i.i.d. random variables.
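The rate function in Sanov's theorem is the relative entropy (Kullback–Leibler divergence) of the atypical distribution with respect to the true one. The sketch below illustrates this for fair coin tosses: it computes exactly the probability of seeing at least 700 heads in 1000 tosses and compares its exponential decay rate with the relative entropy between Bernoulli(0.7) and Bernoulli(0.5). The function names and the numbers chosen are assumptions made for the example.

```python
import math


def kl_bernoulli(q: float, p: float) -> float:
    """Relative entropy D(Bernoulli(q) || Bernoulli(p)) in nats, assuming 0 < p < 1."""
    total = 0.0
    for a, b in ((q, p), (1.0 - q, 1.0 - p)):
        if a > 0.0:  # the convention 0 * log 0 = 0
            total += a * math.log(a / b)
    return total


def empirical_rate(n: int, k_min: int) -> float:
    """-(1/n) * log P(at least k_min heads in n fair tosses), computed exactly."""
    favourable = sum(math.comb(n, k) for k in range(k_min, n + 1))
    log_probability = math.log(favourable) - n * math.log(2.0)
    return -log_probability / n


rate_function = kl_bernoulli(0.7, 0.5)
observed_rate = empirical_rate(1000, 700)
```

For finite n the observed rate sits slightly above the rate function, since the exponential bound ignores a sub-exponential prefactor; the gap shrinks as n grows.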