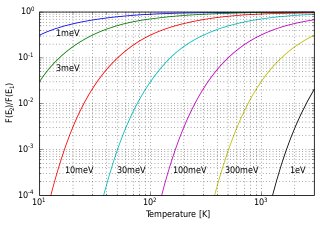

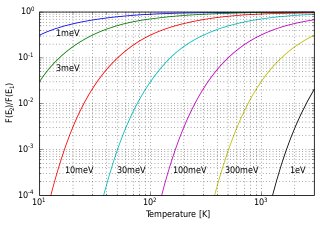

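In statistical mechanics and mathematics, a Boltzmann distribution is a probability distribution or probability measure that gives the probability that a system will be in a certain state as a function of that state's energy and the temperature of the system. The distribution is expressed in the form $p_i \propto \exp\left(-\varepsilon_i / (kT)\right)$, where $p_i$ is the probability of the system being in state $i$, $\varepsilon_i$ is the energy of that state, $k$ is the Boltzmann constant, and $T$ is the temperature of the system.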
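As a minimal sketch of how such a distribution can be evaluated, the Python snippet below assumes a small set of discrete energy levels and the standard exponential weighting `exp(-E / (k T))`, normalized so the probabilities sum to one. The function name and the two-level example are illustrative assumptions, not taken from the text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def boltzmann_probabilities(energies, temperature):
    """Return normalized Boltzmann probabilities for state energies in joules."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Shift by the lowest energy for numerical stability; the shift cancels
    # when the weights are normalized.
    e_min = min(energies)
    weights = [math.exp(-(e - e_min) / (K_B * temperature)) for e in energies]
    partition_sum = sum(weights)
    return [w / partition_sum for w in weights]


# Hypothetical two-level system whose gap equals k_B * 300 K, evaluated at 300 K.
probs = boltzmann_probabilities([0.0, K_B * 300.0], 300.0)
```

In this example the lower-energy state is the more probable one, and raising the temperature moves the two probabilities closer together.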

Entropy is a scientific concept, as well as a measurable physical property, that is most commonly associated with a state of disorder, randomness, or uncertainty. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory. It has found far-ranging applications in chemistry and physics, biological systems and their relation to life, cosmology, economics, sociology, weather science and climate change, and information systems and the transmission of information in telecommunication.

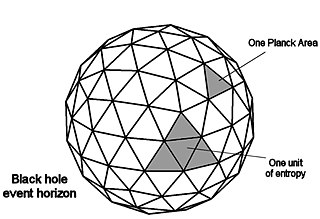

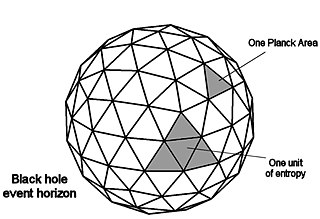

The holographic principle is a tenet of string theories and a supposed property of quantum gravity that states that the description of a volume of space can be thought of as encoded on a lower-dimensional boundary to the region—such as a light-like boundary like a gravitational horizon. First proposed by Gerard 't Hooft, it was given a precise string-theory interpretation by Leonard Susskind, who combined his ideas with previous ones of 't Hooft and Charles Thorn. As pointed out by Raphael Bousso, Thorn observed in 1978 that string theory admits a lower-dimensional description in which gravity emerges from it in what would now be called a holographic way. The prime example of holography is the AdS/CFT correspondence.

Quantum information is the information of the state of a quantum system. It is the basic entity of study in quantum information theory, and can be manipulated using quantum information processing techniques. Quantum information refers to both the technical definition in terms of von Neumann entropy and the general computational term.

Statistical mechanics, one of the pillars of modern physics, describes how macroscopic observations are related to microscopic parameters that fluctuate around an average. It connects thermodynamic quantities to microscopic behavior, whereas, in classical thermodynamics, the only available option would be to measure and tabulate such quantities for various materials.

Statistical physics is a branch of physics that uses methods of probability theory and statistics, and particularly the mathematical tools for dealing with large populations and approximations, in solving physical problems. It can describe a wide variety of fields with an inherently stochastic nature. Its applications include many problems in the fields of physics, biology, chemistry, neuroscience, and even some social sciences, such as sociology and linguistics. Its main purpose is to clarify the properties of matter in aggregate, in terms of physical laws governing atomic motion.

The second law of thermodynamics establishes the concept of entropy as a physical property of a thermodynamic system. Entropy predicts the direction of spontaneous processes, and determines whether they are irreversible or impossible, despite obeying the requirement of conservation of energy, which is established in the first law of thermodynamics. The second law may be formulated by the observation that the entropy of isolated systems left to spontaneous evolution cannot decrease, as they always arrive at a state of thermodynamic equilibrium, where the entropy is highest. If all processes in the system are reversible, the entropy is constant.

In physics, T-symmetry or time reversal symmetry is the study of the symmetry of physical laws under the inversion of the flow of the direction of time. It is a very active area of study, as there currently appears to be an unresolved paradox between the known microscopic and macroscopic laws of physics. In particular, most of the microscopic laws, those that govern atomic physics and particle physics, appear to be fully symmetric under time reversal. By contrast, at the human scale, time obviously flows in only one direction: from past to future, and never in reverse. Thus, a fundamental task is to understand how time-symmetric microscopic laws lead to time-asymmetric macroscopic laws. Aside from this, the study of T-symmetry also includes the careful articulation of the specific symmetry of specific laws and formulas.

In dynamical systems theory, a phase space is a space in which all possible states of a system are represented, with each possible state corresponding to one unique point in the phase space. For mechanical systems, the phase space usually consists of all possible values of position and momentum variables. The concept of phase space was developed in the late 19th century by Ludwig Boltzmann, Henri Poincaré, and Josiah Willard Gibbs.

In classical statistical mechanics, the H-theorem, introduced by Ludwig Boltzmann in 1872, describes the tendency of the quantity H to decrease in a nearly ideal gas of molecules. As this quantity H was meant to represent the entropy of thermodynamics, the H-theorem was an early demonstration of the power of statistical mechanics, as it claimed to derive the second law of thermodynamics (a statement about fundamentally irreversible processes) from reversible microscopic mechanics. It is thought to prove the second law of thermodynamics, albeit under the assumption of low-entropy initial conditions.

In physics, the Bekenstein bound is an upper limit on the entropy S, or information I, that can be contained within a given finite region of space which has a finite amount of energy or, conversely, the maximal amount of information required to perfectly describe a given physical system down to the quantum level. It implies that the information of a physical system, or the information necessary to perfectly describe that system, must be finite if the region of space and the energy are finite. In computer science, this implies that there is a maximal information-processing rate for a physical system that has a finite size and energy, and that a Turing machine with finite physical dimensions and unbounded memory is not physically possible.
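For a sense of scale, the bound is usually written as $S \le 2\pi k R E / (\hbar c)$, or equivalently $I \le 2\pi R E / (\hbar c \ln 2)$ in bits, where $R$ is the radius of a sphere enclosing the system and $E$ is its total energy including rest mass. The Python sketch below evaluates the bit form; the function name and the 1 kg, 1 m example are illustrative assumptions, not taken from the text.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant in J*s
C = 299792458.0         # speed of light in m/s (exact SI value)


def bekenstein_bound_bits(radius_m, energy_j):
    """Upper limit on information (in bits) for a sphere of given radius and energy."""
    return 2.0 * math.pi * radius_m * energy_j / (HBAR * C * math.log(2))


# Hypothetical example: 1 kg of mass-energy (E = m c^2) inside a sphere of radius 1 m.
example_bits = bekenstein_bound_bits(1.0, 1.0 * C**2)
```

The result for this example is of the order of 10^43 bits, and the bound scales linearly with both the radius and the energy.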

In quantum statistical mechanics, the von Neumann entropy, named after John von Neumann, is the extension of classical Gibbs entropy concepts to the field of quantum mechanics. For a quantum-mechanical system described by a density matrix ρ, the von Neumann entropy is $S = -\operatorname{tr}(\rho \ln \rho)$.
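Because the trace can be evaluated in the eigenbasis of ρ, the von Neumann entropy reduces to $-\sum_j \lambda_j \ln \lambda_j$ over the eigenvalues $\lambda_j$ of the density matrix. The sketch below assumes the eigenvalues are already known and works in nats; the function name is a hypothetical helper, not part of any library.

```python
import math


def von_neumann_entropy(eigenvalues):
    """Entropy in nats from the eigenvalues of a density matrix."""
    if any(p < 0 for p in eigenvalues):
        raise ValueError("eigenvalues of a density matrix are non-negative")
    if abs(sum(eigenvalues) - 1.0) > 1e-9:
        raise ValueError("eigenvalues of a density matrix sum to 1")
    # Terms with eigenvalue 0 contribute nothing (the limit of p ln p is 0).
    return -sum(p * math.log(p) for p in eigenvalues if p > 0)


pure_state = von_neumann_entropy([1.0, 0.0])   # pure qubit state
mixed_state = von_neumann_entropy([0.5, 0.5])  # maximally mixed qubit
```

A pure state gives zero entropy, while the maximally mixed qubit gives ln 2, the largest value available to a two-level system.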

In physics, maximum entropy thermodynamics views equilibrium thermodynamics and statistical mechanics as inference processes. More specifically, MaxEnt applies inference techniques rooted in Shannon information theory, Bayesian probability, and the principle of maximum entropy. These techniques are relevant to any situation requiring prediction from incomplete or insufficient data. MaxEnt thermodynamics began with two papers by Edwin T. Jaynes published in the 1957 Physical Review.

Landauer's principle is a physical principle pertaining to the lower theoretical limit of energy consumption of computation. It holds that "any logically irreversible manipulation of information, such as the erasure of a bit or the merging of two computation paths, must be accompanied by a corresponding entropy increase in non-information-bearing degrees of freedom of the information-processing apparatus or its environment".
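In its usual quantitative form, the principle sets a minimum of $k T \ln 2$ of energy dissipated per erased bit at temperature $T$. The following Python sketch evaluates that limit; the function name and the choice of 300 K as an example temperature are assumptions made for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def landauer_limit_joules(temperature_k, bits=1):
    """Minimum energy in joules dissipated when erasing the given number of bits."""
    if temperature_k < 0:
        raise ValueError("temperature must be non-negative")
    return bits * K_B * temperature_k * math.log(2)


# Illustrative value at roughly room temperature (300 K), for a single bit.
room_temperature_limit = landauer_limit_joules(300.0)
```

At 300 K this comes to a few zeptojoules per bit.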

The mathematical expressions for thermodynamic entropy in the statistical thermodynamics formulation established by Ludwig Boltzmann and J. Willard Gibbs in the 1870s are similar to the information entropy by Claude Shannon and Ralph Hartley, developed in the 1940s.
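The similarity can be made concrete with a short sketch: the Gibbs entropy $S = -k \sum_i p_i \ln p_i$ and the Shannon entropy $H = -\sum_i p_i \log_2 p_i$ differ only by the constant factor $k \ln 2$ when evaluated on the same probability distribution. The function names and the sample distribution below are illustrative assumptions.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def shannon_entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p), in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def gibbs_entropy(probs):
    """Gibbs entropy S = -k_B * sum(p * ln p), in J/K."""
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical distribution over three microstates (or three symbols).
distribution = [0.5, 0.25, 0.25]
h_bits = shannon_entropy_bits(distribution)
s_gibbs = gibbs_entropy(distribution)
```

For any distribution, `s_gibbs` equals `K_B * math.log(2) * h_bits` up to floating-point rounding.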

The concept of entropy was first developed by German physicist Rudolf Clausius in the mid-nineteenth century as a thermodynamic property that predicts that certain spontaneous processes are irreversible or impossible. In statistical mechanics, entropy is formulated as a statistical property using probability theory. The statistical entropy perspective was introduced in 1870 by Austrian physicist Ludwig Boltzmann, who established a new field of physics that provided the descriptive linkage between the macroscopic observation of nature and the microscopic view based on the rigorous treatment of large ensembles of microstates that constitute thermodynamic systems.

In thermodynamics, entropy is a numerical quantity that shows that many physical processes can go in only one direction in time. For example, you can pour cream into coffee and mix it, but you cannot "unmix" it; you can burn a piece of wood, but you cannot "unburn" it. The word "entropy" has entered popular usage to refer to a lack of order or predictability, or to a gradual decline into disorder. A more physical interpretation of thermodynamic entropy refers to the spread of energy or matter, or to the extent and diversity of microscopic motion.

Temperature is a physical quantity that expresses hot and cold. It is the manifestation of thermal energy, present in all matter, which is the source of the occurrence of heat, a flow of energy, when a body is in contact with another that is colder.

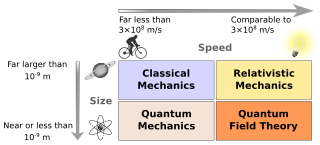

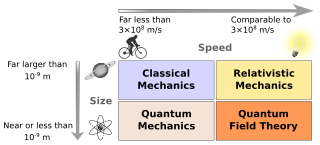

Physics deals with the combination of matter and energy. It also deals with a wide variety of systems, about which theories have been developed that are used by physicists. In general, theories are experimentally tested numerous times before they are accepted as correct as a description of Nature. For instance, the theory of classical mechanics accurately describes the motion of objects, provided they are much larger than atoms and moving at much less than the speed of light. These "central theories" are important tools for research in more specialized topics, and any physicist, regardless of his or her specialization, is expected to be literate in them.

In information theory, the principle of minimum Fisher information (MFI) is a variational principle which, when applied with the proper constraints needed to reproduce empirically known expectation values, determines the best probability distribution that characterizes the system.