A central processing unit (CPU), also called a central processor or main processor, is the electronic circuitry within a computer that executes instructions that make up a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions in the program. The computer industry used the term "central processing unit" as early as 1955. Traditionally, the term "CPU" refers to a processor, more specifically to its processing unit and control unit (CU), distinguishing these core elements of a computer from external components such as main memory and I/O circuitry.

Processor design is the design engineering task of creating a processor, a key component of computer hardware. It is a subfield of computer engineering and electronics engineering (fabrication). The design process involves choosing an instruction set and an execution paradigm, and results in a microarchitecture, which might be described in a hardware description language such as VHDL or Verilog. For microprocessor design, this description is then manufactured using one of the various semiconductor device fabrication processes, resulting in a die that is bonded onto a chip carrier. This chip carrier is then soldered onto, or inserted into a socket on, a printed circuit board (PCB).

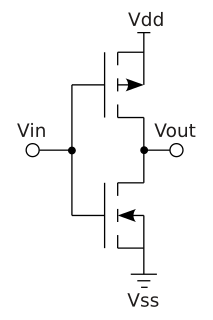

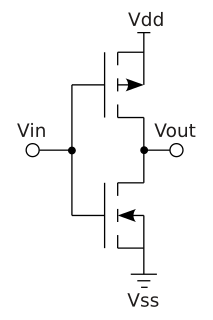

Complementary metal–oxide–semiconductor (CMOS), also known as complementary-symmetry metal–oxide–semiconductor (COS-MOS), is a type of metal–oxide–semiconductor field-effect transistor (MOSFET) fabrication process that uses complementary and symmetrical pairs of p-type and n-type MOSFETs for logic functions. CMOS technology is used for constructing integrated circuit (IC) chips, including microprocessors, microcontrollers, memory chips, and other digital logic circuits, and replaced earlier transistor-transistor logic (TTL) technology.

In computing, overclocking is the practice of increasing the clock rate of a computer to exceed that certified by the manufacturer. Commonly, operating voltage is also increased to maintain a component's operational stability at accelerated speeds. Semiconductor devices operated at higher frequencies and voltages consume more power and produce more heat. An overclocked device may be unreliable or fail completely if the additional heat load is not removed or if power delivery components cannot meet the increased power demands. Many device warranties state that overclocking or operating outside specification voids the warranty; however, an increasing number of manufacturers allow overclocking as long as it is performed (relatively) safely.

In electronics and especially synchronous digital circuits, a clock signal oscillates between a high and a low state and is used like a metronome to coordinate actions of digital circuits.

The Pentium M is a family of mobile 32-bit single-core x86 microprocessors introduced in March 2003 and forming a part of the Intel Carmel notebook platform under the then-new Centrino brand. The Pentium M processors had a maximum thermal design power (TDP) of 5–27 W depending on the model, and were intended for use in laptops. They evolved from the core of the last Pentium III–branded CPU by adding the front-side bus (FSB) interface of the Pentium 4, an improved instruction decoding and issuing front end, improved branch prediction, SSE2 support, and a much larger cache. The first Pentium M–branded CPU, code-named Banias, was followed by Dothan. The Pentium M–branded processors were succeeded by the Core-branded dual-core mobile Yonah CPU with a modified microarchitecture.

Underclocking, also known as downclocking, is modifying a computer or electronic circuit's timing settings to run at a lower clock rate than is specified. Underclocking is used to reduce a computer's power consumption, increase battery life, and reduce heat emission; it may also increase the system's stability and compatibility. Underclocking may be implemented by the factory, but many computers and components may also be underclocked by the end user.

Power management is a feature of some electrical appliances, especially copiers, computers, CPUs, GPUs and computer peripherals such as monitors and printers, that turns off the power or switches the system to a low-power state when inactive. In computing, this is known as PC power management and is built around the ACPI standard, which supersedes APM. All recent consumer computers have ACPI support.

The thermal design power (TDP), sometimes called thermal design point, is the maximum amount of heat generated by a computer chip or component that the cooling system in a computer is designed to dissipate under any workload.

In computer engineering, a logic family may refer to one of two related concepts. A logic family of monolithic digital integrated circuit devices is a group of electronic logic gates constructed using one of several different designs, usually with compatible logic levels and power supply characteristics within a family. Many logic families were produced as individual components, each containing one or a few related basic logical functions, which could be used as "building-blocks" to create systems or as so-called "glue" to interconnect more complex integrated circuits. A "logic family" may also refer to a set of techniques used to implement logic within VLSI integrated circuits such as central processors, memories, or other complex functions. Some such logic families use static techniques to minimize design complexity. Other such logic families, such as domino logic, use clocked dynamic techniques to minimize size, power consumption and delay.

A voltage regulator module (VRM), sometimes called processor power module (PPM), is a buck converter that provides a microprocessor the appropriate supply voltage, converting +5 V or +12 V to a much lower voltage required by the CPU, allowing processors with different supply voltage to be mounted on the same motherboard. On personal computer (PC) systems, the VRM is typically made up of power MOSFET devices.
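As a rough illustration of the step-down conversion described above, the sketch below uses the textbook relation for an ideal buck converter in continuous conduction, where the output voltage equals the duty cycle times the input voltage. The function name and the 12 V to 1.2 V example values are assumptions chosen for illustration; a real VRM has losses, multiple phases, and feedback control that this ignores.

```python
def ideal_buck_duty_cycle(v_in: float, v_out: float) -> float:
    """Duty cycle D for an ideal buck converter, where v_out = D * v_in."""
    if v_in <= 0 or not 0 <= v_out <= v_in:
        raise ValueError("require v_in > 0 and 0 <= v_out <= v_in")
    return v_out / v_in


# Hypothetical example: a 12 V rail stepped down to a 1.2 V core voltage.
duty = ideal_buck_duty_cycle(12.0, 1.2)
print(f"duty cycle: {duty:.2%}")
```

Under these idealized assumptions, the high-side switch conducts for only about a tenth of each switching period.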

The CPU core voltage (VCORE) is the power supply voltage supplied to the CPU, GPU, or other device containing a processing core. The amount of power a CPU uses, and thus the amount of heat it dissipates, is the product of this voltage and the current it draws. In modern CPUs, which are CMOS circuits, the current is almost proportional to the clock speed, the CPU drawing almost no current between clock cycles.
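The relationship above can be sketched numerically. The example below treats power as the product of core voltage and current, and models current as strictly proportional to clock speed, which is a simplification of the "almost proportional" behaviour described. The function names and the sample figures (1.2 V, 40 A, 3.0 GHz, 3.6 GHz) are hypothetical and chosen only for illustration.

```python
def cpu_power_watts(vcore_volts: float, current_amps: float) -> float:
    """Power as the product of core voltage and drawn current."""
    return vcore_volts * current_amps


def scaled_current_amps(base_current_amps: float,
                        base_clock_hz: float,
                        new_clock_hz: float) -> float:
    """Estimate current at a new clock, assuming strict proportionality to clock speed."""
    return base_current_amps * (new_clock_hz / base_clock_hz)


# Hypothetical operating point: 1.2 V, 40 A at 3.0 GHz, then raised to 3.6 GHz.
base_power = cpu_power_watts(1.2, 40.0)
boosted_current = scaled_current_amps(40.0, 3.0e9, 3.6e9)
boosted_power = cpu_power_watts(1.2, boosted_current)
print(f"{base_power:.1f} W -> {boosted_power:.1f} W")
```

With the voltage held fixed, a 20% higher clock draws roughly 20% more current in this model and therefore about 20% more power.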

Enhanced SpeedStep (EIST) is a series of dynamic frequency scaling technologies built into some Intel microprocessors that allow the clock speed of the processor to be dynamically changed by software. This allows the processor to meet the instantaneous performance needs of the operation being performed, while minimizing power draw and heat generation. EIST was introduced in several Prescott 6-series processors in the first quarter of 2005, such as the Pentium 4 660. Intel Speed Shift Technology (SST) was introduced with Intel Skylake processors.

The Intel Core microarchitecture is a multi-core processor microarchitecture unveiled by Intel in Q1 2006. It is based on the Yonah processor design and can be considered an iteration of the P6 microarchitecture introduced in 1995 with the Pentium Pro. High power consumption and heat intensity, the resulting inability to effectively increase clock speed, and other shortcomings such as an inefficient pipeline were the primary reasons why Intel abandoned the NetBurst microarchitecture and switched to a completely different architectural design, delivering high efficiency through a short pipeline rather than high clock speeds. The Core microarchitecture initially did not reach the clock speeds of the NetBurst microarchitecture, even after moving to 45 nm lithography. However, after many generations of successor microarchitectures that used Core as their basis, Intel eventually surpassed the clock speeds of NetBurst with the Devil's Canyon processors (a refresh of the Haswell microarchitecture), which reached a base frequency of 4 GHz and a maximum turbo frequency of 4.4 GHz using 22 nm lithography.

In integrated circuit design, dynamic logic is a design methodology for combinational logic circuits, particularly those implemented in MOS technology. It is distinguished from so-called static logic by exploiting temporary storage of information in stray and gate capacitances. It was popular in the 1970s and has seen a resurgence in the design of high-speed digital electronics, particularly computer CPUs. Dynamic logic circuits are usually faster than their static counterparts and require less surface area, but are more difficult to design. Dynamic logic has a higher toggle rate than static logic, but the capacitive loads being toggled are smaller, so the overall power consumption of dynamic logic may be higher or lower depending on various tradeoffs. When referring to a particular logic family, the adjective "dynamic" usually suffices to distinguish the design methodology, e.g. dynamic CMOS or dynamic SOI design.

Dynamic frequency scaling is a technique in computer architecture whereby the frequency of a microprocessor can be automatically adjusted "on the fly" depending on actual needs, to conserve power and reduce the amount of heat generated by the chip. Dynamic frequency scaling helps preserve battery life on mobile devices and decreases cooling cost and noise in quiet computing settings; it can also serve as a safety measure for overheated systems. Dynamic frequency scaling is used across all ranges of computing systems, from mobile devices to data centers, to reduce power at times of low workload.
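One way to picture frequency selection "on the fly" is a simple policy that picks the lowest available frequency able to serve the current load with some headroom. The sketch below is a toy policy under stated assumptions, not the algorithm of any particular operating system or processor: the frequency table, the `headroom` parameter, and the function name are all hypothetical.

```python
from bisect import bisect_left

# Hypothetical operating points in MHz, lowest to highest (assumed for illustration).
AVAILABLE_MHZ = [800, 1200, 1600, 2000, 2400]


def pick_frequency_mhz(utilization: float, headroom: float = 0.8) -> int:
    """Pick the lowest frequency that keeps projected utilization at or below `headroom`.

    `utilization` is the fraction of the top frequency's capacity
    currently in demand, from 0.0 to 1.0.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    # Frequency needed so that the same work occupies at most `headroom` of the time.
    needed_mhz = utilization * AVAILABLE_MHZ[-1] / headroom
    index = bisect_left(AVAILABLE_MHZ, needed_mhz)
    # Clamp to the highest operating point when demand exceeds what is available.
    return AVAILABLE_MHZ[min(index, len(AVAILABLE_MHZ) - 1)]


for load in (0.1, 0.5, 1.0):
    print(load, pick_frequency_mhz(load))
```

A light load maps to the lowest operating point, while a saturated processor is clamped to the highest one; the headroom leaves slack so that short bursts do not immediately saturate the chosen frequency.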

Dynamic voltage scaling is a power management technique in computer architecture, where the voltage used in a component is increased or decreased, depending upon circumstances. Dynamic voltage scaling to increase voltage is known as overvolting; dynamic voltage scaling to decrease voltage is known as undervolting. Undervolting is done in order to conserve power, particularly in laptops and other mobile devices, where energy comes from a battery and thus is limited, or in rare cases, to increase reliability. Overvolting is done in order to increase computer performance.
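To see why a small voltage change matters, the sketch below uses the common first-order approximation that CMOS switching power scales with the square of the supply voltage times the clock frequency. This relation is a standard textbook model rather than something stated above, it ignores leakage power, and the function name and sample ratios are assumptions for illustration.

```python
def relative_dynamic_power(voltage_ratio: float, frequency_ratio: float = 1.0) -> float:
    """Switching power relative to baseline, assuming P is proportional to V^2 * f.

    Both arguments are ratios of the new value to the baseline value.
    """
    if voltage_ratio < 0 or frequency_ratio < 0:
        raise ValueError("ratios must be non-negative")
    return voltage_ratio ** 2 * frequency_ratio


# Hypothetical cases: a 10% undervolt and a 10% overvolt at unchanged frequency.
undervolted = relative_dynamic_power(0.9)
overvolted = relative_dynamic_power(1.1)
print(f"undervolt: {undervolted:.2f}x, overvolt: {overvolted:.2f}x")
```

Under this model, a 10% undervolt cuts switching power by about 19%, whereas a 10% overvolt raises it by about 21%.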

Low-power electronics are electronics, such as notebook processors, that have been designed to use less electric power than usual, often at some expense. In the case of notebook processors, this expense is processing power: they tend to consume less power than their desktop counterparts but deliver lower performance.

The power consumption of electronic systems has been a real challenge for hardware and software designers as well as users, especially in portable devices such as cell phones and laptop computers. Power consumption has also been an issue for industries that use computer systems heavily, such as Internet service providers running servers or companies with many employees using computers and other computational devices. Researchers have developed many different approaches to estimate power consumption efficiently. This survey paper focuses on the different methods by which power consumption can be estimated or measured in real time.

AMD Turbo Core, also known as AMD Core Performance Boost (CPB), is a technology implemented by AMD in certain versions of its processors that allows the processor to dynamically adjust and control its operating frequency. This allows for increased performance when needed while maintaining lower power and thermal parameters during normal operation. AMD Turbo Core was first implemented in the Phenom II X6 microprocessors based on the AMD K10 microarchitecture, and is also available in some AMD A-Series accelerated processing units.