A relational database (RDB) is a database based on the relational model of data, as proposed by E. F. Codd in 1970. A database management system used to maintain relational databases is a relational database management system (RDBMS). Many relational database systems are equipped with the option of using SQL for querying and updating the database.

A foreign key is a set of attributes in a table that refers to the primary key of another table, linking these two tables. In the context of relational databases, a foreign key is subject to an inclusion dependency constraint that the tuples consisting of the foreign key attributes in one relation, R, must also exist in some other relation, S; furthermore that those attributes must also be a candidate key in S.

Referential integrity is a property of data stating that all its references are valid. In the context of relational databases, it requires that if a value of one attribute (column) of a relation (table) references a value of another attribute, then the referenced value must exist.

In the context of SQL, data definition or data description language (DDL) is a syntax for creating and modifying database objects such as tables, indices, and users. DDL statements are similar to a computer programming language for defining data structures, especially database schemas. Common examples of DDL statements include CREATE, ALTER, and DROP. If you see a .ddl file, that means the file contains a statement to create a table. Oracle SQL Developer contains the ability to export from an ERD generated with Data Modeler to either a .sql file or a .ddl file.

A surrogate key in a database is a unique identifier for either an entity in the modeled world or an object in the database. The surrogate key is not derived from application data, unlike a natural key.

An entity–relationship model describes interrelated things of interest in a specific domain of knowledge. A basic ER model is composed of entity types and specifies relationships that can exist between entities.

Object–role modeling (ORM) is used to model the semantics of a universe of discourse. ORM is often used for data modeling and software engineering.

An SQL INSERT statement adds one or more records to any single table in a relational database.

Object–relational impedance mismatch is a set of difficulties going between data in relational data stores and data in domain-driven object models. Relational Database Management Systems (RDBMS) is the standard method for storing data in a dedicated database, while object-oriented (OO) programming is the default method for business-centric design in programming languages. The problem lies in neither relational databases nor OO programming, but in the conceptual difficulty mapping between the two logic models. Both logical models are differently implementable using database servers, programming languages, design patterns, or other technologies. Issues range from application to enterprise scale, whenever stored relational data is used in domain-driven object models, and vice versa. Object-oriented data stores can trade this problem for other implementation difficulties.

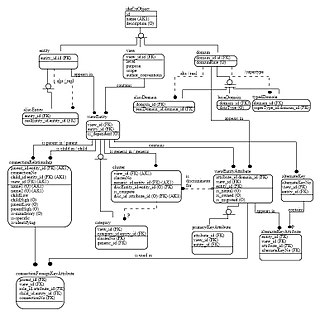

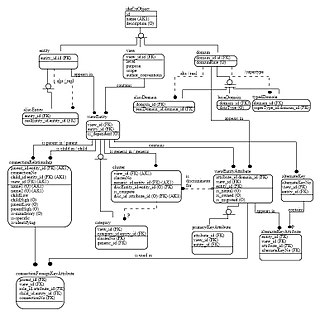

Integration DEFinition for information modeling (IDEF1X) is a data modeling language for the development of semantic data models. IDEF1X is used to produce a graphical information model which represents the structure and semantics of information within an environment or system.

Data cleansing or data cleaning is the process of detecting and correcting corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data. Data cleansing may be performed interactively with data wrangling tools, or as batch processing through scripting or a data quality firewall.

An entity–attribute–value model (EAV) is a data model optimized for the space-efficient storage of sparse—or ad-hoc—property or data values, intended for situations where runtime usage patterns are arbitrary, subject to user variation, or otherwise unforeseeable using a fixed design. The use-case targets applications which offer a large or rich system of defined property types, which are in turn appropriate to a wide set of entities, but where typically only a small, specific selection of these are instantiated for a given entity. Therefore, this type of data model relates to the mathematical notion of a sparse matrix. EAV is also known as object–attribute–value model, vertical database model, and open schema.

In relational database management systems, a unique key is a candidate key. All the candidate keys of a relation can uniquely identify the records of the relation, but only one of them is used as the primary key of the relation. The remaining candidate keys are called unique keys because they can uniquely identify a record in a relation. Unique keys can consist of multiple columns. Unique keys are also called alternate keys. Unique keys are an alternative to the primary key of the relation. In SQL, the unique keys have a UNIQUE constraint assigned to them in order to prevent duplicates. Alternate keys may be used like the primary key when doing a single-table select or when filtering in a where clause, but are not typically used to join multiple tables.

Liquibase is an open-source database-independent library for tracking, managing and applying database schema changes. It was started in 2006 to allow easier tracking of database changes, especially in an agile software development environment.

Scott W. Ambler is a Canadian software engineer, consultant and author. He is an author of books about the Disciplined Agile Delivery toolkit, the Unified process, Agile software development, the Unified Modeling Language, and Capability Maturity Model (CMM) development.

NoSQL is an approach to database design that focuses on providing a mechanism for storage and retrieval of data that is modeled in means other than the tabular relations used in relational databases. Instead of the typical tabular structure of a relational database, NoSQL databases house data within one data structure. Since this non-relational database design does not require a schema, it offers rapid scalability to manage large and typically unstructured data sets. NoSQL systems are also sometimes called "Not only SQL" to emphasize that they may support SQL-like query languages or sit alongside SQL databases in polyglot-persistent architectures.

Database testing usually consists of a layered process, including the user interface (UI) layer, the business layer, the data access layer and the database itself. The UI layer deals with the interface design of the database, while the business layer includes databases supporting business strategies.

The following is provided as an overview of and topical guide to databases:

Semantic heterogeneity is when database schema or datasets for the same domain are developed by independent parties, resulting in differences in meaning and interpretation of data values. Beyond structured data, the problem of semantic heterogeneity is compounded due to the flexibility of semi-structured data and various tagging methods applied to documents or unstructured data. Semantic heterogeneity is one of the more important sources of differences in heterogeneous datasets.

Evolutionary database design involves incremental improvements to the database schema so that it can be continuously updated with changes, reflecting the customer's requirements. People across the globe work on the same piece of software at the same time hence, there is a need for techniques that allow a smooth evolution of database as the design develops. Such methods utilize automated refactoring and continuous integration so that it supports agile methodologies for software development. These development techniques are applied on systems that are in pre-production stage as well on systems that have already been released. These techniques not only cover relevant changes in the database schema according to customer's changing needs, but also migration of modified data into the database and also customizing the database access code accordingly without changing the data semantics.