In mathematics, the discrete Fourier transform (DFT) converts a finite sequence of equally-spaced samples of a function into a same-length sequence of equally-spaced samples of the discrete-time Fourier transform (DTFT), which is a complex-valued function of frequency. The interval at which the DTFT is sampled is the reciprocal of the duration of the input sequence. An inverse DFT (IDFT) is a Fourier series, using the DTFT samples as coefficients of complex sinusoids at the corresponding DTFT frequencies. It has the same sample-values as the original input sequence. The DFT is therefore said to be a frequency domain representation of the original input sequence. If the original sequence spans all the non-zero values of a function, its DTFT is continuous, and the DFT provides discrete samples of one cycle. If the original sequence is one cycle of a periodic function, the DFT provides all the non-zero values of one DTFT cycle.
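The relationship between the DFT and its inverse can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the helper name `naive_dft` and the 8-sample test sinusoid are illustrative choices, not part of any standard. It evaluates the defining sum directly and compares it with NumPy's FFT routine.

```python
import numpy as np

def naive_dft(x):
    """Direct O(N^2) evaluation of X[k] = sum_n x[n] * exp(-2j*pi*k*n/N)."""
    x = np.asarray(x, dtype=complex)
    N = x.size
    n = np.arange(N)            # sample indices (row)
    k = n.reshape(-1, 1)        # frequency indices (column)
    return np.exp(-2j * np.pi * k * n / N) @ x

# Illustrative input: 8 samples spanning exactly 2 cycles of a cosine.
N = 8
x = np.cos(2 * np.pi * 2 * np.arange(N) / N)

X = naive_dft(x)                # frequency-domain representation
x_back = np.fft.ifft(X)         # inverse DFT recovers the input samples
```

Because the input spans a whole number of cycles, the energy lands in bins 2 and N-2 only, and the inverse transform reproduces the original samples up to rounding error.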

In engineering, a transfer function of a system, sub-system, or component is a mathematical function that models the system's output for each possible input. It is widely used in electronic engineering tools like circuit simulators and control systems. In simple cases, this function can be represented as a two-dimensional graph of an independent scalar input versus the dependent scalar output. Transfer functions for components are used to design and analyze systems assembled from components, particularly using the block diagram technique, in electronics and control theory.

A wavelet is a wave-like oscillation with an amplitude that begins at zero, increases or decreases, and then returns to zero one or more times. A wavelet is often described as a "brief oscillation". A taxonomy of wavelets has been established, based on the number and direction of their pulses. Wavelets have specific properties that make them useful for signal processing.

In physics, engineering and mathematics, the Fourier transform (FT) is an integral transform that takes a function as input and outputs another function that describes the extent to which various frequencies are present in the original function. The output of the transform is a complex-valued function of frequency. The term Fourier transform refers to both this complex-valued function and the mathematical operation. When a distinction needs to be made, the output of the operation is sometimes called the frequency domain representation of the original function. The Fourier transform is analogous to decomposing the sound of a musical chord into the intensities of its constituent pitches.

A low-pass filter is a filter that passes signals with a frequency lower than a selected cutoff frequency and attenuates signals with frequencies higher than the cutoff frequency. The exact frequency response of the filter depends on the filter design. The filter is sometimes called a high-cut filter, or treble-cut filter in audio applications. A low-pass filter is the complement of a high-pass filter.
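As an illustration of the idea, the sketch below applies an idealized "brick-wall" low-pass filter in the frequency domain, assuming NumPy. The sample rate, the 5 Hz and 50 Hz test tones, and the 20 Hz cutoff are arbitrary values chosen for the example. Practical filter designs have a gradual transition rather than this abrupt cut.

```python
import numpy as np

fs = 1000.0                               # assumed sample rate in Hz
t = np.arange(1000) / fs                  # one second of samples
low = np.sin(2 * np.pi * 5 * t)           # 5 Hz component (below cutoff)
high = 0.5 * np.sin(2 * np.pi * 50 * t)   # 50 Hz component (above cutoff)
x = low + high

cutoff_hz = 20.0
X = np.fft.rfft(x)
freqs = np.fft.rfftfreq(x.size, d=1 / fs)
X[freqs > cutoff_hz] = 0                  # discard everything above the cutoff
y = np.fft.irfft(X, n=x.size)             # filtered signal
```

Both tones fall exactly on DFT bins here, so the output `y` matches the 5 Hz component up to rounding error. With tones that do not align to bins, spectral leakage would make the separation less clean.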

Fourier optics is the study of classical optics using Fourier transforms (FTs), in which the waveform being considered is regarded as made up of a combination, or superposition, of plane waves. It has some parallels to the Huygens–Fresnel principle, in which the wavefront is regarded as being made up of a combination of spherical wavefronts whose sum is the wavefront being studied. A key difference is that Fourier optics considers the plane waves to be natural modes of the propagation medium, as opposed to Huygens–Fresnel, where the spherical waves originate in the physical medium.

In mathematics, the Gibbs phenomenon is the oscillatory behavior of the Fourier series of a piecewise continuously differentiable periodic function around a jump discontinuity. The Nth partial Fourier series of the function produces large peaks around the jump which overshoot and undershoot the function values. As more sinusoids are used, this approximation error approaches a limit of about 9% of the jump, though the infinite Fourier series sum does eventually converge almost everywhere except at points of discontinuity.
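The 9% figure can be observed numerically. The following sketch, assuming NumPy, sums the first 200 odd harmonics of a unit square wave (which jumps from -1 to +1 at the origin, a jump of size 2) and measures the peak overshoot relative to the jump. The number of harmonics and the grid density are arbitrary illustrative choices.

```python
import numpy as np

n_terms = 200                                  # number of odd harmonics kept
k = 2 * np.arange(n_terms) + 1                 # 1, 3, 5, ...
x = np.linspace(0.0, np.pi / 2, 5001)          # grid just to the right of the jump

# Partial Fourier sum of sign(sin(x)): (4/pi) * sum over odd k of sin(k x) / k
partial_sum = (4 / np.pi) * np.sum(np.sin(np.outer(k, x)) / k[:, None], axis=0)

jump = 2.0                                     # the square wave goes from -1 to +1
overshoot_fraction = (partial_sum.max() - 1.0) / jump
```

Increasing `n_terms` moves the peak closer to the discontinuity but does not reduce its height, which is the essence of the phenomenon.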

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response is of finite duration, because it settles to zero in finite time. This is in contrast to infinite impulse response (IIR) filters, which may have internal feedback and may continue to respond indefinitely.
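The contrast can be made concrete by feeding a unit impulse through each kind of filter. This is a minimal sketch assuming NumPy; the three FIR taps and the feedback coefficient `a` are arbitrary example values.

```python
import numpy as np

impulse = np.zeros(16)
impulse[0] = 1.0

# FIR: the output is a weighted sum of a finite number of input samples.
taps = np.array([0.25, 0.5, 0.25])
fir_response = np.convolve(impulse, taps)[:impulse.size]

# IIR: y[n] = a * y[n-1] + x[n], where the feedback term keeps the response alive.
a = 0.5
iir_response = np.zeros_like(impulse)
prev = 0.0
for n, xn in enumerate(impulse):
    prev = a * prev + xn
    iir_response[n] = prev
```

The FIR response is exactly zero after its three taps, whereas the IIR response decays geometrically (1, 0.5, 0.25, ...) without ever reaching zero in exact arithmetic.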

In mathematics, the continuous wavelet transform (CWT) is a formal tool that provides an overcomplete representation of a signal by letting the translation and scale parameter of the wavelets vary continuously.

The point spread function (PSF) describes the response of a focused optical imaging system to a point source or point object. A more general term for the PSF is the system's impulse response; the PSF is the impulse response or impulse response function (IRF) of a focused optical imaging system. The PSF in many contexts can be thought of as the extended blob in an image that represents a single point object, that is considered as a spatial impulse. In functional terms, it is the spatial domain version of the optical transfer function (OTF) of an imaging system. It is a useful concept in Fourier optics, astronomical imaging, medical imaging, electron microscopy and other imaging techniques such as 3D microscopy and fluorescence microscopy.

In electrical engineering and applied mathematics, blind deconvolution is deconvolution without explicit knowledge of the impulse response function used in the convolution. This is usually achieved by making appropriate assumptions about the input in order to estimate the impulse response by analyzing the output. Blind deconvolution is not solvable without making assumptions about the input and the impulse response. Most algorithms for this problem are based on the assumption that both the input and the impulse response live in respective known subspaces. However, blind deconvolution remains a very challenging non-convex optimization problem even with this assumption.

In signal processing, a causal filter is a linear and time-invariant causal system. The word causal indicates that the filter output depends only on past and present inputs. A filter whose output also depends on future inputs is non-causal, whereas a filter whose output depends only on future inputs is anti-causal. Systems that are realizable must be causal because such systems cannot act on a future input. In effect, that means the output sample that best represents the input at time t comes out slightly later. A common design practice for digital filters is to create a realizable filter by shortening and/or time-shifting a non-causal impulse response. If shortening is necessary, it is often accomplished as the product of the impulse response with a window function.
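The shorten-and-shift design practice can be sketched as follows, assuming NumPy. The ideal low-pass (sinc) prototype, the cutoff `fc`, the half-length `M`, and the choice of a Hamming window are all illustrative assumptions. Other prototypes and windows follow the same pattern.

```python
import numpy as np

M = 10                                   # half-length: keep taps n = -M..M
fc = 0.2                                 # assumed cutoff, in cycles per sample
n = np.arange(-M, M + 1)

# Ideal low-pass impulse response: non-causal, centered on n = 0, infinitely long.
h_ideal = 2 * fc * np.sinc(2 * fc * n)   # evaluated only on the retained span

# Shorten by multiplying with a window. Storing the result at array indices
# 0..2M is the time shift: the filter now uses only present and past inputs.
h_causal = h_ideal * np.hamming(2 * M + 1)

# Response to a unit impulse: the peak appears M samples late.
impulse = np.zeros(64)
impulse[0] = 1.0
y = np.convolve(impulse, h_causal)[:impulse.size]
delay = int(np.argmax(y))
```

The `M`-sample delay is the price of realizability: the output sample that best represents the input at a given time emerges `M` samples later.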

In mathematics, a wavelet series is a representation of a square-integrable function by a certain orthonormal series generated by a wavelet. This article provides a formal, mathematical definition of an orthonormal wavelet and of the integral wavelet transform.

In electronics and signal processing, mainly in digital signal processing, a Gaussian filter is a filter whose impulse response is a Gaussian function. Gaussian filters have the properties of having no overshoot to a step function input while minimizing the rise and fall time. This behavior is closely connected to the fact that the Gaussian filter has the minimum possible group delay. A Gaussian filter will have the best combination of suppression of high frequencies while also minimizing spatial spread, being the critical point of the uncertainty principle. These properties are important in areas such as oscilloscopes and digital telecommunication systems.
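The no-overshoot property can be checked on a sampled kernel. The sketch below, assuming NumPy, builds a truncated discrete Gaussian (the width `sigma` and the truncation radius are arbitrary example values) and applies it to a unit step.

```python
import numpy as np

sigma = 5.0                                   # assumed kernel width, in samples
radius = 20                                   # truncate at 4 sigma
n = np.arange(-radius, radius + 1)
kernel = np.exp(-0.5 * (n / sigma) ** 2)
kernel /= kernel.sum()                        # unit DC gain

step = np.ones(200)                           # unit step starting at index 0
step_response = np.convolve(step, kernel)[:step.size]
```

Because every kernel coefficient is non-negative, the step response is a running sum of non-negative terms: it rises monotonically to 1 and never exceeds it.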

In mathematics, Wiener deconvolution is an application of the Wiener filter to the noise problems inherent in deconvolution. It works in the frequency domain, attempting to minimize the impact of deconvolved noise at frequencies which have a poor signal-to-noise ratio.
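A simplified version of the idea can be sketched as follows, assuming NumPy. The test signal, the Gaussian blur kernel, the noise level, and in particular the constant noise-to-signal ratio `nsr` are assumptions made for illustration. The full Wiener filter uses frequency-dependent signal and noise power spectra rather than a single constant.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 256
x = np.zeros(N)                       # illustrative original signal
x[60:100] = 1.0
x[150:170] = 0.5

# Circularly symmetric Gaussian blur kernel with unit sum.
idx = np.arange(N)
dist = np.minimum(idx, N - idx)
h = np.exp(-0.5 * (dist / 2.0) ** 2)
h /= h.sum()

H = np.fft.fft(h)
y = np.fft.ifft(np.fft.fft(x) * H).real + 0.01 * rng.standard_normal(N)
Y = np.fft.fft(y)

nsr = 1e-3                            # assumed constant noise-to-signal power ratio
G = np.conj(H) / (np.abs(H) ** 2 + nsr)
x_wiener = np.fft.ifft(G * Y).real    # regularized estimate
x_naive = np.fft.ifft(Y / H).real     # plain inverse filter, amplifies noise

err_wiener = np.sqrt(np.mean((x_wiener - x) ** 2))
err_naive = np.sqrt(np.mean((x_naive - x) ** 2))
```

At frequencies where `|H|` is tiny, the plain inverse filter divides noise by a near-zero number, while the `nsr` term in the denominator of `G` keeps the gain bounded there. This is why `err_wiener` comes out far smaller than `err_naive` in this setup.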

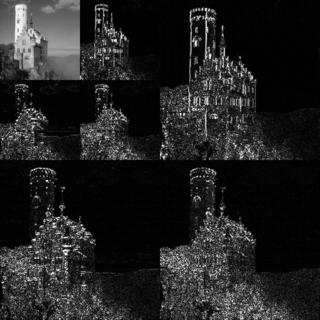

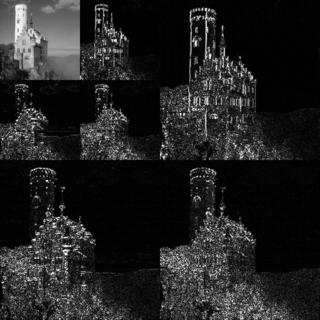

Phase stretch transform (PST) is a computational approach to signal and image processing. One of its uses is feature detection and classification. PST is related to the time stretch dispersive Fourier transform. It transforms the image by emulating propagation through a diffractive medium with an engineered 3D dispersive property. The operation relies on symmetry of the dispersion profile and can be understood in terms of dispersive eigenfunctions or stretch modes. PST performs a function similar to phase-contrast microscopy, but on digital images. PST can be applied to digital images and temporal data. It is a physics-based feature engineering algorithm.

In signal processing, nonlinear multidimensional signal processing (NMSP) covers all signal processing using nonlinear multidimensional signals and systems. It is a subset of multidimensional signal processing. Nonlinear multidimensional systems are used in a broad range of fields such as imaging, teletraffic, communications, hydrology, geology, and economics. Nonlinear systems cannot be treated as linear systems and analyzed with Fourier transformation and wavelet analysis. They can exhibit chaotic behavior, limit cycles, steady states, bifurcation, multi-stability and so on. Nonlinear systems do not have a canonical representation comparable to the impulse response for linear systems. There are, however, efforts to characterize nonlinear systems, such as Volterra and Wiener series using polynomial integrals, as these methods extend naturally to multiple dimensions. Another example is the empirical mode decomposition method, which uses the Hilbert transform instead of the Fourier transform for nonlinear multidimensional systems. This method is empirical and can be applied directly to data sets. Multidimensional nonlinear filters (MDNF) are also an important part of NMSP; they are mainly used to filter noise in real data. Examples include nonlinear-type hybrid filters used in color image processing and nonlinear edge-preserving filters used in magnetic resonance image restoration. Those filters use both temporal and spatial information and combine the maximum likelihood estimate with a spatial smoothing algorithm.

Multidimensional seismic data processing forms a major component of seismic profiling, a technique used in geophysical exploration. The technique itself has various applications, including mapping ocean floors, determining the structure of sediments, mapping subsurface currents and hydrocarbon exploration. Since geophysical data obtained in such techniques is a function of both space and time, multidimensional signal processing techniques may be better suited for processing such data.

In multidimensional signal processing, multidimensional signal restoration refers to the problem of estimating the original input signal from observations of a distorted or noise-contaminated version of it, using some prior information about the input signal and/or the distortion process. Multidimensional signal processing systems such as audio, image and video processing systems often receive as input signals that have undergone distortions such as blurring or band-limiting during acquisition or transmission, and it may be vital to recover the original signal before further filtering. Multidimensional signal restoration is an inverse problem, where only the distorted signal is observed and some information about the distortion process and/or input signal properties is known. A general class of iterative methods has been developed for the multidimensional restoration problem, with successful applications to multidimensional deconvolution, signal extrapolation and denoising.

Light field microscopy (LFM) is a scanning-free 3-dimensional (3D) microscopic imaging method based on the theory of the light field. This technique allows sub-second (~10 Hz) large volumetric imaging with ~1 μm spatial resolution under conditions of weak scattering and semi-transparency, which has never been achieved by other methods. Just as in traditional light field rendering, there are two steps for LFM imaging: light field capture and processing. In most setups, a microlens array is used to capture the light field. Processing can be based on two kinds of representations of light propagation: the ray optics picture and the wave optics picture. The Stanford University Computer Graphics Laboratory published its first prototype LFM in 2006 and has been working on the cutting edge since then.