IBM mainframes are large computer systems produced by IBM since 1952. During the 1960s and 1970s, IBM dominated the computer market with the 7000 series and the later System/360, followed by the System/370. Current mainframe computers in IBM's line of business computers are developments of the basic design of the System/360.

In digital computers, an interrupt is a request for the processor to interrupt currently executing code, so that the event can be processed in a timely manner. If the request is accepted, the processor will suspend its current activities, save its state, and execute a function called an interrupt handler to deal with the event. This interruption is often temporary, allowing the software to resume normal activities after the interrupt handler finishes, although the interrupt could instead indicate a fatal error.

Multiple Virtual Storage, more commonly called MVS, is the most commonly used operating system on the System/370, System/390 and IBM Z IBM mainframe computers. IBM developed MVS, along with OS/VS1 and SVS, as a successor to OS/360. It is unrelated to IBM's other mainframe operating system lines, e.g., VSE, VM, TPF.

An operating system (OS) is system software that manages computer hardware and software resources, and provides common services for computer programs.

The IBM System/360 (S/360) is a family of mainframe computer systems that was announced by IBM on April 7, 1964, and delivered between 1965 and 1978. It was the first family of computers designed to cover both commercial and scientific applications and a complete range of applications from small to large. The design distinguished between architecture and implementation, allowing IBM to release a suite of compatible designs at different prices. All but the only partially compatible Model 44 and the most expensive systems use microcode to implement the instruction set, featuring 8-bit byte addressing and binary, decimal and hexadecimal floating-point calculations.

Computer operating systems (OSes) provide a set of functions needed and used by most application programs on a computer, and the links needed to control and synchronize computer hardware. On the first computers, with no operating system, every program needed the full hardware specification to run correctly and perform standard tasks, and its own drivers for peripheral devices like printers and punched paper card readers. The growing complexity of hardware and application programs eventually made operating systems a necessity for everyday use.

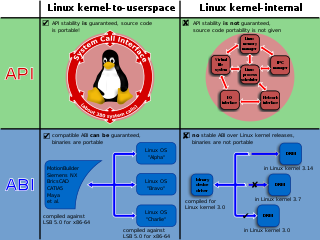

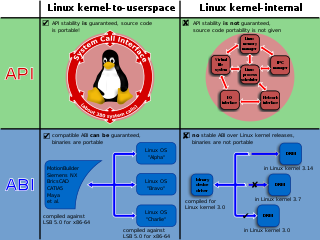

In computing, a system call is the programmatic way in which a computer program requests a service from the operating system on which it is executed. This may include hardware-related services, creation and execution of new processes, and communication with integral kernel services such as process scheduling. System calls provide an essential interface between a process and the operating system.

CP/CMS is a discontinued time-sharing operating system of the late 1960s and early 1970s, known for its excellent performance and advanced features. Among its three versions, CP-40/CMS was an important "one-off" research system that established the CP/CMS virtual machine architecture. It was followed by CP-67/CMS, a reimplementation of CP-40/CMS for the IBM System/360-67, and the primary focus of this article. Finally, CP-370/CMS was a reimplementation of CP-67/CMS for the System/370. While it was never released as such, it became the foundation of IBM's VM/370 operating system, announced in 1972.

BIOS implementations provide interrupts that can be invoked by operating systems and application programs to use the facilities of the firmware on IBM PC compatible computers. Traditionally, BIOS calls are mainly used by DOS programs and some other software such as boot loaders. BIOS runs in the real address mode of the x86 CPU, so programs that call BIOS either must also run in real mode or must switch from protected mode to real mode before calling BIOS and then switching back again. For this reason, modern operating systems that use the CPU in Protected mode or Long mode generally do not use the BIOS interrupt calls to support system functions, although they use the BIOS interrupt calls to probe and initialize hardware during booting. Real mode has the 1MB memory limitation, modern boot loaders use the unreal mode or protected mode to access up to 4GB memory.

A hypervisor is a type of computer software, firmware or hardware that creates and runs virtual machines. A computer on which a hypervisor runs one or more virtual machines is called a host machine, and each virtual machine is called a guest machine. The hypervisor presents the guest operating systems with a virtual operating platform and manages the execution of the guest operating systems. Unlike an emulator, the guest executes most instructions on the native hardware. Multiple instances of a variety of operating systems may share the virtualized hardware resources: for example, Linux, Windows, and macOS instances can all run on a single physical x86 machine. This contrasts with operating-system–level virtualization, where all instances must share a single kernel, though the guest operating systems can differ in user space, such as different Linux distributions with the same kernel.

In software engineering, profiling is a form of dynamic program analysis that measures, for example, the space (memory) or time complexity of a program, the usage of particular instructions, or the frequency and duration of function calls. Most commonly, profiling information serves to aid program optimization, and more specifically, performance engineering.

In computing, channel I/O is a high-performance input/output (I/O) architecture that is implemented in various forms on a number of computer architectures, especially on mainframe computers. In the past, channels were generally implemented with custom devices, variously named channel, I/O processor, I/O controller, I/O synchronizer, or DMA controller.

An instruction set simulator (ISS) is a simulation model, usually coded in a high-level programming language, which mimics the behavior of a mainframe or microprocessor by "reading" instructions and maintaining internal variables which represent the processor's registers.

The following is a timeline of virtualization development. In computing, virtualization is the use of a computer to simulate another computer. Through virtualization, a host simulates a guest by exposing virtual hardware devices, which may be done through software or by allowing access to a physical device connected to the machine.

In computer science, full virtualization (fv) is a modern virtualization technique developed in late 1990s. It is different from simulation and emulation. Virtualization employs techniques that can create instances of a virtual environment, as opposed to simulation, which models the environment; and emulation, which replicates the target environment with certain kinds of virtual environments called emulation environments for virtual machines. Full virtualization requires that every salient feature of the hardware be reflected into one of several virtual machines – including the full instruction set, input/output operations, interrupts, memory access, and whatever other elements are used by the software that runs on the bare machine, and that is intended to run in a virtual machine. In such an environment, any software capable of execution on the raw hardware can be run in the virtual machine and, in particular, any operating systems. The obvious test of full virtualization is whether an operating system intended for stand-alone use can successfully run inside a virtual machine.

The history of IBM mainframe operating systems is significant within the history of mainframe operating systems, because of IBM's long-standing position as the world's largest hardware supplier of mainframe computers. IBM mainframes run operating systems supplied by IBM and by third parties.

OS/360, officially known as IBM System/360 Operating System, is a discontinued batch processing operating system developed by IBM for their then-new System/360 mainframe computer, announced in 1964; it was influenced by the earlier IBSYS/IBJOB and Input/Output Control System (IOCS) packages for the IBM 7090/7094 and even more so by the PR155 Operating System for the IBM 1410/7010 processors. It was one of the earliest operating systems to require the computer hardware to include at least one direct access storage device.

The IBM Administrative Terminal System, also known as ATS/360, provided text- and data-management tools for working with documents to users of IBM System/360 systems.

The Input/Output Supervisor (IOS) is that portion of the control program in the IBM mainframe OS/360 and successors operating systems which issues the privileged I/O instructions and supervises the resulting I/O interruptions for any program which requests I/O device operations until the normal or abnormal conclusion of those operations.