Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory. NUMA is beneficial for workloads with high memory locality of reference and low lock contention, because a processor may operate on a subset of memory mostly or entirely within its own cache node, reducing traffic on the memory bus.

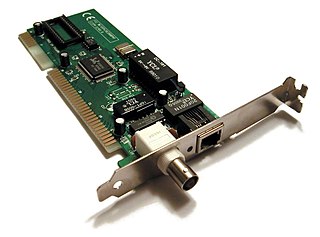

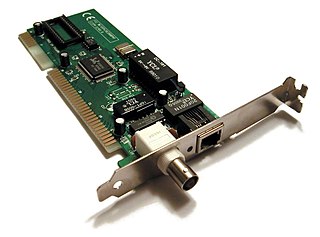

A network interface controller is a computer hardware component that connects a computer to a computer network.

Unix-like operating systems identify a user by a value called a user identifier, often abbreviated to user ID or UID. The UID, along with the group identifier (GID) and other access control criteria, is used to determine which system resources a user can access. The password file maps textual user names to UIDs. UIDs are stored in the inodes of the Unix file system, running processes, tar archives, and the now-obsolete Network Information Service. In POSIX-compliant environments, the shell command id gives the current user's UID, as well as more information such as the user name, primary user group and group identifier (GID).

The Direct Rendering Manager (DRM) is a subsystem of the Linux kernel responsible for interfacing with GPUs of modern video cards. DRM exposes an API that user-space programs can use to send commands and data to the GPU and perform operations such as configuring the mode setting of the display. DRM was first developed as the kernel-space component of the X Server Direct Rendering Infrastructure, but since then it has been used by other graphic stack alternatives such as Wayland and standalone applications and libraries such as SDL2 and Kodi.

The proc filesystem (procfs) is a special filesystem in Unix-like operating systems that presents information about processes and other system information in a hierarchical file-like structure, providing a more convenient and standardized method for dynamically accessing process data held in the kernel than traditional tracing methods or direct access to kernel memory. Typically, it is mapped to a mount point named /proc at boot time. The proc file system acts as an interface to internal data structures about running processes in the kernel. In Linux, it can also be used to obtain information about the kernel and to change certain kernel parameters at runtime (sysctl).

seccomp is a computer security facility in the Linux kernel. seccomp allows a process to make a one-way transition into a "secure" state where it cannot make any system calls except exit , sigreturn , read and write to already-open file descriptors. Should it attempt any other system calls, the kernel will either just log the event or terminate the process with SIGKILL or SIGSYS. In this sense, it does not virtualize the system's resources but isolates the process from them entirely.

OS-level virtualization is an operating system (OS) virtualization paradigm in which the kernel allows the existence of multiple isolated user space instances, including containers, zones, virtual private servers (OpenVZ), partitions, virtual environments (VEs), virtual kernels, and jails. Such instances may look like real computers from the point of view of programs running in them. A computer program running on an ordinary operating system can see all resources of that computer. Programs running inside a container can only see the container's contents and devices assigned to the container.

Out of memory (OOM) is an often undesired state of computer operation where no additional memory can be allocated for use by programs or the operating system. Such a system will be unable to load any additional programs, and since many programs may load additional data into memory during execution, these will cease to function correctly. This usually occurs because all available memory, including disk swap space, has been allocated.Roberto ramirez orozco

OpenVZ is an operating-system-level virtualization technology for Linux. It allows a physical server to run multiple isolated operating system instances, called containers, virtual private servers (VPSs), or virtual environments (VEs). OpenVZ is similar to Solaris Containers and LXC.

In computing, virtualization is the use of a computer to simulate another computer. The following is a chronological list of virtualization technologies.

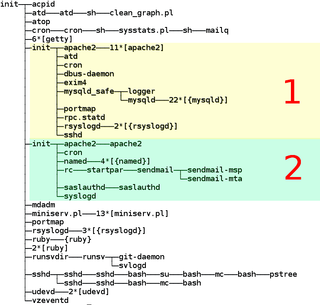

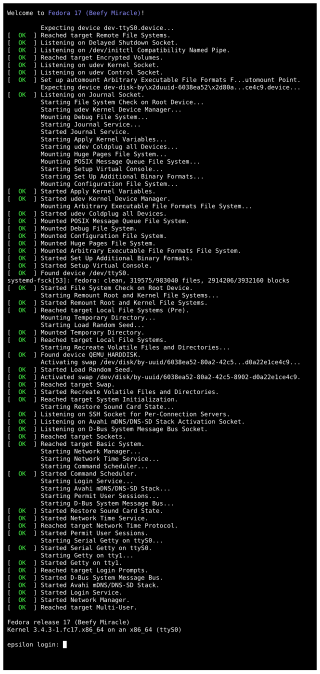

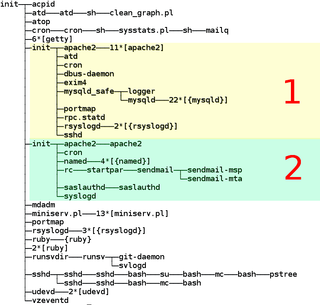

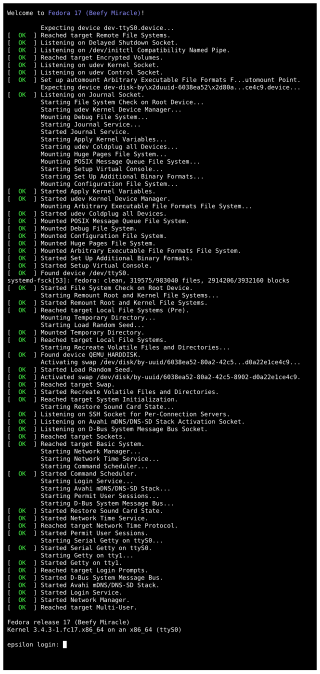

The Linux booting process involves multiple stages and is in many ways similar to the BSD and other Unix-style boot processes, from which it derives. Although the Linux booting process depends very much on the computer architecture, those architectures share similar stages and software components, including system startup, bootloader execution, loading and startup of a Linux kernel image, and execution of various startup scripts and daemons. Those are grouped into 4 steps: system startup, bootloader stage, kernel stage, and init process.

The Linux kernel is a free and open source, Unix-like kernel that is used in many computer systems worldwide. The kernel was created by Linus Torvalds in 1991 and was soon adopted as the kernel for the GNU operating system (OS) which was created to be a free replacement for Unix. Since the late 1990s, it has been included in many operating system distributions, many of which are called Linux. One such Linux kernel operating system is Android which is used in many mobile and embedded devices.

Readahead is a system call of the Linux kernel that loads a file's contents into the page cache. This prefetches the file so that when it is subsequently accessed, its contents are read from the main memory (RAM) rather than from a hard disk drive (HDD), resulting in much lower file access latencies.

Linux Containers (LXC) is an operating system-level virtualization method for running multiple isolated Linux systems (containers) on a control host using a single Linux kernel.

systemd is a software suite that provides an array of system components for Linux operating systems. The main aim is to unify service configuration and behavior across Linux distributions. Its primary component is a "system and service manager" — an init system used to bootstrap user space and manage user processes. It also provides replacements for various daemons and utilities, including device management, login management, network connection management, and event logging. The name systemd adheres to the Unix convention of naming daemons by appending the letter d. It also plays on the term "System D", which refers to a person's ability to adapt quickly and improvise to solve problems.

Docker is a set of platform as a service (PaaS) products that use OS-level virtualization to deliver software in packages called containers. The service has both free and premium tiers. The software that hosts the containers is called Docker Engine. It was first released in 2013 and is developed by Docker, Inc.

zswap is a Linux kernel feature that provides a compressed write-back cache for swapped pages, as a form of virtual memory compression. Instead of moving memory pages to a swap device when they are to be swapped out, zswap performs their compression and then stores them into a memory pool dynamically allocated in the system RAM. Later writeback to the actual swap device is deferred or even completely avoided, resulting in a significantly reduced I/O for Linux systems that require swapping; the tradeoff is the need for additional CPU cycles to perform the compression.

Namespaces are a feature of the Linux kernel that partition kernel resources such that one set of processes sees one set of resources, while another set of processes sees a different set of resources. The feature works by having the same namespace for a set of resources and processes, but those namespaces refer to distinct resources. Resources may exist in multiple namespaces. Examples of such resources are process IDs, host-names, user IDs, file names, some names associated with network access, and Inter-process communication.

In the Linux kernel, kernfs is a set of functions that contain the functionality required for creating the pseudo file systems used internally by various kernel subsystems so that they may use virtual files. For example, sysfs provides a set of virtual files by exporting information about hardware devices and associated device drivers from the kernel's device model to user space.

Container Linux is a discontinued open-source lightweight operating system based on the Linux kernel and designed for providing infrastructure for clustered deployments. One of its focuses was scalability. As an operating system, Container Linux provided only the minimal functionality required for deploying applications inside software containers, together with built-in mechanisms for service discovery and configuration sharing.