This article needs additional citations for verification .(December 2014) |

The NOOP scheduler is the simplest I/O scheduler for the Linux kernel. This scheduler was developed by Jens Axboe.

This article needs additional citations for verification .(December 2014) |

The NOOP scheduler is the simplest I/O scheduler for the Linux kernel. This scheduler was developed by Jens Axboe.

The NOOP scheduler inserts all incoming I/O requests into a simple FIFO queue and implements request merging. This scheduler is useful when it has been determined that the host should not attempt to re-order requests based on the sector numbers contained therein. In other words, the scheduler assumes that the host is unaware of how to productively re-order requests.

There are (generally) three basic situations where this situation is desirable:

However, NOOP is not necessarily the preferred I/O scheduler for the above scenarios. Typical to performance tuning, all guidance shall be based on observed work load patterns (undermining one's ability to create simplistic rules of thumb). If there is contention for available I/O bandwidth from other applications, it is still possible that other schedulers will generate better performance by virtue of more intelligently carving up that bandwidth for the applications deemed most important. For example, running an LDAP directory server may benefit from deadline's read preference and latency guarantees. At the same time, a user with a desktop system running many different applications may want to have access to CFQ's tunables or its ability to prioritize bandwidth for particular applications over others (ionice).

If there is no contention between applications, then there are little to no benefits from selecting a scheduler for the above-listed three scenarios. This is due to a resulting inability to deprioritize one workload's operations in a way that makes additional capacity available to another workload. In other words, if the I/O paths are not saturated and the requests for all the workloads fail to cause an unreasonable shifting around of drive heads (which the operating system is aware of), the benefit of prioritizing one workload may create a situation where CPU time spent scheduling I/O is wasted instead of providing desired benefits.

The Linux kernel also exposes the nomerges sysfs parameter as a scheduler-agnostic configuration, making it possible for the block layer's requests merging logic to be disabled either entirely, or only for more complex merging attempts. [2] This reduces the need for the NOOP scheduler as the overhead of most I/O schedulers is associated with their attempts to locate adjacent sectors in the request queue in order to merge them. However, most I/O workloads benefit from a certain level of requests merging, even on fast low-latency storage such as SSDs. [3] [4]

A network interface controller is a computer hardware component that connects a computer to a computer network.

In computer operating systems, memory paging is a memory management scheme by which a computer stores and retrieves data from secondary storage for use in main memory. In this scheme, the operating system retrieves data from secondary storage in same-size blocks called pages. Paging is an important part of virtual memory implementations in modern operating systems, using secondary storage to let programs exceed the size of available physical memory.

Completely Fair Queuing (CFQ) is an I/O scheduler for the Linux kernel which was written in 2003 by Jens Axboe.

Ingo Molnár, employed by Red Hat as of May 2013, is a Hungarian Linux hacker. He is best known for his contributions to the operating system in terms of security and performance.

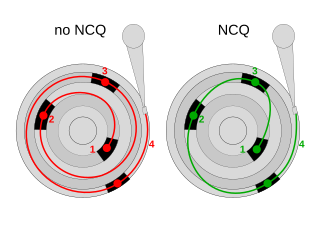

In computing, Native Command Queuing (NCQ) is an extension of the Serial ATA protocol allowing hard disk drives to internally optimize the order in which received read and write commands are executed. This can reduce the amount of unnecessary drive head movement, resulting in increased performance for workloads where multiple simultaneous read/write requests are outstanding, most often occurring in server-type applications.

OS-level virtualization is an operating system paradigm in which the kernel allows the existence of multiple isolated user space instances. Such instances, called containers, Zones, virtual private servers (OpenVZ), partitions, virtual environments (VEs), virtual kernels, or jails, may look like real computers from the point of view of programs running in them. A computer program running on an ordinary operating system can see all resources of that computer. However, programs running inside of a container can only see the container's contents and devices assigned to the container.

Anticipatory scheduling is an algorithm for scheduling hard disk input/output. It seeks to increase the efficiency of disk utilization by "anticipating" future synchronous read operations.

A solid-state drive (SSD) is a solid-state storage device that uses integrated circuit assemblies to store data persistently, typically using flash memory, and functioning as secondary storage in the hierarchy of computer storage. It is also sometimes called a solid-state device or a solid-state disk, even though SSDs lack the physical spinning disks and movable read–write heads used in hard disk drives (HDDs) and floppy disks.

In computer storage, disk buffer is the embedded memory in a hard disk drive (HDD) acting as a buffer between the rest of the computer and the physical hard disk platter that is used for storage. Modern hard disk drives come with 8 to 256 MiB of such memory, and solid-state drives come with up to 4 GB of cache memory.

Jens Axboe is a Linux kernel hacker.

Input/output (I/O) scheduling is the method that computer operating systems use to decide in which order the block I/O operations will be submitted to storage volumes. I/O scheduling is sometimes called disk scheduling.

The deadline scheduler is an I/O scheduler for the Linux kernel which was written in 2002 by Jens Axboe.

A trim command allows an operating system to inform a solid-state drive (SSD) which blocks of data are no longer considered to be 'in use' and therefore can be erased internally.

cgroups is a Linux kernel feature that limits, accounts for, and isolates the resource usage of a collection of processes.

NVM Express (NVMe) or Non-Volatile Memory Host Controller Interface Specification (NVMHCIS) is an open, logical-device interface specification for accessing a computer's non-volatile storage media usually attached via PCI Express (PCIe) bus. The acronym NVM stands for non-volatile memory, which is often NAND flash memory that comes in several physical form factors, including solid-state drives (SSDs), PCI Express (PCIe) add-in cards, and M.2 cards, the successor to mSATA cards. NVM Express, as a logical-device interface, has been designed to capitalize on the low latency and internal parallelism of solid-state storage devices.

Device Mapper Multipath Input Output often shortened to DM-Multipathing and abbreviated as DM-MPIO provides input-output (I/O) fail-over and load-balancing by using multipath I/O within Linux for block devices. By utilizing device-mapper, the multipathd daemon provides the host-side logic to use multiple paths of a redundant network to provide continuous availability and higher-bandwidth connectivity between the host server and the block-level device. DM-MPIO handles the rerouting of block I/O to an alternate path in the event of a path failure. DM-MPIO can also balance the I/O load across all of the available paths that are typically utilized in Fibre Channel (FC) and iSCSI SAN environments. DM-MPIO is based on the device mapper, which provides the basic framework that maps one block device onto another.

A network scheduler, also called packet scheduler, queueing discipline (qdisc) or queueing algorithm, is an arbiter on a node in packet switching communication network. It manages the sequence of network packets in the transmit and receive queues of the protocol stack and network interface controller. There are several network schedulers available for the different operating systems, that implement many of the existing network scheduling algorithms.

bcache is a cache in the Linux kernel's block layer, which is used for accessing secondary storage devices. It allows one or more fast storage devices, such as flash-based solid-state drives (SSDs), to act as a cache for one or more slower storage devices, such as hard disk drives (HDDs); this effectively creates hybrid volumes and provides performance improvements.

dm-cache is a component of the Linux kernel's device mapper, which is a framework for mapping block devices onto higher-level virtual block devices. It allows one or more fast storage devices, such as flash-based solid-state drives (SSDs), to act as a cache for one or more slower storage devices such as hard disk drives (HDDs); this effectively creates hybrid volumes and provides secondary storage performance improvements.

An open-channel solid state drive is a solid-state drive which does not have a firmware Flash Translation Layer implemented on the device, but instead leaves the management of the physical solid-state storage to the computer's operating system. The Linux 4.4 kernel is an example of an operating system kernel that supports open-channel SSDs which follow the NVM Express specification. The interface used by the operating system to access open-channel solid state drives is called LightNVM.