Related Research Articles

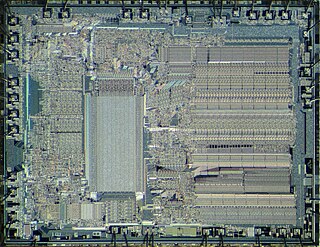

A central processing unit (CPU), also called a central processor or main processor, is the electronic circuitry within a computer that executes instructions that make up a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions. The computer industry used the term "central processing unit" as early as 1955. Traditionally, the term "CPU" refers to a processor, more specifically to its processing unit and control unit (CU), distinguishing these core elements of a computer from external components such as main memory and I/O circuitry.

The control unit (CU) is a component of a computer's central processing unit (CPU) that directs the operation of the processor. It tells the computer's memory, arithmetic and logic unit and input and output devices how to respond to the instructions that have been sent to the processor.

An instruction set architecture (ISA) is an abstract model of a computer. It is also referred to as architecture or computer architecture. A realization of an ISA, such as a central processing unit (CPU), is called an implementation.

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set containing instructions that operate on one-dimensional arrays of data called vectors, compared to the scalar processors, whose instructions operate on single data items. Vector processors can greatly improve performance on certain workloads, notably numerical simulation and similar tasks. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to the vector supercomputer's demise in the later 1990s.

In computer science, instruction pipelining is a technique for implementing instruction-level parallelism within a single processor. Pipelining attempts to keep every part of the processor busy with some instruction by dividing incoming instructions into a series of sequential steps performed by different processor units with different parts of instructions processed in parallel.

Tomasulo’s algorithm is a computer architecture hardware algorithm for dynamic scheduling of instructions that allows out-of-order execution and enables more efficient use of multiple execution units. It was developed by Robert Tomasulo at IBM in 1967 and was first implemented in the IBM System/360 Model 91’s floating point unit.

In the domain of central processing unit (CPU) design, hazards are problems with the instruction pipeline in CPU microarchitectures when the next instruction cannot execute in the following clock cycle, and can potentially lead to incorrect computation results. Three common types of hazards are data hazards, structural hazards, and control hazards.

In the history of computer hardware, some early reduced instruction set computer central processing units used a very similar architectural solution, now called a classic RISC pipeline. Those CPUs were: MIPS, SPARC, Motorola 88000, and later the notional CPU DLX invented for education.

In computer architecture, register renaming is a technique that abstracts logical registers from physical registers. Every logical register has a set of physical registers associated with it. While a programmer in assembly language refers for instance to a logical register accu, the processor transposes this name to one specific physical register on the fly. The physical registers are opaque and cannot be referenced directly but only via the canonical names.

The DLX is a RISC processor architecture designed by John L. Hennessy and David A. Patterson, the principal designers of the Stanford MIPS and the Berkeley RISC designs (respectively), the two benchmark examples of RISC design.

In computer science, computer engineering and programming language implementations, a stack machine is a type of computer. In some cases, the term refers to a software scheme that simulates a stack machine.

The instruction cycle is the cycle which the central processing unit (CPU) follows from boot-up until the computer has shut down in order to process instructions. It is composed of three main stages: the fetch stage, the decode stage, and the execute stage.

Addressing modes are an aspect of the instruction set architecture in most central processing unit (CPU) designs. The various addressing modes that are defined in a given instruction set architecture define how the machine language instructions in that architecture identify the operand(s) of each instruction. An addressing mode specifies how to calculate the effective memory address of an operand by using information held in registers and/or constants contained within a machine instruction or elsewhere.

The Intel 8087, announced in 1980, was the first x87 floating-point coprocessor for the 8086 line of microprocessors.

The CDC STAR-100 is a vector supercomputer that was designed, manufactured, and marketed by Control Data Corporation (CDC). It was one of the first machines to use a vector processor to improve performance on appropriate scientific applications. It was also the first supercomputer to use integrated circuits and the first to be equipped with one million words of computer memory.

In computing, a pipeline, also known as a data pipeline, is a set of data processing elements connected in series, where the output of one element is the input of the next one. The elements of a pipeline are often executed in parallel or in time-sliced fashion. Some amount of buffer storage is often inserted between elements.

In computer engineering, microarchitecture, also called computer organization and sometimes abbreviated as µarch or uarch, is the way a given instruction set architecture (ISA) is implemented in a particular processor. A given ISA may be implemented with different microarchitectures; implementations may vary due to different goals of a given design or due to shifts in technology.

In computer architecture, a transport triggered architecture (TTA) is a kind of processor design in which programs directly control the internal transport buses of a processor. Computation happens as a side effect of data transports: writing data into a triggering port of a functional unit triggers the functional unit to start a computation. This is similar to what happens in a systolic array. Due to its modular structure, TTA is an ideal processor template for application-specific instruction-set processors (ASIP) with customized datapath but without the inflexibility and design cost of fixed function hardware accelerators.

The Mill architecture is a novel belt machine-based computer architecture for general-purpose computing. It has been under development since about 2003 by Ivan Godard and his startup Mill Computing, Inc., formerly named Out Of The Box Computing, in East Palo Alto, California. Mill Computing claims it has a "10x single-thread power/performance gain over conventional out-of-order superscalar architectures" but "runs the same programs, without rewrite".

A Load-Hit-Store, sometimes abbreviated as LHS, is a data dependency in a CPU in which a memory location that has just been the target of a store operation is loaded from. The CPU may then need to wait until the store finishes, so that the correct value can be retrieved. This involves e.g. a L1 cache roundtrip, during which most or all of the pipeline will be stalled, causing a significant decrease in performance. For example, (C/C++):

References

- ↑ "CMSC 411 Lecture 19, Pipelining Data Forwarding". University of Maryland Baltimore County Computer Science and Electrical Engineering Department. Retrieved 2020-01-22.

- ↑ "High performance computing, Notes of class 11". hpc.serc.iisc.ernet.in. September 2000. Archived from the original on 2013-12-27. Retrieved 2014-02-08.