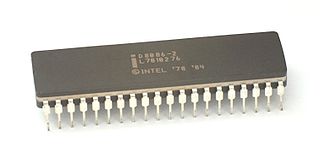

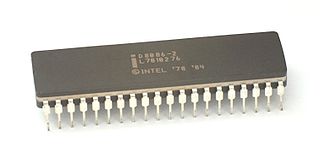

x86 is a family of complex instruction set computer (CISC) instruction set architectures initially developed by Intel based on the Intel 8086 microprocessor and its 8088 variant. The 8086 was introduced in 1978 as a fully 16-bit extension of Intel's 8-bit 8080 microprocessor, with memory segmentation as a solution for addressing more memory than can be covered by a plain 16-bit address. The term "x86" came into being because the names of several successors to Intel's 8086 processor end in "86", including the 80186, 80286, 80386 and 80486 processors. Colloquially, their names were "186", "286", "386" and "486".

SPARC is a reduced instruction set computer (RISC) instruction set architecture originally developed by Sun Microsystems. Its design was strongly influenced by the experimental Berkeley RISC system developed in the early 1980s. First developed in 1986 and released in 1987, SPARC was one of the most successful early commercial RISC systems, and its success led to the introduction of similar RISC designs from many vendors through the 1980s and 1990s.

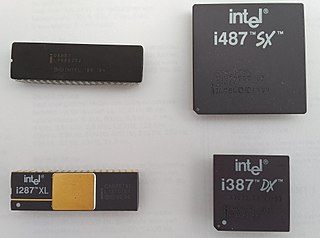

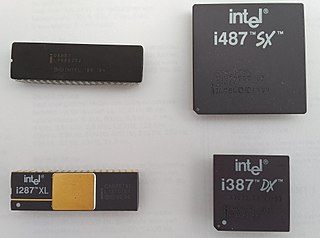

A floating-point unit is a part of a computer system specially designed to carry out operations on floating-point numbers. Typical operations are addition, subtraction, multiplication, division, and square root. Some FPUs can also perform various transcendental functions such as exponential or trigonometric calculations, but the accuracy can be low, so some systems prefer to compute these functions in software.

In computing, endianness is the order in which bytes within a word of digital data are transmitted over a data communication medium or addressed in computer memory, counting only byte significance compared to earliness. Endianness is primarily expressed as big-endian (BE) or little-endian (LE), terms introduced by Danny Cohen into computer science for data ordering in an Internet Experiment Note published in 1980. The adjective endian has its origin in the writings of 18th century Anglo-Irish writer Jonathan Swift. In the 1726 novel Gulliver's Travels, he portrays the conflict between sects of Lilliputians divided into those breaking the shell of a boiled egg from the big end or from the little end. By analogy, a CPU may read a digital word big end first, or little end first.

Single instruction, multiple data (SIMD) is a type of parallel processing in Flynn's taxonomy. SIMD can be internal and it can be directly accessible through an instruction set architecture (ISA), but it should not be confused with an ISA. SIMD describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously.

AltiVec is a single-precision floating point and integer SIMD instruction set designed and owned by Apple, IBM, and Freescale Semiconductor — the AIM alliance. It is implemented on versions of the PowerPC processor architecture, including Motorola's G4, IBM's G5 and POWER6 processors, and P.A. Semi's PWRficient PA6T. AltiVec is a trademark owned solely by Freescale, so the system is also referred to as Velocity Engine by Apple and VMX by IBM and P.A. Semi.

In computing, Streaming SIMD Extensions (SSE) is a single instruction, multiple data (SIMD) instruction set extension to the x86 architecture, designed by Intel and introduced in 1999 in their Pentium III series of central processing units (CPUs) shortly after the appearance of Advanced Micro Devices (AMD's) 3DNow!. SSE contains 70 new instructions, most of which work on single precision floating-point data. SIMD instructions can greatly increase performance when exactly the same operations are to be performed on multiple data objects. Typical applications are digital signal processing and graphics processing.

In computer architecture, 64-bit integers, memory addresses, or other data units are those that are 64 bits wide. Also, 64-bit central processing units (CPU) and arithmetic logic units (ALU) are those that are based on processor registers, address buses, or data buses of that size. A computer that uses such a processor is a 64-bit computer.

x86 assembly language is the name for the family of assembly languages which provide some level of backward compatibility with CPUs back to the Intel 8008 microprocessor, which was launched in April 1972. It is used to produce object code for the x86 class of processors.

In computing, especially digital signal processing, the multiply–accumulate (MAC) or multiply-add (MAD) operation is a common step that computes the product of two numbers and adds that product to an accumulator. The hardware unit that performs the operation is known as a multiplier–accumulator ; the operation itself is also often called a MAC or a MAD operation. The MAC operation modifies an accumulator a:

The Emotion Engine is a central processing unit developed and manufactured by Sony Computer Entertainment and Toshiba for use in the PlayStation 2 video game console. It was also used in early PlayStation 3 models sold in Japan and North America to provide PlayStation 2 game support. Mass production of the Emotion Engine began in 1999 and ended in late 2012 with the discontinuation of the PlayStation 2.

The POWER1 is a multi-chip CPU developed and fabricated by IBM that implemented the POWER instruction set architecture (ISA). It was originally known as the RISC System/6000 CPU or, when in an abbreviated form, the RS/6000 CPU, before introduction of successors required the original name to be replaced with one that used the same naming scheme (POWERn) as its successors in order to differentiate it from the newer designs.

In computing, a word is the natural unit of data used by a particular processor design. A word is a fixed-sized datum handled as a unit by the instruction set or the hardware of the processor. The number of bits or digits in a word is an important characteristic of any specific processor design or computer architecture.

In C and related programming languages, long double refers to a floating-point data type that is often more precise than double precision though the language standard only requires it to be at least as precise as double. As with C's other floating-point types, it may not necessarily map to an IEEE format.

Extended precision refers to floating-point number formats that provide greater precision than the basic floating-point formats. Extended precision formats support a basic format by minimizing roundoff and overflow errors in intermediate values of expressions on the base format. In contrast to extended precision, arbitrary-precision arithmetic refers to implementations of much larger numeric types using special software.

Advanced Vector Extensions are SIMD extensions to the x86 instruction set architecture for microprocessors from Intel and Advanced Micro Devices (AMD). They were proposed by Intel in March 2008 and first supported by Intel with the Sandy Bridge processor shipping in Q1 2011 and later by AMD with the Bulldozer processor shipping in Q3 2011. AVX provides new features, new instructions, and a new coding scheme.

In computing, quadruple precision is a binary floating-point–based computer number format that occupies 16 bytes with precision at least twice the 53-bit double precision.

In computer architecture, 256-bit integers, memory addresses, or other data units are those that are 256 bits wide. Also, 256-bit central processing unit (CPU) and arithmetic logic unit (ALU) architectures are those that are based on registers, address buses, or data buses of that size. There are currently no mainstream general-purpose processors built to operate on 256-bit integers or addresses, though a number of processors do operate on 256-bit data.

AVX-512 are 512-bit extensions to the 256-bit Advanced Vector Extensions SIMD instructions for x86 instruction set architecture (ISA) proposed by Intel in July 2013, and first implemented in the 2016 Intel Xeon Phi x200, and then later in a number of AMD and other Intel CPUs. AVX-512 consists of multiple extensions that may be implemented independently. This policy is a departure from the historical requirement of implementing the entire instruction block. Only the core extension AVX-512F is required by all AVX-512 implementations.

Power ISA is a reduced instruction set computer (RISC) instruction set architecture (ISA) currently developed by the OpenPOWER Foundation, led by IBM. It was originally developed by IBM and the now-defunct Power.org industry group. Power ISA is an evolution of the PowerPC ISA, created by the mergers of the core PowerPC ISA and the optional Book E for embedded applications. The merger of these two components in 2006 was led by Power.org founders IBM and Freescale Semiconductor.