Computer animation is the process used for digitally generating moving images. The more general term computer-generated imagery (CGI) encompasses both still images and moving images, while computer animation only refers to moving images. Modern computer animation usually uses 3D computer graphics.

Poser is a figure posing and rendering 3D computer graphics program distributed by Bondware. Poser is optimized for the 3D modeling of human figures. It enables beginners to produce basic animations and digital images, and an extensive range of third-party digital 3D models is available for it.

Motion capture is the process of recording the movement of objects or people. It has military, entertainment, sports, and medical applications, and is also used to validate computer vision and robots. In films, television shows and video games, motion capture refers to recording the actions of human actors and using that information to animate digital character models in 2D or 3D computer animation. When it includes the face and fingers or captures subtle expressions, it is often referred to as performance capture. In some fields, motion capture is also called motion tracking, but in filmmaking and games, motion tracking usually refers to match moving.
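One small, concrete piece of the capture-to-animation step can be sketched in code. Capture systems often record at a higher sample rate than the animation's frame rate (for example 120 Hz versus 24 fps), so a recorded channel is resampled before it drives a character. This is a minimal sketch under stated assumptions: the function name `resample` is hypothetical, and it assumes a single, uniformly sampled scalar channel such as one joint's X position.

```python
import math
from typing import List


def resample(samples: List[float], capture_hz: float, target_fps: float) -> List[float]:
    """Linearly resample one uniformly sampled scalar channel to a new rate.

    Hypothetical helper: assumes samples[k] was recorded at time k / capture_hz.
    """
    if len(samples) < 2:
        return list(samples)  # nothing to interpolate between
    duration = (len(samples) - 1) / capture_hz
    # Small epsilon guards against float round-off dropping the last frame.
    n_out = math.floor(duration * target_fps + 1e-9) + 1
    out = []
    for k in range(n_out):
        pos = k * capture_hz / target_fps  # fractional index into samples
        i = min(int(pos), len(samples) - 2)  # left neighbour, kept in range
        frac = pos - i
        out.append(samples[i] + frac * (samples[i + 1] - samples[i]))
    return out


# Example: 1 second of data captured at 10 Hz, resampled to 5 fps.
print(resample([float(i) for i in range(11)], 10.0, 5.0))
```

Linear interpolation suits scalar channels such as positions; joint rotations stored as quaternions are usually interpolated with spherical linear interpolation (slerp) instead.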

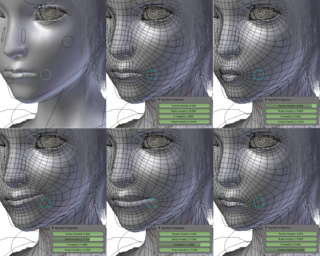

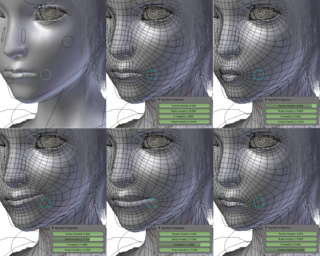

Computer facial animation is primarily an area of computer graphics that encapsulates methods and techniques for generating and animating images or models of a character's face. The character can be a human, a humanoid, an animal, a legendary creature or character, etc. Due to its subject and output type, it is also related to many other scientific and artistic fields, from psychology to traditional animation. The importance of human faces in verbal and non-verbal communication and advances in computer graphics hardware and software have caused considerable scientific, technological, and artistic interest in computer facial animation.

Facial motion capture is the process of electronically converting the movements of a person's face into a digital database using cameras or laser scanners. This database may then be used to produce computer graphics (CG), computer animation for movies, games, or real-time avatars. Because the motion of CG characters is derived from the movements of real people, it results in a more realistic and nuanced computer character animation than if the animation were created manually.
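How a stored facial-capture database can drive a CG face is illustrated below. The text does not name a method, so this sketch assumes one common approach, a linear blendshape model: each captured frame supplies weights for a set of sculpted target shapes, and each vertex is the neutral position plus the weighted sum of the targets' offsets. The shape names (`jaw_open`, `smile`), the data layout, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(neutral: List[Vec3],
                      deltas: Dict[str, List[Vec3]],
                      weights: Dict[str, float]) -> List[Vec3]:
    """Deform a mesh: vertex = neutral + sum(weight * delta) over all shapes.

    deltas[name][i] is the offset of vertex i for the fully applied shape.
    """
    result = []
    for i, (x, y, z) in enumerate(neutral):
        for name, w in weights.items():
            dx, dy, dz = deltas[name][i]
            x += w * dx
            y += w * dy
            z += w * dz
        result.append((x, y, z))
    return result


def animate(neutral: List[Vec3],
            deltas: Dict[str, List[Vec3]],
            frames: List[Dict[str, float]]) -> List[List[Vec3]]:
    """Produce one deformed mesh per captured frame of blendshape weights."""
    return [apply_blendshapes(neutral, deltas, w) for w in frames]


# Two-vertex toy face: "jaw_open" lowers vertex 1, "smile" shifts vertex 0.
neutral = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
deltas = {"jaw_open": [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)],
          "smile": [(0.5, 0.0, 0.0), (0.0, 0.0, 0.0)]}
print(apply_blendshapes(neutral, deltas, {"jaw_open": 0.5, "smile": 1.0}))
```

Because the capture data is reduced to a few weights per frame, the same recorded performance can in principle be replayed on any face that defines shapes with the same names.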

Digital puppetry is the manipulation and performance of digitally animated 2D or 3D figures and objects in a virtual environment, rendered in real time by computers. It is most commonly used in filmmaking and television production but has also been used in interactive theme park attractions and live theatre.

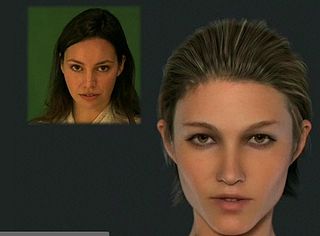

Human image synthesis is technology that can be applied to make believable and even photorealistic renditions of human likenesses, moving or still. It has effectively existed since the early 2000s. Many films using computer-generated imagery have featured synthetic images of human-like characters digitally composited onto real or other simulated film material. Since the late 2010s, deep learning has been applied to synthesize images and video that look like humans without the need for human assistance once the training phase is complete, whereas the older 7D route required massive amounts of human work.

Toon Boom Animation Inc., also known as Toon Boom, is a Canadian software company founded in 1994 and based in Montreal, Quebec. It specializes in the development and production of animation and storyboarding software for film, television, the World Wide Web, video games, mobile devices, training and education.


Image Metrics is a 3D facial animation and virtual try-on company headquartered in El Segundo, with offices in Las Vegas and research facilities in Manchester. The company makes the Live Driver and Portable You SDKs for software developers and provides facial animation software and services to the visual effects industry.

iClone is a real-time 3D animation and rendering software program. Real-time playback is enabled by using a 3D videogame engine for instant on-screen rendering.

Computer-generated imagery (CGI) is a specific technology or application of computer graphics for creating or improving images in art, printed media, simulators, videos, and video games. These images are either static or dynamic. CGI refers to both 2D and 3D computer graphics used to design characters, virtual worlds, scenes, and special effects. The application of CGI for creating or improving animations is called computer animation or CGI animation.

Reallusion is a developer of 2D and 3D character creation and animation software, with tools ranging from cartoon characters to digital humans and animation pipelines for film, real-time engines, video games, virtual production, and architectural visualization (archvis).

Mixamo Inc. is a 3D computer graphics technology company. Based in San Francisco, the company develops and sells web-based services for 3D character animation. Mixamo's technologies use machine learning methods to automate the steps of the character animation process, from 3D modeling to rigging and 3D animation.

Faceware Technologies is an American facial animation and motion capture development company. It was established under Image Metrics and became an independent company at the beginning of 2012.

Visage SDK is a multi-platform software development kit (SDK) created by Visage Technologies AB. Visage SDK allows software programmers to build facial motion capture and eye tracking applications.

Live2D is an animation technique for animating static images, usually anime-style characters, by separating an image into parts and animating each part accordingly, without the need for frame-by-frame animation or a 3D model. This lets characters move with 2.5D movement while maintaining the original illustration.
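The part-based idea can be illustrated with a deliberately simplified model: each cut-out part carries a small mesh plus rotation angles keyed at a few values of a control parameter, and posing the character means interpolating the keyed angle and rotating that part's vertices about a pivot. This is a hypothetical sketch of the general technique only; the `Part` structure, the parameter range, and the function names are assumptions and are not Live2D's actual API or file format.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass
class Part:
    """One cut-out layer of the illustration (hypothetical structure)."""
    name: str
    vertices: List[Point]   # mesh vertices covering the part's pixels
    pivot: Point            # point the part rotates around
    keys: Dict[float, float]  # parameter value -> rotation in degrees


def interpolate_key(keys: Dict[float, float], t: float) -> float:
    """Piecewise-linear interpolation between keyed values, clamped at the ends."""
    ks = sorted(keys)
    if t <= ks[0]:
        return keys[ks[0]]
    if t >= ks[-1]:
        return keys[ks[-1]]
    for lo, hi in zip(ks, ks[1:]):
        if lo <= t <= hi:
            f = (t - lo) / (hi - lo)
            return keys[lo] + f * (keys[hi] - keys[lo])
    raise ValueError("t not covered by keys")  # unreachable for sorted keys


def pose_part(part: Part, param: float) -> List[Point]:
    """Rotate the part's vertices about its pivot by the interpolated angle."""
    angle = math.radians(interpolate_key(part.keys, param))
    c, s = math.cos(angle), math.sin(angle)
    px, py = part.pivot
    return [(px + c * (x - px) - s * (y - py),
             py + s * (x - px) + c * (y - py)) for x, y in part.vertices]


# A head part that tilts between -15 and +15 degrees as the parameter goes -1..1.
head = Part("head", [(0.0, 2.0), (1.0, 2.0)], (0.5, 1.0),
            {-1.0: -15.0, 0.0: 0.0, 1.0: 15.0})
print(pose_part(head, 0.5))  # tilted by 7.5 degrees
```

Because every part moves by interpolating between a few authored keys, the animator adjusts parameters rather than redrawing frames, which is the advantage over frame-by-frame animation that the paragraph describes.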

A virtual human is a fictional character or human being simulated in software. Virtual humans have been created as tools and artificial companions in simulation, video games, film production, human factors, ergonomic and usability studies in various industries, the clothing industry, telecommunications (avatars), medicine, and other fields. These applications require domain-dependent simulation fidelity: a medical application might require an exact simulation of specific internal organs; the film industry requires the highest aesthetic standards, natural movements, and facial expressions; and ergonomic studies require faithful body proportions for a particular population segment and realistic locomotion with constraints.

An Aniform is a two-dimensional cartoon character operated like a puppet and displayed to live audiences or in visual media. The concept was invented by Morey Bunin, a puppeteer who had worked with string marionettes and hand puppets, together with his wife, Charlotte. The distinctive feature of an Aniforms character is that it displays a physical form that appears "animated" on a real or simulated television screen. The technique was used in television production.