In mathematical analysis, the Dirac delta function, also known as the unit impulse, is a generalized function on the real numbers, whose value is zero everywhere except at zero, and whose integral over the entire real line is equal to one. Since there is no function having this property, modelling the delta "function" rigorously involves the use of limits or, as is common in mathematics, measure theory and the theory of distributions.
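The defining properties described above can be written out as follows. This is a sketch of the standard heuristic statement, not a rigorous definition; the test function $f$ and the Gaussian width $\varepsilon$ are symbols introduced here for illustration.

$$
\int_{-\infty}^{\infty} \delta(x)\,dx = 1,
\qquad
\int_{-\infty}^{\infty} f(x)\,\delta(x)\,dx = f(0),
\qquad
\delta(x) = \lim_{\varepsilon \to 0^{+}} \frac{1}{\sqrt{2\pi}\,\varepsilon}\, e^{-x^{2}/(2\varepsilon^{2})}
$$

The limit is understood in the sense of distributions: it holds after integrating against a continuous test function $f$, not pointwise.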

In mathematics, the Wiener process is a real-valued continuous-time stochastic process named in honor of American mathematician Norbert Wiener for his investigations on the mathematical properties of the one-dimensional Brownian motion. It is often also called Brownian motion due to its historical connection with the physical process of the same name originally observed by Scottish botanist Robert Brown. It is one of the best known Lévy processes and occurs frequently in pure and applied mathematics, economics, quantitative finance, evolutionary biology, and physics.
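A discretized sample path can be sketched by summing independent Gaussian increments, using the standard fact that the increment of a Wiener process over a time step `dt` is normal with mean 0 and variance `dt`. The function name `simulate_wiener`, the step count, and the seed below are illustrative assumptions, and the grid approximation only samples the process at finitely many times.

```python
import math
import random


def simulate_wiener(t_end: float, n_steps: int, rng: random.Random) -> list[float]:
    """Return samples W(0), W(dt), ..., W(t_end) of a standard Wiener process."""
    dt = t_end / n_steps
    path = [0.0]  # a standard Wiener process starts at 0
    for _ in range(n_steps):
        # Independent Gaussian increment with mean 0 and variance dt.
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return path


rng = random.Random(0)  # fixed seed, chosen only for reproducibility
path = simulate_wiener(1.0, 100, rng)

# Empirical check: W(1) should have mean close to 0 and variance close to 1.
endpoints = [simulate_wiener(1.0, 100, rng)[-1] for _ in range(2000)]
sample_mean = sum(endpoints) / len(endpoints)
sample_var = sum((w - sample_mean) ** 2 for w in endpoints) / (len(endpoints) - 1)
```

The sample mean and variance of the endpoints should approach 0 and `t_end` respectively as the number of simulated paths grows.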

Noether's theorem states that every continuous symmetry of the action of a physical system with conservative forces has a corresponding conservation law. This is the first of two theorems proven by mathematician Emmy Noether in 1915 and published in 1918. The action of a physical system is the integral over time of a Lagrangian function, from which the system's behavior can be determined by the principle of least action. This theorem only applies to continuous and smooth symmetries of physical space.

The calculus of variations is a field of mathematical analysis that uses variations, which are small changes in functions and functionals, to find maxima and minima of functionals: mappings from a set of functions to the real numbers. Functionals are often expressed as definite integrals involving functions and their derivatives. Functions that maximize or minimize functionals may be found using the Euler–Lagrange equation of the calculus of variations.
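As an illustration of the integral form and the Euler–Lagrange equation mentioned above, consider a functional $J$ of a function $y(x)$ on an interval $[a, b]$. The symbols $J$, $L$, $y$, $a$ and $b$ are notation assumed here, and $L$ and $y$ are taken to be sufficiently smooth.

$$
J[y] = \int_{a}^{b} L\bigl(x, y(x), y'(x)\bigr)\,dx,
\qquad
\frac{\partial L}{\partial y} - \frac{d}{dx}\,\frac{\partial L}{\partial y'} = 0
$$

The second equation is a necessary condition for $y$ to be a stationary point of $J$ among functions with fixed endpoint values; it does not by itself distinguish maxima from minima.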

In the calculus of variations, a field of mathematical analysis, the functional derivative relates a change in a functional to a change in a function on which the functional depends.
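One common way to make this precise, sketched here with assumed notation ($F$ for the functional, $\rho$ for its argument, $\phi$ for an arbitrary test function), defines the functional derivative $\delta F/\delta\rho$ through the first-order change of $F$:

$$
\int \frac{\delta F}{\delta \rho}(x)\,\phi(x)\,dx
= \left.\frac{d}{d\varepsilon}\, F[\rho + \varepsilon\phi]\,\right|_{\varepsilon = 0}
$$

For example, if $F[\rho] = \int \rho(x)^{2}\,dx$, the right-hand side equals $\int 2\rho(x)\,\phi(x)\,dx$, so $\delta F/\delta\rho = 2\rho$.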

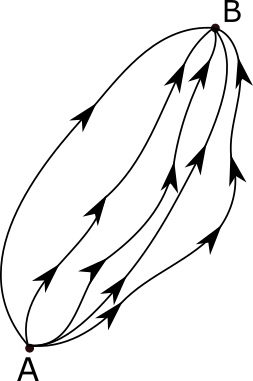

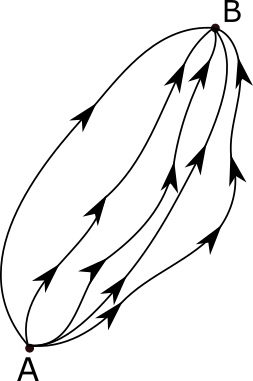

The path integral formulation is a description in quantum mechanics that generalizes the stationary action principle of classical mechanics. It replaces the classical notion of a single, unique classical trajectory for a system with a sum, or functional integral, over an infinity of quantum-mechanically possible trajectories to compute a quantum amplitude.

In probability theory, the Borel–Kolmogorov paradox is a paradox relating to conditional probability with respect to an event of probability zero. It is named after Émile Borel and Andrey Kolmogorov.

In mathematics, specifically the study of differential equations, the Picard–Lindelöf theorem gives a set of conditions under which an initial value problem has a unique solution. It is also known as Picard's existence theorem, the Cauchy–Lipschitz theorem, or the existence and uniqueness theorem.
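In a commonly stated form, sketched here with assumed notation ($f$, $t_0$, $y_0$, $K$, $\varepsilon$), the theorem concerns the initial value problem

$$
y'(t) = f\bigl(t, y(t)\bigr), \qquad y(t_0) = y_0 .
$$

If $f$ is continuous in $t$ and Lipschitz continuous in $y$ on a rectangle around $(t_0, y_0)$, meaning

$$
\lvert f(t, y_1) - f(t, y_2) \rvert \le K\,\lvert y_1 - y_2 \rvert
$$

for some constant $K$, then there is an $\varepsilon > 0$ such that the problem has exactly one solution on $[t_0 - \varepsilon,\, t_0 + \varepsilon]$.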

In the theory of stochastic processes, the Karhunen–Loève theorem, also known as the Kosambi–Karhunen–Loève theorem, states that a stochastic process can be represented as an infinite linear combination of orthogonal functions, analogous to a Fourier series representation of a function on a bounded interval. The transformation is also known as the Hotelling transform and the eigenvector transform, and is closely related to the principal component analysis (PCA) technique widely used in image processing and in data analysis in many fields.
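The expansion can be sketched as follows for a zero-mean, square-integrable process $X_t$ on an interval $[a, b]$ with continuous covariance function. The symbols $X_t$, $Z_k$, $e_k$ and $\lambda_k$ are notation assumed here: the $e_k$ are orthonormal eigenfunctions of the covariance operator and the $\lambda_k$ are the corresponding eigenvalues.

$$
X_t = \sum_{k=1}^{\infty} Z_k\, e_k(t),
\qquad
Z_k = \int_{a}^{b} X_t\, e_k(t)\,dt,
\qquad
\mathbb{E}[Z_k] = 0,
\quad
\mathbb{E}[Z_i Z_j] = \delta_{ij}\,\lambda_j
$$

Unlike a Fourier series, the coefficients $Z_k$ are random variables and the basis functions depend on the covariance of the process.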

In probability theory and related fields, Malliavin calculus is a set of mathematical techniques and ideas that extend the mathematical field of calculus of variations from deterministic functions to stochastic processes. In particular, it allows the computation of derivatives of random variables. Malliavin calculus is also called the stochastic calculus of variations. P. Malliavin initiated the calculus on infinite-dimensional space; significant contributors such as S. Kusuoka, D. Stroock, J.-M. Bismut, Shinzo Watanabe, and I. Shigekawa later completed the foundations.

In statistics, econometrics, and signal processing, an autoregressive (AR) model is a representation of a type of random process; as such, it is used to describe certain time-varying processes in nature, economics, behavior, etc. The autoregressive model specifies that the output variable depends linearly on its own previous values and on a stochastic term; thus the model is in the form of a stochastic difference equation, which should not be confused with a differential equation. Together with the moving-average (MA) model, it is a special case and key component of the more general autoregressive–moving-average (ARMA) and autoregressive integrated moving average (ARIMA) models of time series, which have a more complicated stochastic structure; it is also a special case of the vector autoregressive model (VAR), which consists of a system of more than one interlocking stochastic difference equation in more than one evolving random variable.
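The linear dependence on previous values can be illustrated with a small simulation of an AR(p) recursion, x_t = c + a_1 x_{t-1} + ... + a_p x_{t-p} + noise. This is a minimal sketch: the function name `simulate_ar`, the zero initial history, and the Gaussian noise term are assumptions made for the example, not part of the model's definition.

```python
import random


def simulate_ar(coeffs, n, noise_std, rng, constant=0.0):
    """Simulate n values of an AR(p) process with p = len(coeffs).

    coeffs[0] multiplies the most recent value, coeffs[1] the one before, etc.
    The process is started from a history of p zeros (an assumption).
    """
    p = len(coeffs)
    x = [0.0] * p
    for _ in range(n):
        # Linear combination of the p most recent values, newest first.
        linear_part = sum(c * x[-(i + 1)] for i, c in enumerate(coeffs))
        # Stochastic term: Gaussian noise with standard deviation noise_std.
        x.append(constant + linear_part + rng.gauss(0.0, noise_std))
    return x[p:]


rng = random.Random(0)  # fixed seed, chosen only for reproducibility
series = simulate_ar([0.6, -0.2], n=200, noise_std=1.0, rng=rng)

# With the noise switched off the recursion is deterministic.
noiseless = simulate_ar([0.5], n=3, noise_std=0.0, rng=rng, constant=1.0)
```

With `noise_std=0.0` the recursion reduces to an ordinary difference equation, which makes the role of the stochastic term easy to see by comparison.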

In physics, Hamilton's principle is William Rowan Hamilton's formulation of the principle of stationary action. It states that the dynamics of a physical system are determined by a variational problem for a functional based on a single function, the Lagrangian, which may contain all physical information concerning the system and the forces acting on it. The variational problem is equivalent to and allows for the derivation of the differential equations of motion of the physical system. Although formulated originally for classical mechanics, Hamilton's principle also applies to classical fields such as the electromagnetic and gravitational fields, and plays an important role in quantum mechanics, quantum field theory and criticality theories.

The Debye–Hückel theory was proposed by Peter Debye and Erich Hückel as a theoretical explanation for departures from ideality in solutions of electrolytes and plasmas. It is a linearized Poisson–Boltzmann model, which assumes an extremely simplified model of the electrolyte solution but nevertheless gives accurate predictions of mean activity coefficients for ions in dilute solution. The Debye–Hückel equation provides a starting point for modern treatments of non-ideality of electrolyte solutions.

In mathematics, Weyl's lemma, named after Hermann Weyl, states that every weak solution of Laplace's equation is a smooth solution. This contrasts with the wave equation, for example, which has weak solutions that are not smooth solutions. Weyl's lemma is a special case of elliptic or hypoelliptic regularity.

The Sokhotski–Plemelj theorem is a theorem in complex analysis, which helps in evaluating certain integrals. The real-line version of it is often used in physics, although rarely referred to by name. The theorem is named after Julian Sochocki, who proved it in 1868, and Josip Plemelj, who rediscovered it as a main ingredient of his solution of the Riemann–Hilbert problem in 1908.
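The real-line version can be sketched as follows, assuming $f$ is a complex-valued function that is continuous on the real line and $a < 0 < b$ (the symbols $f$, $a$, $b$ and $\varepsilon$ are notation introduced here):

$$
\lim_{\varepsilon \to 0^{+}} \int_{a}^{b} \frac{f(x)}{x \pm i\varepsilon}\,dx
= \mp\, i\pi f(0) + \mathcal{P}\!\!\int_{a}^{b} \frac{f(x)}{x}\,dx
$$

Here $\mathcal{P}$ denotes the Cauchy principal value of the integral.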

In mathematics, Laplace's principle is a basic theorem in large deviations theory which is similar to Varadhan's lemma. It gives an asymptotic expression for the Lebesgue integral of exp(−θφ(x)) over a fixed set A as θ becomes large. Such expressions can be used, for example, in statistical mechanics to determine the limiting behaviour of a system as the temperature tends to absolute zero.
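The asymptotic expression can be sketched as follows, assuming $A$ is a Lebesgue-measurable subset of $\mathbb{R}^{d}$ and $\varphi$ is a measurable function with $\int_{A} e^{-\varphi(x)}\,dx < \infty$ (the dimension $d$ is notation introduced here):

$$
\lim_{\theta \to \infty} \frac{1}{\theta} \log \int_{A} e^{-\theta \varphi(x)}\,dx
= - \operatorname*{ess\,inf}_{x \in A} \varphi(x)
$$

Informally, for large $\theta$ the integral is dominated by the region of $A$ where $\varphi$ is smallest.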

In mathematics, Schilder's theorem is a generalization of the Laplace method from integrals on $\mathbb{R}^n$ to functional Wiener integration. The theorem is used in the large deviations theory of stochastic processes. Roughly speaking, Schilder's theorem gives an estimate for the probability that a (scaled-down) sample path of Brownian motion will stray far from the mean path. This statement is made precise using rate functions. Schilder's theorem is generalized by the Freidlin–Wentzell theorem for Itō diffusions.

The exponential mechanism is a technique for designing differentially private algorithms. It was developed by Frank McSherry and Kunal Talwar in 2007. Their work was recognized as a co-winner of the 2009 PET Award for Outstanding Research in Privacy Enhancing Technologies.
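The text above does not spell out the selection rule, so the following is a minimal sketch of the commonly stated form of the mechanism: a candidate `r` is chosen with probability proportional to `exp(epsilon * u(r) / (2 * sensitivity))`, where `u` is a utility score. The function name, the parameter names, and the example candidates are assumptions made for illustration.

```python
import math
import random


def exponential_mechanism(candidates, utility, epsilon, sensitivity, rng):
    """Pick one candidate with probability proportional to
    exp(epsilon * utility(candidate) / (2 * sensitivity)).

    sensitivity is assumed to bound how much any utility score can change
    when one individual's data changes; it must be supplied by the caller.
    """
    scores = [utility(c) for c in candidates]
    # Subtracting the maximum score leaves the probabilities unchanged
    # but avoids overflow in exp for large epsilon or large scores.
    max_score = max(scores)
    weights = [
        math.exp(epsilon * (s - max_score) / (2.0 * sensitivity)) for s in scores
    ]
    return rng.choices(candidates, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed, chosen only for reproducibility
votes = {"a": 0, "b": 1, "c": 2}  # hypothetical utility scores
candidates = list(votes)

private_choice = exponential_mechanism(candidates, votes.get, 1.0, 1.0, rng)
# A very large epsilon (weak privacy) concentrates the choice on the best score.
greedy_choice = exponential_mechanism(candidates, votes.get, 1000.0, 1.0, rng)
```

Smaller values of `epsilon` flatten the weights and make the output less dependent on the scores, which is the privacy and accuracy trade-off the mechanism is designed around.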

Computational anatomy (CA) is the study of shape and form in medical imaging. The study of deformable shapes in computational anatomy relies on high-dimensional diffeomorphism groups, which generate orbits of shapes. In CA, this orbit is in general considered a smooth Riemannian manifold, since at every point of the manifold there is an inner product inducing a norm on the tangent space that varies smoothly from point to point in the manifold of shapes. This is generated by viewing the group of diffeomorphisms as a Riemannian manifold with a norm associated to the tangent space at each group element. This induces the norm and metric on the orbit under the action of the group of diffeomorphisms.

In probability theory, a branch of mathematics, white noise analysis, otherwise known as Hida calculus, is a framework for infinite-dimensional and stochastic calculus, based on the Gaussian white noise probability space, to be compared with Malliavin calculus based on the Wiener process. It was initiated by Takeyuki Hida in his 1975 Carleton Mathematical Lecture Notes.