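In statistical mechanics and mathematics, a Boltzmann distribution is a probability distribution or probability measure that gives the probability that a system will be in a certain state as a function of that state's energy and the temperature of the system. The distribution is expressed in the form:

$$p_i \propto \exp\left(-\frac{\varepsilon_i}{k_B T}\right)$$

where $p_i$ is the probability of the system being in state $i$, $\varepsilon_i$ is the energy of that state, $k_B$ is the Boltzmann constant, and $T$ is the thermodynamic temperature.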
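For a system with a finite set of discrete states, the Boltzmann distribution assigns each state a weight proportional to the exponential of minus its energy divided by the product of the Boltzmann constant and the temperature, and the weights are then normalized. The following is a minimal sketch under that assumption; the function name `boltzmann_probabilities` and the two-level example system are illustrative choices, not taken from the text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def boltzmann_probabilities(energies_joule, temperature_kelvin):
    """Return normalized Boltzmann probabilities for discrete state energies."""
    beta = 1.0 / (K_B * temperature_kelvin)
    # Shift by the lowest energy for numerical stability; the shift cancels
    # when the weights are normalized.
    e_min = min(energies_joule)
    weights = [math.exp(-beta * (e - e_min)) for e in energies_joule]
    partition_sum = sum(weights)
    return [w / partition_sum for w in weights]


# Hypothetical two-level system whose gap equals k_B * 300 K, evaluated at 300 K.
probs = boltzmann_probabilities([0.0, K_B * 300.0], 300.0)
```

In this example the ratio of the upper-state to the lower-state probability is exp(−1), because the energy gap equals the thermal energy at the chosen temperature.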

Entropy is a scientific concept that is most commonly associated with a state of disorder, randomness, or uncertainty. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory. It has found far-ranging applications in chemistry and physics, in biological systems and their relation to life, in cosmology, economics, sociology, weather science, climate change, and information systems including the transmission of information in telecommunication.

The Boltzmann constant is the proportionality factor that relates the average relative thermal energy of particles in a gas with the thermodynamic temperature of the gas. It occurs in the definitions of the kelvin (K) and the gas constant, in Planck's law of black-body radiation and Boltzmann's entropy formula, and is used in calculating thermal noise in resistors. The Boltzmann constant has dimensions of energy divided by temperature, the same as entropy and heat capacity. It is named after the Austrian scientist Ludwig Boltzmann.

The molar gas constant is denoted by the symbol $R$ or $\bar{R}$. It is the molar equivalent to the Boltzmann constant, expressed in units of energy per temperature increment per amount of substance, rather than energy per temperature increment per particle. The constant is also a combination of the constants from Boyle's law, Charles's law, Avogadro's law, and Gay-Lussac's law. It is a physical constant that is featured in many fundamental equations in the physical sciences, such as the ideal gas law, the Arrhenius equation, and the Nernst equation.
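The statement that the gas constant is the molar equivalent of the Boltzmann constant amounts to multiplying the Boltzmann constant by the Avogadro constant. A short sketch using the exact 2019 SI values follows; the variable names are illustrative.

```python
N_A = 6.02214076e23  # Avogadro constant in 1/mol (exact in the 2019 SI)
K_B = 1.380649e-23   # Boltzmann constant in J/K (exact in the 2019 SI)

# Molar gas constant in J/(mol*K): energy per temperature per amount of substance.
R = N_A * K_B
```

The result is approximately 8.314 J/(mol·K).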

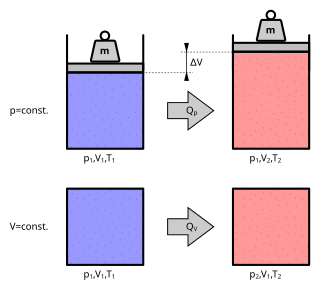

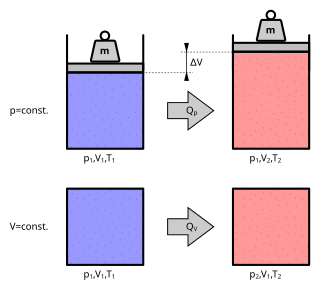

The ideal gas law, also called the general gas equation, is the equation of state of a hypothetical ideal gas. It is a good approximation of the behavior of many gases under many conditions, although it has several limitations. It was first stated by Benoît Paul Émile Clapeyron in 1834 as a combination of the empirical Boyle's law, Charles's law, Avogadro's law, and Gay-Lussac's law. The ideal gas law is often written in an empirical form:

$$pV = nRT$$

where $p$, $V$ and $T$ are the pressure, volume and temperature respectively, $n$ is the amount of substance, and $R$ is the molar gas constant.
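As a worked example of the ideal gas law, the sketch below solves it for the volume occupied by one mole of an ideal gas at 0 °C and 1 atm. The function name `ideal_gas_volume` and the choice of conditions are illustrative assumptions.

```python
R = 8.31446261815324  # molar gas constant in J/(mol*K)


def ideal_gas_volume(amount_mol, temperature_kelvin, pressure_pascal):
    """Solve pV = nRT for the volume V in cubic metres."""
    return amount_mol * R * temperature_kelvin / pressure_pascal


# One mole at 0 degrees Celsius (273.15 K) and 1 atm (101325 Pa).
molar_volume = ideal_gas_volume(1.0, 273.15, 101325.0)
```

The result is about 0.0224 m³, i.e. roughly 22.4 litres per mole.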

An ideal gas is a theoretical gas composed of many randomly moving point particles that are not subject to interparticle interactions. The ideal gas concept is useful because it obeys the ideal gas law, a simplified equation of state, and is amenable to analysis under statistical mechanics. The requirement of zero interaction can often be relaxed if, for example, the interaction is perfectly elastic or regarded as point-like collisions.

In statistical mechanics, Maxwell–Boltzmann statistics describes the distribution of classical material particles over various energy states in thermal equilibrium. It is applicable when the temperature is high enough or the particle density is low enough to render quantum effects negligible.

In statistical mechanics, a semi-classical derivation of entropy that does not take into account the indistinguishability of particles yields an expression for entropy which is not extensive. This leads to a paradox known as the Gibbs paradox, after Josiah Willard Gibbs, who proposed this thought experiment in 1874‒1875. The paradox allows for the entropy of closed systems to decrease, violating the second law of thermodynamics. A related paradox is the "mixing paradox". If one takes the perspective that the definition of entropy must be changed so as to ignore particle permutation, in the thermodynamic limit, the paradox is averted.

In classical statistical mechanics, the H-theorem, introduced by Ludwig Boltzmann in 1872, describes the tendency of the quantity H to decrease in a nearly-ideal gas of molecules. As this quantity H was meant to represent the entropy of thermodynamics, the H-theorem was an early demonstration of the power of statistical mechanics, as it claimed to derive the second law of thermodynamics (a statement about fundamentally irreversible processes) from reversible microscopic mechanics. It is thought to prove the second law of thermodynamics, albeit under the assumption of low-entropy initial conditions.

Ludwig Eduard Boltzmann was an Austrian physicist and philosopher. His greatest achievements were the development of statistical mechanics and the statistical explanation of the second law of thermodynamics. In 1877 he provided the current definition of entropy, $S = k_B \ln \Omega$, where $\Omega$ is the number of microstates whose energy equals the system's energy, interpreted as a measure of the statistical disorder of a system. Max Planck named the constant $k_B$ the Boltzmann constant.
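Boltzmann's entropy formula, which sets the entropy equal to the Boltzmann constant times the natural logarithm of the number of microstates, can be evaluated directly once the microstates are counted. The sketch below uses a hypothetical system of 100 independent two-state spins, all configurations assumed to have the same energy, so the microstate count is 2 to the power 100; the function name `boltzmann_entropy` is illustrative.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def boltzmann_entropy(num_microstates):
    """Return S = k_B * ln(Omega) in J/K for a positive microstate count."""
    if num_microstates < 1:
        raise ValueError("the number of microstates must be at least 1")
    return K_B * math.log(num_microstates)


# Hypothetical example: 100 two-state spins, so Omega = 2**100.
n_spins = 100
omega = 2 ** n_spins
entropy = boltzmann_entropy(omega)
```

Because the logarithm turns the product of per-spin counts into a sum, the entropy here equals 100 times the single-spin value $k_B \ln 2$, which illustrates why this definition is additive over independent subsystems.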

An ideal Bose gas is a quantum-mechanical phase of matter, analogous to a classical ideal gas. It is composed of bosons, which have an integer value of spin and abide by Bose–Einstein statistics. The statistical mechanics of bosons were developed by Satyendra Nath Bose for a photon gas and extended to massive particles by Albert Einstein, who realized that an ideal gas of bosons would form a condensate at a low enough temperature, unlike a classical ideal gas. This condensate is known as a Bose–Einstein condensate.

In statistical mechanics, the microcanonical ensemble is a statistical ensemble that represents the possible states of a mechanical system whose total energy is exactly specified. The system is assumed to be isolated in the sense that it cannot exchange energy or particles with its environment, so that the energy of the system does not change with time.

The principle of detailed balance can be used in kinetic systems which are decomposed into elementary processes. It states that at equilibrium, each elementary process is in equilibrium with its reverse process.

The Loschmidt constant or Loschmidt's number (symbol: $n_0$) is the number of particles (atoms or molecules) of an ideal gas per volume (the number density), and usually quoted at standard temperature and pressure. The 2018 CODATA recommended value is $2.686\,780\,111\ldots \times 10^{25}\ \mathrm{m^{-3}}$ at 0 °C and 1 atm. It is named after the Austrian physicist Johann Josef Loschmidt, who was the first to estimate the physical size of molecules in 1865. The term Loschmidt constant is also sometimes used to refer to the Avogadro constant, particularly in German texts.
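Since the Loschmidt constant is the number density of an ideal gas, it follows from the per-particle form of the ideal gas law as the pressure divided by the product of the Boltzmann constant and the temperature. A minimal sketch at 0 °C and 1 atm follows; the function name `ideal_gas_number_density` is an illustrative choice.

```python
K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def ideal_gas_number_density(pressure_pascal, temperature_kelvin):
    """Return the particle number density n = p / (k_B * T) in 1/m^3."""
    return pressure_pascal / (K_B * temperature_kelvin)


# Conditions quoted in the text: 0 degrees Celsius (273.15 K) and 1 atm (101325 Pa).
n_0 = ideal_gas_number_density(101325.0, 273.15)
```

The result agrees with the quoted CODATA value of about 2.6868 × 10^25 m^−3.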

In the history of physics, the concept of entropy developed in response to the observation that a certain amount of functional energy released from combustion reactions is always lost to dissipation or friction and is thus not transformed into useful work. Early heat-powered engines such as Thomas Savery's (1698), the Newcomen engine (1712) and Nicolas-Joseph Cugnot's steam tricycle (1769) were inefficient, converting less than two percent of the input energy into useful work output; a great deal of useful energy was dissipated or lost. Over the next two centuries, physicists investigated this puzzle of lost energy; the result was the concept of entropy.

The concept of entropy was first developed by German physicist Rudolf Clausius in the mid-nineteenth century as a thermodynamic property that predicts that certain spontaneous processes are irreversible or impossible. In statistical mechanics, entropy is formulated as a statistical property using probability theory. The statistical entropy perspective was introduced in 1870 by Austrian physicist Ludwig Boltzmann, who established a new field of physics that provided the descriptive linkage between the macroscopic observation of nature and the microscopic view based on the rigorous treatment of large ensembles of microscopic states that constitute thermodynamic systems.

The quantum concentration $n_Q$ is the particle concentration of a system where the interparticle distance is equal to the thermal de Broglie wavelength.
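Taking the interparticle distance to be the cube root of the volume per particle, the definition above gives a quantum concentration equal to the inverse cube of the thermal de Broglie wavelength, which is commonly written as the Planck constant divided by the square root of 2π times the particle mass times the thermal energy. The sketch below evaluates both for helium-4 at 300 K; the function names, the choice of gas, and the atomic mass value are illustrative assumptions.

```python
import math

H = 6.62607015e-34            # Planck constant in J*s (exact in the 2019 SI)
K_B = 1.380649e-23            # Boltzmann constant in J/K (exact in the 2019 SI)
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg (CODATA 2018)


def thermal_de_broglie_wavelength(mass_kg, temperature_kelvin):
    """Return lambda = h / sqrt(2 * pi * m * k_B * T) in metres."""
    return H / math.sqrt(2.0 * math.pi * mass_kg * K_B * temperature_kelvin)


def quantum_concentration(mass_kg, temperature_kelvin):
    """Return n_Q = 1 / lambda**3 in 1/m^3."""
    return 1.0 / thermal_de_broglie_wavelength(mass_kg, temperature_kelvin) ** 3


# Illustrative example: helium-4 (about 4.002602 u) at 300 K.
helium_mass = 4.002602 * ATOMIC_MASS_UNIT
wavelength = thermal_de_broglie_wavelength(helium_mass, 300.0)
n_q = quantum_concentration(helium_mass, 300.0)
```

For comparison, an ideal gas at ordinary conditions has a number density of order 10^25 m^−3, far below the value obtained here, which is why such a gas behaves classically.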

The Planck constant, or Planck's constant, denoted by $h$, is a fundamental physical constant of foundational importance in quantum mechanics: a photon's energy is equal to its frequency multiplied by the Planck constant, and the wavelength of a matter wave equals the Planck constant divided by the associated particle momentum. The closely related reduced Planck constant, equal to $h/(2\pi)$ and denoted $\hbar$, is commonly used in quantum physics equations.
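The two relations described above, photon energy as frequency times the Planck constant and matter wavelength as the Planck constant divided by momentum, can be sketched as follows, together with the reduced Planck constant. The function names and the example frequency and momentum are illustrative assumptions.

```python
import math

H = 6.62607015e-34           # Planck constant in J*s (exact in the 2019 SI)
H_BAR = H / (2.0 * math.pi)  # reduced Planck constant in J*s


def photon_energy(frequency_hertz):
    """Return the photon energy E = h * f in joules."""
    return H * frequency_hertz


def de_broglie_wavelength(momentum_kg_m_per_s):
    """Return the matter-wave wavelength lambda = h / p in metres."""
    return H / momentum_kg_m_per_s


# Illustrative inputs: a 5e14 Hz (visible-light) photon and a momentum of 1e-24 kg*m/s.
energy = photon_energy(5.0e14)
wavelength = de_broglie_wavelength(1.0e-24)
```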

Hugo Martin Tetrode was a Dutch theoretical physicist who contributed to statistical physics, early quantum theory and quantum mechanics.