In combinatorial mathematics, the Steiner tree problem, or minimum Steiner tree problem, named after Jakob Steiner, is an umbrella term for a class of problems in combinatorial optimization. While Steiner tree problems may be formulated in a number of settings, they all require an optimal interconnect for a given set of objects and a predefined objective function. One well-known variant, which is often used synonymously with the term Steiner tree problem, is the Steiner tree problem in graphs. Given an undirected graph with non-negative edge weights and a subset of vertices, usually referred to as terminals, the Steiner tree problem in graphs requires a tree of minimum weight that contains all terminals and minimizes the total weight of its edges. Further well-known variants are the Euclidean Steiner tree problem and the rectilinear minimum Steiner tree problem.

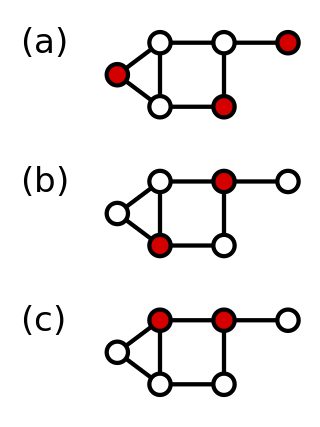

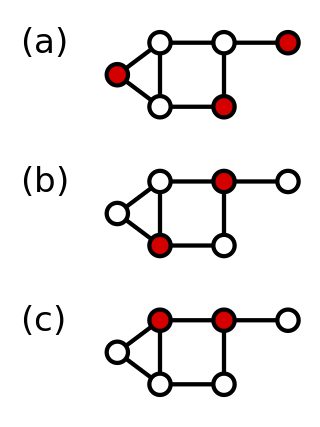

In graph theory, an independent set, stable set, coclique or anticlique is a set of vertices in a graph, no two of which are adjacent. That is, it is a set of vertices such that for every two vertices in , there is no edge connecting the two. Equivalently, each edge in the graph has at most one endpoint in . A set is independent if and only if it is a clique in the graph's complement. The size of an independent set is the number of vertices it contains. Independent sets have also been called "internally stable sets", of which "stable set" is a shortening.

In graph theory, a vertex cover of a graph is a set of vertices that includes at least one endpoint of every edge of the graph.

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are also many approximation algorithms that provide an additive guarantee on the quality of the returned solution. A notable example of an approximation algorithm that provides both is the classic approximation algorithm of Lenstra, Shmoys and Tardos for scheduling on unrelated parallel machines.

In the mathematical discipline of graph theory, a matching or independent edge set in an undirected graph is a set of edges without common vertices. In other words, a subset of the edges is a matching if each vertex appears in at most one edge of that matching. Finding a matching in a bipartite graph can be treated as a network flow problem.

The set cover problem is a classical question in combinatorics, computer science, operations research, and complexity theory.

In graph theory, a dominating set for a graph G is a subset D of its vertices, such that any vertex of G is in D, or has a neighbor in D. The domination numberγ(G) is the number of vertices in a smallest dominating set for G.

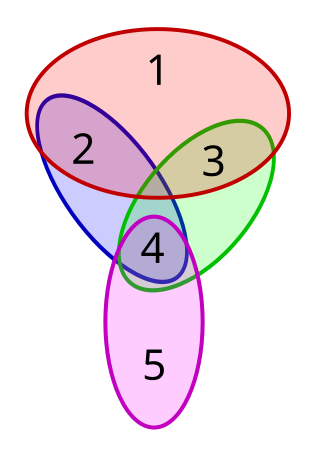

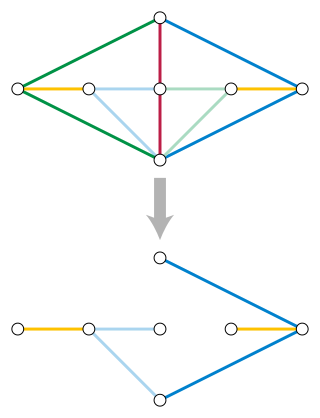

In graph theory, a domatic partition of a graph is a partition of into disjoint sets , ,..., such that each Vi is a dominating set for G. The figure on the right shows a domatic partition of a graph; here the dominating set consists of the yellow vertices, consists of the green vertices, and consists of the blue vertices.

In the mathematical discipline of graph theory, a feedback vertex set (FVS) of a graph is a set of vertices whose removal leaves a graph without cycles. Equivalently, each FVS contains at least one vertex of any cycle in the graph. The feedback vertex set number of a graph is the size of a smallest feedback vertex set. The minimum feedback vertex set problem is an NP-complete problem; it was among the first problems shown to be NP-complete. It has wide applications in operating systems, database systems, and VLSI chip design.

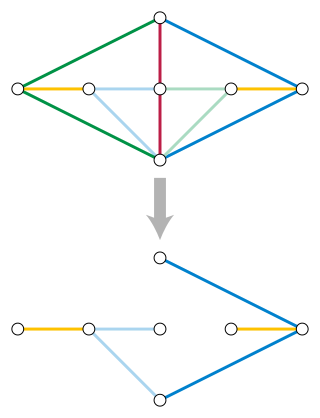

In graph theory and graph algorithms, a feedback arc set or feedback edge set in a directed graph is a subset of the edges of the graph that contains at least one edge out of every cycle in the graph. Removing these edges from the graph breaks all of the cycles, producing an acyclic subgraph of the given graph, often called a directed acyclic graph. A feedback arc set with the fewest possible edges is a minimum feedback arc set and its removal leaves a maximum acyclic subgraph; weighted versions of these optimization problems are also used. If a feedback arc set is minimal, meaning that removing any edge from it produces a subset that is not a feedback arc set, then it has an additional property: reversing all of its edges, rather than removing them, produces a directed acyclic graph.

In computational complexity theory, the class APX is the set of NP optimization problems that allow polynomial-time approximation algorithms with approximation ratio bounded by a constant. In simple terms, problems in this class have efficient algorithms that can find an answer within some fixed multiplicative factor of the optimal answer.

In graph theory, a cut is a partition of the vertices of a graph into two disjoint subsets. Any cut determines a cut-set, the set of edges that have one endpoint in each subset of the partition. These edges are said to cross the cut. In a connected graph, each cut-set determines a unique cut, and in some cases cuts are identified with their cut-sets rather than with their vertex partitions.

In the mathematical area of graph theory, Kőnig's theorem, proved by Dénes Kőnig, describes an equivalence between the maximum matching problem and the minimum vertex cover problem in bipartite graphs. It was discovered independently, also in 1931, by Jenő Egerváry in the more general case of weighted graphs.

In graph theory, a clique cover or partition into cliques of a given undirected graph is a collection of cliques that cover the whole graph. A minimum clique cover is a clique cover that uses as few cliques as possible. The minimum k for which a clique cover exists is called the clique cover number of the given graph.

In a graph, a maximum cut is a cut whose size is at least the size of any other cut. That is, it is a partition of the graph's vertices into two complementary sets S and T, such that the number of edges between S and T is as large as possible. Finding such a cut is known as the max-cut problem.

In the mathematical fields of graph theory and combinatorial optimization, the bipartite dimension or biclique cover number of a graph G = (V, E) is the minimum number of bicliques (that is complete bipartite subgraphs), needed to cover all edges in E. A collection of bicliques covering all edges in G is called a biclique edge cover, or sometimes biclique cover. The bipartite dimension of G is often denoted by the symbol d(G).

In the mathematical discipline of graph theory, a 3-dimensional matching is a generalization of bipartite matching to 3-partite hypergraphs, which consist of hyperedges each of which contains 3 vertices.

In graph theory, the metric k-center problem is a combinatorial optimization problem studied in theoretical computer science. Given n cities with specified distances, one wants to build k warehouses in different cities and minimize the maximum distance of a city to a warehouse. In graph theory, this means finding a set of k vertices for which the largest distance of any point to its closest vertex in the k-set is minimum. The vertices must be in a metric space, providing a complete graph that satisfies the triangle inequality.

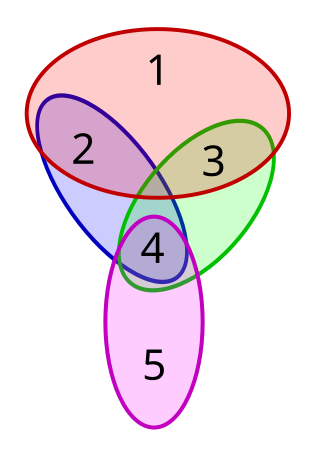

In the mathematical field of graph theory, the intersection number of a graph is the smallest number of elements in a representation of as an intersection graph of finite sets. In such a representation, each vertex is represented as a set, and two vertices are connected by an edge whenever their sets have a common element. Equivalently, the intersection number is the smallest number of cliques needed to cover all of the edges of .

In combinatorial optimization, the matroid parity problem is a problem of finding the largest independent set of paired elements in a matroid. The problem was formulated by Lawler (1976) as a common generalization of graph matching and matroid intersection. It is also known as polymatroid matching, or the matchoid problem.