Autocorrelation, sometimes known as serial correlation in the discrete-time case, is the correlation of a signal with a delayed copy of itself, expressed as a function of the delay. Informally, it is the similarity between observations of a random variable as a function of the time lag between them. The analysis of autocorrelation is a mathematical tool for finding repeating patterns, such as the presence of a periodic signal obscured by noise, or identifying the missing fundamental frequency in a signal implied by its harmonic frequencies. It is often used in signal processing for analyzing functions or series of values, such as time-domain signals.
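The idea of recovering a periodic signal obscured by noise can be illustrated with a minimal sketch. The test signal (a sine wave with a period of 25 samples plus Gaussian noise), the function name `autocorrelation`, and the range of lags searched are all assumptions chosen for illustration; the estimator is the common biased sample autocorrelation, not the only possible definition.

```python
import math
import random


def autocorrelation(x, lag):
    """Sample autocorrelation of the sequence x at a non-negative integer lag."""
    n = len(x)
    mean = sum(x) / n
    variance_sum = sum((v - mean) ** 2 for v in x)
    covariance_sum = sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag))
    return covariance_sum / variance_sum


# Hypothetical test signal: sine wave with period 25 samples plus Gaussian noise.
rng = random.Random(0)
period = 25
signal = [math.sin(2 * math.pi * t / period) + rng.gauss(0.0, 0.5) for t in range(2000)]

# Search lags 10..40 for the strongest correlation. Shorter lags are skipped
# because neighbouring samples of a smooth signal are similar regardless of period.
best_lag = max(range(10, 41), key=lambda k: autocorrelation(signal, k))
print("lag with the highest autocorrelation:", best_lag)
```

The autocorrelation is strongly positive near one full period and strongly negative near half a period, which is what makes the hidden period visible despite the noise.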

In probability theory, the expected value is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of the possible values a random variable can take, weighted by the probability of those outcomes. Because it is an average, the expected value need not be one of the values the random variable can actually take; it is not necessarily the value you would "expect" to observe in reality.
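As a small illustration, the following sketch computes the expected value of a fair six-sided die. The die and the helper name `expected_value` are assumptions chosen for the example; exact fractions are used so that no rounding is involved.

```python
from fractions import Fraction


def expected_value(distribution):
    """Probability-weighted mean of a discrete distribution given as {value: probability}."""
    return sum(value * prob for value, prob in distribution.items())


# Fair six-sided die: each face has probability 1/6.
die = {face: Fraction(1, 6) for face in range(1, 7)}
mean = expected_value(die)
print(mean)          # 7/2
print(mean in die)   # False: 3.5 is not a face of the die
```

The result, 7/2, is not a possible outcome of a single roll.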

Distributions, also known as Schwartz distributions or generalized functions, are objects that generalize the classical notion of functions in mathematical analysis. Distributions make it possible to differentiate functions whose derivatives do not exist in the classical sense. In particular, any locally integrable function has a distributional derivative.

In mathematics, the Wiener process is a real-valued continuous-time stochastic process named in honor of American mathematician Norbert Wiener for his investigations on the mathematical properties of the one-dimensional Brownian motion. It is often also called Brownian motion due to its historical connection with the physical process of the same name originally observed by Scottish botanist Robert Brown. It is one of the best known Lévy processes and occurs frequently in pure and applied mathematics, economics, quantitative finance, evolutionary biology, and physics.
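A Wiener process can be sampled on a time grid by summing independent Gaussian increments whose variance equals the time step. The sketch below assumes a standard Wiener process started at 0; the function name `simulate_wiener`, the grid size, and the number of paths are illustrative choices.

```python
import math
import random


def simulate_wiener(t_end, n_steps, rng):
    """Sample a standard Wiener path at n_steps + 1 equally spaced times on [0, t_end]."""
    dt = t_end / n_steps
    path = [0.0]  # W_0 = 0
    for _ in range(n_steps):
        # Increments are independent and normal with mean 0 and variance dt.
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return path


rng = random.Random(1)
endpoints = [simulate_wiener(1.0, 50, rng)[-1] for _ in range(2000)]
sample_mean = sum(endpoints) / len(endpoints)
sample_var = sum((w - sample_mean) ** 2 for w in endpoints) / (len(endpoints) - 1)
print("mean of W_1:", sample_mean, "variance of W_1:", sample_var)
```

Across many paths, the value at time 1 should have a sample mean close to 0 and a sample variance close to 1.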

In information theory, the asymptotic equipartition property (AEP) is a general property of the output samples of a stochastic source. It is fundamental to the concept of typical set used in theories of data compression.

In probability theory, a martingale is a sequence of random variables for which, at a particular time, the conditional expectation of the next value in the sequence is equal to the present value, regardless of all prior values.
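The defining property can be checked exactly for a simple case. The sketch below assumes a fair random walk with steps of +1 or -1 (an example not taken from the text above) and verifies, for small n, that both the walk S_n and the compensated square S_n^2 - n satisfy the martingale property, whereas S_n^2 alone does not. All function names are hypothetical.

```python
from fractions import Fraction


def conditional_expectation_next(f, n, s):
    """E[f(n + 1, S_{n+1}) | S_n = s] for a fair random walk with steps +1 or -1."""
    half = Fraction(1, 2)
    return half * f(n + 1, s + 1) + half * f(n + 1, s - 1)


def walk(n, s):
    return s


def compensated_square(n, s):
    return s * s - n


def plain_square(n, s):
    return s * s


n, s = 4, 2
print(conditional_expectation_next(walk, n, s) == walk(n, s))                              # True
print(conditional_expectation_next(compensated_square, n, s) == compensated_square(n, s))  # True
print(conditional_expectation_next(plain_square, n, s) == plain_square(n, s))              # False
```

Because the walk's next step depends only on its current position, conditioning on the current value is equivalent here to conditioning on the whole past.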

In mathematics, Fatou's lemma establishes an inequality relating the Lebesgue integral of the limit inferior of a sequence of functions to the limit inferior of integrals of these functions. The lemma is named after Pierre Fatou.
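Written out, under the standard assumption that the functions are non-negative and measurable on a measure space (the symbols $f_n$, $S$ and $\mu$ are notation introduced here), the inequality reads:

```latex
\int_S \liminf_{n \to \infty} f_n \, d\mu
\;\le\;
\liminf_{n \to \infty} \int_S f_n \, d\mu ,
\qquad f_n \ge 0 \text{ measurable}.
```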

Lookback options, in the terminology of finance, are one of several kinds of exotic option with path dependency. The payoff depends on the optimal (maximum or minimum) price of the underlying asset over the life of the option. The option allows the holder to "look back" over time to determine the payoff. There are two kinds of lookback options: those with a floating strike and those with a fixed strike.
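The two kinds can be contrasted with a short sketch of their payoffs at expiry, assuming the commonly used definitions: a floating-strike option uses the path minimum (call) or maximum (put) as its strike, while a fixed-strike option replaces the final price with the path maximum (call) or minimum (put). The function names and the sample price path are illustrative.

```python
def floating_lookback_call(path):
    """Buy at the lowest observed price, sell at the final price."""
    return path[-1] - min(path)


def floating_lookback_put(path):
    """Sell at the highest observed price, buy back at the final price."""
    return max(path) - path[-1]


def fixed_lookback_call(path, strike):
    """Ordinary call payoff evaluated at the highest observed price."""
    return max(max(path) - strike, 0.0)


def fixed_lookback_put(path, strike):
    """Ordinary put payoff evaluated at the lowest observed price."""
    return max(strike - min(path), 0.0)


# Hypothetical observed prices of the underlying over the option's life.
path = [100.0, 104.0, 97.0, 110.0, 105.0]
print(floating_lookback_call(path))      # 105 - 97  = 8.0
print(floating_lookback_put(path))       # 110 - 105 = 5.0
print(fixed_lookback_call(path, 100.0))  # 110 - 100 = 10.0
print(fixed_lookback_put(path, 100.0))   # 100 - 97  = 3.0
```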

In probability theory, the Azuma–Hoeffding inequality gives a concentration result for the values of martingales that have bounded differences.
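In its usual two-sided form, the inequality states that if a martingale's k-th increment is bounded in absolute value by c_k, then the probability that it moves by at least ε after N steps is at most 2·exp(−ε² / (2·Σ c_k²)). The sketch below compares this bound with the exact tail probability of a fair random walk with steps of +1 or -1, an example chosen here for illustration; the function names are hypothetical.

```python
import math


def azuma_hoeffding_bound(eps, bounds):
    """Two-sided Azuma-Hoeffding bound for increments bounded by the given constants."""
    return 2.0 * math.exp(-eps ** 2 / (2.0 * sum(c * c for c in bounds)))


def walk_tail_probability(n, eps):
    """Exact P(|S_n| >= eps) for a fair walk, where S_n = 2 * heads - n."""
    favourable = sum(math.comb(n, h) for h in range(n + 1) if abs(2 * h - n) >= eps)
    return favourable / 2 ** n


n, eps = 100, 20
exact = walk_tail_probability(n, eps)
bound = azuma_hoeffding_bound(eps, [1.0] * n)
print("exact tail:", exact, "bound:", bound)
```

The bound is not tight for this walk, but it requires only bounded increments rather than knowledge of the full distribution.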

In probability theory, in particular in the study of stochastic processes, a stopping time is a specific type of “random time”: a random variable whose value is interpreted as the time at which a given stochastic process exhibits a certain behavior of interest. A stopping time is often defined by a stopping rule, a mechanism for deciding whether to continue or stop a process on the basis of the present position and past events, and which will almost always lead to a decision to stop at some finite time.
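The requirement that the decision to stop rests only on the present and the past can be made concrete with a sketch. The first time a path reaches a level is a stopping time, because it can be determined from the observations up to that moment. The time at which the path attains its overall maximum is not, because later observations can change the answer. The path and the function names are illustrative assumptions.

```python
def first_hitting_time(path, level):
    """Index of the first observation >= level, or None if the level is never reached."""
    for n, value in enumerate(path):
        if value >= level:
            return n
    return None


def time_of_maximum(path):
    """Index at which the path first attains its maximum (needs the whole path)."""
    return max(range(len(path)), key=path.__getitem__)


path = [0, 1, 0, 1, 2, 3, 2, 3, 4]

# Stopping time: the answer from the prefix up to the hitting time equals the full-path answer.
print(first_hitting_time(path, 2), first_hitting_time(path[:5], 2))  # 4 4

# Not a stopping time: the prefix suggests index 5, the full path says index 8.
print(time_of_maximum(path[:6]), time_of_maximum(path))              # 5 8
```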

In functional analysis and related areas of mathematics, a sequence space is a vector space whose elements are infinite sequences of real or complex numbers. Equivalently, it is a function space whose elements are functions from the natural numbers to the field K of real or complex numbers. The set of all such functions is naturally identified with the set of all possible infinite sequences with elements in K, and can be turned into a vector space under the operations of pointwise addition of functions and pointwise scalar multiplication. All sequence spaces are linear subspaces of this space. Sequence spaces are typically equipped with a norm, or at least the structure of a topological vector space.

In probability theory, the central limit theorem says that, under certain conditions, the sum of many independent, identically distributed random variables, when scaled appropriately, converges in distribution to a standard normal distribution. The martingale central limit theorem generalizes this result from sums of independent random variables to martingales, which are stochastic processes where the change in the value of the process from time t to time t + 1 has expectation zero, even conditioned on previous outcomes.
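The classical statement can be checked numerically with a rough sketch. It assumes Uniform(0, 1) summands (mean 1/2, variance 1/12), centres and scales their sum, and measures how often the result falls within ±1.96, which should happen about 95% of the time for a standard normal variable. The sample sizes and names are illustrative, and this covers only the independent case, not the martingale version.

```python
import math
import random


def standardized_sum(n, rng):
    """Sum of n Uniform(0, 1) draws, centred by n/2 and scaled by sqrt(n/12)."""
    total = sum(rng.random() for _ in range(n))
    return (total - n * 0.5) / math.sqrt(n / 12.0)


rng = random.Random(2)
samples = [standardized_sum(30, rng) for _ in range(4000)]
inside = sum(1 for z in samples if abs(z) <= 1.96) / len(samples)
print("fraction within +/-1.96:", inside)
```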

In mathematics, the theory of optimal stopping or early stopping is concerned with the problem of choosing a time to take a particular action, in order to maximise an expected reward or minimise an expected cost. Optimal stopping problems can be found in areas of statistics, economics, and mathematical finance. A key example of an optimal stopping problem is the secretary problem. Optimal stopping problems can often be written in the form of a Bellman equation, and are therefore often solved using dynamic programming.
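For the secretary problem, a well-known family of strategies rejects the first r of n candidates outright and then accepts the first candidate who is better than everyone seen so far. The sketch below evaluates the success probability of each such cutoff rule directly and picks the best r. It is an illustration under the classical assumptions (candidates arrive in uniformly random order, and only the single best counts as success), not a dynamic-programming solution of the Bellman equation. The function name is hypothetical.

```python
def success_probability(n, r):
    """Chance of selecting the best of n candidates with a cutoff of r rejections.

    If the best candidate is at position i > r (probability 1/n), it is selected
    exactly when the best of the first i - 1 candidates lies among the first r,
    which has probability r / (i - 1).
    """
    if r == 0:
        return 1.0 / n  # the first candidate is accepted unconditionally
    return (r / n) * sum(1.0 / (i - 1) for i in range(r + 1, n + 1))


n = 100
best_r = max(range(n), key=lambda r: success_probability(n, r))
best_p = success_probability(n, best_r)
print("best cutoff:", best_r, "success probability:", round(best_p, 4))
```

For n = 100 the best cutoff is close to n/e, and the success probability is close to 1/e.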

Expected shortfall (ES) is a risk measure, a concept used in the field of financial risk measurement to evaluate the market risk or credit risk of a portfolio. The "expected shortfall at q% level" is the expected return on the portfolio in the worst q% of cases. ES is an alternative to value at risk that is more sensitive to the shape of the tail of the loss distribution.
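A simple empirical version can be sketched as the average of the worst fraction q of observed returns. This is one straightforward estimator among several, and the function name, the rounding-down of the tail size, and the sample returns are assumptions made for illustration.

```python
def expected_shortfall(returns, q):
    """Average of the worst q-fraction of returns, with q in (0, 1]."""
    ordered = sorted(returns)             # worst (lowest) returns first
    k = max(1, int(len(ordered) * q))     # number of observations in the tail
    return sum(ordered[:k]) / k


# Hypothetical sample of eight period returns.
returns = [-0.10, 0.02, 0.05, -0.04, 0.01, 0.03, -0.01, 0.07]
es_25 = expected_shortfall(returns, 0.25)
print(es_25)  # mean of the two worst returns, -0.10 and -0.04
```

Unlike a single quantile, this average changes whenever the most extreme losses change, which is why ES reacts to the shape of the tail.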

In mathematics, uniform integrability is an important concept in real analysis, functional analysis and measure theory, and plays a vital role in the theory of martingales.

In mathematics – specifically, in the theory of stochastic processes – Doob's martingale convergence theorems are a collection of results on the limits of supermartingales, named after the American mathematician Joseph L. Doob. Informally, the martingale convergence theorem typically refers to the result that any supermartingale satisfying a certain boundedness condition must converge. One may think of supermartingales as the random variable analogues of non-increasing sequences; from this perspective, the martingale convergence theorem is a random variable analogue of the monotone convergence theorem, which states that any bounded monotone sequence converges. There are symmetric results for submartingales, which are analogous to non-decreasing sequences.

In probability theory, the optional stopping theorem says that, under certain conditions, the expected value of a martingale at a stopping time is equal to its initial expected value. Since martingales can be used to model the wealth of a gambler participating in a fair game, the optional stopping theorem says that, on average, nothing can be gained by stopping play based on the information obtainable so far. Certain conditions are necessary for this result to hold true. In particular, the theorem applies to doubling strategies when the gambler has a finite lifetime or the house imposes a limit on bets; without such a restriction, the conditions of the theorem are not met.
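The conclusion can be verified exactly for a doubling strategy that is capped at a fixed number of rounds, so that the stopping time is bounded (one of the standard sufficient conditions). The sketch assumes a fair coin, an initial stake of 1, and a gambler who doubles the stake after every loss and stops at the first win or when the cap is reached. The function names are hypothetical.

```python
from fractions import Fraction


def capped_doubling_outcomes(max_rounds):
    """List of (final wealth, probability) pairs for a capped doubling strategy."""
    outcomes = []
    losses_so_far = 0
    for k in range(1, max_rounds + 1):
        stake = 2 ** (k - 1)
        # First win on bet k (probability 2^-k): gain the stake, minus earlier losses.
        outcomes.append((stake - losses_so_far, Fraction(1, 2 ** k)))
        losses_so_far += stake
    # Every one of the max_rounds bets is lost (probability 2^-max_rounds).
    outcomes.append((-losses_so_far, Fraction(1, 2 ** max_rounds)))
    return outcomes


def expected_final_wealth(max_rounds):
    return sum(wealth * prob for wealth, prob in capped_doubling_outcomes(max_rounds))


print(expected_final_wealth(10))  # 0
```

The small, likely gain of 1 is exactly offset by the rare, large loss when every bet fails, so the expected final wealth equals the initial value of 0. Without a cap on rounds or stakes, the stopping time is unbounded and this argument no longer applies.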

In mathematics and physics, the Magnus expansion, named after Wilhelm Magnus (1907–1990), provides an exponential representation of the solution of a first-order homogeneous linear differential equation for a linear operator. In particular, it furnishes the fundamental matrix of a system of linear ordinary differential equations of order n with varying coefficients. The exponent is aggregated as an infinite series, whose terms involve multiple integrals and nested commutators.
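In symbols, for the initial value problem $Y'(t) = A(t)\,Y(t)$, $Y(0) = Y_0$ (the notation $Y$, $A$, $\Omega$ is introduced here for illustration), the expansion and its first two terms take the following form, where $[\cdot,\cdot]$ denotes the matrix commutator:

```latex
Y(t) = \exp\bigl(\Omega(t)\bigr)\, Y_0 ,
\qquad
\Omega(t) = \sum_{k=1}^{\infty} \Omega_k(t),

\Omega_1(t) = \int_0^t A(t_1)\, dt_1 ,
\qquad
\Omega_2(t) = \frac{1}{2} \int_0^t dt_1 \int_0^{t_1} dt_2 \,
              \bigl[ A(t_1), A(t_2) \bigr].
```

When $A(t_1)$ and $A(t_2)$ commute for all times, every term after $\Omega_1$ vanishes and the solution reduces to the ordinary exponential of the integral of $A$.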

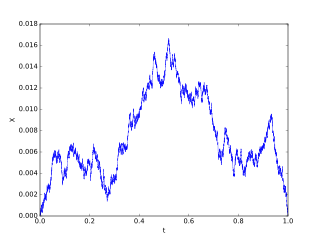

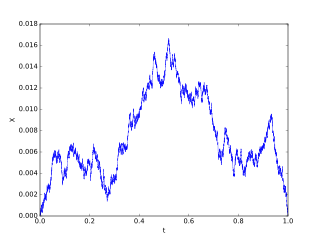

In probability theory, a Brownian excursion process is a stochastic process that is closely related to a Wiener process. Realizations of Brownian excursion processes are essentially realizations of a Wiener process selected to satisfy certain conditions. In particular, a Brownian excursion process is a Wiener process conditioned to be positive and to take the value 0 at time 1. Alternatively, it is a Brownian bridge process conditioned to be positive. Brownian excursion processes are important because, among other reasons, they naturally arise as the limit process of a number of conditional functional central limit theorems.

In functional analysis and related areas of mathematics, a metrizable topological vector space (TVS) is a TVS whose topology is induced by a metric. An LM-space is an inductive limit of a sequence of locally convex metrizable TVSs.