Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

In 3-D computer graphics, ray tracing is a technique for modeling light transport for use in a wide variety of rendering algorithms for generating digital images.

The painter’s algorithm is an algorithm for visible surface determination in 3D computer graphics that works on a polygon-by-polygon basis rather than a pixel-by-pixel, row by row, or area by area basis of other Hidden-Surface Removal algorithms. The painter’s algorithm creates images by sorting the polygons within the image by their depth and placing each polygon in order from the farthest to the closest object.

Scanline rendering is an algorithm for visible surface determination, in 3D computer graphics, that works on a row-by-row basis rather than a polygon-by-polygon or pixel-by-pixel basis. All of the polygons to be rendered are first sorted by the top y coordinate at which they first appear, then each row or scan line of the image is computed using the intersection of a scanline with the polygons on the front of the sorted list, while the sorted list is updated to discard no-longer-visible polygons as the active scan line is advanced down the picture.

Direct3D is a graphics application programming interface (API) for Microsoft Windows. Part of DirectX, Direct3D is used to render three-dimensional graphics in applications where performance is important, such as games. Direct3D uses hardware acceleration if it is available on the graphics card, allowing for hardware acceleration of the entire 3D rendering pipeline or even only partial acceleration. Direct3D exposes the advanced graphics capabilities of 3D graphics hardware, including Z-buffering, W-buffering, stencil buffering, spatial anti-aliasing, alpha blending, color blending, mipmapping, texture blending, clipping, culling, atmospheric effects, perspective-correct texture mapping, programmable HLSL shaders and effects. Integration with other DirectX technologies enables Direct3D to deliver such features as video mapping, hardware 3D rendering in 2D overlay planes, and even sprites, providing the use of 2D and 3D graphics in interactive media ties.

A depth buffer, also known as a z-buffer, is a type of data buffer used in computer graphics to represent depth information of objects in 3D space from a particular perspective. Depth buffers are an aid to rendering a scene to ensure that the correct polygons properly occlude other polygons. Z-buffering was first described in 1974 by Wolfgang Straßer in his PhD thesis on fast algorithms for rendering occluded objects. A similar solution to determining overlapping polygons is the painter's algorithm, which is capable of handling non-opaque scene elements, though at the cost of efficiency and incorrect results.

Shadow volume is a technique used in 3D computer graphics to add shadows to a rendered scene. They were first proposed by Frank Crow in 1977 as the geometry describing the 3D shape of the region occluded from a light source. A shadow volume divides the virtual world in two: areas that are in shadow and areas that are not.

In 3D computer graphics, hidden-surface determination is the process of identifying what surfaces and parts of surfaces can be seen from a particular viewing angle. A hidden-surface determination algorithm is a solution to the visibility problem, which was one of the first major problems in the field of 3D computer graphics. The process of hidden-surface determination is sometimes called hiding, and such an algorithm is sometimes called a hider. When referring to line rendering it is known as hidden-line removal. Hidden-surface determination is necessary to render a scene correctly, so that one may not view features hidden behind the model itself, allowing only the naturally viewable portion of the graphic to be visible.

Parallel rendering is the application of parallel programming to the computational domain of computer graphics. Rendering graphics can require massive computational resources for complex scenes that arise in scientific visualization, medical visualization, CAD applications, and virtual reality. Recent research has also suggested that parallel rendering can be applied to mobile gaming to decrease power consumption and increase graphical fidelity. Rendering is an embarrassingly parallel workload in multiple domains and thus has been the subject of much research.

In computer graphics, a shader is a computer program that calculates the appropriate levels of light, darkness, and color during the rendering of a 3D scene—a process known as shading. Shaders have evolved to perform a variety of specialized functions in computer graphics special effects and video post-processing, as well as general-purpose computing on graphics processing units.

The computer graphics pipeline, also known as the rendering pipeline or graphics pipeline, is a framework within computer graphics that outlines the necessary procedures for transforming a three-dimensional (3D) scene into a two-dimensional (2D) representation on a screen. Once a 3D model is generated, whether it's for a video game or any other form of 3D computer animation, the graphics pipeline converts the model into a visually perceivable format on the computer display. Due to the dependence on specific software, hardware configurations, and desired display attributes, a universally applicable graphics pipeline does not exist. Nevertheless, graphics application programming interfaces (APIs), such as Direct3D and OpenGL, were developed to standardize common procedures and oversee the graphics pipeline of a given hardware accelerator. These APIs provide an abstraction layer over the underlying hardware, relieving programmers from the need to write code explicitly targeting various graphics hardware accelerators like AMD, Intel, Nvidia, and others.

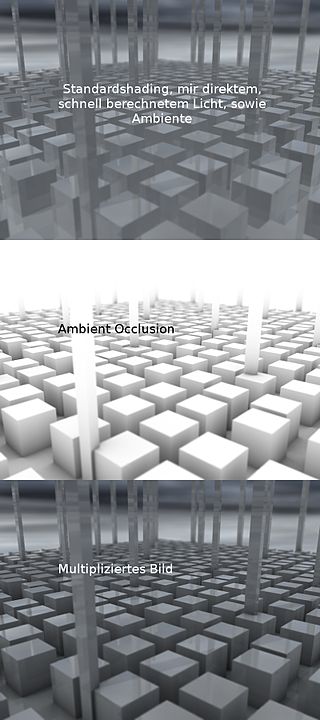

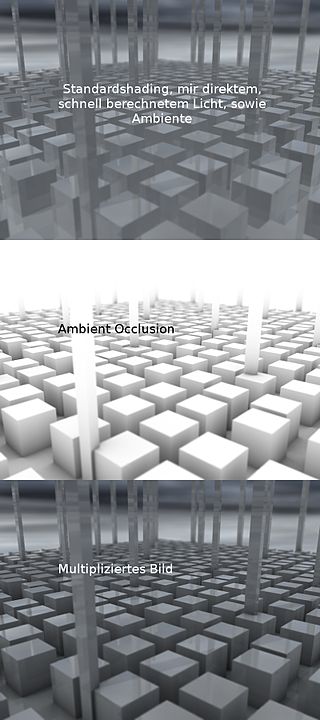

In 3D computer graphics, modeling, and animation, ambient occlusion is a shading and rendering technique used to calculate how exposed each point in a scene is to ambient lighting. For example, the interior of a tube is typically more occluded than the exposed outer surfaces, and becomes darker the deeper inside the tube one goes.

Real-time computer graphics or real-time rendering is the sub-field of computer graphics focused on producing and analyzing images in real time. The term can refer to anything from rendering an application's graphical user interface (GUI) to real-time image analysis, but is most often used in reference to interactive 3D computer graphics, typically using a graphics processing unit (GPU). One example of this concept is a video game that rapidly renders changing 3D environments to produce an illusion of motion.

In computer graphics, per-pixel lighting refers to any technique for lighting an image or scene that calculates illumination for each pixel on a rendered image. This is in contrast to other popular methods of lighting such as vertex lighting, which calculates illumination at each vertex of a 3D model and then interpolates the resulting values over the model's faces to calculate the final per-pixel color values.

Ray-tracing hardware is special-purpose computer hardware designed for accelerating ray tracing calculations.

In 3D computer graphics, Potentially Visible Sets are used to accelerate the rendering of 3D environments. They are a form of occlusion culling, whereby a candidate set of potentially visible polygons are pre-computed, then indexed at run-time in order to quickly obtain an estimate of the visible geometry. The term PVS is sometimes used to refer to any occlusion culling algorithm, although in almost all the literature, it is used to refer specifically to occlusion culling algorithms that pre-compute visible sets and associate these sets with regions in space. In order to make this association, the camera's view-space is typically subdivided into regions and a PVS is computed for each region.

Screen space ambient occlusion (SSAO) is a computer graphics technique for efficiently approximating the ambient occlusion effect in real time. It was developed by Vladimir Kajalin while working at Crytek and was used for the first time in 2007 by the video game Crysis, also developed by Crytek.

Order-independent transparency (OIT) is a class of techniques in rasterisational computer graphics for rendering transparency in a 3D scene, which do not require rendering geometry in sorted order for alpha compositing.

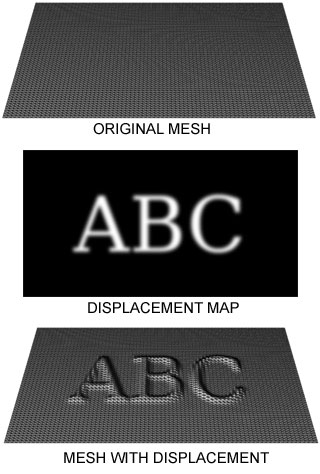

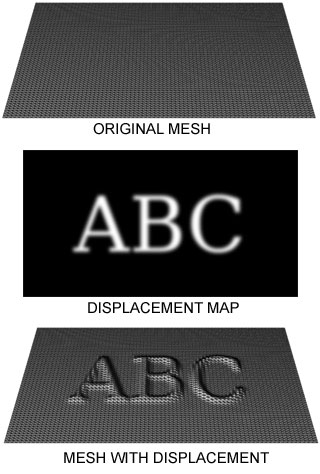

Displacement mapping is an alternative computer graphics technique in contrast to bump, normal, and parallax mapping, using a texture or height map to cause an effect where the actual geometric position of points over the textured surface are displaced, often along the local surface normal, according to the value the texture function evaluates to at each point on the surface. It gives surfaces a great sense of depth and detail, permitting in particular self-occlusion, self-shadowing and silhouettes; on the other hand, it is the most costly of this class of techniques owing to the large amount of additional geometry.

This is a glossary of terms relating to computer graphics.