The painter's algorithm is an algorithm for visible surface determination in 3D computer graphics that works on a polygon-by-polygon basis rather than a pixel-by-pixel, row by row, or area by area basis of other Hidden-Surface Removal algorithms. The painter's algorithm creates images by sorting the polygons within the image by their depth and placing each polygon in order from the farthest to the closest object.

Scanline rendering is an algorithm for visible surface determination, in 3D computer graphics, that works on a row-by-row basis rather than a polygon-by-polygon or pixel-by-pixel basis. All of the polygons to be rendered are first sorted by the top y coordinate at which they first appear, then each row or scan line of the image is computed using the intersection of a scanline with the polygons on the front of the sorted list, while the sorted list is updated to discard no-longer-visible polygons as the active scan line is advanced down the picture.

Gouraud shading, named after Henri Gouraud, is an interpolation method used in computer graphics to produce continuous shading of surfaces represented by polygon meshes. In practice, Gouraud shading is most often used to achieve continuous lighting on triangle meshes by computing the lighting at the corners of each triangle and linearly interpolating the resulting colours for each pixel covered by the triangle. Gouraud first published the technique in 1971. However, enhanced hardware support for superior shading models has yielded Gouraud shading largely obsolete in modern rendering.

Texture mapping is a method for mapping a texture on a computer-generated graphic. "Texture" in this context can be high frequency detail, surface texture, or color.

Forced perspective is a technique that employs optical illusion to make an object appear farther away, closer, larger or smaller than it actually is. It manipulates human visual perception through the use of scaled objects and the correlation between them and the vantage point of the spectator or camera. It has uses in photography, filmmaking and architecture.

Shading refers to the depiction of depth perception in 3D models or illustrations by varying the level of darkness. Shading tries to approximate local behavior of light on the object's surface and is not to be confused with techniques of adding shadows, such as shadow mapping or shadow volumes, which fall under global behavior of light.

2.5D perspective refers to gameplay or movement in a video game or virtual reality environment that is restricted to a two-dimensional (2D) plane with little to no access to a third dimension in a space that otherwise appears to be three-dimensional and is often simulated and rendered in a 3D digital environment.

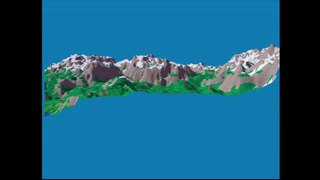

In computer graphics, level of detail (LOD) refers to the complexity of a 3D model representation. LOD can be decreased as the model moves away from the viewer or according to other metrics such as object importance, viewpoint-relative speed or position. LOD techniques increase the efficiency of rendering by decreasing the workload on graphics pipeline stages, usually vertex transformations. The reduced visual quality of the model is often unnoticed because of the small effect on object appearance when distant or moving fast.

Aerial perspective, or atmospheric perspective, refers to the effect the atmosphere has on the appearance of an object as viewed from a distance. As the distance between an object and a viewer increases, the contrast between the object and its background decreases, and the contrast of any markings or details within the object also decreases. The colours of the object also become less saturated and shift toward the background colour, which is usually bluish, but may be some other colour under certain conditions.

Real-time computer graphics or real-time rendering is the sub-field of computer graphics focused on producing and analyzing images in real time. The term can refer to anything from rendering an application's graphical user interface (GUI) to real-time image analysis, but is most often used in reference to interactive 3D computer graphics, typically using a graphics processing unit (GPU). One example of this concept is a video game that rapidly renders changing 3D environments to produce an illusion of motion.

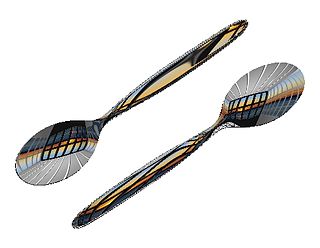

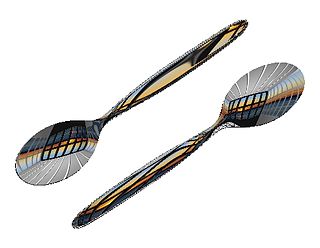

In computer graphics, reflection mapping or environment mapping is an efficient image-based lighting technique for approximating the appearance of a reflective surface by means of a precomputed texture. The texture is used to store the image of the distant environment surrounding the rendered object.

Clipping, in the context of computer graphics, is a method to selectively enable or disable rendering operations within a defined region of interest. Mathematically, clipping can be described using the terminology of constructive geometry. A rendering algorithm only draws pixels in the intersection between the clip region and the scene model. Lines and surfaces outside the view volume are removed.

In computer graphics, draw distance is the maximum distance of objects in a three-dimensional scene that are drawn by the rendering engine. Polygons that lie beyond the draw distance will not be drawn to the screen.

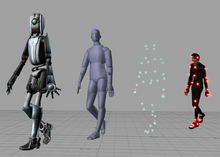

3D rendering is the 3D computer graphics process of converting 3D models into 2D images on a computer. 3D renders may include photorealistic effects or non-photorealistic styles.

Computer graphics lighting is the collection of techniques used to simulate light in computer graphics scenes. While lighting techniques offer flexibility in the level of detail and functionality available, they also operate at different levels of computational demand and complexity. Graphics artists can choose from a variety of light sources, models, shading techniques, and effects to suit the needs of each application.

Terrain cartography or relief mapping is the depiction of the shape of the surface of the Earth on a map, using one or more of several techniques that have been developed. Terrain or relief is an essential aspect of physical geography, and as such its portrayal presents a central problem in cartographic design, and more recently geographic information systems and geovisualization.

PICA200 is a graphics processing unit (GPU) designed by Digital Media Professionals Inc. (DMP), a Japanese GPU design startup company, for use in embedded devices such as vehicle systems, mobile phones, cameras, and game consoles. The PICA200 is an IP Core which can be licensed to other companies to incorporate into their SOCs. It was most notably licensed for use in the Nintendo 3DS.

This is a glossary of terms relating to computer graphics.

Physically based rendering (PBR) is a computer graphics approach that seeks to render images in a way that models the lights and surfaces with optics in the real world. It is often referred to as "Physically Based Lighting" or "Physically Based Shading". Many PBR pipelines aim to achieve photorealism. Feasible and quick approximations of the bidirectional reflectance distribution function and rendering equation are of mathematical importance in this field. Photogrammetry may be used to help discover and encode accurate optical properties of materials. PBR principles may be implemented in real-time applications using Shaders or offline applications using ray tracing or path tracing.