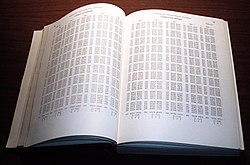

In computer science, a lookup table (LUT) is an array that replaces runtime computation of a mathematical function with a simpler array indexing operation, in a process termed as direct addressing. The savings in processing time can be significant, because retrieving a value from memory is often faster than carrying out an "expensive" computation or input/output operation. [1] The tables may be precalculated and stored in static program storage, calculated (or "pre-fetched") as part of a program's initialization phase ( memoization ), or even stored in hardware in application-specific platforms. Lookup tables are also used extensively to validate input values by matching against a list of valid (or invalid) items in an array and, in some programming languages, may include pointer functions (or offsets to labels) to process the matching input. FPGAs also make extensive use of reconfigurable, hardware-implemented, lookup tables to provide programmable hardware functionality. LUTs differ from hash tables in that, to retrieve a value with key , a hash table would store the value in the slot where is a hash function i.e. is used to compute the slot, while in the case of LUT, the value is stored in slot , thus directly addressable. [2] : 466