Handwriting recognition (HWR), also known as handwritten text recognition (HTR), is the ability of a computer to receive and interpret intelligible handwritten input from sources such as paper documents, photographs, touchscreens and other devices. The image of the written text may be sensed "off line" from a piece of paper by optical scanning or intelligent word recognition. Alternatively, the movements of the pen tip may be sensed "on line", for example by a pen-based computer screen surface, a generally easier task as there are more clues available. A handwriting recognition system handles formatting, segments the input into characters, and finds the most plausible words.

Jürgen Schmidhuber is a German computer scientist noted for his work in the field of artificial intelligence, specifically artificial neural networks. He is a scientific director of the Dalle Molle Institute for Artificial Intelligence Research in Switzerland. He is also director of the Artificial Intelligence Initiative and professor of the Computer Science program in the Computer, Electrical, and Mathematical Sciences and Engineering (CEMSE) division at the King Abdullah University of Science and Technology (KAUST) in Saudi Arabia.

In digital image processing and computer vision, image segmentation is the process of partitioning a digital image into multiple image segments, also known as image regions or image objects. The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics.
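As a minimal sketch of per-pixel labeling, the following assumes one very simple shared characteristic: pixels in a segment lie on the same side of an intensity threshold and are 4-connected. The function name `label_regions` and the sample image are illustrative assumptions, not part of any particular library.

```python
from collections import deque

def label_regions(image, threshold):
    """Assign a label to every pixel; pixels share a label when they are
    4-connected and fall on the same side of `threshold`."""
    h, w = len(image), len(image[0])
    labels = [[-1] * w for _ in range(h)]  # -1 marks "not yet labeled"
    next_label = 0
    for r in range(h):
        for c in range(w):
            if labels[r][c] != -1:
                continue
            bright = image[r][c] >= threshold
            labels[r][c] = next_label
            queue = deque([(r, c)])
            # Breadth-first flood fill over neighbors with the same characteristic.
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and labels[ny][nx] == -1
                            and (image[ny][nx] >= threshold) == bright):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels

# Hypothetical 3x4 grayscale image.
image = [
    [10, 12, 200, 210],
    [11, 13, 205, 220],
    [9, 180, 190, 14],
]
segments = label_regions(image, threshold=128)
# segments == [[0, 0, 1, 1], [0, 0, 1, 1], [0, 1, 1, 2]]
```

Practical segmentation methods use much richer criteria (color, texture, learned features), but the output has the same shape: one label per pixel.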

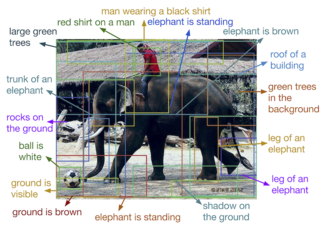

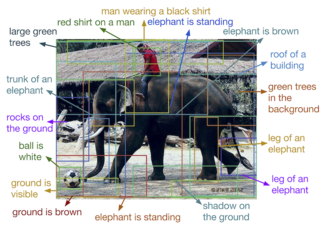

Automatic image annotation is the process by which a computer system automatically assigns metadata in the form of captioning or keywords to a digital image. This application of computer vision techniques is used in image retrieval systems to organize and locate images of interest from a database.

As applied in the field of computer vision, graph cut optimization can be employed to efficiently solve a wide variety of low-level computer vision problems, such as image smoothing, the stereo correspondence problem, image segmentation, object co-segmentation, and many other computer vision problems that can be formulated in terms of energy minimization. Many of these energy minimization problems can be approximated by solving a maximum flow problem in a graph. Under most formulations of such problems in computer vision, the minimum energy solution corresponds to the maximum a posteriori estimate of a solution. Although many computer vision algorithms involve cutting a graph, the term "graph cuts" is applied specifically to those models which employ a max-flow/min-cut optimization.
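The link between energy minimization and max-flow/min-cut can be illustrated on a toy problem. The sketch below assumes a 1-D signal with integer intensities in `0..max_val`, a binary labeling, a unary cost equal to the distance from each label's ideal intensity, and a constant penalty `lam` for each pair of neighbors with different labels. The function name, the cost model, and the use of a plain Edmonds-Karp max-flow are all assumptions made for illustration; production systems use specialized solvers.

```python
from collections import deque

def min_cut_labels(signal, max_val=10, lam=3):
    """Binary labeling of a 1-D signal by an s-t minimum cut.
    Returns (labels, energy); label 0 = source side, label 1 = sink side."""
    n = len(signal)
    s, t = n, n + 1
    cap = [dict() for _ in range(n + 2)]  # residual capacities

    def add_edge(u, v, c):
        cap[u][v] = cap[u].get(v, 0) + c
        cap[v].setdefault(u, 0)  # reverse residual edge

    for p, x in enumerate(signal):
        add_edge(s, p, max_val - x)  # cut when p takes label 1: cost D_p(1)
        add_edge(p, t, x)            # cut when p takes label 0: cost D_p(0)
    for p in range(n - 1):
        add_edge(p, p + 1, lam)      # smoothness penalty, both directions
        add_edge(p + 1, p, lam)

    def bfs():
        """Return the BFS parent map from s in the residual graph."""
        parent = {s: None}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    if v == t:
                        return parent
                    queue.append(v)
        return parent

    flow = 0
    while True:
        parent = bfs()
        if t not in parent:
            break  # no augmenting path left: flow is maximal
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        bottleneck = min(cap[u][v] for u, v in path)
        for u, v in path:
            cap[u][v] -= bottleneck
            cap[v][u] += bottleneck
        flow += bottleneck

    # Pixels still reachable from s lie on the source side of the minimum cut.
    labels = [0 if p in parent else 1 for p in range(n)]
    return labels, flow

labels, energy = min_cut_labels([1, 2, 6, 1, 9, 8])
# labels == [0, 0, 0, 0, 1, 1], energy == 16
```

In this example the third sample (intensity 6) would be labeled 1 if each pixel were considered alone, but the smoothness term makes it cheaper to keep it with its neighbors. The value of the maximum flow equals the minimum energy.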

The image segmentation problem is concerned with partitioning an image into multiple regions according to some homogeneity criterion. This article is primarily concerned with graph theoretic approaches to image segmentation applying graph partitioning via minimum cut or maximum cut. Segmentation-based object categorization can be viewed as a specific case of spectral clustering applied to image segmentation.

The Conference on Computer Vision and Pattern Recognition (CVPR) is an annual conference on computer vision and pattern recognition, which is regarded as one of the most important conferences in its field. According to Google Scholar Metrics (2022), it is the highest impact computing venue.

AlexNet is the name of a convolutional neural network (CNN) architecture, designed by Alex Krizhevsky in collaboration with Ilya Sutskever and Geoffrey Hinton, who was Krizhevsky's Ph.D. advisor at the University of Toronto.

René Vidal is a Chilean electrical engineer and computer scientist who is known for his research in machine learning, computer vision, medical image computing, robotics, and control theory. He is the Herschel L. Seder Professor of the Johns Hopkins Department of Biomedical Engineering, and the founding director of the Mathematical Institute for Data Science (MINDS).

Alan Yuille is a Bloomberg Distinguished Professor of Computational Cognitive Science with appointments in the departments of Cognitive Science and Computer Science at Johns Hopkins University. Yuille develops models of vision and cognition for computers, intended for creating artificial vision systems. He completed a PhD in theoretical physics at Cambridge University in 1981, studying under Stephen Hawking.

Jason Joseph Corso is the co-founder and CEO of the computer vision startup Voxel51 and a Professor of Robotics, Electrical Engineering and Computer Science at the University of Michigan.

Gregory D. Hager is the Mandell Bellmore Professor of Computer Science and founding director of the Johns Hopkins Malone Center for Engineering in Healthcare at Johns Hopkins University.

Jiebo Luo is a Chinese-American computer scientist, the Albert Arendt Hopeman Professor of Engineering and Professor of Computer Science at the University of Rochester. He is interested in artificial intelligence, data science and computer vision.

Xu Li is a Chinese computer scientist and co-founder and current CEO of SenseTime, an artificial intelligence (AI) company. Xu has led SenseTime since the company's incorporation and helped it independently develop its proprietary deep learning platform.

An energy-based model (EBM), also called Canonical Ensemble Learning (CEL) or Learning via Canonical Ensemble (LCE), applies the canonical ensemble formulation of statistical physics to the problem of learning from data. The approach appears prominently in generative models (GMs).
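In the canonical ensemble, a state with energy E receives probability proportional to exp(-E/T). A minimal sketch over a small discrete state space follows; the function name `boltzmann` and the example energies are illustrative assumptions. In a real EBM the energies come from a learned function and the normalizing sum is usually intractable.

```python
import math

def boltzmann(energies, temperature=1.0):
    """Turn a list of energies into canonical-ensemble probabilities."""
    weights = [math.exp(-e / temperature) for e in energies]
    z = sum(weights)  # partition function (normalizing constant)
    return [w / z for w in weights]

# Hypothetical energies of three states; lower energy means higher probability.
energies = [0.0, 1.0, 2.0]
probs = boltzmann(energies)
```

Raising `temperature` flattens the distribution, and lowering it concentrates the mass on the lowest-energy state.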

In the domain of physics and probability, the filters, random fields, and maximum entropy (FRAME) model is a Markov random field model of stationary spatial processes, in which the energy function is the sum of translation-invariant potential functions that are one-dimensional non-linear transformations of linear filter responses. The FRAME model was originally developed by Song-Chun Zhu, Ying Nian Wu, and David Mumford for modeling stochastic texture patterns, such as grasses, tree leaves, brick walls, and water waves. This model is the maximum entropy distribution that reproduces the observed marginal histograms of responses from a bank of filters, where for each filter tuned to a specific scale and orientation, the marginal histogram is pooled over all the pixels in the image domain. The FRAME model has also been proved to be equivalent to the micro-canonical ensemble, which was named the Julesz ensemble. A Gibbs sampler is used to synthesize texture images by drawing samples from the FRAME model.
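The statistic that the model is constrained to reproduce, a marginal histogram of one filter's responses pooled over all pixel positions, can be sketched as follows. The function name, the horizontal-difference filter, the bin edges, and the toy image are assumptions made for illustration; this computes only the histogram, not the FRAME model itself.

```python
from bisect import bisect_right

def filter_response_histogram(image, kernel, bin_edges):
    """Normalized histogram of linear filter responses, pooled over all
    positions where the kernel fits inside the image. The kernel is applied
    as a correlation (no flipping); out-of-range responses are clamped to
    the first or last bin."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    n_bins = len(bin_edges) - 1
    counts = [0] * n_bins
    total = 0
    for r in range(h - kh + 1):
        for c in range(w - kw + 1):
            response = sum(kernel[i][j] * image[r + i][c + j]
                           for i in range(kh) for j in range(kw))
            b = bisect_right(bin_edges, response) - 1
            counts[min(max(b, 0), n_bins - 1)] += 1
            total += 1
    return [n / total for n in counts]

# Hypothetical image with one vertical edge, and a horizontal difference filter.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1]]
hist = filter_response_histogram(image, kernel, [-1.5, -0.5, 0.5, 1.5])
# Bins are centered on responses -1, 0 and +1: six responses are 0, three are +1.
```

A filter bank would repeat this for each filter, giving one pooled histogram per scale and orientation.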

Self-supervised learning (SSL) is a paradigm in machine learning where a model is trained on a task using the data itself to generate supervisory signals, rather than relying on external labels provided by humans. In the context of neural networks, self-supervised learning aims to leverage inherent structures or relationships within the input data to create meaningful training signals. SSL tasks are designed so that solving them requires capturing essential features or relationships in the data. The input data is typically augmented or transformed in a way that creates pairs of related samples. One sample serves as the input, and the other is used to formulate the supervisory signal. This augmentation can involve introducing noise, cropping, rotation, or other transformations. Self-supervised learning more closely imitates the way humans learn to classify objects.
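A minimal sketch of how such pairs can be built from unlabeled data is shown below, assuming additive Gaussian noise as the transformation: the corrupted sample is the input and the original sample is the target. The function name `make_denoising_pairs` and the toy data are illustrative assumptions, and no model is trained here.

```python
import random

def make_denoising_pairs(samples, noise_std, rng):
    """Build (input, target) pairs from unlabeled samples.
    The input is a noisy copy; the target is the untouched original."""
    pairs = []
    for clean in samples:
        noisy = [x + rng.gauss(0.0, noise_std) for x in clean]
        pairs.append((noisy, list(clean)))
    return pairs

# Hypothetical unlabeled data: two short feature vectors.
unlabeled = [[0.0, 0.5, 1.0], [1.0, 1.0, 0.0]]
rng = random.Random(0)  # fixed seed so the sketch is reproducible
pairs = make_denoising_pairs(unlabeled, noise_std=0.1, rng=rng)
```

A model trained to map each noisy input back to its target never sees a human-provided label; the supervisory signal comes entirely from the data.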

Jiaya Jia is a Chair Professor of the Department of Computer Science and Engineering at The Hong Kong University of Science and Technology (HKUST). He is an IEEE Fellow and the associate editor-in-chief of one of IEEE's flagship journals, Transactions on Pattern Analysis and Machine Intelligence (TPAMI), and he serves on the editorial board of the International Journal of Computer Vision (IJCV).

Gérard G. Medioni is a computer scientist, author, academic and inventor. He is a vice president and distinguished scientist at Amazon and serves as emeritus professor of Computer Science at the University of Southern California.