Related Research Articles

In computing, a compiler is a computer program that translates computer code written in one programming language into another language. The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a lower level language to create an executable program.

Fortran is a general-purpose, compiled imperative programming language that is especially suited to numeric computation and scientific computing.

In computing, an optimizing compiler is a compiler that tries to minimize or maximize some attributes of an executable computer program. Common requirements are to minimize a program's execution time, memory footprint, storage size, and power consumption.

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems, but in most cases a thread is a component of a process. Multiple threads can exist within one process, executing concurrently and sharing resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its executable code and the values of its dynamically allocated variables and non-thread-local global variables at any given time.

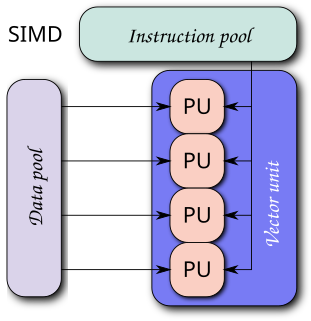

Single instruction, multiple data (SIMD) is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously. Such machines exploit data level parallelism, but not concurrency: there are simultaneous (parallel) computations, but only a single process (instruction) at a given moment. SIMD is particularly applicable to common tasks such as adjusting the contrast in a digital image or adjusting the volume of digital audio. Most modern CPU designs include SIMD instructions to improve the performance of multimedia use. SIMD is not to be confused with SIMT, which utilizes threads.

In computer science, algorithmic efficiency is a property of an algorithm which relates to the number of computational resources used by the algorithm. An algorithm must be analyzed to determine its resource usage, and the efficiency of an algorithm can be measured based on the usage of different resources. Algorithmic efficiency can be thought of as analogous to engineering productivity for a repeating or continuous process.

Parallel computing is a type of computation where many calculations or the execution of processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

In computer science, program optimization, code optimization, or software optimization is the process of modifying a software system to make some aspect of it work more efficiently or use fewer resources. In general, a computer program may be optimized so that it executes more rapidly, or to make it capable of operating with less memory storage or other resources, or draw less power.

In computer science, computer engineering and programming language implementations, a stack machine is a mode of computation where execution control is maintained wholly through append (push) and readoff and truncation (pop) of a first-in-last-out memory buffer, known as a stack, requiring very few processor registers. A stack machine is sufficient to coordinate operation of an entire computer and operating system, for example the Burroughs B5000, may define a particular software program, for example the interpreter for Adobe's PostScript print formatting language, or may be used in only part of the execution thread of a program.

In computing, a parallel programming model is an abstraction of parallel computer architecture, with which it is convenient to express algorithms and their composition in programs. The value of a programming model can be judged on its generality: how well a range of different problems can be expressed for a variety of different architectures, and its performance: how efficiently the compiled programs can execute. The implementation of a parallel programming model can take the form of a library invoked from a sequential language, as an extension to an existing language, or as an entirely new language.

Automatic parallelization, also auto parallelization, or autoparallelization refers to converting sequential code into multi-threaded and/or vectorized code in order to use multiple processors simultaneously in a shared-memory multiprocessor (SMP) machine. Fully automatic parallelization of sequential programs is a challenge because it requires complex program analysis and the best approach may depend upon parameter values that are not known at compilation time.

Stream processing is a computer programming paradigm, equivalent to dataflow programming, event stream processing, and reactive programming, that allows some applications to more easily exploit a limited form of parallel processing. Such applications can use multiple computational units, such as the floating point unit on a graphics processing unit or field-programmable gate arrays (FPGAs), without explicitly managing allocation, synchronization, or communication among those units.

In computer programming, explicit parallelism is the representation of concurrent computations by means of primitives in the form of special-purpose directives or function calls. Most parallel primitives are related to process synchronization, communication or task partitioning. As they seldom contribute to actually carry out the intended computation of the program, their computational cost is often considered as parallelization overhead.

In computer science, implicit parallelism is a characteristic of a programming language that allows a compiler or interpreter to automatically exploit the parallelism inherent to the computations expressed by some of the language's constructs. A pure implicitly parallel language does not need special directives, operators or functions to enable parallel execution, as opposed to explicit parallelism.

SIMD within a register (SWAR) is a technique for performing parallel operations on data contained in a processor register. SIMD stands for single instruction, multiple data.

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied on regular data structures like arrays and matrices by working on each element in parallel. It contrasts to task parallelism as another form of parallelism.

In computing, algorithmic skeletons, or parallelism patterns, are a high-level parallel programming model for parallel and distributed computing.

Data-intensive computing is a class of parallel computing applications which use a data parallel approach to process large volumes of data typically terabytes or petabytes in size and typically referred to as big data. Computing applications which devote most of their execution time to computational requirements are deemed compute-intensive, whereas computing applications which require large volumes of data and devote most of their processing time to I/O and manipulation of data are deemed data-intensive.

For several years parallel hardware was only available for distributed computing but recently it is becoming available for the low end computers as well. Hence it has become inevitable for software programmers to start writing parallel applications. It is quite natural for programmers to think sequentially and hence they are less acquainted with writing multi-threaded or parallel processing applications. Parallel programming requires handling various issues such as synchronization and deadlock avoidance. Programmers require added expertise for writing such applications apart from their expertise in the application domain. Hence programmers prefer to write sequential code and most of the popular programming languages support it. This allows them to concentrate more on the application. Therefore, there is a need to convert such sequential applications to parallel applications with the help of automated tools. The need is also non-trivial because large amount of legacy code written over the past few decades needs to be reused and parallelized.

References

- ↑ "Chapel spec (Acknowledgements)" (PDF). Cray Inc. 2015-10-01. Retrieved 2016-01-14.

- ↑ "ZPL Home Page". Archived from the original on 15 January 2013. Retrieved 17 December 2012.