A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have existed supercomputers which can perform over 10¹⁷ FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS).

In computing, floating point operations per second is a measure of computer performance, useful in fields of scientific computations that require floating-point calculations. For such cases, it is a more accurate measure than measuring instructions per second.

Netlib is a repository of software for scientific computing maintained by AT&T, Bell Laboratories, the University of Tennessee and Oak Ridge National Laboratory. Netlib comprises many separate programs and libraries. Most of the code is written in C and Fortran, with some programs in other languages.

Jack Joseph Dongarra is an American computer scientist and mathematician. He is the American University Distinguished Professor of Computer Science in the Electrical Engineering and Computer Science Department at the University of Tennessee. He is a Distinguished Research Staff member in the Computer Science and Mathematics Division at Oak Ridge National Laboratory, holds a Turing Fellowship in the School of Mathematics at the University of Manchester, and is an adjunct professor in the Computer Science Department at Rice University. He served as a faculty fellow at the Texas A&M University Institute for Advanced Study (2014–2018). Dongarra is the founding director of the Innovative Computing Laboratory at the University of Tennessee.

High-performance computing (HPC) uses supercomputers and computer clusters to solve advanced computation problems.

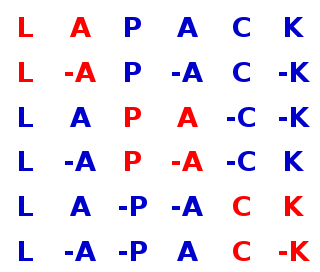

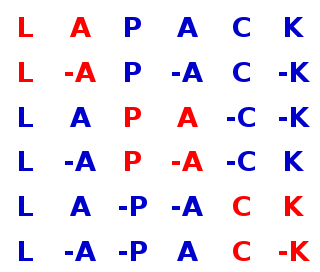

LAPACK is a standard software library for numerical linear algebra. It provides routines for solving systems of linear equations and linear least squares, eigenvalue problems, and singular value decomposition. It also includes routines to implement the associated matrix factorizations such as LU, QR, Cholesky and Schur decomposition. LAPACK was originally written in FORTRAN 77, but moved to Fortran 90 in version 3.2 (2008). The routines handle both real and complex matrices in both single and double precision. LAPACK relies on an underlying BLAS implementation to provide efficient and portable computational building blocks for its routines.
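
As a rough illustration of one of the factorizations named above, the following pure-Python sketch computes a Cholesky factorization and uses it to solve a linear system. The function names `cholesky` and `solve_cholesky` are hypothetical and are not LAPACK routine names; LAPACK's own implementations are far more elaborate and are built on BLAS.

```python
import math


def cholesky(a):
    """Return lower-triangular L with L * L^T == a.

    Assumes `a` is a symmetric positive-definite matrix given as a list of rows.
    """
    n = len(a)
    l = [[0.0] * n for _ in range(n)]
    for j in range(n):
        # Diagonal entry: remove the contribution of entries already in row j.
        d = a[j][j] - sum(l[j][k] ** 2 for k in range(j))
        if d <= 0.0:
            raise ValueError("matrix is not positive definite")
        l[j][j] = math.sqrt(d)
        # Entries below the diagonal in column j.
        for i in range(j + 1, n):
            s = a[i][j] - sum(l[i][k] * l[j][k] for k in range(j))
            l[i][j] = s / l[j][j]
    return l


def solve_cholesky(a, b):
    """Solve a x = b using the factorization a = L * L^T."""
    l = cholesky(a)
    n = len(b)
    # Forward substitution: L y = b.
    y = [0.0] * n
    for i in range(n):
        y[i] = (b[i] - sum(l[i][k] * y[k] for k in range(i))) / l[i][i]
    # Back substitution: L^T x = y.
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(l[k][i] * x[k] for k in range(i + 1, n))) / l[i][i]
    return x
```

Once the factor is available, each additional right-hand side costs only two triangular solves, which is one reason factorization routines are exposed separately from solvers.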

ASCI Red was the first computer built under the Accelerated Strategic Computing Initiative (ASCI), the supercomputing initiative of the United States government created to help the maintenance of the United States nuclear arsenal after the 1992 moratorium on nuclear testing.

Basic Linear Algebra Subprograms (BLAS) is a specification that prescribes a set of low-level routines for performing common linear algebra operations such as vector addition, scalar multiplication, dot products, linear combinations, and matrix multiplication. They are the de facto standard low-level routines for linear algebra libraries; the routines have bindings for both C and Fortran. Although the BLAS specification is general, BLAS implementations are often optimized for speed on a particular machine, so using them can bring substantial performance benefits. BLAS implementations will take advantage of special floating point hardware such as vector registers or SIMD instructions.
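
The kinds of operations listed above can be sketched in a few lines of pure Python. The names below loosely echo common BLAS routine names (`scal`, `axpy`, `dot`, `gemm`), but the signatures are simplified assumptions for illustration and are not the actual BLAS interface; a real implementation would be tuned for the target hardware.

```python
def scal(alpha, x):
    """Scalar multiplication: alpha * x."""
    return [alpha * xi for xi in x]


def axpy(alpha, x, y):
    """Linear combination of two vectors: alpha * x + y."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return [alpha * xi + yi for xi, yi in zip(x, y)]


def dot(x, y):
    """Dot product of two vectors."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(xi * yi for xi, yi in zip(x, y))


def gemm(alpha, a, b, beta, c):
    """Matrix multiplication with scaling: alpha * (a @ b) + beta * c.

    Matrices are lists of rows; `a` is m x k, `b` is k x n, `c` is m x n.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [
            alpha * sum(a[i][k] * b[k][j] for k in range(inner)) + beta * c[i][j]
            for j in range(cols)
        ]
        for i in range(rows)
    ]
```

For example, `axpy(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0])` returns `[12.0, 24.0, 36.0]`. Vector addition is the special case `alpha = 1`.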

Scalable POWERparallel (SP) is a series of supercomputers from IBM. SP systems were part of the IBM RISC System/6000 (RS/6000) family, and were also called the RS/6000 SP. The first model, the SP1, was introduced in February 1993, and new models were introduced throughout the 1990s until the RS/6000 was succeeded by eServer pSeries in October 2000. The SP is a distributed memory system, consisting of multiple RS/6000-based nodes interconnected by an IBM-proprietary switch called the High Performance Switch (HPS). The nodes are clustered using software called PSSP, which is mainly written in Perl.

The TOP500 project ranks and details the 500 most powerful non-distributed computer systems in the world. The project was started in 1993 and publishes an updated list of the supercomputers twice a year. The first of these updates always coincides with the International Supercomputing Conference in June, and the second is presented at the ACM/IEEE Supercomputing Conference in November. The project aims to provide a reliable basis for tracking and detecting trends in high-performance computing and bases rankings on HPL, a portable implementation of the high-performance LINPACK benchmark written in Fortran for distributed-memory computers.

Petascale computing refers to computing systems capable of calculating at least 10¹⁵ floating point operations per second (1 petaFLOPS). Petascale computing allowed faster processing of traditional supercomputer applications. The first system to reach this milestone was the IBM Roadrunner in 2008. Petascale supercomputers are planned to be succeeded by exascale computers.

This list compares various amounts of computing power, measured in instructions per second and organized by order of magnitude in FLOPS.
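
To make the orders of magnitude concrete, the sketch below converts a raw FLOPS figure into the SI-prefixed form used throughout this text. The helper `format_flops` and the `PREFIXES` table are hypothetical names introduced here for illustration only.

```python
# SI prefixes from largest to smallest, as (scale, name) pairs.
PREFIXES = [
    (10**18, "exa"),
    (10**15, "peta"),
    (10**12, "tera"),
    (10**9, "giga"),
    (10**6, "mega"),
    (10**3, "kilo"),
]


def format_flops(flops):
    """Express a FLOPS count using the largest SI prefix that fits."""
    for scale, name in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:g} {name}FLOPS"
    return f"{flops:g} FLOPS"
```

With this helper, `format_flops(10**17)` yields `"100 petaFLOPS"` and `format_flops(10**15)` yields `"1 petaFLOPS"`, matching the thresholds quoted for supercomputers since 2017 and for petascale systems.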

HPC Challenge Benchmark combines several benchmarks to test a number of independent attributes of the performance of high-performance computer (HPC) systems. The project has been co-sponsored by the DARPA High Productivity Computing Systems program, the United States Department of Energy and the National Science Foundation.

The K computer – named for the Japanese word/numeral "kei" (京), meaning 10 quadrillion (10¹⁶) – was a supercomputer manufactured by Fujitsu, installed at the Riken Advanced Institute for Computational Science campus in Kobe, Hyōgo Prefecture, Japan. The K computer was based on a distributed memory architecture with over 80,000 compute nodes. It was used for a variety of applications, including climate research, disaster prevention and medical research. The K computer's operating system was based on the Linux kernel, with additional drivers designed to make use of the computer's hardware.

The LINPACK Benchmarks are a measure of a system's floating-point computing power. Introduced by Jack Dongarra, they measure how fast a computer solves a dense n by n system of linear equations Ax = b, which is a common task in engineering.
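
The idea of the measurement can be sketched in pure Python: build a dense system, time the solve, and divide a nominal operation count by the elapsed time. This is only an illustration under stated assumptions, not the benchmark code itself. The function names are hypothetical, and the count 2n³/3 + 2n² is the figure conventionally quoted for solving a dense system by Gaussian elimination, used here as an assumption.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting.

    Works on a copy, so the inputs are left unchanged.
    """
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Pick the row with the largest entry in this column as the pivot.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def linpack_style_rate(n, seed=0):
    """Return (nominal FLOPS, max error) for one random n by n solve."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [sum(row) for row in a]  # chosen so the exact solution is all ones
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    nominal_ops = (2.0 / 3.0) * n**3 + 2.0 * n**2  # assumed operation count
    max_error = max(abs(xi - 1.0) for xi in x)
    return nominal_ops / elapsed, max_error
```

An interpreted solver like this one will report a rate many orders of magnitude below what optimized libraries achieve on the same hardware; the point is only the structure of the measurement, including the accuracy check on the computed solution.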

The number of traversed edges per second (TEPS) that can be performed by a supercomputer cluster is a measure of both the communications capabilities and computational power of the machine. This is in contrast to the more standard metric of floating-point operations per second (FLOPS), which does not give any weight to the communication capabilities of the machine. The term first entered usage in 2010 with the advent of petascale computing, and has since been measured for many of the world's largest supercomputers.
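
A minimal single-machine sketch of the metric: run a breadth-first search, count the edges it examines, and divide by the elapsed time. The function names are hypothetical, and counting every scanned adjacency entry is a simplifying assumption; actual benchmark rules for which edges count may differ.

```python
from collections import deque
import time


def bfs_count_edges(adj, source):
    """Breadth-first search from `source` over an adjacency-list graph.

    Returns the parent map of reached vertices and the number of
    adjacency entries examined along the way.
    """
    parent = {source: source}
    queue = deque([source])
    edges = 0
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            edges += 1  # every scanned adjacency entry counts as traversed
            if v not in parent:
                parent[v] = u
                queue.append(v)
    return parent, edges


def measure_teps(adj, source):
    """Return (traversed edges per second, number of vertices reached)."""
    start = time.perf_counter()
    parent, edges = bfs_count_edges(adj, source)
    elapsed = time.perf_counter() - start
    teps = edges / elapsed if elapsed > 0 else float("inf")
    return teps, len(parent)
```

Each step of the traversal does almost no arithmetic but follows a data-dependent reference to another vertex, which on a cluster often means a message to another node. That is why the metric stresses communication rather than floating-point throughput.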

XK7 is a supercomputing platform, produced by Cray, launched on October 29, 2012. XK7 is the second platform from Cray to use a combination of central processing units ("CPUs") and graphical processing units ("GPUs") for computing; the hybrid architecture requires a different approach to programming from that of CPU-only supercomputers. Laboratories that host XK7 machines host workshops to train researchers in the new programming languages needed for XK7 machines. The platform is used in Titan, the world's second fastest supercomputer in the November 2013 list as ranked by the TOP500 organization. Other customers include the Swiss National Supercomputing Centre, which has a 272-node machine, and Blue Waters, whose machine combines Cray XE6 and XK7 nodes and performs at approximately 1 petaFLOPS (10¹⁵ floating-point operations per second).

The HPCG benchmark is a supercomputing benchmark test proposed by Michael Heroux from Sandia National Laboratories, and Jack Dongarra and Piotr Luszczek from the University of Tennessee. It is intended to model the data access patterns of real-world applications such as sparse matrix calculations, thus testing the effect of limitations of the memory subsystem and internal interconnect of the supercomputer on its computing performance. Because it is internally I/O bound, HPCG testing generally achieves only a tiny fraction of the peak FLOPS the computer could theoretically deliver.
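
The access pattern in question can be illustrated with a small sparse solver. The sketch below stores a matrix in compressed sparse row (CSR) form and runs a plain conjugate gradient iteration on it. It is an assumption-laden toy: the function names are hypothetical, the test matrix is a one-dimensional Laplacian chosen for brevity, and no preconditioner is used, so it should not be read as the benchmark's actual problem or code.

```python
def csr_matvec(values, col_index, row_ptr, x):
    """Multiply a CSR matrix by the vector x."""
    y = []
    for row in range(len(row_ptr) - 1):
        total = 0.0
        for k in range(row_ptr[row], row_ptr[row + 1]):
            # Indirect access x[col_index[k]]: two loads per multiply-add.
            total += values[k] * x[col_index[k]]
        y.append(total)
    return y


def laplacian_1d_csr(n):
    """Build the n x n tridiagonal matrix (-1, 2, -1) in CSR form."""
    values, col_index, row_ptr = [], [], [0]
    for i in range(n):
        for j, v in ((i - 1, -1.0), (i, 2.0), (i + 1, -1.0)):
            if 0 <= j < n:
                values.append(v)
                col_index.append(j)
        row_ptr.append(len(values))
    return values, col_index, row_ptr


def conjugate_gradient(matrix, b, tol=1e-10, max_iter=1000):
    """Solve A x = b for a symmetric positive-definite CSR matrix A."""
    values, col_index, row_ptr = matrix
    x = [0.0] * len(b)
    r = b[:]  # residual b - A x for the initial guess x = 0
    p = r[:]  # search direction
    rs_old = sum(ri * ri for ri in r)
    for _ in range(max_iter):
        if rs_old**0.5 < tol:
            break
        ap = csr_matvec(values, col_index, row_ptr, p)
        alpha = rs_old / sum(pi * api for pi, api in zip(p, ap))
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rs_new = sum(ri * ri for ri in r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x
```

In the inner loop of `csr_matvec`, each multiply-add needs a matrix value, a column index, and an indirectly addressed vector entry, so data movement rather than arithmetic tends to dominate the running time.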

Fugaku (Japanese: 富岳) is a petascale supercomputer at the Riken Center for Computational Science in Kobe, Japan. It started development in 2014 as the successor to the K computer and made its debut in 2020. It is named after an alternative name for Mount Fuji.