In mathematics, specifically in measure theory, a Borel measure on a topological space is a measure that is defined on all open sets. Some authors require additional restrictions on the measure, as described below.

In mathematics, the concept of a measure is a generalization and formalization of geometrical measures and other common notions, such as magnitude, mass, and probability of events. These seemingly distinct concepts have many similarities and can often be treated together in a single mathematical context. Measures are foundational in probability theory and integration theory, and can be generalized to assume negative values, as with electrical charge. Far-reaching generalizations of measure are widely used in quantum physics and physics in general.

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is $f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}$. The parameter $\mu$ is the mean or expectation of the distribution, while the parameter $\sigma^2$ is the variance. The standard deviation of the distribution is $\sigma$ (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate.
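As a rough illustration, the Gaussian density can be evaluated directly from its mean and standard deviation. The sketch below is only an example: the names `normal_pdf` and `integrate` are made up for illustration, and the midpoint rule is just one simple way to check numerically that the density integrates to about 1.

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def integrate(f, a, b, n=20000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

# Integrate over mu +/- 10 sigma; the mass outside this range is negligible.
total = integrate(lambda x: normal_pdf(x, mu=1.0, sigma=2.0), -19.0, 21.0)
print(total)  # expected to be very close to 1
```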

In linear algebra and functional analysis, a spectral theorem is a result about when a linear operator or matrix can be diagonalized. This is extremely useful because computations involving a diagonalizable matrix can often be reduced to much simpler computations involving the corresponding diagonal matrix. The concept of diagonalization is relatively straightforward for operators on finite-dimensional vector spaces but requires some modification for operators on infinite-dimensional spaces. In general, the spectral theorem identifies a class of linear operators that can be modeled by multiplication operators, which are as simple as one can hope to find. In more abstract language, the spectral theorem is a statement about commutative C*-algebras. See also spectral theory for a historical perspective.
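To make the computational benefit concrete, here is a small sketch for the simplest case, a real symmetric 2×2 matrix [[a, b], [b, d]]. It diagonalizes the matrix with a single rotation and then computes a matrix power by raising only the eigenvalues to that power. The function names are hypothetical, and the rotation-angle formula is the standard closed form for the 2×2 case, not a general-purpose eigenvalue algorithm.

```python
import math

def diagonalize_symmetric_2x2(a, b, d):
    """Return ((lam1, lam2), (c, s)) for the symmetric matrix [[a, b], [b, d]].

    The orthonormal eigenvectors are (c, s) for lam1 and (-s, c) for lam2.
    """
    theta = 0.5 * math.atan2(2.0 * b, a - d)  # angle that zeroes the off-diagonal
    c, s = math.cos(theta), math.sin(theta)
    lam1 = a * c * c + 2.0 * b * c * s + d * s * s  # Rayleigh quotient of (c, s)
    lam2 = a * s * s - 2.0 * b * c * s + d * c * c  # Rayleigh quotient of (-s, c)
    return (lam1, lam2), (c, s)

def power_via_diagonalization(a, b, d, k):
    """Compute A^k as Q diag(lam1^k, lam2^k) Q^T, written out entrywise."""
    (lam1, lam2), (c, s) = diagonalize_symmetric_2x2(a, b, d)
    p1, p2 = lam1 ** k, lam2 ** k
    off = (p1 - p2) * c * s
    return [[p1 * c * c + p2 * s * s, off],
            [off, p1 * s * s + p2 * c * c]]

# A = [[2, 1], [1, 2]] has eigenvalues 3 and 1.
cubed = power_via_diagonalization(2.0, 1.0, 2.0, 3)
print(cubed)
```

Multiplying the matrix out by hand gives A³ = [[14, 13], [13, 14]], which the diagonalized computation should match up to rounding.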

In probability theory and statistics, a standardized moment of a probability distribution is a moment that is normalized, typically by a power of the standard deviation, rendering the moment scale invariant. The shape of different probability distributions can be compared using standardized moments.
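A minimal sketch of the idea, applied to a finite sample rather than a distribution: the k-th central moment is divided by the k-th power of the standard deviation. The helper name `standardized_moment` and the sample values are illustrative assumptions, and the population (divide-by-n) convention is used for simplicity.

```python
def standardized_moment(data, k):
    """k-th central moment of the sample divided by (standard deviation)^k."""
    n = len(data)
    mean = sum(data) / n
    variance = sum((x - mean) ** 2 for x in data) / n  # population variance
    if variance == 0:
        raise ValueError("standardized moments are undefined for constant data")
    central_k = sum((x - mean) ** k for x in data) / n
    return central_k / variance ** (k / 2)

sample = [1.0, 2.0, 3.0, 4.0, 10.0]
rescaled = [10.0 * x + 5.0 for x in sample]  # positive rescaling plus a shift

skew_original = standardized_moment(sample, 3)
skew_rescaled = standardized_moment(rescaled, 3)
print(skew_original, skew_rescaled)  # the two values should agree up to rounding
```

Shifting the data or multiplying it by a positive constant leaves every standardized moment unchanged; a negative scale factor flips the sign of the odd-order ones.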

In calculus and real analysis, absolute continuity is a smoothness property of functions that is stronger than continuity and uniform continuity. The notion of absolute continuity allows one to obtain generalizations of the relationship between the two central operations of calculus: differentiation and integration. This relationship is commonly characterized in the framework of Riemann integration, but with absolute continuity it may be formulated in terms of Lebesgue integration. For real-valued functions on the real line, two interrelated notions appear: absolute continuity of functions and absolute continuity of measures. These two notions are generalized in different directions. The usual derivative of a function is related to the Radon–Nikodym derivative, or density, of a measure. We have the following chains of inclusions for functions over a compact subset of the real line: absolutely continuous ⊆ uniformly continuous = continuous, and, for a compact interval, continuously differentiable ⊆ Lipschitz continuous ⊆ absolutely continuous ⊆ bounded variation ⊆ differentiable almost everywhere.

In mathematics, the Radon–Nikodym theorem is a result in measure theory that expresses the relationship between two measures defined on the same measurable space. A measure is a set function that assigns a consistent magnitude to the measurable subsets of a measurable space. Examples of a measure include area and volume, where the subsets are sets of points; or the probability of an event, which is a subset of possible outcomes within a wider probability space.

In mathematics, the moments of a function are certain quantitative measures related to the shape of the function's graph. If the function represents mass density, then the zeroth moment is the total mass, the first moment is the center of mass, and the second moment is the moment of inertia. If the function is a probability distribution, then the first moment is the expected value, the second central moment is the variance, the third standardized moment is the skewness, and the fourth standardized moment is the kurtosis.
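The mass-density reading can be illustrated with a handful of point masses on a line. This is a sketch only: the helper `raw_moment`, the positions, and the masses are made-up example values, and the moment of inertia is taken about the origin.

```python
def raw_moment(positions, masses, k):
    """k-th raw moment of point masses: sum of m * x**k."""
    return sum(m * x ** k for x, m in zip(positions, masses))

positions = [0.0, 1.0, 2.0]
masses = [1.0, 2.0, 1.0]

total_mass = raw_moment(positions, masses, 0)                     # zeroth moment
center_of_mass = raw_moment(positions, masses, 1) / total_mass   # normalized first moment
inertia_about_origin = raw_moment(positions, masses, 2)          # second moment

print(total_mass, center_of_mass, inertia_about_origin)  # 4.0 1.0 6.0
```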

In probability theory and mathematical physics, a random matrix is a matrix-valued random variable—that is, a matrix in which some or all of its entries are sampled randomly from a probability distribution. Random matrix theory (RMT) is the study of properties of random matrices, often as they become large. RMT provides techniques like mean-field theory, diagrammatic methods, the cavity method, or the replica method to compute quantities like traces, spectral densities, or scalar products between eigenvectors. Many physical phenomena, such as the spectrum of nuclei of heavy atoms, the thermal conductivity of a lattice, or the emergence of quantum chaos, can be modeled mathematically as problems concerning large, random matrices.

In statistics and information theory, a maximum entropy probability distribution has entropy that is at least as great as that of all other members of a specified class of probability distributions. According to the principle of maximum entropy, if nothing is known about a distribution except that it belongs to a certain class, then the distribution with the largest entropy should be chosen as the least-informative default. The motivation is twofold: first, maximizing entropy minimizes the amount of prior information built into the distribution; second, many physical systems tend to move towards maximal entropy configurations over time.
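For a concrete case, take the class of all distributions on four outcomes with no further constraints; the uniform distribution then has the largest Shannon entropy, log 4. The sketch below compares it with an arbitrary non-uniform example. The function name and the example probabilities are illustrative assumptions.

```python
import math

def shannon_entropy(probabilities):
    """Shannon entropy in nats of a finite probability vector."""
    if any(p < 0 for p in probabilities) or abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must be non-negative and sum to 1")
    # Terms with p == 0 contribute nothing (the limit of p*log(p) is 0).
    return -sum(p * math.log(p) for p in probabilities if p > 0)

uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]

h_uniform = shannon_entropy(uniform)
h_skewed = shannon_entropy(skewed)
print(h_uniform, h_skewed)  # the uniform value equals log(4) and is the larger one
```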

In mathematics, the Hamburger moment problem, named after Hans Ludwig Hamburger, is formulated as follows: given a sequence $(m_0, m_1, m_2, \ldots)$, does there exist a positive Borel measure $\mu$ (for instance, the measure determined by the cumulative distribution function of a random variable) on the real line such that $m_n = \int_{-\infty}^{\infty} x^n \, d\mu(x)$ for every $n = 0, 1, 2, \ldots$?

In linear algebra, Weyl's inequality is a theorem about the changes to eigenvalues of an Hermitian matrix that is perturbed. It can be used to estimate the eigenvalues of a perturbed Hermitian matrix.
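One commonly stated form of the inequality, for Hermitian matrices A and B with eigenvalues sorted in ascending order, is λ_i(A) + λ_min(B) ≤ λ_i(A + B) ≤ λ_i(A) + λ_max(B). The sketch below checks this numerically for real symmetric 2×2 matrices, where the eigenvalues have a closed form. The tuple representation (a, b, d) for [[a, b], [b, d]] and the function names are assumptions made for this example.

```python
import math

def eigenvalues_sym_2x2(a, b, d):
    """Eigenvalues of [[a, b], [b, d]] in ascending order."""
    mid = 0.5 * (a + d)
    radius = math.hypot(0.5 * (a - d), b)
    return (mid - radius, mid + radius)

def weyl_bounds_hold(A, B, tol=1e-12):
    """Check lam_i(A) + lam_min(B) <= lam_i(A + B) <= lam_i(A) + lam_max(B)."""
    lam_a = eigenvalues_sym_2x2(*A)
    lam_b = eigenvalues_sym_2x2(*B)
    lam_sum = eigenvalues_sym_2x2(*(x + y for x, y in zip(A, B)))
    return all(
        lam_a[i] + lam_b[0] - tol <= lam_sum[i] <= lam_a[i] + lam_b[1] + tol
        for i in range(2)
    )

A = (2.0, 1.0, 2.0)    # eigenvalues 1 and 3
B = (0.1, -0.2, 0.0)   # a small symmetric perturbation
print(weyl_bounds_hold(A, B))
```

Read this way, the eigenvalues of the perturbed matrix A + B can move away from those of A by no more than the extreme eigenvalues of the perturbation B.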

In mathematics, the Brunn–Minkowski theorem is an inequality relating the volumes of compact subsets of Euclidean space. The original version of the Brunn–Minkowski theorem applied to convex sets; the generalization to compact nonconvex sets stated here is due to Lazar Lyusternik (1935).
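In its usual form the inequality reads vol(A + B)^(1/n) ≥ vol(A)^(1/n) + vol(B)^(1/n) for nonempty compact sets A and B in n-dimensional space, where A + B is the Minkowski sum. Axis-aligned boxes make this easy to check by hand, because the Minkowski sum of two boxes is the box whose side lengths are the sums of the corresponding sides. The sketch below uses that special case; the function names and side lengths are illustrative only.

```python
import math

def box_volume(sides):
    """Volume of an axis-aligned box given its side lengths."""
    return math.prod(sides)

def brunn_minkowski_gap(sides_a, sides_b):
    """vol(A+B)^(1/n) - (vol(A)^(1/n) + vol(B)^(1/n)) for axis-aligned boxes."""
    n = len(sides_a)
    sum_sides = [x + y for x, y in zip(sides_a, sides_b)]
    lhs = box_volume(sum_sides) ** (1.0 / n)
    rhs = box_volume(sides_a) ** (1.0 / n) + box_volume(sides_b) ** (1.0 / n)
    return lhs - rhs

gap_general = brunn_minkowski_gap([1.0, 4.0], [4.0, 1.0])  # differently shaped boxes
gap_scaled = brunn_minkowski_gap([1.0, 2.0], [2.0, 4.0])   # B is a scaled copy of A
print(gap_general, gap_scaled)
```

The gap is strictly positive for the differently shaped boxes and vanishes, up to rounding, when one box is a scaled copy of the other.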

In probability theory and statistics, the normal-inverse-gamma distribution is a four-parameter family of multivariate continuous probability distributions. It is the conjugate prior of a normal distribution with unknown mean and variance.

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time if these events occur with a known constant mean rate and independently of the time since the last event. It can also be used for the number of events in other types of intervals than time, and in dimension greater than 1.
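As a small worked example, the probability of observing exactly k events is rate^k · e^(−rate) / k!, where the rate is the expected number of events in the interval. The sketch below evaluates this and checks that the probabilities sum to about 1 and that the mean is about equal to the rate. The function name and the rate value 3.0 are arbitrary choices for illustration.

```python
import math

def poisson_pmf(k, rate):
    """Probability of exactly k events when the expected count is `rate`."""
    if k < 0 or rate <= 0:
        raise ValueError("k must be non-negative and rate must be positive")
    return rate ** k * math.exp(-rate) / math.factorial(k)

rate = 3.0
probabilities = [poisson_pmf(k, rate) for k in range(60)]  # tail beyond 60 is negligible

total = sum(probabilities)
mean = sum(k * p for k, p in enumerate(probabilities))
print(total, mean)  # expected to be close to 1 and to the rate, respectively
```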

In the mathematical theory of random matrices, the Marchenko–Pastur distribution, or Marchenko–Pastur law, describes the asymptotic behavior of singular values of large rectangular random matrices. The theorem is named after Soviet mathematicians Volodymyr Marchenko and Leonid Pastur, who proved this result in 1967.
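For orientation, a commonly stated form of the limiting density, for the eigenvalues of a sample covariance matrix (that is, suitably rescaled squared singular values) with aspect ratio 0 < ratio ≤ 1 and entry variance sigma2, is supported on [sigma2·(1 − √ratio)², sigma2·(1 + √ratio)²]. The sketch below evaluates that form and checks numerically that it integrates to about 1. The parameter names `ratio` and `sigma2` and the helper functions are assumptions made for this example, not notation from the text above.

```python
import math

def mp_support(ratio, sigma2=1.0):
    """Endpoints of the support for aspect ratio 0 < ratio <= 1."""
    root = math.sqrt(ratio)
    return sigma2 * (1.0 - root) ** 2, sigma2 * (1.0 + root) ** 2

def marchenko_pastur_pdf(x, ratio, sigma2=1.0):
    """Marchenko-Pastur density at x (zero outside the support)."""
    lo, hi = mp_support(ratio, sigma2)
    if x <= lo or x >= hi:
        return 0.0
    return math.sqrt((hi - x) * (x - lo)) / (2.0 * math.pi * sigma2 * ratio * x)

def integrate(f, a, b, n=20000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

ratio = 0.5
lo, hi = mp_support(ratio)
mass = integrate(lambda x: marchenko_pastur_pdf(x, ratio), lo, hi)
mean = integrate(lambda x: x * marchenko_pastur_pdf(x, ratio), lo, hi)
print(mass, mean)  # both expected to be close to 1 when sigma2 = 1
```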

In mathematics, especially measure theory, a set function is a function whose domain is a family of subsets of some given set and that (usually) takes its values in the extended real number line, which consists of the real numbers and ±∞.

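In mathematical analysis, Krein's condition provides a necessary and sufficient condition for exponential sums of the form $\sum_{k=1}^{n} a_k \exp(i \lambda_k x)$, with $a_k \in \mathbb{C}$ and $\lambda_k \geq 0$, to be dense in a weighted L2 space on the real line.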

In probability theory and statistics, the generalized multivariate log-gamma (G-MVLG) distribution is a multivariate distribution introduced by Demirhan and Hamurkaroglu in 2011. The G-MVLG is a flexible distribution: its skewness and kurtosis are well controlled by its parameters, which in turn enables one to control the dispersion of the distribution. Because of this property, the distribution is effectively used as a joint prior distribution in Bayesian analysis, especially when the likelihood is not from the location-scale family of distributions, such as the normal distribution.

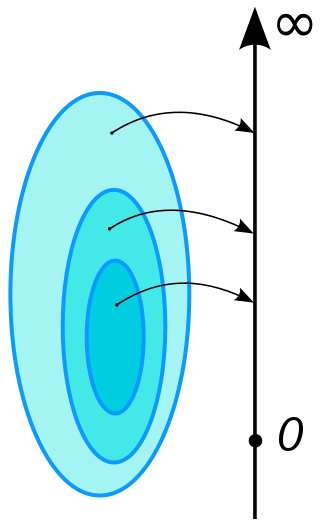

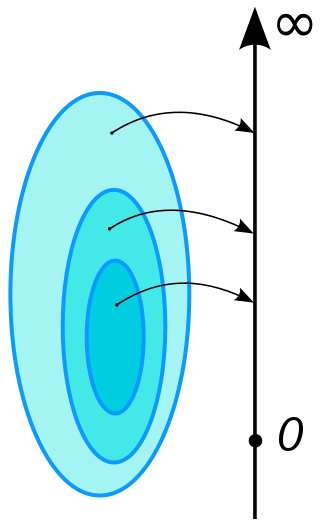

In measure and probability theory in mathematics, a convex measure is a probability measure that — loosely put — does not assign more mass to any intermediate set "between" two measurable sets A and B than it does to A or B individually. There are multiple ways in which the comparison between the probabilities of A and B and the intermediate set can be made, leading to multiple definitions of convexity, such as log-concavity, harmonic convexity, and so on. The mathematician Christer Borell was a pioneer of the detailed study of convex measures on locally convex spaces in the 1970s.