A semantic network, or frame network, is a knowledge base that represents semantic relations between concepts in a network. It is often used as a form of knowledge representation. It is a directed or undirected graph consisting of vertices, which represent concepts, and edges, which represent semantic relations between concepts, mapping or connecting semantic fields. A semantic network may be instantiated as, for example, a graph database or a concept map. Typical standardized semantic networks are expressed as semantic triples.
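As a minimal sketch of the triple representation, the following stores a tiny network as (subject, relation, object) triples and indexes it as a directed, labeled graph. The concepts, the relation names (`is_a`, `has_part`, `has_color`), and the helper functions are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical toy triples (subject, relation, object); not taken from any real dataset.
TRIPLES = [
    ("canary", "is_a", "bird"),
    ("bird", "is_a", "animal"),
    ("bird", "has_part", "wing"),
    ("canary", "has_color", "yellow"),
]


def build_network(triples):
    """Index triples as a directed, labeled graph: subject -> [(relation, object)]."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


def related(graph, concept, relation):
    """Return the concepts reached from `concept` by one edge labeled `relation`."""
    return [obj for rel, obj in graph.get(concept, []) if rel == relation]


network = build_network(TRIPLES)
print(related(network, "bird", "is_a"))
print(related(network, "canary", "has_color"))
```

Vertices here are plain strings and each edge carries its relation as a label, which mirrors the vertex-and-edge description above.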

WordNet is a lexical database that links words through semantic relations, including synonymy, hyponymy, and meronymy. The synonyms are grouped into synsets with short definitions and usage examples. It can thus be seen as a combination and extension of a dictionary and thesaurus. While it is accessible to human users via a web browser, its primary use is in automatic text analysis and artificial intelligence applications. It was first created in the English language, and the English WordNet database and software tools have been released under a BSD-style license and are freely available for download from the WordNet website. There are now WordNets in more than 200 languages.

Semantic properties or meaning properties are those aspects of a linguistic unit, such as a morpheme, word, or sentence, that contribute to the meaning of that unit. Basic semantic properties include being meaningful or meaningless – for example, whether a given word is part of a language's lexicon with a generally understood meaning; polysemy, having multiple, typically related, meanings; ambiguity, having meanings which aren't necessarily related; and anomaly, where the elements of a unit are semantically incompatible with each other, although possibly grammatically sound. Beyond the expression itself, there are higher-level semantic relations that describe the relationship between units: these include synonymy, antonymy, and hyponymy.

Word-sense disambiguation (WSD) is the process of identifying which sense of a word is meant in a sentence or other segment of context. In human language processing and cognition, it is usually subconscious and automatic, but it can come to conscious attention when ambiguity impairs clarity of communication, given the pervasive polysemy in natural language. In computational linguistics, it is an open problem that affects other language-processing tasks, such as discourse analysis, improving the relevance of search engines, anaphora resolution, coherence, and inference.
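One classic family of approaches picks the sense whose dictionary gloss shares the most words with the surrounding context (a simplified variant of the Lesk algorithm). The sketch below assumes a hypothetical two-sense inventory for "bank" with made-up glosses and a small hand-picked stopword list; it is an illustration of the idea, not a description of any particular system.

```python
# Hypothetical sense inventory with illustrative glosses (not from a real dictionary).
SENSES = {
    "bank": {
        "bank_financial": "an institution that accepts deposits and lends money",
        "bank_river": "sloping land beside a body of water such as a river",
    }
}

# Small, hand-picked stopword list; an assumption made for this example.
STOPWORDS = {"a", "an", "the", "of", "and", "that", "as", "such",
             "to", "in", "on", "at", "he", "she"}


def tokens(text):
    """Lowercase, strip punctuation, and drop stopwords; returns a set of words."""
    return {w.strip(".,;:!?").lower() for w in text.split()} - STOPWORDS


def simplified_lesk(word, sentence):
    """Pick the sense whose gloss overlaps most with the sentence's words."""
    context = tokens(sentence)
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & tokens(gloss))
        if overlap > best_overlap:  # ties keep the sense listed first
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bank", "She sat on the bank of the river and watched the water"))
print(simplified_lesk("bank", "He went to the bank to deposit money"))
```

Exact word overlap is a crude signal: "deposit" does not match "deposits" here, so practical systems typically add stemming or lemmatization and richer context.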

A synonym is a word, morpheme, or phrase that means exactly or nearly the same as another word, morpheme, or phrase in a given language. For example, in the English language, the words begin, start, commence, and initiate are all synonyms of one another: they are synonymous. The standard test for synonymy is substitution: one form can be replaced by another in a sentence without changing its meaning. Words are considered synonymous in only one particular sense: for example, long and extended in the context long time or extended time are synonymous, but long cannot be used in the phrase extended family. Synonyms with exactly the same meaning share a seme or denotational sememe, whereas those with inexactly similar meanings share a broader denotational or connotational sememe and thus overlap within a semantic field. The former are sometimes called cognitive synonyms and the latter, near-synonyms, plesionyms or poecilonyms.

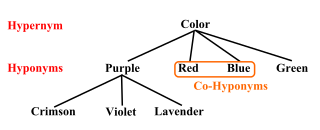

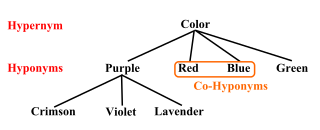

Hyponymy and hypernymy are the semantic relations between a term belonging to a set and the more general term that defines that set. In other words, they describe the relationship between a subtype (the hyponym) and its supertype (the hypernym). The semantic field of the hyponym is included within that of the hypernym. For example, pigeon, crow, and eagle are all hyponyms of bird, their hypernym.
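Because the hyponym's semantic field is included in the hypernym's, the relation is commonly treated as transitive. A minimal sketch, using the bird example above plus one assumed extra link (`bird` to `animal`) and hypothetical helper names:

```python
# Each term maps to its direct hypernym. The "bird" -> "animal" link is an
# assumption added here to show transitivity; the other links follow the text.
HYPERNYM = {
    "pigeon": "bird",
    "crow": "bird",
    "eagle": "bird",
    "bird": "animal",
}


def hypernym_chain(term):
    """Return all hypernyms of `term`, from most specific to most general."""
    chain = []
    while term in HYPERNYM:
        term = HYPERNYM[term]
        chain.append(term)
    return chain


def is_hyponym_of(term, candidate):
    """True if `candidate` is a direct or indirect hypernym of `term`."""
    return candidate in hypernym_chain(term)


print(hypernym_chain("pigeon"))
print(is_hyponym_of("eagle", "animal"))
```

This assumes each term has a single direct hypernym; real lexical resources often allow several, which turns the chain into a graph traversal.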

Lexical semantics, as a subfield of linguistic semantics, is the study of word meanings. It includes the study of how words structure their meaning, how they act in grammar and compositionality, and the relationships between the distinct senses and uses of a word.

In knowledge representation and ontology components, including for object-oriented programming and design, is-a is a subsumption relationship between abstractions, wherein one class A is a subclass of another class B. In other words, type A is a subtype of type B when A's specification implies B's specification. That is, any object that satisfies A's specification also satisfies B's specification, because B's specification is weaker.
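In an object-oriented language, the relationship can be sketched with class inheritance. The class names `Bird` and `Pigeon` and the function `describe` below are hypothetical; the point is only that code written against the weaker specification (B) accepts any object satisfying the stronger one (A).

```python
class Bird:
    """Plays the role of class B: the weaker, more general specification."""

    def move(self) -> str:
        return "moves"


class Pigeon(Bird):
    """Plays the role of class A: every Pigeon is-a Bird."""

    def move(self) -> str:
        return "flies"


def describe(bird: Bird) -> str:
    """Written against Bird's specification only, so any subtype is accepted."""
    return f"{type(bird).__name__} {bird.move()}"


print(issubclass(Pigeon, Bird))
print(describe(Pigeon()))
```

The relation is not symmetric: `issubclass(Bird, Pigeon)` is false, since a `Bird` need not satisfy the stronger `Pigeon` specification.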

Dependency grammar (DG) is a class of modern grammatical theories that are all based on the dependency relation and that can be traced back primarily to the work of Lucien Tesnière. Dependency is the notion that linguistic units, e.g. words, are connected to each other by directed links. The (finite) verb is taken to be the structural center of clause structure. All other syntactic units (words) are either directly or indirectly connected to the verb in terms of the directed links, which are called dependencies. Dependency grammar differs from phrase structure grammar in that while it can identify phrases it tends to overlook phrasal nodes. A dependency structure is determined by the relation between a word and its dependents. Dependency structures are flatter than phrase structures in part because they lack a finite verb phrase constituent, and they are thus well suited for the analysis of languages with free word order, such as Czech or Warlpiri.
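A dependency structure can be stored compactly as one head index per word. The sketch below uses a hypothetical example sentence and one plausible analysis in which determiners depend on their nouns (some dependency frameworks analyze determiners differently); the function names are illustrative.

```python
# Hypothetical example sentence and one plausible dependency analysis.
WORDS = ["The", "dog", "chased", "a", "cat"]
# HEADS[i] is the index of the head of WORDS[i]; -1 marks the root (the finite verb).
HEADS = [1, 2, -1, 4, 2]


def root(heads):
    """Index of the structural center of the clause."""
    return heads.index(-1)


def dependents(heads, head):
    """Indices of the words directly governed by `head`."""
    return [i for i, h in enumerate(heads) if h == head]


def path_to_root(heads, i):
    """Follow directed links from word `i` up to the root."""
    path = [i]
    while heads[i] != -1:
        i = heads[i]
        path.append(i)
    return path


print(WORDS[root(HEADS)])
print([WORDS[i] for i in dependents(HEADS, root(HEADS))])
print([WORDS[i] for i in path_to_root(HEADS, 0)])
```

There is one entry per word and no separate phrasal nodes, which is one way to see why such structures are flatter than phrase structures.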

In linguistics, a word sense is one of the meanings of a word. For example, a dictionary may have over 50 different senses of the word "play", each of these having a different meaning based on the context of the word's usage in a sentence, as follows:

We went to see the play Romeo and Juliet at the theater.

The coach devised a great play that put the visiting team on the defensive.

The children went out to play in the park.

In linguistics, semantic analysis is the process of relating syntactic structures, from the levels of phrases, clauses, sentences and paragraphs to the level of the writing as a whole, to their language-independent meanings. It also involves removing features specific to particular linguistic and cultural contexts, to the extent that such a project is possible. The elements of idiom and figurative speech, being cultural, are often also converted into relatively invariant meanings in semantic analysis. Semantics, although related to pragmatics, is distinct in that the former deals with word or sentence choice in any given context, while pragmatics considers the unique or particular meaning derived from context or tone. To reiterate in different terms, semantics is about universally coded meaning, and pragmatics, the meaning encoded in words that is then interpreted by an audience.

A semantic lexicon is a digital dictionary of words labeled with semantic classes so associations can be drawn between words that have not previously been encountered. Semantic lexicons are built upon semantic networks, which represent the semantic relations between words. The difference between a semantic lexicon and a semantic network is that a semantic lexicon has a definition, or "gloss", for each word.

In linguistics, a semantic field is a lexical set of words grouped semantically that refers to a specific subject. The term is also used in anthropology, computational semiotics, and technical exegesis.

A sequence of semantically related, ordered words is classified as a lexical chain. A lexical chain is a sequence of related words in writing, spanning short or long distances. A chain is independent of the grammatical structure of the text and in effect it is a list of words that captures a portion of the cohesive structure of the text. A lexical chain can provide a context for the resolution of an ambiguous term and enable identification of the concept that the term represents.
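A simple greedy procedure illustrates the idea: each word joins the first existing chain that contains a related word, or starts a new chain. The relatedness table below is hypothetical and hand-written; a real system would more likely derive relatedness from a lexical resource.

```python
# Hypothetical, hand-written relatedness pairs (unordered).
RELATED = {
    frozenset(pair)
    for pair in [
        ("car", "engine"),
        ("car", "wheel"),
        ("engine", "fuel"),
        ("river", "water"),
        ("water", "boat"),
    ]
}


def are_related(a, b):
    """Two words are related if identical or listed together in RELATED."""
    return a == b or frozenset((a, b)) in RELATED


def build_chains(words):
    """Greedily append each word to the first chain with a related member."""
    chains = []
    for word in words:
        for chain in chains:
            if any(are_related(word, member) for member in chain):
                chain.append(word)
                break
        else:  # no existing chain accepted the word
            chains.append([word])
    return chains


text_words = ["car", "river", "engine", "water", "fuel", "boat"]
print(build_chains(text_words))
```

The chains ignore grammatical structure and word distance entirely, consistent with the description above; the two resulting chains separate the vehicle-related words from the water-related ones.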

Christiane D. Fellbaum is an American linguist and computational linguistics researcher who is Lecturer with Rank of Professor in the Program in Linguistics and the Computer Science Department at Princeton University. The co-developer of the WordNet project, she is also its current director.

Taxonomy is the practice and science of categorization or classification.

BabelNet is a multilingual lexicalized semantic network and ontology developed at the NLP group of the Sapienza University of Rome. BabelNet was automatically created by linking Wikipedia to the most popular computational lexicon of the English language, WordNet. The integration is done using an automatic mapping and by filling in lexical gaps in resource-poor languages by using statistical machine translation. The result is an encyclopedic dictionary that provides concepts and named entities lexicalized in many languages and connected with large amounts of semantic relations. Additional lexicalizations and definitions are added by linking to free-license wordnets, OmegaWiki, the English Wiktionary, Wikidata, FrameNet, VerbNet and others. Similarly to WordNet, BabelNet groups words in different languages into sets of synonyms, called Babel synsets. For each Babel synset, BabelNet provides short definitions in many languages harvested from both WordNet and Wikipedia.

Automatic taxonomy construction (ATC) is the use of software programs to generate taxonomical classifications from a body of texts called a corpus. ATC is a branch of natural language processing, which in turn is a branch of artificial intelligence.

The Bulgarian WordNet (BulNet) is an electronic multilingual dictionary of synonym sets along with their explanatory definitions and sets of semantic relations with other words in the language.

Malayalam WordNet (പദശൃംഖല) is an online WordNet created for the Malayalam language. Malayalam WordNet has been developed by the Department of Computer Science, Cochin University of Science and Technology.