In mathematics, the discrete Fourier transform (DFT) converts a finite sequence of equally-spaced samples of a function into a same-length sequence of equally-spaced samples of the discrete-time Fourier transform (DTFT), which is a complex-valued function of frequency. The interval at which the DTFT is sampled is the reciprocal of the duration of the input sequence. An inverse DFT (IDFT) is a Fourier series, using the DTFT samples as coefficients of complex sinusoids at the corresponding DTFT frequencies. It has the same sample-values as the original input sequence. The DFT is therefore said to be a frequency domain representation of the original input sequence. If the original sequence spans all the non-zero values of a function, its DTFT is continuous, and the DFT provides discrete samples of one cycle. If the original sequence is one cycle of a periodic function, the DFT provides all the non-zero values of one DTFT cycle.
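The round trip described above (DFT, then IDFT recovering the original samples) can be sketched in a few lines. This is a minimal illustration, not an efficient implementation: the negative-exponent sign convention and the placement of the 1/N factor on the inverse are assumptions (a common convention), and the names `dft`, `idft`, and `samples` are chosen here for illustration only.

```python
import cmath

def dft(x):
    """Direct O(N^2) DFT: X[k] = sum_n x[n] * exp(-2j*pi*k*n/N)."""
    n_samples = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / n_samples)
            for n in range(n_samples))
        for k in range(n_samples)
    ]

def idft(X):
    """Inverse DFT: a Fourier series with the DFT values as coefficients."""
    n_samples = len(X)
    return [
        sum(X[k] * cmath.exp(2j * cmath.pi * k * n / n_samples)
            for k in range(n_samples)) / n_samples
        for n in range(n_samples)
    ]

samples = [1.0, 2.0, 0.0, -1.0]   # arbitrary example input
spectrum = dft(samples)           # same length as the input
recovered = idft(spectrum)        # matches `samples` up to rounding error
```

In practice a fast Fourier transform (FFT) routine would replace the double loop; the direct sum is shown only because it mirrors the definition.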

In mathematics, Fourier analysis is the study of the way general functions may be represented or approximated by sums of simpler trigonometric functions. Fourier analysis grew from the study of Fourier series, and is named after Joseph Fourier, who showed that representing a function as a sum of trigonometric functions greatly simplifies the study of heat transfer.

Pink noise, 1⁄f noise or fractal noise is a signal or process with a frequency spectrum such that the power spectral density is inversely proportional to the frequency of the signal. In pink noise, each octave interval carries an equal amount of noise energy.
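The equal-energy-per-octave property follows directly from the 1/f shape. As a short worked step, assuming a power spectral density $S(f) = C/f$ with an arbitrary constant $C$ (a symbol introduced here for illustration), the power in any octave from $f_0$ to $2f_0$ is:

```latex
\int_{f_0}^{2 f_0} S(f)\, df
  = \int_{f_0}^{2 f_0} \frac{C}{f}\, df
  = C \ln\!\left(\frac{2 f_0}{f_0}\right)
  = C \ln 2
```

The result does not depend on $f_0$, so every octave carries the same power.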

In physics and mathematics, the Fourier transform (FT) is a transform that converts a function into a form that describes the frequencies present in the original function. The output of the transform is a complex-valued function of frequency. The term Fourier transform refers to both this complex-valued function and the mathematical operation. When a distinction needs to be made the Fourier transform is sometimes called the frequency domain representation of the original function. The Fourier transform is analogous to decomposing the sound of a musical chord into terms of the intensity of its constituent pitches.

The power spectrum of a time series describes how the signal's power is distributed over the frequency components that compose it. According to Fourier analysis, any physical signal can be decomposed into a number of discrete frequencies, or a spectrum of frequencies over a continuous range. The statistical average of a signal or class of signals, analyzed in terms of its frequency content, is called its spectrum.

In probability theory and statistics, the beta distribution is a family of continuous probability distributions defined on the interval [0, 1] or (0, 1) in terms of two positive parameters, denoted by alpha (α) and beta (β), that appear as exponents of the variable and its complement to 1, respectively, and control the shape of the distribution.
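For reference, the role of the two parameters as exponents can be seen in the standard form of the density. The notation $f$, $B$, and $\Gamma$ is the conventional one and is not taken from the text above:

```latex
f(x;\alpha,\beta) = \frac{x^{\alpha-1}\,(1-x)^{\beta-1}}{B(\alpha,\beta)},
\qquad
B(\alpha,\beta) = \frac{\Gamma(\alpha)\,\Gamma(\beta)}{\Gamma(\alpha+\beta)},
\qquad 0 \le x \le 1
```

Here $\alpha - 1$ is the exponent of the variable $x$ and $\beta - 1$ is the exponent of its complement $1 - x$; the beta function $B(\alpha,\beta)$ normalizes the density to integrate to 1.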

In mathematics, a Gaussian function, often simply referred to as a Gaussian, is a function of the base form $f(x) = \exp(-x^2)$.

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response is of finite duration, because it settles to zero in finite time. This is in contrast to infinite impulse response (IIR) filters, which may have internal feedback and may continue to respond indefinitely.
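The "settles to zero in finite time" property can be checked by feeding a unit impulse through a small FIR filter. The sketch below is illustrative only: the three-tap coefficients and the names `fir_filter`, `taps`, and `impulse` are assumptions, not part of any particular library.

```python
def fir_filter(signal, taps):
    """Feed-forward filter: y[n] = sum_k taps[k] * x[n - k], with x[n] = 0 for n < 0."""
    output = []
    for n in range(len(signal)):
        acc = 0.0
        for k, tap in enumerate(taps):
            if n - k >= 0:
                acc += tap * signal[n - k]
        output.append(acc)
    return output

taps = [0.25, 0.5, 0.25]            # hypothetical smoothing coefficients
impulse = [1.0] + [0.0] * 7         # unit impulse followed by zeros
impulse_response = fir_filter(impulse, taps)
# The response reproduces the taps and is exactly zero afterwards,
# because the filter has no feedback path.
```

An IIR filter, by contrast, would feed earlier outputs back into the sum, so its impulse response could continue indefinitely.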

In signal processing, a periodogram is an estimate of the spectral density of a signal. The term was coined by Arthur Schuster in 1898. Today, the periodogram is a component of more sophisticated methods. It is the most common tool for examining the amplitude vs frequency characteristics of FIR filters and window functions. FFT spectrum analyzers are also implemented as a time-sequence of periodograms.
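A basic periodogram is the squared magnitude of the DFT, scaled by the record length. The sketch below is a minimal version under stated assumptions: the scaling `1 / (N * sample_rate)` is one common convention, no window or one-sided doubling is applied, and the names `periodogram`, `tone_hz`, and `peak_hz` are illustrative.

```python
import cmath
import math

def periodogram(x, sample_rate):
    """Return (frequencies, power) for bins 0..N/2 of a real-valued record."""
    n = len(x)
    freqs = [k * sample_rate / n for k in range(n // 2 + 1)]
    power = []
    for k in range(n // 2 + 1):
        X_k = sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
        power.append(abs(X_k) ** 2 / (n * sample_rate))
    return freqs, power

sample_rate = 64.0   # samples per second (assumed)
n_samples = 64
tone_hz = 8.0        # test tone that falls exactly on a DFT bin
x = [math.sin(2 * math.pi * tone_hz * m / sample_rate) for m in range(n_samples)]

freqs, power = periodogram(x, sample_rate)
peak_index = max(range(len(power)), key=power.__getitem__)
peak_hz = freqs[peak_index]   # frequency of the largest periodogram value
```

Because the test tone sits exactly on a bin, the peak lands at the tone frequency; tones between bins would leak into neighbouring bins, which is one reason the raw periodogram is usually combined with windowing or averaging.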

In mathematics, the continuous wavelet transform (CWT) is a formal tool that provides an overcomplete representation of a signal by letting the translation and scale parameter of the wavelets vary continuously.

In signal processing, time–frequency analysis comprises those techniques that study a signal in both the time and frequency domains simultaneously, using various time–frequency representations. Rather than viewing a 1-dimensional signal and some transform, time–frequency analysis studies a two-dimensional signal – a function whose domain is the two-dimensional real plane, obtained from the signal via a time–frequency transform.

A sensor array is a group of sensors, usually deployed in a certain geometric pattern, used for collecting and processing electromagnetic or acoustic signals. The advantage of using a sensor array over a single sensor is that an array adds new dimensions to the observation, helping to estimate more parameters and improve estimation performance. For example, an array of radio antenna elements used for beamforming can increase antenna gain in the direction of the signal while decreasing the gain in other directions, i.e., increasing the signal-to-noise ratio (SNR) by amplifying the signal coherently. Another application of sensor arrays is estimating the direction of arrival of impinging electromagnetic waves. The related processing method is called array signal processing. A third example is chemical sensor arrays, which use multiple chemical sensors for fingerprint detection in complex mixtures or sensing environments. Applications of array signal processing include radar/sonar, wireless communications, seismology, machine condition monitoring, astronomical observations, fault diagnosis, etc.
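The directional gain of beamforming can be illustrated with a delay-and-sum sketch. Everything specific here is an assumption made for the example: a uniform linear array, a narrowband plane wave, half-wavelength element spacing, and the hypothetical function name `array_response`.

```python
import cmath
import math

def array_response(n_elements, spacing_wavelengths, steer_deg, arrival_deg):
    """Normalized magnitude of a phase-steered uniform linear array.

    Each element's signal is phase-shifted so that a plane wave arriving
    from `steer_deg` adds coherently; waves from other angles add with
    mismatched phases and partially cancel.
    """
    steer = math.radians(steer_deg)
    arrival = math.radians(arrival_deg)
    total = 0j
    for m in range(n_elements):
        phase = 2 * math.pi * spacing_wavelengths * m * (math.sin(arrival) - math.sin(steer))
        total += cmath.exp(1j * phase)
    return abs(total) / n_elements

# Eight elements, half-wavelength spacing, steered toward 20 degrees.
on_target = array_response(8, 0.5, steer_deg=20.0, arrival_deg=20.0)
off_target = array_response(8, 0.5, steer_deg=20.0, arrival_deg=-40.0)
# on_target is the full coherent sum; off_target is strongly attenuated.
```

The coherent sum in the steered direction is what raises the SNR: the signal amplitudes add in phase, while uncorrelated noise at the individual elements does not.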

The autocorrelation technique is a method for estimating the dominating frequency in a complex signal, as well as its variance. Specifically, it calculates the first two moments of the power spectrum, namely the mean and variance. It is also known as the pulse-pair algorithm in radar theory.
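A minimal sketch of the pulse-pair idea follows. It assumes complex (I/Q) samples taken at a fixed interval, uses the phase of the lag-1 autocorrelation for the mean frequency, and uses a commonly quoted expression for the variance based on the ratio of the lag-1 magnitude to the lag-0 value. The function name `pulse_pair` and the exact normalization are assumptions for illustration, not a reference implementation.

```python
import cmath
import math

def pulse_pair(samples, sample_interval):
    """Estimate mean frequency (Hz) and spectral variance ((rad/s)^2)."""
    n = len(samples)
    # Lag-0 autocorrelation: average power.
    r0 = sum(abs(z) ** 2 for z in samples) / n
    # Lag-1 autocorrelation: average product of each sample with the
    # conjugate of its predecessor.
    r1 = sum(samples[i + 1] * samples[i].conjugate() for i in range(n - 1)) / (n - 1)
    mean_omega = cmath.phase(r1) / sample_interval
    var_omega = (2.0 / sample_interval ** 2) * (1.0 - abs(r1) / r0)
    return mean_omega / (2 * math.pi), var_omega

sample_interval = 1e-3   # seconds between samples (assumed)
tone_hz = 50.0           # single complex tone used as test input
samples = [cmath.exp(2j * math.pi * tone_hz * i * sample_interval) for i in range(256)]

mean_hz, var_omega = pulse_pair(samples, sample_interval)
# For a pure tone the mean is the tone frequency and the variance is
# zero up to rounding error.
```

The phase of the lag-1 term is only unambiguous for frequencies below half the sampling rate in magnitude, so higher frequencies alias.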

In electronics and signal processing, mainly in digital signal processing, a Gaussian filter is a filter whose impulse response is a Gaussian function. Gaussian filters produce no overshoot in response to a step input while minimizing the rise and fall time. This behavior is closely connected to the fact that the Gaussian filter has the minimum possible group delay. A Gaussian filter offers the best trade-off between suppressing high frequencies and minimizing spatial spread, because the Gaussian attains the lower bound of the uncertainty principle. These properties are important in areas such as oscilloscopes and digital telecommunication systems.
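The no-overshoot property can be checked numerically with a sampled, truncated Gaussian kernel. This is an approximation of the ideal filter: the truncation radius, the value of sigma, and the helper names `gaussian_kernel` and `convolve_valid` are assumptions for illustration.

```python
import math

def gaussian_kernel(sigma, radius):
    """Sampled Gaussian weights on [-radius, radius], normalized to sum to 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def convolve_valid(signal, kernel):
    """Convolution restricted to positions where the kernel fully overlaps."""
    width = len(kernel)
    return [
        sum(signal[i + j] * kernel[width - 1 - j] for j in range(width))
        for i in range(len(signal) - width + 1)
    ]

kernel = gaussian_kernel(sigma=2.0, radius=6)
step = [0.0] * 20 + [1.0] * 20      # unit step input
response = convolve_valid(step, kernel)
# The response rises smoothly from 0 to 1 and never exceeds the final
# value, because every kernel weight is non-negative.
```

Non-negative weights that sum to 1 make each output a weighted average of the inputs, which is why the step response stays between the step's low and high levels.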

Geophysical survey is the systematic collection of geophysical data for spatial studies. Detection and analysis of geophysical signals form the core of geophysical signal processing. The magnetic and gravitational fields emanating from the Earth's interior hold essential information concerning seismic activities and the internal structure. Hence, detection and analysis of the electric and magnetic fields is crucial. As electromagnetic and gravitational waves are multi-dimensional signals, all the 1-D transformation techniques can be extended to the analysis of these signals as well. Hence this article also discusses multi-dimensional signal processing techniques.

In statistical signal processing, the goal of spectral density estimation (SDE) or simply spectral estimation is to estimate the spectral density of a signal from a sequence of time samples of the signal. Intuitively speaking, the spectral density characterizes the frequency content of the signal. One purpose of estimating the spectral density is to detect any periodicities in the data, by observing peaks at the frequencies corresponding to these periodicities.

Quantitative electroencephalography is a field concerned with the numerical analysis of electroencephalography (EEG) data and associated behavioral correlates.

Multidimensional spectral estimation is a generalization of spectral estimation, normally formulated for one-dimensional signals, to multidimensional signals or multivariate data, such as wave vectors.

In statistics, Whittle likelihood is an approximation to the likelihood function of a stationary Gaussian time series. It is named after the mathematician and statistician Peter Whittle, who introduced it in his PhD thesis in 1951. It is commonly used in time series analysis and signal processing for parameter estimation and signal detection.
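For orientation, the approximation is usually written in the frequency domain. In the sketch below, $I(f_k)$ denotes the periodogram of the data at the Fourier frequencies $f_k$ and $S_\theta(f_k)$ a model spectral density with parameters $\theta$; these symbols are introduced here for illustration, and additive constants and normalization conventions vary between references.

```latex
\ell_W(\theta) \;\approx\; -\sum_{k} \left[ \log S_\theta(f_k) + \frac{I(f_k)}{S_\theta(f_k)} \right]
```

Parameter estimation then amounts to maximizing $\ell_W(\theta)$ over $\theta$, which only requires the periodogram and the model spectrum rather than the full time-domain covariance matrix.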

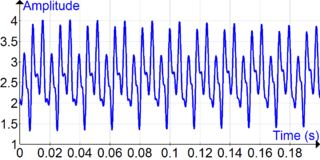

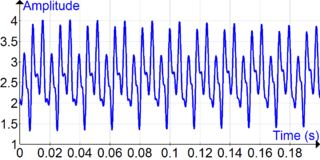

EEG analysis uses mathematical signal analysis methods and computer technology to extract information from electroencephalography (EEG) signals. Its goals are to help researchers gain a better understanding of the brain, to assist physicians in diagnosis and treatment choices, and to advance brain-computer interface (BCI) technology. There are many ways to roughly categorize EEG analysis methods. If a mathematical model is used to fit the sampled EEG signals, the method is categorized as parametric; otherwise, it is non-parametric. Traditionally, most EEG analysis methods fall into four categories: time domain, frequency domain, time-frequency domain, and nonlinear methods. More recent methods include deep neural networks (DNNs).