Related Research Articles

A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, supercomputers have existed that can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS).

A Connection Machine (CM) is a member of a series of massively parallel supercomputers that grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science.

nCUBE was a series of parallel computers from the company of the same name. Early generations of the hardware used a custom microprocessor. With its final generations of servers, nCUBE no longer designed custom microprocessors for its machines, but used server-class chips manufactured by a third party in massively parallel hardware deployments, primarily for on-demand video.

iWarp was an experimental parallel supercomputer architecture developed as a joint project by Intel and Carnegie Mellon University. The project started in 1988, as a follow-up to CMU's previous WARP research project, in order to explore building an entire parallel-computing "node" in a single microprocessor, complete with memory and communications links. In this respect the iWarp is very similar to the INMOS transputer and nCUBE.

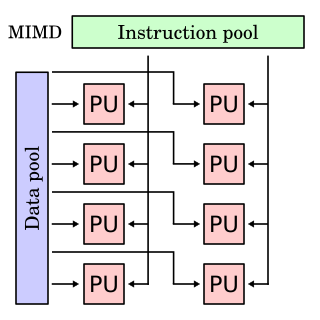

In computing, multiple instruction, multiple data (MIMD) is a technique employed to achieve parallelism. Machines using MIMD have a number of processors that function asynchronously and independently. At any time, different processors may be executing different instructions on different pieces of data.

Cray Inc., a subsidiary of Hewlett Packard Enterprise, is an American supercomputer manufacturer headquartered in Seattle, Washington. It also manufactures systems for data storage and analytics. Several Cray supercomputer systems are listed in the TOP500, which ranks the most powerful supercomputers in the world.

Cleve Barry Moler is an American mathematician and computer programmer specializing in numerical analysis. In the mid to late 1970s, he was one of the authors of LINPACK and EISPACK, Fortran libraries for numerical computing. He invented MATLAB, a numerical computing package, to give his students at the University of New Mexico easy access to these libraries without writing Fortran. In 1984, he co-founded MathWorks with Jack Little to commercialize this program.

The fat tree network is a universal network for provably efficient communication. It was invented by Charles E. Leiserson of the Massachusetts Institute of Technology in 1985. k-ary n-trees, the type of fat tree commonly used in most high-performance networks, were first formalized in 1997.

Floating Point Systems, Inc. (FPS), was a Beaverton, Oregon vendor of attached array processors and minisupercomputers. The company was founded in 1970 by former Tektronix engineer Norm Winningstad, with partners Tom Prince, Frank Bouton and Robert Carter. Carter was a salesman for Data General Corp. who persuaded Bouton and Prince to leave Tektronix to start the new company. Winningstad was the fourth partner.

Basic Linear Algebra Subprograms (BLAS) is a specification that prescribes a set of low-level routines for performing common linear algebra operations such as vector addition, scalar multiplication, dot products, linear combinations, and matrix multiplication. They are the de facto standard low-level routines for linear algebra libraries; the routines have bindings for both C and Fortran. Although the BLAS specification is general, BLAS implementations are often optimized for speed on a particular machine, so using them can bring substantial performance benefits. BLAS implementations will take advantage of special floating point hardware such as vector registers or SIMD instructions.
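As a rough illustration of the operations named above, the following pure-Python sketch mirrors the semantics of three of them: a linear combination (`axpy`), a dot product (`dot`), and a matrix product (the core of `gemm`). The function signatures here are illustrative assumptions, not the actual BLAS interface. Real BLAS routines take explicit dimensions, strides, and scaling factors, and `axpy` updates `y` in place, whereas this sketch returns new lists.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def axpy(alpha: float, x: Vector, y: Vector) -> Vector:
    """Linear combination alpha * x + y (Level 1 'axpy' semantics)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return [alpha * xi + yi for xi, yi in zip(x, y)]


def dot(x: Vector, y: Vector) -> float:
    """Dot product of two equal-length vectors (Level 1 'dot' semantics)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return sum(xi * yi for xi, yi in zip(x, y))


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product a * b (the core of Level 3 'gemm', without scaling)."""
    if not a or not b or any(len(row) != len(b) for row in a):
        raise ValueError("inner dimensions must match")
    columns = list(zip(*b))  # transpose b so each column is a tuple
    return [[sum(r * c for r, c in zip(row, col)) for col in columns]
            for row in a]
```

An optimized implementation computes the same results but arranges the loops to exploit caches, vector registers, and SIMD instructions, which is where the performance benefit comes from.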

In parallel computing, an embarrassingly parallel workload or problem is one where little or no effort is needed to separate the problem into a number of parallel tasks. This is often the case where there is little or no dependency or need for communication between those parallel tasks, or for results between them.
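A minimal sketch of such a workload, assuming a hypothetical task of counting primes below a limit: the range is cut into chunks, each chunk is counted with no communication between tasks, and the only combining step is a final sum. The names `count_primes` and `count_primes_in_range` are made up for this example.

```python
from concurrent.futures import ThreadPoolExecutor
from math import isqrt
from typing import Tuple


def is_prime(n: int) -> bool:
    """Trial-division primality test."""
    if n < 2:
        return False
    return all(n % d != 0 for d in range(2, isqrt(n) + 1))


def count_primes_in_range(bounds: Tuple[int, int]) -> int:
    """Count primes in [lo, hi); needs no data from any other chunk."""
    lo, hi = bounds
    return sum(1 for n in range(lo, hi) if is_prime(n))


def count_primes(limit: int, chunks: int = 4) -> int:
    """Count primes below `limit` by splitting the range into independent tasks."""
    if limit <= 0:
        return 0
    step = max(1, -(-limit // chunks))  # ceiling division
    ranges = [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]
    with ThreadPoolExecutor(max_workers=chunks) as pool:
        # Each chunk runs as a separate task; results are merged only at the end.
        return sum(pool.map(count_primes_in_range, ranges))


print(count_primes(100))  # prints 25
```

Threads keep the sketch simple, but in CPython they will not speed up CPU-bound work like this. `concurrent.futures.ProcessPoolExecutor` offers the same `map` interface and is the usual substitute when real parallel speedup is wanted.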

In computer science, Actor model implementation concerns implementation issues for the Actor model.

The Intel Personal SuperComputer was a product line of parallel computers in the 1980s and 1990s. The iPSC/1 was superseded by the Intel iPSC/2, and then the Intel iPSC/860.

Adam Kazimierz Kolawa was CEO and co-founder of Parasoft, a software company in Monrovia, CA that makes software development tools.

The J–Machine (Jellybean-Machine) was a parallel computer designed by the MIT Concurrent VLSI Architecture group in conjunction with the Intel Corporation. The machine used "jellybean" parts (cheap and multitudinous commodity parts, each with a processor, memory, and a fast communication interface) and a novel network interface to implement fine-grained parallel programs.

China operates a number of supercomputer centers which, altogether, hold a 29.3% performance share of the world's 500 fastest supercomputers. The origins of these centers go back to 1989, when the State Planning Commission, the State Science and Technology Commission and the World Bank jointly launched a project to develop networking and supercomputer facilities in China. In addition to network facilities, the project included three supercomputer centers. China's Sunway TaihuLight ranks third in the TOP500 list.

The term supercomputing arose in the late 1920s in the United States in response to the IBM tabulators at Columbia University. The CDC 6600, released in 1964, is sometimes considered the first supercomputer. However, some earlier computers were considered supercomputers for their day, such as the 1954 IBM NORC and three machines of comparable power: the 1960 UNIVAC LARC, and the IBM 7030 Stretch and the Manchester Atlas, both from 1962.

John Patrick Hayes is an Irish-American computer scientist and electrical engineer, the Claude E. Shannon Chair of Engineering Science at the University of Michigan.

Geoffrey Charles Fox is a British-born American theoretical physicist and computer scientist, and distinguished professor of informatics and computing, and physics at Indiana University.

Arun K. Somani is Associate Dean for Research of the College of Engineering, Distinguished Professor of Electrical and Computer Engineering, and Philip and Virginia Sproul Professor at Iowa State University. Somani was an elected Fellow of the Institute of Electrical and Electronics Engineers (IEEE), for "contributions to theory and applications of computer networks", from 1999 to 2017 and has been a Life Fellow of the IEEE since 2018. He is a Distinguished Engineer of the Association for Computing Machinery (ACM) and an elected Fellow of the American Association for the Advancement of Science (AAAS).

References

- "Cosmic Cubism", Engineering & Science, March 1984. http://calteches.library.caltech.edu/3419/1/Cubism.pdf

- Anderson, A. John (1994). Foundations of Computer Technology. CRC Press. p. 378. ISBN 978-0412598104.

- Cleve Moler (October 28, 2013). "The Intel Hypercube, part 1". Retrieved November 4, 2013.

- History of Supercomputing

- Birth of the Hypercube

- The Torus Routing Chip

- Parallel Computer Archival Documents

- John Apostolakis, Clive Baillie, Robert W. Clayton, Hong Ding, Jon Flower, Geoffrey C. Fox, Thomas D. Gottschalk, Bradford H. Hager, Herbert B. Keller, Adam K. Kolawa, Steve W. Otto, Toshiro Tanimoto, Eric F. van de Velde, J. Barhen, J. R. Einstein, and C. C. Jorgensen. 1989. "Supercomputer applications of the hypercube". In Supercomputing Systems: Architectures, Design, and Performance, Svetlana P. Kartashev and Steven I. Kartashev (Eds.). Van Nostrand Reinhold Co., New York, NY, USA, pp. 480–577.