Operation

The Ctrie data structure is a non-blocking concurrent hash array mapped trie based on single-word compare-and-swap instructions in a shared-memory system. It supports concurrent lookup, insert and remove operations. Just like the hash array mapped trie, it uses the entire 32-bit space for hash values, which keeps the risk of hashcode collisions low. Each node may have up to 32 sub-nodes, but to conserve memory, the list of sub-nodes is represented by a 32-bit bitmap in which each bit indicates the presence of a branch, followed by a non-sparse array (of pointers to sub-nodes) whose length equals the Hamming weight of the bitmap.
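The bitmap-plus-dense-array layout described above can be illustrated with a minimal Python sketch. The helper name `compress` and the use of `None` for an empty slot are assumptions made for this illustration only; they do not come from the Ctrie paper.

```python
def compress(sparse):
    """Pack a 32-slot sparse list (None = empty) into (bitmap, dense array)."""
    bmp, dense = 0, []
    for idx, branch in enumerate(sparse):
        if branch is not None:
            bmp |= 1 << idx        # mark slot idx as occupied
            dense.append(branch)   # store only the occupied slots, in order
    return bmp, dense


# Three occupied slots out of 32 need only a three-element array.
sparse = [None] * 32
sparse[1], sparse[4], sparse[30] = "a", "b", "c"
bmp, dense = compress(sparse)
```

Here the dense array holds three entries, which matches the number of set bits (the Hamming weight) of `bmp`.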

Keys are inserted by performing an atomic compare-and-swap operation on the node that needs to be modified. To ensure that updates are done independently and in the proper order, a special indirection node (an I-node) is inserted between each regular node and its subtries.

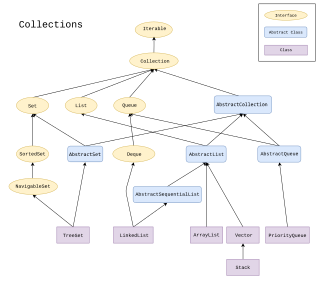

The figure above illustrates the Ctrie insert operation. Trie A is empty: an atomic CAS instruction is used to swap the old node C1 with a new version of C1 that contains the new key k1. If the CAS is not successful, the operation is restarted. If the CAS is successful, we obtain trie B. This procedure is repeated when a new key k2 is added (trie C). If the hashcodes of two keys in the Ctrie collide, as is the case with k2 and k3, the Ctrie must be extended with at least one more level: trie D has a new indirection node I2 with a new node C2 which holds both colliding keys. Further CAS instructions are performed on the contents of the indirection nodes I1 and I2. Such CAS instructions can be done independently of each other, which enables concurrent updates with less contention.

The Ctrie is defined by the pointer to the root indirection node (or a root I-node). The following types of nodes are defined for the Ctrie:

    structure INode {
      main: CNode
    }

    structure CNode {
      bmp: integer
      array: Branch[2^W]
    }

    Branch: INode | SNode

    structure SNode {
      k: KeyType
      v: ValueType
    }

A C-node is a branching node. It typically contains up to 32 branches, so W above is 5. Each branch may either be a key-value pair (represented with an S-node) or another I-node. To avoid wasting 32 entries in the branching array when some branches may be empty, an integer bitmap is used to denote which branches are present and which are empty. The helper method flagpos inspects the relevant hashcode bits for a given level and extracts the value of the corresponding bit in the bitmap to see whether it is set, which indicates whether there is a branch at that position. If the bit is set, it also computes the position of the branch in the branch array. The formula used to do this is:

    bit = bmp & (1 << ((hashcode >> level) & 0x1F))
    pos = bitcount((bit - 1) & bmp)
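As a worked illustration of this formula, the following Python sketch computes the flag and position for a sample hashcode. It is a sketch under stated assumptions: the hashcode is taken to be a non-negative 32-bit integer, and the function returns the unmasked flag (`1 << index`) so that the caller can test `bmp & flag` itself, which is how the insert pseudocode uses it.

```python
def flagpos(hashcode, level, bmp):
    """Return (flag, pos) for the 5 hashcode bits consumed at this level."""
    index = (hashcode >> level) & 0x1F       # which of the 32 slots
    flag = 1 << index                        # single-bit mask for that slot
    pos = bin((flag - 1) & bmp).count("1")   # set bits below the slot
    return flag, pos


bmp = 0b1011   # branches present at slots 0, 1 and 3
hc = 67        # binary 00010 00011: slot 3 at level 0, slot 2 at level 5

flag0, pos0 = flagpos(hc, 0, bmp)   # (8, 2): slot 3 is present, at array index 2
flag5, pos5 = flagpos(hc, 5, bmp)   # (4, 2): slot 2 is absent (bmp & 4 == 0)
```

When the bit is absent, `pos` is the index at which a new branch would be placed in the dense array.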

Note that the operations treat only the I-nodes as mutable nodes - all other nodes are never changed after being created and added to the Ctrie.

The pseudocode below illustrates the insert operation:

    def insert(k, v)
      r = READ(root)
      if iinsert(r, k, v, 0, null) = RESTART insert(k, v)

    def iinsert(i, k, v, lev, parent)
      cn = READ(i.main)
      flag, pos = flagpos(k.hc, lev, cn.bmp)
      if cn.bmp & flag = 0 {
        ncn = cn.inserted(pos, flag, SNode(k, v))
        if CAS(i.main, cn, ncn) return OK
        else return RESTART
      }
      cn.array(pos) match {
        case sin: INode => {
          return iinsert(sin, k, v, lev + W, i)
        }
        case sn: SNode =>
          if sn.k ≠ k {
            nsn = SNode(k, v)
            nin = INode(CNode(sn, nsn, lev + W))
            ncn = cn.updated(pos, nin)
            if CAS(i.main, cn, ncn) return OK
            else return RESTART
          } else {
            ncn = cn.updated(pos, SNode(k, v))
            if CAS(i.main, cn, ncn) return OK
            else return RESTART
          }
      }

The inserted and updated methods on nodes return new versions of the C-node with a value inserted or updated at the specified position, respectively. Note that the insert operation above is tail-recursive, so it can be rewritten as a while loop. Other operations are described in more detail in the original paper on Ctries. [1] [5]
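The insert pseudocode can be followed in a small Python sketch. This is an illustration under several assumptions, not a faithful lock-free implementation: Python has no single-word CAS, so `INode.cas` emulates one with a per-node lock; the helpers `hc`, `pair` and `lookup` are hypothetical names introduced here; and keys whose full 32-bit hashcodes are equal are not handled.

```python
import threading

W = 5  # hashcode bits consumed per level, so up to 2**W = 32 branches


def hc(k):
    return hash(k) & 0xFFFFFFFF  # assumption: 32-bit hashcode from Python's hash


class SNode:
    def __init__(self, k, v):
        self.k, self.v = k, v


class CNode:
    def __init__(self, bmp, array):
        self.bmp, self.array = bmp, tuple(array)  # never mutated after creation

    def inserted(self, pos, flag, branch):
        return CNode(self.bmp | flag, self.array[:pos] + (branch,) + self.array[pos:])

    def updated(self, pos, branch):
        return CNode(self.bmp, self.array[:pos] + (branch,) + self.array[pos + 1:])


class INode:
    def __init__(self, main):
        self.main = main
        self._lock = threading.Lock()  # stand-in for a hardware CAS (assumption)

    def cas(self, expected, new):
        with self._lock:
            if self.main is expected:
                self.main = new
                return True
            return False


def flagpos(hashcode, lev, bmp):
    flag = 1 << ((hashcode >> lev) & 0x1F)
    return flag, bin((flag - 1) & bmp).count("1")


def pair(a, b, lev):
    """Build a C-node holding two S-nodes, nesting deeper while they collide."""
    if lev >= 32:
        raise NotImplementedError("full hashcode collisions are outside this sketch")
    ia, ib = (hc(a.k) >> lev) & 0x1F, (hc(b.k) >> lev) & 0x1F
    if ia == ib:
        return CNode(1 << ia, [INode(pair(a, b, lev + W))])
    return CNode((1 << ia) | (1 << ib), [a, b] if ia < ib else [b, a])


def iinsert(i, k, v, lev):
    cn = i.main
    flag, pos = flagpos(hc(k), lev, cn.bmp)
    if cn.bmp & flag == 0:                        # empty slot: add an S-node
        ncn = cn.inserted(pos, flag, SNode(k, v))
    else:
        branch = cn.array[pos]
        if isinstance(branch, INode):             # descend one level
            return iinsert(branch, k, v, lev + W)
        if branch.k != k:                         # collision: extend the trie
            ncn = cn.updated(pos, INode(pair(branch, SNode(k, v), lev + W)))
        else:                                     # same key: replace the value
            ncn = cn.updated(pos, SNode(k, v))
    return i.cas(cn, ncn)                         # False means RESTART


def insert(root, k, v):
    while not iinsert(root, k, v, 0):             # retry from the root on failure
        pass


def lookup(root, k):
    i, lev = root, 0
    while True:
        cn = i.main
        flag, pos = flagpos(hc(k), lev, cn.bmp)
        if cn.bmp & flag == 0:
            return None
        branch = cn.array[pos]
        if isinstance(branch, INode):
            i, lev = branch, lev + W
        else:
            return branch.v if branch.k == k else None


root = INode(CNode(0, []))
insert(root, 1, "a")
insert(root, 33, "b")   # 1 and 33 share their lowest 5 bits, so a new level is added
insert(root, 1, "c")    # same key: the S-node is replaced
```

Only the `main` field of an I-node is ever reassigned; every C-node and S-node is built fresh and then left unchanged, which is what allows a failed CAS to be handled by simply restarting from the root.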

The data structure has been proven to be correct: [1] Ctrie operations have been shown to be atomic, linearizable and lock-free. The lookup operation can be modified to guarantee wait-freedom.