A central processing unit (CPU), also called a central processor or main processor, is the electronic circuitry within a computer that carries out the instructions of a computer program by performing the basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions. The computer industry has used the term "central processing unit" at least since the early 1960s. Traditionally, the term "CPU" refers to a processor, more specifically to its processing unit and control unit (CU), distinguishing these core elements of a computer from external components such as main memory and I/O circuitry.

Symmetric multiprocessing (SMP) involves a multiprocessor computer hardware and software architecture where two or more identical processors are connected to a single, shared main memory, have full access to all input and output devices, and are controlled by a single operating system instance that treats all processors equally, reserving none for special purposes. Most multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors.

A superscalar processor is a CPU that implements a form of parallelism called instruction-level parallelism within a single processor. In contrast to a scalar processor, which can execute at most one instruction per clock cycle, a superscalar processor can execute more than one instruction during a clock cycle by simultaneously dispatching multiple instructions to different execution units on the processor. It therefore allows for more throughput than would otherwise be possible at a given clock rate. Each execution unit is not a separate processor, but an execution resource within a single CPU, such as an arithmetic logic unit.
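The difference between scalar and superscalar issue can be illustrated with a toy model. The sketch below is not how any real processor is implemented: it assumes in-order issue, a one-cycle latency for every instruction, and a hypothetical instruction format of `(destination, sources)`. The function name `cycles_needed` and the register names are made up for illustration.

```python
from typing import List, Tuple

# Hypothetical instruction format: (destination register, source registers).
Instr = Tuple[str, Tuple[str, ...]]


def cycles_needed(program: List[Instr], issue_width: int) -> int:
    """Count cycles for a toy in-order machine that issues up to
    `issue_width` instructions per cycle (assumed one-cycle latency)."""
    if issue_width < 1:
        raise ValueError("issue_width must be at least 1")
    cycles = 0
    i = 0
    while i < len(program):
        written_this_cycle = set()
        issued = 0
        while i < len(program) and issued < issue_width:
            dest, srcs = program[i]
            # An instruction cannot issue in the same cycle as one that
            # produces a value it reads, or that writes the same register.
            depends = any(s in written_this_cycle for s in srcs)
            if depends or dest in written_this_cycle:
                break
            written_this_cycle.add(dest)
            issued += 1
            i += 1
        cycles += 1
    return cycles


program = [
    ("r1", ("a", "b")),    # r1 = a + b
    ("r2", ("c", "d")),    # r2 = c + d, independent of r1
    ("r3", ("r1", "r2")),  # r3 = r1 * r2, needs both earlier results
    ("r4", ("e", "f")),    # r4 = e - f, independent of r3
]

scalar_cycles = cycles_needed(program, issue_width=1)
dual_issue_cycles = cycles_needed(program, issue_width=2)
print(scalar_cycles, dual_issue_cycles)
```

In this model the scalar machine needs one cycle per instruction, while the dual-issue machine pairs the independent instructions and finishes in half the cycles. A program made entirely of dependent instructions would see no benefit from the wider issue.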

Central processing unit power dissipation or CPU power dissipation is the process in which central processing units (CPUs) consume electrical energy, and dissipate this energy in the form of heat due to the resistance in the electronic circuits.
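As a rough illustration, the switching (dynamic) component of CPU power is often approximated by the first-order CMOS relation P ≈ α·C·V²·f. This relation is not stated in the text above and ignores static leakage; the parameter values below are invented for the example and do not describe any particular chip.

```python
def dynamic_power_watts(activity: float, capacitance_f: float,
                        voltage_v: float, frequency_hz: float) -> float:
    """First-order estimate of switching power: P = alpha * C * V^2 * f.

    activity      -- fraction of the capacitance switched per cycle (0..1)
    capacitance_f -- effective switched capacitance in farads
    voltage_v     -- supply voltage in volts
    frequency_hz  -- clock frequency in hertz
    """
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical values chosen only to show the scaling behaviour.
baseline = dynamic_power_watts(0.2, 1e-8, 1.0, 3e9)
undervolted = dynamic_power_watts(0.2, 1e-8, 0.9, 3e9)
print(f"baseline: {baseline:.2f} W, at 0.9 V: {undervolted:.2f} W")
```

Because voltage enters quadratically in this approximation, a modest voltage reduction lowers the estimated power more than the same relative reduction in frequency would.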

The clock rate typically refers to the frequency at which a chip such as a central processing unit (CPU), or one core of a multi-core processor, is running, and is used as an indicator of the processor's speed. It is measured in clock cycles per second or its equivalent, the SI unit hertz (Hz). The clock rate of the first generation of computers was measured in hertz or kilohertz (kHz); the first personal computers (PCs), which arrived throughout the 1970s and 1980s, had clock rates measured in megahertz (MHz); and in the 21st century the speed of modern CPUs is commonly advertised in gigahertz (GHz). This metric is most useful when comparing processors within the same family, holding constant other features that may affect performance. Video card and CPU manufacturers commonly select their highest-performing units from a manufacturing batch and set their maximum clock rate higher, fetching a higher price.
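A short sketch can show both the unit conversion and why clock rate alone is a weak basis for comparing different processor families. It uses the textbook relation time = instructions × cycles per instruction ÷ clock rate. The two processors and their cycles-per-instruction figures are hypothetical.

```python
def cycle_time_ns(clock_hz: float) -> float:
    """Duration of one clock cycle in nanoseconds."""
    return 1e9 / clock_hz


def execution_time_s(instructions: float, cycles_per_instruction: float,
                     clock_hz: float) -> float:
    """Run time = instruction count * CPI / clock rate."""
    return instructions * cycles_per_instruction / clock_hz


# Hypothetical processors: A has the higher clock, B needs fewer cycles
# per instruction on this (imaginary) workload.
time_a = execution_time_s(1e9, 1.5, 3.0e9)
time_b = execution_time_s(1e9, 1.0, 2.5e9)

print(f"3 GHz cycle time: {cycle_time_ns(3.0e9):.3f} ns")
print(f"A: {time_a:.2f} s, B: {time_b:.2f} s")
```

Under these assumed figures the processor with the lower clock rate finishes first, which is why the metric is most meaningful when other design features are held constant.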

Power management is a feature of some electrical appliances, especially copiers, computers, GPUs and computer peripherals such as monitors and printers, that turns off the power or switches the system to a low-power state when inactive. In computing this is known as PC power management and is built around a standard called ACPI. This supersedes APM. All recent (consumer) computers have ACPI support.

Simultaneous multithreading (SMT) is a technique for improving the overall efficiency of superscalar CPUs with hardware multithreading. SMT permits multiple independent threads of execution to better utilize the resources provided by modern processor architectures.

Explicitly parallel instruction computing (EPIC) is a term coined in 1997 by the HP–Intel alliance to describe a computing paradigm that researchers had been investigating since the early 1980s. This paradigm is also called Independence architectures. It was the basis for Intel and HP development of the Intel Itanium architecture, and HP later asserted that "EPIC" was merely an old term for the Itanium architecture. EPIC permits microprocessors to execute software instructions in parallel by using the compiler, rather than complex on-die circuitry, to control parallel instruction execution. This was intended to allow simple performance scaling without resorting to higher clock frequencies.

In computing, hardware acceleration is the use of computer hardware specially made to perform some functions more efficiently than is possible in software running on a general-purpose CPU. Any transformation of data or routine that can be computed can be calculated purely in software running on a generic CPU, purely in custom-made hardware, or in some mix of both. An operation can be computed faster in application-specific hardware designed or programmed to compute that operation than when it is specified in software and performed on a general-purpose computer processor. Each approach has advantages and disadvantages. The implementation of computing tasks in hardware to decrease latency and increase throughput is known as hardware acceleration.

A multi-core processor is a single computing component with two or more independent processing units called cores, which read and execute program instructions. The instructions are ordinary CPU instructions but the single processor can run multiple instructions on separate cores at the same time, increasing overall speed for programs amenable to parallel computing. Manufacturers typically integrate the cores onto a single integrated circuit die or onto multiple dies in a single chip package. The microprocessors currently used in almost all personal computers are multi-core.
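How much a program gains from additional cores depends on how much of it can run in parallel. One common way to estimate this is Amdahl's law, which is not mentioned in the text above and is used here only as an idealized model: it ignores synchronization, memory contention, and scheduling overhead. The function name and sample values are illustrative.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Idealized speedup on `cores` cores when `parallel_fraction`
    of the run time (0..1) can be spread evenly across them."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


for cores in (1, 2, 4, 8):
    print(cores, round(amdahl_speedup(0.9, cores), 2))
```

Even with 90% of the work parallelizable, the serial remainder caps the speedup at 10× in this model, no matter how many cores are added.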

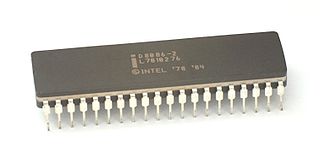

The history of general-purpose CPUs is a continuation of the earlier history of computing hardware.

Explicit data graph execution, or EDGE, is a type of instruction set architecture (ISA) which intends to improve computing performance compared to common processors like the Intel x86 line. EDGE combines many individual instructions into a larger group known as a "hyperblock". Hyperblocks are designed to be able to easily run in parallel.

TRIPS was a microprocessor architecture designed by a team at the University of Texas at Austin in conjunction with IBM, Intel, and Sun Microsystems. TRIPS uses an instruction set architecture designed to be easily broken down into large groups of instructions (graphs) that can be run on independent processing elements. The design collects related data into the graphs, attempting to avoid expensive data reads and writes and keeping the data in high-speed memory close to the processing elements. The prototype TRIPS processor contains 16 such elements. The TRIPS team hoped to reach 1 TFLOPS on a single processor; papers on the design were published from 2003 to 2006.

Stream Processors, Inc was a Silicon Valley-based fabless semiconductor company specializing in the design and manufacture of high-performance digital signal processors for applications including video surveillance, multi-function printers and video conferencing. The company ceased operations in 2009.

In computing, performance per watt is a measure of the energy efficiency of a particular computer architecture or computer hardware. Literally, it measures the rate of computation that can be delivered by a computer for every watt of power consumed. This rate is typically measured by performance on the LINPACK benchmark when trying to compare between computing systems.
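The calculation itself is a simple ratio. The sketch below expresses it in GFLOPS per watt. The two systems and their figures are invented for illustration and are not taken from any benchmark list.

```python
def gflops_per_watt(flops: float, power_watts: float) -> float:
    """Sustained floating-point rate divided by power draw, in GFLOPS/W."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return flops / power_watts / 1e9


# Hypothetical systems: A is faster overall, B is more efficient.
system_a = gflops_per_watt(100e12, 50e3)  # 100 TFLOPS at 50 kW
system_b = gflops_per_watt(60e12, 20e3)   # 60 TFLOPS at 20 kW
print(f"A: {system_a:.1f} GFLOPS/W, B: {system_b:.1f} GFLOPS/W")
```

With these assumed numbers, the slower system delivers more computation per watt, showing that raw performance and energy efficiency can rank systems differently.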

Manycore processors are specialist multi-core processors designed for a high degree of parallel processing, containing a large number of simpler, independent processor cores. Manycore processors are used extensively in embedded computers and high-performance computing. As of November 2018, the world's third-fastest supercomputer, the Chinese Sunway TaihuLight, obtains its performance from 40,960 SW26010 manycore processors, each containing 256 compute cores.

Xeon Phi is a series of x86 manycore processors designed and made by Intel. It is intended for use in supercomputers, servers, and high-end workstations. Its architecture allows use of standard programming languages and APIs such as OpenMP.