In mathematics, the determinant is a scalar-valued function of the entries of a square matrix. The determinant of a matrix A is commonly denoted det(A), det A, or |A|. Its value characterizes some properties of the matrix and the linear map represented, on a given basis, by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the corresponding linear map is an isomorphism.

In mathematics, and more specifically in linear algebra, a linear map is a mapping between two vector spaces that preserves the operations of vector addition and scalar multiplication. The same names and the same definition are also used for the more general case of modules over a ring; see Module homomorphism.

In linear algebra, the outer product of two coordinate vectors is the matrix whose entries are all products of an element in the first vector with an element in the second vector. If the two coordinate vectors have dimensions n and m, then their outer product is an n × m matrix. More generally, given two tensors, their outer product is a tensor. The outer product of tensors is also referred to as their tensor product, and can be used to define the tensor algebra.

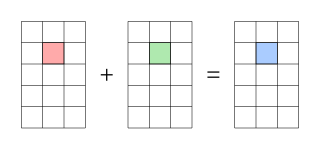

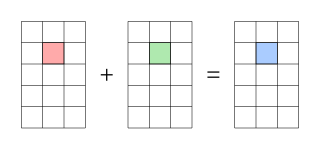

In mathematics, matrix addition is the operation of adding two matrices by adding the corresponding entries together.

In mathematics, particularly in linear algebra, matrix multiplication is a binary operation that produces a matrix from two matrices. For matrix multiplication, the number of columns in the first matrix must be equal to the number of rows in the second matrix. The resulting matrix, known as the matrix product, has the number of rows of the first and the number of columns of the second matrix. The product of matrices A and B is denoted as AB.

In linear algebra, the Cayley–Hamilton theorem states that every square matrix over a commutative ring satisfies its own characteristic equation.

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero; the term usually refers to square matrices. Elements of the main diagonal can either be zero or nonzero. An example of a 2×2 diagonal matrix is , while an example of a 3×3 diagonal matrix is. An identity matrix of any size, or any multiple of it is a diagonal matrix called a scalar matrix, for example, . In geometry, a diagonal matrix may be used as a scaling matrix, since matrix multiplication with it results in changing scale (size) and possibly also shape; only a scalar matrix results in uniform change in scale.

In linear algebra, an n-by-n square matrix A is called invertible if there exists an n-by-n square matrix B such thatwhere In denotes the n-by-n identity matrix and the multiplication used is ordinary matrix multiplication. If this is the case, then the matrix B is uniquely determined by A, and is called the (multiplicative) inverse of A, denoted by A−1. Matrix inversion is the process of finding the matrix which when multiplied by the original matrix gives the identity matrix.

In linear algebra, the characteristic polynomial of a square matrix is a polynomial which is invariant under matrix similarity and has the eigenvalues as roots. It has the determinant and the trace of the matrix among its coefficients. The characteristic polynomial of an endomorphism of a finite-dimensional vector space is the characteristic polynomial of the matrix of that endomorphism over any base. The characteristic equation, also known as the determinantal equation, is the equation obtained by equating the characteristic polynomial to zero.

In special relativity, a four-vector is an object with four components, which transform in a specific way under Lorentz transformations. Specifically, a four-vector is an element of a four-dimensional vector space considered as a representation space of the standard representation of the Lorentz group, the representation. It differs from a Euclidean vector in how its magnitude is determined. The transformations that preserve this magnitude are the Lorentz transformations, which include spatial rotations and boosts.

In mathematics, the spectral radius of a square matrix is the maximum of the absolute values of its eigenvalues. More generally, the spectral radius of a bounded linear operator is the supremum of the absolute values of the elements of its spectrum. The spectral radius is often denoted by ρ(·).

In mathematics, a block matrix or a partitioned matrix is a matrix that is interpreted as having been broken into sections called blocks or submatrices.

In linear algebra, the Frobenius companion matrix of the monic polynomial is the square matrix defined as

In linear algebra, a circulant matrix is a square matrix in which all rows are composed of the same elements and each row is rotated one element to the right relative to the preceding row. It is a particular kind of Toeplitz matrix.

In linear algebra, a generalized eigenvector of an matrix is a vector which satisfies certain criteria which are more relaxed than those for an (ordinary) eigenvector.

In mathematics, matrix calculus is a specialized notation for doing multivariable calculus, especially over spaces of matrices. It collects the various partial derivatives of a single function with respect to many variables, and/or of a multivariate function with respect to a single variable, into vectors and matrices that can be treated as single entities. This greatly simplifies operations such as finding the maximum or minimum of a multivariate function and solving systems of differential equations. The notation used here is commonly used in statistics and engineering, while the tensor index notation is preferred in physics.

In linear algebra, an eigenvector or characteristic vector is a vector that has its direction unchanged by a given linear transformation. More precisely, an eigenvector, , of a linear transformation, , is scaled by a constant factor, , when the linear transformation is applied to it: . It is often important to know these vectors in linear algebra. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor .

In the mathematical discipline of matrix theory, a Jordan matrix, named after Camille Jordan, is a block diagonal matrix over a ring R, where each block along the diagonal, called a Jordan block, has the following form:

In mathematics, specifically multilinear algebra, a dyadic or dyadic tensor is a second order tensor, written in a notation that fits in with vector algebra.

In mathematics, the Frobenius inner product is a binary operation that takes two matrices and returns a scalar. It is often denoted . The operation is a component-wise inner product of two matrices as though they are vectors, and satisfies the axioms for an inner product. The two matrices must have the same dimension—same number of rows and columns—but are not restricted to be square matrices.