A graphical user interface, or GUI, is a form of user interface that allows users to interact with electronic devices through graphical icons and visual indicators such as secondary notation. In many applications, GUIs are used instead of text-based UIs, which are based on typed command labels or text navigation. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces (CLIs), which require commands to be typed on a computer keyboard.

The history of the graphical user interface, understood as the use of graphic icons and a pointing device to control a computer, covers a five-decade span of incremental refinements, built on some constant core principles. Several vendors have created their own windowing systems based on independent code, but with basic elements in common that define the WIMP "window, icon, menu and pointing device" paradigm.

In computing, a pointing device gesture or mouse gesture is a way of combining pointing device or finger movements and clicks that the software recognizes as a specific computer event and responds to accordingly. They can be useful for people who have difficulties typing on a keyboard. For example, in a web browser, a user can navigate to the previously viewed page by pressing the right pointing device button, moving the pointing device briefly to the left, then releasing the button.

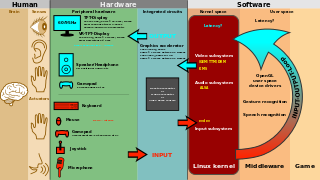

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve such disciplines as, ergonomics and psychology.

A touchpad or trackpad is a type of pointing device. Its largest component is a tactile sensor: an electronic device with a flat surface, that detects the motion and position of a user's fingers, and translates them to 2D motion, to control a pointer in a graphical user interface on a computer screen. Touchpads are common on laptop computers, contrasted with desktop computers, where mice are more prevalent. Trackpads are sometimes used on desktops, where desk space is scarce. Because trackpads can be made small, they can be found on personal digital assistants (PDAs) and some portable media players. Wireless touchpads are also available, as detached accessories.

In computing, an icon is a pictogram or ideogram displayed on a computer screen in order to help the user navigate a computer system. The icon itself is a quickly comprehensible symbol of a software tool, function, or a data file, accessible on the system and is more like a traffic sign than a detailed illustration of the actual entity it represents. It can serve as an electronic hyperlink or file shortcut to access the program or data. The user can activate an icon using a mouse, pointer, finger, or voice commands. Their placement on the screen, also in relation to other icons, may provide further information to the user about their usage. In activating an icon, the user can move directly into and out of the identified function without knowing anything further about the location or requirements of the file or code.

In computer science, human–computer interaction, and interaction design, direct manipulation is an approach to interfaces which involves continuous representation of objects of interest together with rapid, reversible, and incremental actions and feedback. As opposed to other interaction styles, for example, the command language, the intention of direct manipulation is to allow a user to manipulate objects presented to them, using actions that correspond at least loosely to manipulation of physical objects. An example of direct manipulation is resizing a graphical shape, such as a rectangle, by dragging its corners or edges with a mouse.

In computer graphical user interfaces, drag and drop is a pointing device gesture in which the user selects a virtual object by "grabbing" it and dragging it to a different location or onto another virtual object. In general, it can be used to invoke many kinds of actions, or create various types of associations between two abstract objects.

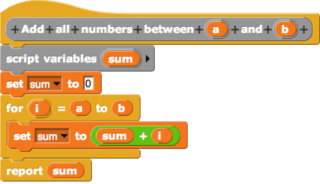

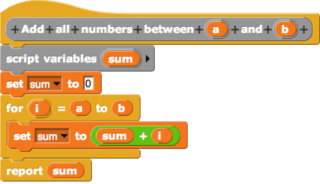

In computing, a visual programming language, also known as diagrammatic programming, graphical programming or block coding, is a programming language that lets users create programs by manipulating program elements graphically rather than by specifying them textually. A VPL allows programming with visual expressions, spatial arrangements of text and graphic symbols, used either as elements of syntax or secondary notation. For example, many VPLs are based on the idea of "boxes and arrows", where boxes or other screen objects are treated as entities, connected by arrows, lines or arcs which represent relations. VPLs are generally the basis of Low-code development platforms.

In human–computer interaction, WIMP stands for "windows, icons, menus, pointer", denoting a style of interaction using these elements of the user interface. Other expansions are sometimes used, such as substituting "mouse" and "mice" for menus, or "pull-down menu" and "pointing" for pointer.

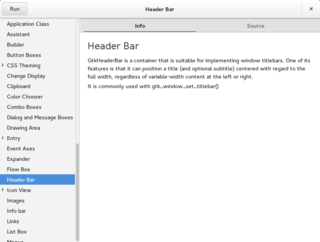

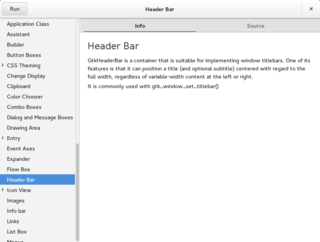

A graphical widget in a graphical user interface is an element of interaction, such as a button or a scroll bar. Controls are software components that a computer user interacts with through direct manipulation to read or edit information about an application. User interface libraries such as Windows Presentation Foundation, Qt, GTK, and Cocoa, contain a collection of controls and the logic to render these.

The following outline is provided as an overview of and topical guide to human–computer interaction:

User interface (UI) design or user interface engineering is the design of user interfaces for machines and software, such as computers, home appliances, mobile devices, and other electronic devices, with the focus on maximizing usability and the user experience. In computer or software design, user interface (UI) design primarily focuses on information architecture. It is the process of building interfaces that clearly communicate to the user what's important. UI design refers to graphical user interfaces and other forms of interface design. The goal of user interface design is to make the user's interaction as simple and efficient as possible, in terms of accomplishing user goals.

This is an alphabetical list of articles pertaining specifically to software engineering.

AgentSheets was one of the first modern block-based programming languages designed for children. The idea of AgentSheets was to overcome syntactic challenges found in common text-based programming languages by using drag-and-drop mechanisms conceptualizing commands such as conditions and actions as editable blocks that could be composed into programs. Ideas such as this would go on to be used in various other programming languages, such as Scratch. AgentSheets was used to create media-rich projects such as games and interactive simulations. The main building blocks of AgentSheets were interactive objects, or "agents," that were programmed through rules. Using conditions, agents could sense the user input including mouse, keyboard, speech recognition and web page content in more advanced versions. Using actions agents could move, produce sounds, open web pages, and compute formulas.

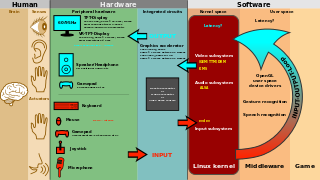

An interaction technique, user interface technique or input technique is a combination of hardware and software elements that provides a way for computer users to accomplish a single task. For example, one can go back to the previously visited page on a Web browser by either clicking a button, pressing a key, performing a mouse gesture or uttering a speech command. It is a widely used term in human-computer interaction. In particular, the term "new interaction technique" is frequently used to introduce a novel user interface design idea.

In computing, 3D interaction is a form of human-machine interaction where users are able to move and perform interaction in 3D space. Both human and machine process information where the physical position of elements in the 3D space is relevant.

In computing, a natural user interface (NUI) or natural interface is a user interface that is effectively invisible, and remains invisible as the user continuously learns increasingly complex interactions. The word "natural" is used because most computer interfaces use artificial control devices whose operation has to be learned. Examples include voice assistants, such as Alexa and Siri, touch and multitouch interactions on today's mobile phones and tablets, but also touch interfaces invisibly integrated into the textiles furnitures.

Hardware interface design (HID) is a cross-disciplinary design field that shapes the physical connection between people and technology in order to create new hardware interfaces that transform purely digital processes into analog methods of interaction. It employs a combination of filmmaking tools, software prototyping, and electronics breadboarding.