Related Research Articles

Computational linguistics is an interdisciplinary field concerned with the computational modelling of natural language, as well as the study of appropriate computational approaches to linguistic questions. In general, computational linguistics draws upon linguistics, computer science, artificial intelligence, mathematics, logic, philosophy, cognitive science, cognitive psychology, psycholinguistics, anthropology and neuroscience, among others.

Natural language processing (NLP) is an interdisciplinary subfield of computer science and information retrieval. It is primarily concerned with giving computers the ability to support and manipulate human language. It involves processing natural language datasets, such as text corpora or speech corpora, using either rule-based or probabilistic machine learning approaches. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. To this end, natural language processing often borrows ideas from theoretical linguistics. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves.

In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). There are numerous approaches to syntax that differ in their central assumptions and goals.

In linguistics and natural language processing, a corpus or text corpus is a dataset, consisting of natively digital and older, digitalized, language resources, either annotated or unannotated.

In the study of language, description or descriptive linguistics is the work of objectively analyzing and describing how language is actually used by a speech community.

In linguistics, transformational grammar (TG) or transformational-generative grammar (TGG) is part of the theory of generative grammar, especially of natural languages. It considers grammar to be a system of rules that generate exactly those combinations of words that form grammatical sentences in a given language and involves the use of defined operations to produce new sentences from existing ones.

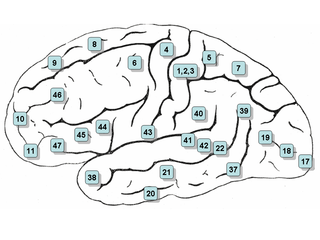

Neurolinguistics is the study of neural mechanisms in the human brain that control the comprehension, production, and acquisition of language. As an interdisciplinary field, neurolinguistics draws methods and theories from fields such as neuroscience, linguistics, cognitive science, communication disorders and neuropsychology. Researchers are drawn to the field from a variety of backgrounds, bringing along a variety of experimental techniques as well as widely varying theoretical perspectives. Much work in neurolinguistics is informed by models in psycholinguistics and theoretical linguistics, and is focused on investigating how the brain can implement the processes that theoretical and psycholinguistics propose are necessary in producing and comprehending language. Neurolinguists study the physiological mechanisms by which the brain processes information related to language, and evaluate linguistic and psycholinguistic theories, using aphasiology, brain imaging, electrophysiology, and computer modeling.

In linguistics, the topic, or theme, of a sentence is what is being talked about, and the comment is what is being said about the topic. This division into old vs. new content is called information structure. It is generally agreed that clauses are divided into topic vs. comment, but in certain cases the boundary between them depends on which specific grammatical theory is being used to analyze the sentence.

In corpus linguistics, part-of-speech tagging, also called grammatical tagging is the process of marking up a word in a text (corpus) as corresponding to a particular part of speech, based on both its definition and its context. A simplified form of this is commonly taught to school-age children, in the identification of words as nouns, verbs, adjectives, adverbs, etc.

Construction grammar is a family of theories within the field of cognitive linguistics which posit that constructions, or learned pairings of linguistic patterns with meanings, are the fundamental building blocks of human language. Constructions include words, morphemes, fixed expressions and idioms, and abstract grammatical rules such as the passive voice or the ditransitive. Any linguistic pattern is considered to be a construction as long as some aspect of its form or its meaning cannot be predicted from its component parts, or from other constructions that are recognized to exist. In construction grammar, every utterance is understood to be a combination of multiple different constructions, which together specify its precise meaning and form.

Syntactic Structures is an important work in linguistics by American linguist Noam Chomsky, originally published in 1957. A short monograph of about a hundred pages, it is recognized as one of the most significant and influential linguistic studies of the 20th century. It contains the now-famous sentence "Colorless green ideas sleep furiously", which Chomsky offered as an example of a grammatically correct sentence that has no discernible meaning, thus arguing for the independence of syntax from semantics.

A language model is a probabilistic model of a natural language. In 1980, the first significant statistical language model was proposed, and during the decade IBM performed ‘Shannon-style’ experiments, in which potential sources for language modeling improvement were identified by observing and analyzing the performance of human subjects in predicting or correcting text.

In linguistics, linguistic competence is the system of unconscious knowledge that one knows when they know a language. It is distinguished from linguistic performance, which includes all other factors that allow one to use one's language in practice.

Epistemic modality is a sub-type of linguistic modality that encompasses knowledge, belief, or credence in a proposition. Epistemic modality is exemplified by the English modals may, might, must. However, it occurs cross-linguistically, encoded in a wide variety of lexical items and grammatical structures. Epistemic modality has been studied from many perspectives within linguistics and philosophy. It is one of the most studied phenomena in formal semantics.

In linguistics, a treebank is a parsed text corpus that annotates syntactic or semantic sentence structure. The construction of parsed corpora in the early 1990s revolutionized computational linguistics, which benefitted from large-scale empirical data.

Statistical machine translation (SMT) was a machine translation approach, that superseded the previous, rule-based approach because it required explicit description of each and every linguistic rule, which was costly, and which often did not generalize to other languages. Since 2003, the statistical approach itself has been gradually superseded by the deep learning-based neural network approach.

In linguistics, grammaticality is determined by the conformity to language usage as derived by the grammar of a particular speech variety. The notion of grammaticality rose alongside the theory of generative grammar, the goal of which is to formulate rules that define well-formed, grammatical sentences. These rules of grammaticality also provide explanations of ill-formed, ungrammatical sentences.

A resumptive pronoun is a personal pronoun appearing in a relative clause, which restates the antecedent after a pause or interruption, as in This is the girli that whenever it rains shei cries.

Aspects of the Theory of Syntax is a book on linguistics written by American linguist Noam Chomsky, first published in 1965. In Aspects, Chomsky presented a deeper, more extensive reformulation of transformational generative grammar (TGG), a new kind of syntactic theory that he had introduced in the 1950s with the publication of his first book, Syntactic Structures. Aspects is widely considered to be the foundational document and a proper book-length articulation of Chomskyan theoretical framework of linguistics. It presented Chomsky's epistemological assumptions with a view to establishing linguistic theory-making as a formal discipline comparable to physical sciences, i.e. a domain of inquiry well-defined in its nature and scope. From a philosophical perspective, it directed mainstream linguistic research away from behaviorism, constructivism, empiricism and structuralism and towards mentalism, nativism, rationalism and generativism, respectively, taking as its main object of study the abstract, inner workings of the human mind related to language acquisition and production.

An acceptability judgment task, also called acceptability rating task, is a common method in empirical linguistics to gather information about the internal grammar of speakers of a language.

References

- ↑ Warstadt, Alex; Singh, Amanpreet; Bowman, Samuel R. (2019). "Neural Network Acceptability Judgments". Transactions of the Association for Computational Linguistics. 7 (4): 625–641. arXiv: 1805.12471 . doi:10.1162/tacl_a_00290.