Related Research Articles

Natural language processing (NLP) is an interdisciplinary subfield of computer science and artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Typically data is collected in text corpora, using either rule-based, statistical or neural-based approaches in machine learning and deep learning.

Perl is a high-level, general-purpose, interpreted, dynamic programming language. Though Perl is not officially an acronym, there are various backronyms in use, including "Practical Extraction and Reporting Language".

Information extraction (IE) is the task of automatically extracting structured information from unstructured and/or semi-structured machine-readable documents and other electronically represented sources. Typically, this involves processing human language texts by means of natural language processing (NLP). Recent activities in multimedia document processing like automatic annotation and content extraction out of images/audio/video/documents could be seen as information extraction.

Doxygen is a documentation generator and static analysis tool for software source trees. When used as a documentation generator, Doxygen extracts information from specially-formatted comments within the code. When used for analysis, Doxygen uses its parse tree to generate diagrams and charts of the code structure. Doxygen can cross reference documentation and code, so that the reader of a document can easily refer to the actual code.

Shallow parsing is an analysis of a sentence which first identifies constituent parts of sentences and then links them to higher order units that have discrete grammatical meanings. While the most elementary chunking algorithms simply link constituent parts on the basis of elementary search patterns, approaches that use machine learning techniques can take contextual information into account and thus compose chunks in such a way that they better reflect the semantic relations between the basic constituents. That is, these more advanced methods get around the problem that combinations of elementary constituents can have different higher level meanings depending on the context of the sentence.

The Natural Language Toolkit, or more commonly NLTK, is a suite of libraries and programs for symbolic and statistical natural language processing (NLP) for English written in the Python programming language. It supports classification, tokenization, stemming, tagging, parsing, and semantic reasoning functionalities. It was developed by Steven Bird and Edward Loper in the Department of Computer and Information Science at the University of Pennsylvania. NLTK includes graphical demonstrations and sample data. It is accompanied by a book that explains the underlying concepts behind the language processing tasks supported by the toolkit, plus a cookbook.

Catalyst is an open-source web application framework written in Perl. It closely follows the model–view–controller (MVC) architecture and supports a number of experimental web patterns. It is written using Moose, a modern object system for Perl. Its design is heavily inspired by frameworks such as Ruby on Rails, Maypole, and Spring.

Text segmentation is the process of dividing written text into meaningful units, such as words, sentences, or topics. The term applies both to mental processes used by humans when reading text, and to artificial processes implemented in computers, which are the subject of natural language processing. The problem is non-trivial, because while some written languages have explicit word boundary markers, such as the word spaces of written English and the distinctive initial, medial and final letter shapes of Arabic, such signals are sometimes ambiguous and not present in all written languages.

Natural-language programming (NLP) is an ontology-assisted way of programming in terms of natural-language sentences, e.g. English. A structured document with Content, sections and subsections for explanations of sentences forms a NLP document, which is actually a computer program. Natural language programming is not to be mixed up with natural language interfacing or voice control where a program is first written and then communicated with through natural language using an interface added on. In NLP the functionality of a program is organised only for the definition of the meaning of sentences. For instance, NLP can be used to represent all the knowledge of an autonomous robot. Having done so, its tasks can be scripted by its users so that the robot can execute them autonomously while keeping to prescribed rules of behaviour as determined by the robot's user. Such robots are called transparent robots as their reasoning is transparent to users and this develops trust in robots. Natural language use and natural-language user interfaces include Inform 7, a natural programming language for making interactive fiction, Shakespeare, an esoteric natural programming language in the style of the plays of William Shakespeare, and Wolfram Alpha, a computational knowledge engine, using natural-language input. Some methods for program synthesis are based on natural-language programming.

MontyLingua is a popular natural language processing toolkit. It is a suite of libraries and programs for symbolic and statistical natural language processing (NLP) for both the Python and Java programming languages. It is enriched with common sense knowledge about the everyday world from Open Mind Common Sense. From English sentences, it extracts subject/verb/object tuples, extracts adjectives, noun phrases and verb phrases, and extracts people's names, places, events, dates and times, and other semantic information. It does not require training. It was written by Hugo Liu at MIT in 2003.

Because it is enriched with common sense knowledge it can avoid many mistakes. e.g.:

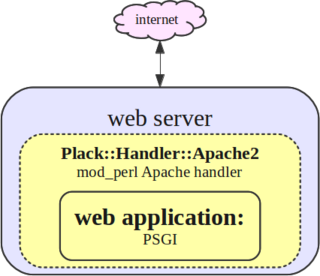

Plack is a Perl web application programming framework inspired by Rack for Ruby and WSGI for Python, and it is the project behind the PSGI specification used by other frameworks such as Catalyst and Dancer. Plack allows for testing of Perl web applications without a live web server.

Truecasing, also called capitalization recovery, capitalization correction, or case restoration, is the problem in natural language processing (NLP) of determining the proper capitalization of words where such information is unavailable. This commonly comes up due to the standard practice of automatically capitalizing the first word of a sentence. It can also arise in badly cased or noncased text.

The Apache OpenNLP library is a machine learning based toolkit for the processing of natural language text. It supports the most common NLP tasks, such as language detection, tokenization, sentence segmentation, part-of-speech tagging, named entity extraction, chunking, parsing and coreference resolution. These tasks are usually required to build more advanced text processing services.

Apache cTAKES: clinical Text Analysis and Knowledge Extraction System is an open-source Natural Language Processing (NLP) system that extracts clinical information from electronic health record unstructured text. It processes clinical notes, identifying types of clinical named entities — drugs, diseases/disorders, signs/symptoms, anatomical sites and procedures. Each named entity has attributes for the text span, the ontology mapping code, context, and negated/not negated.

The following outline is provided as an overview of and topical guide to the Perl programming language:

The following outline is provided as an overview of and topical guide to natural-language processing:

In computer science and visualization, a canvas is a container that holds various drawing elements. It takes its name from the canvas used in visual arts. It is sometimes called a scene graph because it arranges the logical representation of a user interface or graphical scene. Some implementations also define the spatial representation and allow the user to interact with the elements via a graphical user interface.

Spark NLP is an open-source text processing library for advanced natural language processing for the Python, Java and Scala programming languages. The library is built on top of Apache Spark and its Spark ML library.

References

- ↑ E. Stamatatos; N. Fakotakis & G. Kokkinakis. "Automatic extraction of rules for sentence boundary disambiguation". Proceedings of the Workshop on Machine Learning in Human Language Technology. University of Patras. pp. 88–92.

- ↑ O'Neil, John. "Doing Things with Words, Part Two: Sentence Boundary Detection". Archived from the original on 2009-02-21. Retrieved 2009-01-03.

- ↑ Reynar, JC; Ratnaparkhi, A. "A Maximum Entropy Approach to Identifying Sentence Boundaries" (PDF). Retrieved 2009-01-03.

- 1 2 "SATZ: An Adaptive Sentence Boundary Detector". Archived from the original on 2007-09-22.

- ↑ "SentParBreaker Web page". Archived from the original on 2007-11-12.

- ↑ "Lingua-EN-Sentence-0.25 - Module for splitting text into sentences. - metacpan.org". metacpan.org.

- ↑ "Text::Sentence - module for splitting text into sentences - metacpan.org". metacpan.org.

- ↑ "Apache OpenNLP". opennlp.apache.org.

- ↑ "Welcome | FreeLing Home Page".

- ↑ "NLTK :: Natural Language Toolkit". www.nltk.org.

- ↑ "Software - The Stanford Natural Language Processing Group". nlp.stanford.edu.

- ↑ "Google Code Archive - Long-term storage for Google Code Project Hosting". code.google.com.

- ↑ "CogCompNLP". January 2, 2024 – via GitHub.