Neurolinguistics is the study of neural mechanisms in the human brain that control the comprehension, production, and acquisition of language. As an interdisciplinary field, neurolinguistics draws methods and theories from fields such as neuroscience, linguistics, cognitive science, communication disorders, and neuropsychology. Researchers are drawn to the field from a variety of backgrounds, bringing along a variety of experimental techniques as well as widely varying theoretical perspectives. Much work in neurolinguistics is informed by models in psycholinguistics and theoretical linguistics, and is focused on investigating how the brain can implement the processes that theoretical linguistics and psycholinguistics propose are necessary for producing and comprehending language. Neurolinguists study the physiological mechanisms by which the brain processes information related to language, and evaluate linguistic and psycholinguistic theories, using aphasiology, brain imaging, electrophysiology, and computer modeling.

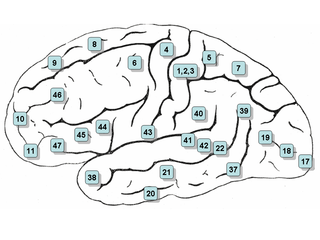

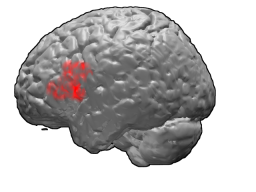

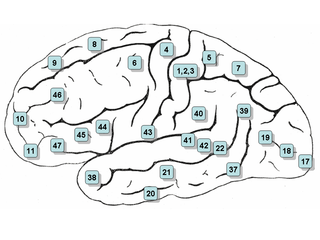

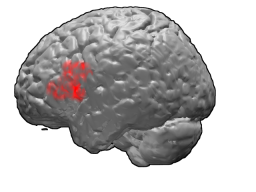

Brodmann area 45 (BA45) is part of the frontal cortex in the human brain. It is situated on the lateral surface, inferior to BA9 and adjacent to BA46.

The N400 is a component of time-locked EEG signals known as event-related potentials (ERP). It is a negative-going deflection that peaks around 400 milliseconds after stimulus onset, although it can extend from 250 to 500 ms, and is typically maximal over centro-parietal electrode sites. The N400 is part of the normal brain response to words and other meaningful stimuli, including visual and auditory words, sign language signs, pictures, faces, environmental sounds, and smells.
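
As an illustration of what "time-locked" means in practice, the sketch below averages several stimulus-aligned EEG epochs into an ERP and then looks for the most negative point in the 250 to 500 ms window. It is a minimal sketch, not an analysis pipeline: the amplitudes, the sampling times, and the function names `average_epochs` and `peak_negative` are all hypothetical and chosen only for this example.

```python
def average_epochs(epochs):
    """Average stimulus-locked epochs sample by sample to obtain an ERP."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]


def peak_negative(erp, times_ms, start_ms, end_ms):
    """Return (latency_ms, amplitude) of the most negative sample in a window."""
    window = [(v, t) for v, t in zip(erp, times_ms) if start_ms <= t <= end_ms]
    amplitude, latency = min(window)
    return latency, amplitude


# Hypothetical data: three epochs from one electrode, in microvolts,
# sampled every 100 ms relative to stimulus onset (made-up values).
times_ms = [0, 100, 200, 300, 400, 500, 600]
epochs = [
    [0.0, 1.0, -1.0, -3.0, -5.0, -2.0, 0.0],
    [0.0, 2.0, 0.0, -2.0, -6.0, -3.0, 1.0],
    [0.0, 0.0, -2.0, -4.0, -4.0, -1.0, -1.0],
]

erp = average_epochs(epochs)
latency, amplitude = peak_negative(erp, times_ms, 250, 500)
print(latency, amplitude)
```

Real recordings involve many more trials, electrodes, and preprocessing steps (filtering, baseline correction, artifact rejection), none of which are shown here.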

The lexical decision task (LDT) is a procedure used in many psychology and psycholinguistics experiments. The basic procedure involves measuring how quickly people classify stimuli as words or nonwords.
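
The measurement described above can be sketched as a small scoring routine. This is only an illustration under assumed conventions: the trial tuples, the stimuli, the reaction times, and the function name `summarize_ldt` are hypothetical, and the choice to average reaction times over correct trials only is one possible convention rather than a fixed rule of the task.

```python
from statistics import mean

# Hypothetical trial records:
# (stimulus, is_real_word, participant_said_word, reaction_time_ms)
trials = [
    ("table", True, True, 520),
    ("blick", False, False, 640),
    ("nurse", True, True, 500),
    ("flirp", False, True, 710),  # error: nonword classified as a word
    ("doctor", True, True, 540),
    ("plont", False, False, 660),
]


def summarize_ldt(trials):
    """Return accuracy and mean correct reaction time per stimulus type."""
    summary = {}
    for label, is_word in (("word", True), ("nonword", False)):
        subset = [t for t in trials if t[1] == is_word]
        # A response is correct when the classification matches the stimulus type.
        correct = [t for t in subset if t[2] == t[1]]
        summary[label] = {
            "accuracy": len(correct) / len(subset),
            "mean_rt_ms": mean(t[3] for t in correct),
        }
    return summary


summary = summarize_ldt(trials)
print(summary)
```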

Sentence processing takes place whenever a reader or listener processes a language utterance, either in isolation or in the context of a conversation or a text. Many studies of the human language comprehension process have focused on reading of single utterances (sentences) without context. Extensive research has shown that language comprehension is affected by context preceding a given utterance as well as many other factors.

The P600 is an event-related potential (ERP) component, or peak in electrical brain activity measured by electroencephalography (EEG). It is a language-relevant ERP component and is thought to be elicited by hearing or reading grammatical errors and other syntactic anomalies. Therefore, it is a common topic of study in neurolinguistic experiments investigating sentence processing in the human brain.

The early left anterior negativity is an event-related potential in electroencephalography (EEG), or component of brain activity that occurs in response to a certain kind of stimulus. It is characterized by a negative-going wave that peaks around 200 milliseconds or less after the onset of a stimulus, and most often occurs in response to linguistic stimuli that violate word-category or phrase structure rules. As such, it is frequently a topic of study in neurolinguistics experiments, specifically in areas such as sentence processing. While it is frequently used in language research, there is no evidence yet that it is necessarily a language-specific phenomenon.

Priming is the idea that exposure to one stimulus may influence a response to a subsequent stimulus, without conscious guidance or intention. The priming effect refers to the positive or negative effect of a rapidly presented stimulus on the processing of a second stimulus that appears shortly after. Generally speaking, a priming effect depends on some positive or negative relationship between the priming stimulus and the target stimulus. For example, the word nurse might be recognized more quickly following the word doctor than following the word bread. Priming can be perceptual, associative, repetitive, positive, negative, affective, semantic, or conceptual. Priming effects involve word recognition, semantic processing, attention, unconscious processing, and many other issues, and are related to differences in various writing systems. Research has yet to firmly establish the duration of priming effects, although their onset can be almost instantaneous.
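
The doctor/nurse example can be expressed as a simple difference in recognition times. The sketch below assumes that priming is quantified as the mean reaction time after unrelated primes minus the mean reaction time after related primes; the reaction times and the function name `priming_effect` are hypothetical.

```python
from statistics import mean


def priming_effect(related_rts, unrelated_rts):
    """Mean RT after unrelated primes minus mean RT after related primes.

    A positive value indicates facilitation (faster responses after a
    related prime); a negative value indicates inhibition.
    """
    return mean(unrelated_rts) - mean(related_rts)


# Hypothetical reaction times (ms) for recognizing the target "nurse".
related = [510, 495, 525]    # preceded by "doctor"
unrelated = [560, 575, 545]  # preceded by "bread"

effect = priming_effect(related, unrelated)
print(effect)
```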

In neuroscience, the lateralized readiness potential (LRP) is an event-related brain potential, or increase in electrical activity at the surface of the brain, that is thought to reflect the preparation of motor activity on a certain side of the body; in other words, it is a spike in the electrical activity of the brain that happens when a person gets ready to move one arm, leg, or foot. It is a special form of the Bereitschaftspotential (readiness potential). LRPs are recorded using electroencephalography (EEG) and have numerous applications in cognitive neuroscience.

Difference due to memory (Dm) indexes differences in neural activity during the study phase of an experiment for items that subsequently are remembered compared to items that are later forgotten. It is mainly discussed as an event-related potential (ERP) effect that appears in studies employing a subsequent memory paradigm, in which ERPs are recorded when a participant is studying a list of materials and trials are sorted as a function of whether they go on to be remembered or not in the test phase. For meaningful study material, such as words or line drawings, items that are subsequently remembered typically elicit a more positive waveform during the study phase. This difference typically occurs in the range of 400–800 milliseconds (ms) and is generally greatest over centro-parietal recording sites, although these characteristics are modulated by many factors.
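
The sorting step of the subsequent memory paradigm can be sketched as follows: study-phase epochs are split by whether the item was later remembered, each group is averaged, and the mean amplitudes in the 400 to 800 ms window are compared. This is a minimal sketch under stated assumptions: the amplitudes, the sampling times, and the function names are hypothetical, and a single electrode stands in for a full recording.

```python
def average_epochs(epochs):
    """Average epochs sample by sample to obtain an ERP."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]


def mean_amplitude(erp, times_ms, start_ms, end_ms):
    """Mean amplitude of an ERP within a time window (inclusive)."""
    values = [v for v, t in zip(erp, times_ms) if start_ms <= t <= end_ms]
    return sum(values) / len(values)


def dm_effect(study_trials, times_ms, window=(400, 800)):
    """Remembered-minus-forgotten mean amplitude in the given window."""
    remembered = [epoch for epoch, later_remembered in study_trials if later_remembered]
    forgotten = [epoch for epoch, later_remembered in study_trials if not later_remembered]
    return (
        mean_amplitude(average_epochs(remembered), times_ms, *window)
        - mean_amplitude(average_epochs(forgotten), times_ms, *window)
    )


# Hypothetical study-phase epochs (microvolts), each paired with whether
# the item was remembered in the later test phase (made-up values).
times_ms = [200, 400, 600, 800, 1000]
study_trials = [
    ([0.0, 2.0, 4.0, 3.0, 1.0], True),
    ([0.0, 4.0, 6.0, 3.0, 1.0], True),
    ([0.0, 1.0, 2.0, 1.0, 0.0], False),
    ([0.0, 1.0, 2.0, 3.0, 0.0], False),
]

dm = dm_effect(study_trials, times_ms)
print(dm)
```

A positive result corresponds to the more positive study-phase waveform for subsequently remembered items described above.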

In neuroscience, the visual P200 or P2 is a waveform component or feature of the event-related potential (ERP) measured at the human scalp. Like other potential changes measurable from the scalp, this effect is believed to reflect the post-synaptic activity of a specific neural process. The P2 component, also known as the P200, is so named because it is a positive-going electrical potential that peaks at about 200 milliseconds after the onset of some external stimulus. This component is often distributed around the centro-frontal and the parieto-occipital areas of the scalp. It is generally found to be maximal around the vertex of the scalp; however, some topographical differences have been noted in ERP studies of the P2 under different experimental conditions.

The N200, or N2, is an event-related potential (ERP) component. An ERP can be monitored using a non-invasive electroencephalography (EEG) cap that is fitted over the scalp of human subjects. An EEG cap allows researchers and clinicians to monitor the minute electrical activity that reaches the surface of the scalp from post-synaptic potentials in neurons, which fluctuate in relation to cognitive processing. EEG provides millisecond-level temporal resolution and is therefore known as one of the most direct measures of covert mental operations in the brain. The N200 in particular is a negative-going wave that peaks 200–350 ms post-stimulus and is found primarily over anterior scalp sites. Past research focused on the N200 as a mismatch detector, but it has also been found to reflect executive cognitive control functions, and has recently been used in the study of language.

Change deafness is a perceptual phenomenon that occurs when, under certain circumstances, a physical change in an auditory stimulus goes unnoticed by the listener. There is uncertainty regarding the mechanisms by which changes to auditory stimuli go undetected, though scientific research has been done to determine the levels of processing at which these consciously undetected auditory changes are actually encoded. An understanding of the mechanisms underlying change deafness could offer insight on issues such as the completeness of our representation of the auditory environment, the limitations of the auditory perceptual system, and the relationship between the auditory system and memory. The phenomenon of change deafness is thought to be related to the interactions between high and low level processes that produce conscious experiences of auditory soundscapes.

Bilingual interactive activation plus (BIA+) is a model for understanding the process of bilingual language comprehension and consists of two interactive subsystems: the word identification subsystem and the task/decision subsystem. It is the successor of the Bilingual Interactive Activation (BIA) model, which was updated in 2002 to include phonological and semantic lexical representations, revise the role of language nodes, and specify the purely bottom-up nature of bilingual language processing.

Linguistic prediction is a phenomenon in psycholinguistics occurring whenever information about a word or other linguistic unit is activated before that unit is actually encountered. Evidence from eye tracking, event-related potentials, and other experimental methods indicates that in addition to integrating each subsequent word into the context formed by previously encountered words, language users may, under certain conditions, try to predict upcoming words. In particular, prediction seems to occur regularly when the context of a sentence greatly limits the possible words that have not yet been revealed. For instance, a person listening to a sentence like "In the summer it is hot, and in the winter it is..." would be highly likely to predict the sentence completion "cold" in advance of actually hearing it. A form of prediction is also thought to occur in some types of lexical priming, a phenomenon whereby a word becomes easier to process if it is preceded by a related word. Linguistic prediction is an active area of research in psycholinguistics and cognitive neuroscience.

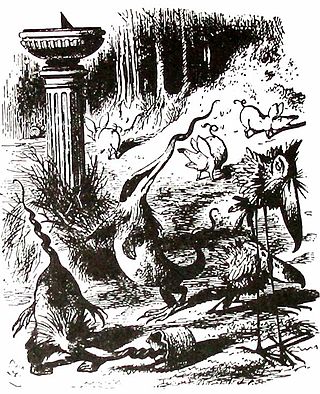

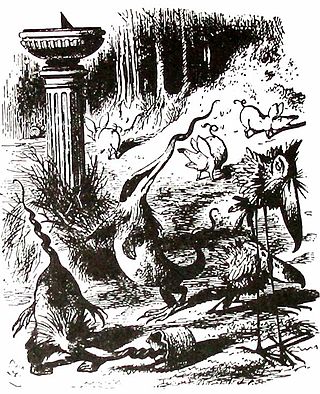

A Jabberwocky sentence is a type of sentence of interest in neurolinguistics. Jabberwocky sentences take their name from the language of Lewis Carroll's well-known poem "Jabberwocky". In the poem, Carroll uses correct English grammar and syntax, but many of the words are made up and merely suggest meaning. A Jabberwocky sentence is therefore a sentence which uses correct grammar and syntax but contains nonsense words, rendering it semantically meaningless.

Embodied cognition occurs when an organism's sensorimotor capacities, body, and environment play an important role in thinking. The way in which a person's body and surroundings interact also allows specific brain functions to develop and, in the future, to support action. This means that not only does the mind influence the body's movements, but the body also influences the abilities of the mind, an idea also termed the bi-directional hypothesis. Three generalizations are assumed to hold for embodied cognition. A person's motor system is activated when they (1) observe manipulable objects, (2) process action verbs, and (3) observe another individual's movements.

Bilingual lexical access is an area of psycholinguistics that studies the activation or retrieval process of the mental lexicon for bilingual people.

The bi-directional hypothesis of language and action proposes that the sensorimotor and language comprehension areas of the brain exert reciprocal influence over one another. This hypothesis argues that areas of the brain involved in movement and sensation, as well as movement itself, influence cognitive processes such as language comprehension. In addition, the reverse effect is argued, where it is proposed that language comprehension influences movement and sensation. Proponents of the bi-directional hypothesis of language and action conduct and interpret linguistic, cognitive, and movement studies within the framework of embodied cognition and embodied language processing. Embodied language developed from embodied cognition, and proposes that sensorimotor systems are not only involved in the comprehension of language, but that they are necessary for understanding the semantic meaning of words.

Seana Coulson is a cognitive scientist known for her research on the neurobiology of language and studies of how meaning is constructed in human language, including experimental pragmatics, concepts, semantics, and metaphors. She is a professor in the Cognitive Science department at University of California, San Diego, where her Brain and Cognition Laboratory focuses on the cognitive neuroscience of language and reasoning.