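In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right),$$

where $\mu$ is the mean and $\sigma$ is the standard deviation of the distribution.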
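As a minimal sketch, the normal density with mean μ and standard deviation σ can be evaluated directly from its closed form. The function name `normal_pdf` and its default arguments are illustrative choices, not part of the source.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    # Standardize, then apply exp(-z^2 / 2) / (sigma * sqrt(2 * pi)).
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))
```

The density peaks at `x == mu` and is symmetric about it; integrating it numerically over a wide interval should give a value close to 1.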

In probability theory and statistics, the multivariate normal distribution, multivariate Gaussian distribution, or joint normal distribution is a generalization of the one-dimensional (univariate) normal distribution to higher dimensions. One definition is that a random vector is said to be k-variate normally distributed if every linear combination of its k components has a univariate normal distribution. Its importance derives mainly from the multivariate central limit theorem. The multivariate normal distribution is often used to describe, at least approximately, any set of (possibly) correlated real-valued random variables each of which clusters around a mean value.
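For the two-dimensional case, correlated normal pairs can be built from two independent standard normal draws. The sketch below assumes a bivariate normal parameterized by means, standard deviations, and a correlation `rho`; the function names are hypothetical, and a general k-variate sampler would typically use a matrix factorization of the covariance matrix instead.

```python
import math
import random


def sample_bivariate_normal(n, mu=(0.0, 0.0), sigma=(1.0, 1.0), rho=0.0, seed=0):
    """Draw n (x, y) pairs from a bivariate normal with correlation rho."""
    if not -1.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [-1, 1]")
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        x = mu[0] + sigma[0] * z1
        # rho*z1 + sqrt(1 - rho^2)*z2 has unit variance and correlation rho with z1.
        y = mu[1] + sigma[1] * (rho * z1 + math.sqrt(1.0 - rho * rho) * z2)
        pairs.append((x, y))
    return pairs


def sample_correlation(pairs):
    """Sample Pearson correlation of a list of (x, y) pairs."""
    n = len(pairs)
    mx = sum(p[0] for p in pairs) / n
    my = sum(p[1] for p in pairs) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in pairs)
    sxx = sum((p[0] - mx) ** 2 for p in pairs)
    syy = sum((p[1] - my) ** 2 for p in pairs)
    return sxy / math.sqrt(sxx * syy)
```

With a large sample, `sample_correlation(sample_bivariate_normal(n, rho=0.8))` should land near 0.8.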

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. Although in the broadest sense "correlation" may indicate any type of association, in statistics it usually refers to the degree to which a pair of variables are linearly related. Familiar examples of dependent phenomena include the correlation between the height of parents and their offspring, and the correlation between the price of a good and the quantity consumers are willing to purchase, as depicted in the so-called demand curve.

In probability theory and statistics, a covariance matrix is a square matrix giving the covariance between each pair of elements of a given random vector. Any covariance matrix is symmetric and positive semi-definite and its main diagonal contains variances.
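The properties stated above (symmetry, variances on the diagonal) can be seen in a small sample estimate. This is a sketch in plain Python that assumes observations arrive as rows of equal length and uses the unbiased `n - 1` divisor; the name `covariance_matrix` is illustrative.

```python
def covariance_matrix(rows):
    """Sample covariance matrix of observations given as equal-length rows."""
    n = len(rows)
    if n < 2:
        raise ValueError("need at least two observations")
    k = len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    cov = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(i, k):
            s = sum((r[i] - means[i]) * (r[j] - means[j]) for r in rows)
            # Fill both triangles at once, so the result is symmetric by construction.
            cov[i][j] = cov[j][i] = s / (n - 1)
    return cov
```

For the rows `(1, 2), (2, 4), (3, 6)` the diagonal entries are the two sample variances and the off-diagonal entry is their covariance.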

In 1851, George Gabriel Stokes derived an expression, now known as Stokes' law, for the frictional force – also called drag force – exerted on spherical objects with very small Reynolds numbers in a viscous fluid. Stokes' law is derived by solving the Stokes flow limit for small Reynolds numbers of the Navier–Stokes equations.
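Stokes' law is commonly written as F = 6πμRv, where μ is the dynamic viscosity, R the sphere radius, and v the flow velocity relative to the sphere. The sketch below assumes SI units and the small-Reynolds-number regime described above; the function and parameter names are illustrative.

```python
import math


def stokes_drag(viscosity, radius, velocity):
    """Magnitude of the Stokes drag force, F = 6 * pi * mu * R * v.

    Assumes SI units: viscosity in Pa*s, radius in m, velocity in m/s, force in N.
    """
    return 6.0 * math.pi * viscosity * radius * velocity
```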

In statistics, the Pearson correlation coefficient, also known as Pearson's r, the Pearson product-moment correlation coefficient (PPMCC), the bivariate correlation, or colloquially simply as the correlation coefficient, is a measure of linear correlation between two sets of data. It is the ratio between the covariance of two variables and the product of their standard deviations; thus, it is essentially a normalized measurement of the covariance, such that the result always has a value between −1 and 1. As with covariance itself, the measure can only reflect a linear correlation of variables, and ignores many other types of relationships or correlations. As a simple example, one would expect the age and height of a sample of teenagers from a high school to have a Pearson correlation coefficient significantly greater than 0, but less than 1.
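The ratio described above, covariance divided by the product of the standard deviations, can be computed in a few lines. This is a minimal sketch for two equal-length sequences; `pearson_r` is a hypothetical helper name.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation: covariance divided by the product of standard deviations."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs) / (n - 1))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys) / (n - 1))
    if sx == 0.0 or sy == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)
```

Perfectly linear data give a value of 1 or −1 (up to rounding), while noisier relationships fall strictly between.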

In statistics, the Wishart distribution is a generalization to multiple dimensions of the gamma distribution. It is named in honor of John Wishart, who first formulated the distribution in 1928.

In statistics, propagation of uncertainty is the effect of variables' uncertainties on the uncertainty of a function based on them. When the variables are the values of experimental measurements they have uncertainties due to measurement limitations which propagate due to the combination of variables in the function.
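A common first-order approximation, assuming the input variables are independent and their uncertainties are small, combines the partial derivatives of the function with the input standard deviations: σ_f ≈ sqrt(Σ (∂f/∂x_i)² σ_i²). The sketch below estimates the partial derivatives by central differences; the function name and the `rel_step` parameter are assumptions made for illustration.

```python
import math


def propagate_uncertainty(f, values, sigmas, rel_step=1e-6):
    """First-order uncertainty of f(*values), assuming independent inputs."""
    variance = 0.0
    for i, (v, s) in enumerate(zip(values, sigmas)):
        h = rel_step * max(1.0, abs(v))
        up = list(values)
        down = list(values)
        up[i] = v + h
        down[i] = v - h
        # Central-difference estimate of the partial derivative with respect to input i.
        partial = (f(*up) - f(*down)) / (2.0 * h)
        variance += (partial * s) ** 2
    return math.sqrt(variance)
```

For example, for the product `x * y` at `x = 2 ± 0.1` and `y = 3 ± 0.2`, the partial derivatives are 3 and 2, so the propagated uncertainty is about `sqrt(0.3² + 0.4²) = 0.5`.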

In statistics, particularly in hypothesis testing, the Hotelling's T-squared distribution (T²), proposed by Harold Hotelling, is a multivariate probability distribution that is tightly related to the F-distribution and is most notable for arising as the distribution of a set of sample statistics that are natural generalizations of the statistics underlying the Student's t-distribution. The Hotelling's t-squared statistic (t²) is a generalization of Student's t-statistic that is used in multivariate hypothesis testing.

In probability theory and statistics, the noncentral chi distribution is a noncentral generalization of the chi distribution. It is also known as the generalized Rayleigh distribution.

In probability theory, calculation of the sum of normally distributed random variables is an instance of the arithmetic of random variables, which can be quite complex based on the probability distributions of the random variables involved and their relationships.
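In the simplest case, two independent normal variables sum to a normal variable whose mean is the sum of the means and whose variance is the sum of the variances. If the two are jointly normal with correlation ρ, the variance gains a cross term 2ρσ_Xσ_Y. The helper below is a sketch under that joint-normality assumption; its name and signature are illustrative.

```python
import math


def sum_of_normals(mu_x, sigma_x, mu_y, sigma_y, rho=0.0):
    """Mean and standard deviation of X + Y for jointly normal X and Y.

    rho is the correlation between X and Y; rho = 0 covers the independent case.
    """
    if not -1.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [-1, 1]")
    variance = sigma_x ** 2 + sigma_y ** 2 + 2.0 * rho * sigma_x * sigma_y
    # Guard against tiny negative values caused by rounding when rho is near -1.
    return mu_x + mu_y, math.sqrt(max(variance, 0.0))
```

Note that standard deviations do not add directly in the independent case: values of 3 and 4 combine to 5, not 7.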

Oblate spheroidal coordinates are a three-dimensional orthogonal coordinate system that results from rotating the two-dimensional elliptic coordinate system about the non-focal axis of the ellipse, i.e., the symmetry axis that separates the foci. Thus, the two foci are transformed into a ring of radius a in the x-y plane. Oblate spheroidal coordinates can also be considered as a limiting case of ellipsoidal coordinates in which the two largest semi-axes are equal in length.

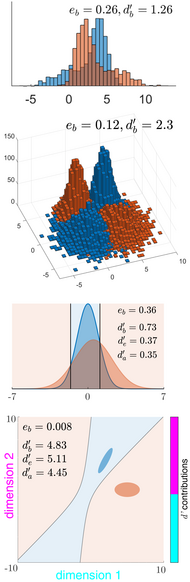

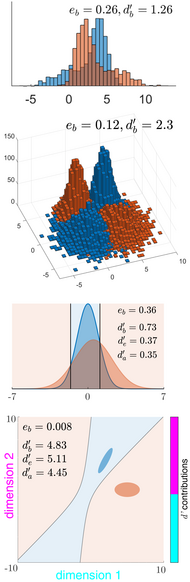

The sensitivity index or discriminability index or detectability index is a dimensionless statistic used in signal detection theory. A higher index indicates that the signal can be more readily detected.
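For two univariate normal distributions with a shared standard deviation, the index is usually written d′ = (μ_signal − μ_noise)/σ, and it is often estimated from a hit rate and a false-alarm rate as Z(hit) − Z(false alarm), where Z is the inverse of the standard normal cumulative distribution function. The sketch below assumes that equal-variance model; the function names are hypothetical.

```python
from statistics import NormalDist


def sensitivity_index(mu_signal, mu_noise, sigma):
    """d' for two normal distributions sharing the standard deviation sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return (mu_signal - mu_noise) / sigma


def d_prime_from_rates(hit_rate, false_alarm_rate):
    """Estimate d' as Z(hit rate) - Z(false-alarm rate); both rates must be in (0, 1)."""
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)
```

Equal hit and false-alarm rates give an index of 0, meaning the signal cannot be distinguished from noise.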

Bayesian linear regression is a type of conditional modeling in which the mean of one variable is described by a linear combination of other variables, with the goal of obtaining the posterior probability of the regression coefficients and ultimately allowing the out-of-sample prediction of the regressand conditional on observed values of the regressors. The simplest and most widely used version of this model is the normal linear model, in which the regressand y given the regressors X is Gaussian distributed. In this model, and under a particular choice of prior probabilities for the parameters (so-called conjugate priors), the posterior can be found analytically. With more arbitrarily chosen priors, the posteriors generally have to be approximated.

In statistics, the multivariate t-distribution is a multivariate probability distribution. It is a generalization to random vectors of the Student's t-distribution, which is a distribution applicable to univariate random variables. While the case of a random matrix could be treated within this structure, the matrix t-distribution is distinct and makes particular use of the matrix structure.

A ratio distribution is a probability distribution constructed as the distribution of the ratio of random variables having two other known distributions. Given two random variables X and Y, the distribution of the random variable Z that is formed as the ratio Z = X/Y is a ratio distribution.
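A well-known instance is the ratio of two independent standard normal variables, which follows a standard Cauchy distribution. The simulation below is a rough sketch of that case only; the function name and the fixed seed are illustrative choices.

```python
import random


def sample_normal_ratio(n, seed=0):
    """Draw n samples of Z = X / Y with X, Y independent standard normals."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < n:
        x = rng.gauss(0.0, 1.0)
        y = rng.gauss(0.0, 1.0)
        if y != 0.0:  # skip the (practically impossible) exact-zero denominator
            samples.append(x / y)
    return samples
```

For a standard Cauchy variable the median is 0 and half of the probability mass lies in (−1, 1), so about half of the simulated ratios should satisfy `abs(z) < 1`. The sample mean, by contrast, does not settle down as `n` grows, because the Cauchy distribution has no mean.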

In probability theory and statistics, the normal-inverse-gamma distribution is a four-parameter family of multivariate continuous probability distributions. It is the conjugate prior of a normal distribution with unknown mean and variance.

In probability theory, the family of complex normal distributions, denoted $\mathcal{CN}$ or $\mathcal{N}_{\mathcal{C}}$, characterizes complex random variables whose real and imaginary parts are jointly normal. The complex normal family has three parameters: location parameter $\mu$, covariance matrix $\Gamma$, and the relation matrix $C$. The standard complex normal is the univariate distribution with $\mu = 0$, $\Gamma = 1$, and $C = 0$.

In probability theory and statistics, the negative multinomial distribution is a generalization of the negative binomial distribution to more than two outcomes.

In probability theory, a logit-normal distribution is a probability distribution of a random variable whose logit has a normal distribution. If Y is a random variable with a normal distribution, and P is the standard logistic function, then X = P(Y) has a logit-normal distribution; likewise, if X is logit-normally distributed, then Y = logit(X) = log(X/(1 − X)) is normally distributed. It is also known as the logistic normal distribution, although that name often refers to a multinomial logit version.
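The two transformations can be sketched directly: pushing normal draws through the standard logistic function yields logit-normal samples in (0, 1), and applying the logit recovers the underlying normal values. The function names and the seeded generator below are illustrative assumptions.

```python
import math
import random


def logistic(y):
    """Standard logistic function, written to avoid overflow for large |y|."""
    if y >= 0:
        return 1.0 / (1.0 + math.exp(-y))
    e = math.exp(y)
    return e / (1.0 + e)


def logit(x):
    """Inverse of the logistic function: log(x / (1 - x)) for x in (0, 1)."""
    if not 0.0 < x < 1.0:
        raise ValueError("x must lie strictly between 0 and 1")
    return math.log(x / (1.0 - x))


def sample_logit_normal(n, mu=0.0, sigma=1.0, seed=0):
    """Draw n logit-normal samples by transforming normal draws."""
    rng = random.Random(seed)
    return [logistic(rng.gauss(mu, sigma)) for _ in range(n)]
```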