A CMUcam is a low-cost computer vision device intended for robotics research.

CMUcams consist of a small video camera and a microcontroller with a serial interface. While other digital cameras typically use a much higher-bandwidth connector, the CMUcam's lightweight interface allows it to be accessed by microcontrollers. More importantly, the on-board microprocessor supports simple image processing and color blob tracking, making rudimentary computer vision possible in systems that would previously have had far too little processing power for it.
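As a rough illustration of what color blob tracking involves, the sketch below thresholds each pixel against an RGB range and reports the centroid, bounding box, and pixel count of the matching region. It is a minimal sketch only: the function name `track_color`, the result layout, and the tiny test frame are assumptions made for illustration, and it is not the CMUcam firmware or its serial protocol.

```python
from typing import Dict, List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel 0-255


def track_color(
    image: List[List[Pixel]], lower: Pixel, upper: Pixel
) -> Optional[Dict[str, object]]:
    """Find all pixels whose channels lie within [lower, upper].

    Returns the centroid, bounding box, and pixel count of the matching
    pixels, or None if no pixel matches. Hypothetical helper, not a real API.
    """
    count = 0
    sum_x = sum_y = 0
    min_x = min_y = max_x = max_y = 0

    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            in_range = all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper))
            if not in_range:
                continue
            if count == 0:
                # First matching pixel initialises the bounding box.
                min_x = max_x = x
                min_y = max_y = y
            else:
                min_x, max_x = min(min_x, x), max(max_x, x)
                min_y, max_y = min(min_y, y), max(max_y, y)
            count += 1
            sum_x += x
            sum_y += y

    if count == 0:
        return None
    return {
        "centroid": (sum_x / count, sum_y / count),
        "bbox": (min_x, min_y, max_x, max_y),
        "pixels": count,
    }


# A 4x4 black frame with a 2x2 red square in the middle (made-up test data).
BLACK: Pixel = (0, 0, 0)
RED: Pixel = (220, 30, 25)
frame = [
    [BLACK, BLACK, BLACK, BLACK],
    [BLACK, RED, RED, BLACK],
    [BLACK, RED, RED, BLACK],
    [BLACK, BLACK, BLACK, BLACK],
]

result = track_color(frame, lower=(180, 0, 0), upper=(255, 80, 80))
print(result)
```

Because the routine makes a single pass over the frame and keeps only a few running totals, it needs no frame-sized working memory beyond the image itself, which is the kind of workload a small microcontroller can plausibly sustain.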

It has been used in past years by the high-school FIRST Robotics Competition as a way of letting participants' robots track field elements and navigate autonomously. The CMUcam also has an extremely small form factor. For these reasons, it is relatively popular for making small, mobile robots.

The original design was created at Carnegie Mellon University, which has licensed it to various manufacturers.

Pixy2 is the latest in the line of CMUcam sensors. It adds line tracking capability and an onboard light source to the previous CMUcam5, also known as the original Pixy. These sensors are produced in collaboration with Charmed Labs in Austin, TX.

Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. Understanding in this context means the transformation of visual images into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.

An embedded system is a computer system—a combination of a computer processor, computer memory, and input/output peripheral devices—that has a dedicated function within a larger mechanical or electronic system. It is embedded as part of a complete device often including electrical or electronic hardware and mechanical parts. Because an embedded system typically controls physical operations of the machine that it is embedded within, it often has real-time computing constraints. Embedded systems control many devices in common use. In 2009, it was estimated that ninety-eight percent of all microprocessors manufactured were used in embedded systems.

AVR is a family of microcontrollers developed since 1996 by Atmel, acquired by Microchip Technology in 2016. These are modified Harvard architecture 8-bit RISC single-chip microcontrollers. AVR was one of the first microcontroller families to use on-chip flash memory for program storage, as opposed to one-time programmable ROM, EPROM, or EEPROM used by other microcontrollers at the time.

Machine vision is the technology and methods used to provide imaging-based automatic inspection and analysis for applications such as process control and robot guidance, usually in industry. Machine vision refers to many technologies, software and hardware products, integrated systems, actions, methods and expertise. Machine vision as a systems engineering discipline can be considered distinct from computer vision, a form of computer science. It attempts to integrate existing technologies in new ways and apply them to solve real-world problems. The term is the prevalent one for these functions in industrial automation environments but is also used for these functions in other environments, such as vehicle guidance.

Lego Mindstorms is a discontinued line of educational kits for building programmable robots based on Lego bricks. It was introduced on 1 September 1998 and was discontinued on 31 December 2022.

A barcode reader or barcode scanner is an optical scanner that can read printed barcodes and send the data they contain to a computer. Like a flatbed scanner, it consists of a light source, a lens, and a light sensor for translating optical impulses into electrical signals. Additionally, nearly all barcode readers contain decoder circuitry that can analyse the barcode's image data provided by the sensor and send the barcode's content to the scanner's output port.

Motion capture is the process of recording the movement of objects or people. It is used in military, entertainment, sports, medical applications, and for validation of computer vision and robots. In filmmaking and video game development, it refers to recording actions of human actors and using that information to animate digital character models in 2D or 3D computer animation. When it includes face and fingers or captures subtle expressions, it is often referred to as performance capture. In many fields, motion capture is sometimes called motion tracking, but in filmmaking and games, motion tracking usually refers more to match moving.

Gesture recognition is an area of research and development in computer science and language technology concerned with the recognition and interpretation of human gestures. A subdiscipline of computer vision, it employs mathematical algorithms to interpret gestures.

BIG TRAK / bigtrak is a programmable toy electric vehicle created by Milton Bradley in 1979, resembling a futuristic Sci-Fi tank / utility vehicle. The original Big Trak was a six-wheeled tank with a front-mounted blue "photon beam" headlamp, and a keypad on top. The toy could remember up to 16 commands, which it then executed in sequence. There also was an optional cargo trailer accessory, with the UK version being white to match its colour scheme; once hooked to the Bigtrak, this trailer could be programmed to dump its payload.

Bio-mechatronics is an applied interdisciplinary science that aims to integrate biology and mechatronics. It also encompasses the fields of robotics and neuroscience. Biomechatronic devices cover a wide range of applications, from developing prosthetic limbs to engineering solutions concerning respiration, vision, and the cardiovascular system.

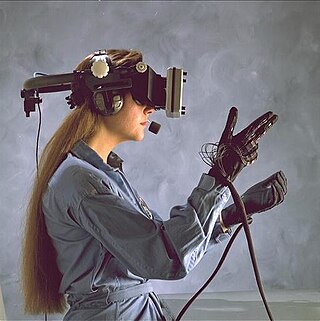

A wired glove is an input device for human–computer interaction worn like a glove.

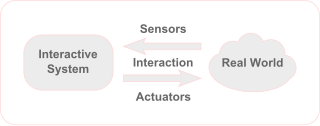

Physical computing involves interactive systems that can sense and respond to the world around them. While this definition is broad enough to encompass systems such as smart automotive traffic control systems or factory automation processes, it is not commonly used to describe them. In a broader sense, physical computing is a creative framework for understanding human beings' relationship to the digital world. In practical use, the term most often describes handmade art, design or DIY hobby projects that use sensors and microcontrollers to translate analog input to a software system, and/or control electro-mechanical devices such as motors, servos, lighting or other hardware.

A mobile robot is an automatic machine that is capable of locomotion. Mobile robotics is usually considered to be a subfield of robotics and information engineering.

ITUpSAT1, short for Istanbul Technical University picoSatellite-1, is a single CubeSat built by the Faculty of Aeronautics and Astronautics at the Istanbul Technical University. It was launched on 23 September 2009 atop a PSLV-C14 satellite launch vehicle from Satish Dhawan Space Centre, Sriharikota, Andhra Pradesh in India, and became the first Turkish university satellite to orbit the Earth. It was expected to have a minimum lifetime of six months, but was still functioning more than two years after launch. It is a picosatellite with side lengths of 10 centimetres (3.9 in) and a mass of 0.990 kilograms (2.18 lb).

PrimeSense was an Israeli 3D sensing company based in Tel Aviv. PrimeSense had offices in Israel, North America, Japan, Singapore, Korea, China and Taiwan. PrimeSense was bought by Apple Inc. for $360 million on November 24, 2013.

The Nikon Expeed image/video processors are media processors for Nikon's digital cameras. They perform a large number of tasks: Bayer filtering, demosaicing, image sensor corrections/dark-frame subtraction, image noise reduction, image sharpening, image scaling, gamma correction, image enhancement/Active D-Lighting, colorspace conversion, chroma subsampling, framerate conversion, lens distortion/chromatic aberration correction, image compression/JPEG encoding, video compression, display/video interface driving, digital image editing, face detection, audio processing/compression/encoding and computer data storage/data transmission.

ArduPilot is an open source autopilot software suite for uncrewed vehicles.

Salvius is an open source humanoid robot built in the United States in 2008, the first of its kind. Its name is derived from the word 'salvaged', as it was constructed with an emphasis on using recycled components and materials to reduce the costs of design and construction. Its humanoid body structure is intended to let the robot perform a wide range of tasks. The primary goal of the Salvius project is to create a robot that can function dynamically in a domestic environment.

In virtual reality (VR) and augmented reality (AR), a pose tracking system detects the precise pose of head-mounted displays, controllers, other objects or body parts within Euclidean space. Pose tracking is often referred to as 6DOF tracking, for the six degrees of freedom in which the pose is often tracked.