

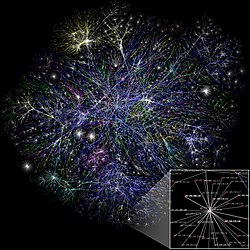

The hierarchical navigable small world (HNSW) algorithm is a graph-based approximate nearest neighbor search technique used in many vector databases. [1] Nearest neighbor search without an index involves computing the distance from the query to each point in the database, which is computationally prohibitive for large datasets. For high-dimensional data, tree-based exact search techniques such as the k-d tree and R-tree do not perform well enough because of the curse of dimensionality. To remedy this, approximate k-nearest neighbor search methods have been proposed, such as locality-sensitive hashing (LSH) and product quantization (PQ), which trade accuracy for performance. [1] The HNSW graph offers an approximate k-nearest neighbor search that scales logarithmically even on high-dimensional data.
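To make the cost of an unindexed search concrete, the following is a minimal sketch of exhaustive k-nearest neighbor search in Python. The function name `brute_force_knn` and the sample data are illustrative assumptions, not part of any particular library; Euclidean distance is assumed as the metric.

```python
import math

def brute_force_knn(points, query, k):
    """Return the indices of the k points closest to query (Euclidean distance)."""
    # One distance computation per stored point, so the work per query
    # grows linearly with the size of the database.
    distances = [(math.dist(p, query), i) for i, p in enumerate(points)]
    distances.sort()
    return [i for _, i in distances[:k]]

# Hypothetical sample data for illustration only.
points = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0), (0.9, 1.2)]
nearest = brute_force_knn(points, (1.0, 1.0), k=2)
print(nearest)
```

Because every query touches every point, this approach gives exact results but becomes impractical as the database grows, which is the motivation for approximate indexes such as HNSW.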

HNSW extends earlier work on navigable small world graphs, presented at the Similarity Search and Applications (SISAP) conference in 2012, by adding a hierarchical navigation structure that finds entry points to the main graph faster. [2] HNSW-based libraries are among the best performers in the approximate nearest neighbors benchmark. [3] [4]
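The idea of using a hierarchy to find entry points can be sketched as a greedy descent through layered graphs. The code below is a simplified illustration under stated assumptions, not the full HNSW algorithm: the layers are built by hand rather than by random level assignment, only a single best candidate is tracked instead of a candidate list, and the names `greedy_search` and `hierarchical_search` are hypothetical.

```python
import math

def greedy_search(points, neighbors, entry, query):
    """Walk one layer, moving to a closer neighbor until none improves."""
    current = entry
    current_dist = math.dist(points[current], query)
    improved = True
    while improved:
        improved = False
        # Examine the neighbors of the node we started this pass from
        # and keep the closest one found so far.
        for n in neighbors.get(current, []):
            d = math.dist(points[n], query)
            if d < current_dist:
                current, current_dist = n, d
                improved = True
    return current

def hierarchical_search(points, layers, entry, query):
    """Descend from the sparsest layer to the full graph.

    The result of each layer becomes the entry point of the next one.
    """
    for neighbors in layers:
        entry = greedy_search(points, neighbors, entry, query)
    return entry

# Hypothetical data: eight points on a line.
points = [(float(i),) for i in range(8)]
# Sparse top layer with long-range links between a few nodes.
top = {0: [4], 4: [0, 7], 7: [4]}
# Bottom layer: every node linked to its immediate neighbors.
bottom = {i: [j for j in (i - 1, i + 1) if 0 <= j < 8] for i in range(8)}

result = hierarchical_search(points, [top, bottom], entry=0, query=(5.2,))
print(result)
```

In this toy example the top layer jumps from node 0 to node 4 in a single hop, and the bottom layer then needs only one more step to reach node 5, the closest point to the query. Greedy descent of this kind can stop at a local minimum, which is one reason the search is approximate.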

A related technique is IVFFlat. [5]