Let $A$ be the adjacency matrix of a network under consideration. Elements $a_{ij}$ of $A$ are variables that take a value 1 if a node $i$ is connected to node $j$ and 0 otherwise. The powers of $A$ indicate the presence (or absence) of links between two nodes through intermediaries. For instance, in matrix $A^3$, if element $(A^3)_{2,12} = 1$, it indicates that node 2 and node 12 are connected through some walk of length 3. If $C_{\mathrm{Katz}}(i)$ denotes Katz centrality of a node $i$, then, given a value $\alpha \in (0,1)$, mathematically:

$$C_{\mathrm{Katz}}(i) = \sum_{k=1}^{\infty} \sum_{j=1}^{n} \alpha^k (A^k)_{ji}$$
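The definition can be checked numerically by truncating the infinite sum. The following is a minimal sketch, assuming NumPy. The graph (edges 0 → 1, 1 → 2, 2 → 3, 3 → 1), the function name `katz_series` and the truncation depth `k_max` are hypothetical choices for illustration. It also shows that an entry of $A^3$ counts walks of length 3. For this graph the largest eigenvalue has absolute value 1, so $\alpha = 0.5$ lies below the required bound.

```python
import numpy as np

def katz_series(A, alpha, k_max=50):
    """Truncated Katz series: C(i) = sum_{k=1}^{k_max} sum_j alpha^k (A^k)_{ji}."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    scores = np.zeros(n)
    A_k = np.eye(n)
    for k in range(1, k_max + 1):
        A_k = A_k @ A                          # A_k now holds A^k
        scores += alpha**k * A_k.sum(axis=0)   # column sums: sum over j of (A^k)_{ji}
    return scores

# Hypothetical graph with edges 0 -> 1, 1 -> 2, 2 -> 3 and 3 -> 1.
A = np.array([[0, 1, 0, 0],
              [0, 0, 1, 0],
              [0, 0, 0, 1],
              [0, 1, 0, 0]])

A3 = np.linalg.matrix_power(A, 3)
print(A3[0, 3])                  # 1: the single walk 0 -> 1 -> 2 -> 3 of length 3
print(katz_series(A, alpha=0.5))
```

Node 0 has no incoming edges, so every column-0 entry of $A^k$ is zero and its score is exactly 0.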

Note that the definition of $C_{\mathrm{Katz}}(i)$ uses the fact that the element at location $(i,j)$ of $A^k$ reflects the total number of walks of length $k$ (that is, $k$-degree connections) from node $i$ to node $j$. The value of the attenuation factor $\alpha$ has to be chosen such that it is smaller than the reciprocal of the absolute value of the largest eigenvalue of $A$, that is, $\alpha < 1/\rho(A)$, where $\rho(A)$ is the spectral radius of $A$. [5] In this case the geometric series $\sum_{k=1}^{\infty} (\alpha A^T)^k$ converges to $(I - \alpha A^T)^{-1} - I$, and the following expression can be used to calculate Katz centrality:

$$\mathbf{C}_{\mathrm{Katz}} = \left( (I - \alpha A^T)^{-1} - I \right) \mathbf{1}$$

Here $\mathbf{C}_{\mathrm{Katz}}$ is the vector whose $i$-th entry is $C_{\mathrm{Katz}}(i)$, $I$ is the identity matrix, and $\mathbf{1}$ is a vector of size $n$ ($n$ is the number of nodes) consisting of ones. $A^T$ denotes the transpose of $A$, and $(I - \alpha A^T)^{-1}$ denotes the inverse of the matrix $(I - \alpha A^T)$. [5]
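The closed-form expression can be evaluated with a single linear solve instead of an explicit matrix inverse. The following is a minimal sketch, assuming NumPy. The function name `katz_closed_form` and the example graph are hypothetical. The spectral-radius check mirrors the condition on $\alpha$ stated above.

```python
import numpy as np

def katz_closed_form(A, alpha):
    """Katz centrality vector C = ((I - alpha A^T)^{-1} - I) 1."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    rho = np.max(np.abs(np.linalg.eigvals(A)))   # spectral radius of A
    if rho > 0 and alpha >= 1.0 / rho:
        raise ValueError(f"alpha must be smaller than 1/rho(A) = {1.0 / rho:.4g}")
    ones = np.ones(n)
    # Solving (I - alpha A^T) x = 1 avoids forming the inverse explicitly;
    # subtracting 1 afterwards removes the identity (k = 0) term.
    x = np.linalg.solve(np.eye(n) - alpha * A.T, ones)
    return x - ones

# Hypothetical graph with edges 0 -> 1, 1 -> 2, 2 -> 3 and 3 -> 1, so rho(A) = 1.
A = np.array([[0, 1, 0, 0],
              [0, 0, 1, 0],
              [0, 0, 0, 1],
              [0, 1, 0, 0]])
print(katz_closed_form(A, alpha=0.5))   # approximately [0, 11/7, 9/7, 8/7]
```

Node 1 scores highest because it is reached both from node 0 and from the cycle through node 3. For large sparse networks, an iterative solver would usually replace the dense `np.linalg.solve`.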

An extension of this framework allows for the walks to be computed in a dynamical setting. [6] [7] By taking a time-dependent series of network adjacency snapshots of the transient edges, walks spread over successive snapshots are allowed to contribute toward a cumulative effect. The arrow of time is preserved so that the contribution of activity is asymmetric in the direction of information propagation.

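Consider a network producing data of the form:

$$\{ A^{[k]} \in \mathbb{R}^{n \times n} \} \quad \text{for} \quad k = 0, 1, 2, \ldots, M,$$

representing the adjacency matrix at each time $t_k$. Hence:

$$A^{[k]}_{ij} = \begin{cases} 1 & \text{if there is an edge from node } i \text{ to node } j \text{ at time } t_k, \\ 0 & \text{otherwise.} \end{cases}$$

The time points $t_0 < t_1 < \cdots < t_M$ are ordered but not necessarily equally spaced.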
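In practice, such snapshots are often built from a log of timestamped edges. The following is a minimal sketch, assuming NumPy and a hypothetical input format of `(t, i, j)` tuples. The function `build_snapshots` is likewise illustrative. It produces one snapshot per distinct time stamp, in increasing time order, so times at which no edge is observed get no snapshot.

```python
import numpy as np

def build_snapshots(events, n):
    """Turn (t, i, j) edge events into time-ordered adjacency snapshots.

    Returns (times, snapshots), where snapshots[k][i, j] = 1 if there is an
    edge from node i to node j at time times[k], and 0 otherwise.
    """
    times = sorted({t for t, _, _ in events})       # t_0 < t_1 < ... < t_M
    index = {t: k for k, t in enumerate(times)}
    snapshots = [np.zeros((n, n)) for _ in times]
    for t, i, j in events:
        snapshots[index[t]][i, j] = 1.0
    return times, snapshots

# Hypothetical event log; the time points are not equally spaced.
events = [(0.0, 0, 1), (0.0, 2, 3), (2.5, 1, 2), (7.0, 2, 0)]
times, snaps = build_snapshots(events, n=4)
print(times)   # [0.0, 2.5, 7.0]
```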

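Let $Q \in \mathbb{R}^{n \times n}$ be the matrix whose entry $Q_{ij}$ is a weighted count of the dynamic walks from node $i$ to node $j$, where a walk of length $w$ receives the weight $\alpha^w$. The form for the dynamic communicability between participating nodes is:

$$Q = (I - \alpha A^{[0]})^{-1} (I - \alpha A^{[1]})^{-1} \cdots (I - \alpha A^{[M]})^{-1}$$

Because the factors are multiplied in chronological order, a dynamic walk may take any number of steps within one snapshot but can only move forward in time. For each factor to expand as a convergent series, $\alpha$ must satisfy $\alpha < 1/\rho(A^{[k]})$ for every $k$.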
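The asymmetry in time can be seen on a tiny example. The following is a minimal sketch, assuming NumPy. The function name `dynamic_communicability` and the three-node snapshots are hypothetical. In the example, an edge 0 → 1 at $t_0$ followed by an edge 1 → 2 at $t_1$ yields a time-respecting walk from node 0 to node 2. The same edges presented in the opposite order yield none.

```python
import numpy as np

def dynamic_communicability(snapshots, alpha):
    """Q = (I - alpha A[0])^{-1} (I - alpha A[1])^{-1} ... (I - alpha A[M])^{-1}.

    Assumes alpha < 1 / rho(A[k]) for every snapshot k (not checked here).
    """
    n = snapshots[0].shape[0]
    identity = np.eye(n)
    Q = np.eye(n)
    for A_k in snapshots:                         # chronological order t_0, ..., t_M
        Q = Q @ np.linalg.inv(identity - alpha * A_k)
    return Q

# Hypothetical 3-node example: edge 0 -> 1 at t_0, then edge 1 -> 2 at t_1.
A0 = np.zeros((3, 3))
A0[0, 1] = 1.0
A1 = np.zeros((3, 3))
A1[1, 2] = 1.0

Q_forward = dynamic_communicability([A0, A1], alpha=0.5)
Q_reversed = dynamic_communicability([A1, A0], alpha=0.5)
print(Q_forward[0, 2])    # 0.25 = alpha**2, from the time-respecting walk 0 -> 1 -> 2
print(Q_reversed[0, 2])   # zero: with the order reversed, no such walk exists
```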

The matrix $Q$ can be normalized via:

$$\hat{Q} = \frac{Q}{\|Q\|}$$

This yields centrality measures that quantify how effectively node $i$ can 'broadcast' and 'receive' dynamic messages across the network, namely the row and column sums of $\hat{Q}$:

$$C_i^{\text{broadcast}} := \sum_{j=1}^{n} \hat{Q}_{ij}, \qquad C_i^{\text{receive}} := \sum_{j=1}^{n} \hat{Q}_{ji}.$$
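The broadcast and receive scores are simply the row and column sums of the normalized communicability matrix. The following is a minimal sketch, assuming NumPy. The function name `broadcast_receive` and the example snapshots are hypothetical. The choice of the spectral (2-)norm for $\|Q\|$ is also an assumption, since the text does not fix the norm. Any positive rescaling leaves the ranking of nodes unchanged.

```python
import numpy as np

def broadcast_receive(snapshots, alpha):
    """Broadcast and receive centralities from the normalized communicability matrix."""
    n = snapshots[0].shape[0]
    identity = np.eye(n)
    Q = np.eye(n)
    for A_k in snapshots:                        # time-ordered product of resolvents
        Q = Q @ np.linalg.inv(identity - alpha * A_k)
    Q_hat = Q / np.linalg.norm(Q, 2)             # assumption: spectral (2-)norm
    broadcast = Q_hat.sum(axis=1)                # row sums:    C_i^broadcast
    receive = Q_hat.sum(axis=0)                  # column sums: C_i^receive
    return broadcast, receive

# Hypothetical snapshots: edge 0 -> 1 at t_0, then edge 1 -> 2 at t_1.
A0 = np.zeros((3, 3))
A0[0, 1] = 1.0
A1 = np.zeros((3, 3))
A1[1, 2] = 1.0

broadcast, receive = broadcast_receive([A0, A1], alpha=0.5)
print(np.argmax(broadcast), np.argmax(receive))   # 0 2
```

Node 0 starts the only time-respecting chain and is the best broadcaster. Node 2 sits at its end and is the best receiver.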

Applications

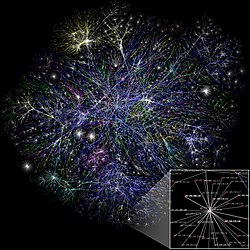

Katz centrality can be used to compute centrality in directed networks such as citation networks and the World Wide Web. [11]

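Katz centrality is especially suitable for the analysis of directed acyclic graphs, where traditionally used measures such as eigenvector centrality are rendered useless, because the adjacency matrix of such a graph is nilpotent and all of its eigenvalues are zero. [11]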
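A directed path illustrates the point. The following is a minimal sketch, assuming NumPy; the four-node path is a hypothetical example. Its adjacency matrix satisfies $A^4 = 0$, so there is no positive leading eigenvalue for eigenvector centrality to use. The Katz series, in contrast, terminates after three terms, and the bound on $\alpha$ places no restriction here.

```python
import numpy as np

# Hypothetical DAG: a directed path 0 -> 1 -> 2 -> 3.
A = np.zeros((4, 4))
for i in range(3):
    A[i, i + 1] = 1.0

# Nilpotent: the longest walk has length 3, so A^4 is the zero matrix
# and every eigenvalue of A is 0.
print(np.linalg.matrix_power(A, 4).any())   # False

# Katz centrality is still well defined: the series is a finite sum.
alpha = 0.5
katz = np.linalg.solve(np.eye(4) - alpha * A.T, np.ones(4)) - 1.0
print(katz)   # approximately [0, 0.5, 0.75, 0.875]
```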

Katz centrality can also be used in estimating the relative status or influence of actors in a social network. The work presented in [12] describes a case study in which a dynamic version of Katz centrality is applied to data from Twitter, focusing on particular brands that have stable discussion leaders. The application allows the methodology to be compared with the judgement of human experts in the field, and its results agree with those of a panel of social media experts.

In neuroscience, Katz centrality has been found to correlate with the relative firing rate of neurons in a neural network. [13] In [14], the temporal extension of Katz centrality is applied to fMRI data obtained from a musical learning experiment, in which data are collected from the subjects before and after the learning process. The results show that the changes in network structure during the musical exposure in each session yield a quantification of cross-communicability that produces clusters in line with the success of learning.

A generalized form of Katz centrality can be used as an intuitive ranking system for sports teams, such as in college football. [15]

Alpha centrality is implemented in the igraph library for network analysis and visualization. [16]

This page is based on this Wikipedia article. Text is available under the CC BY-SA 4.0 license; additional terms may apply.

Images, videos and audio are available under their respective licenses.