In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). There are numerous approaches to syntax that differ in their central assumptions and goals.

In linguistics, transformational grammar (TG) or transformational-generative grammar (TGG) is part of the theory of generative grammar, especially of natural languages. It considers grammar to be a system of rules that generate exactly those combinations of words that form grammatical sentences in a given language and involves the use of defined operations to produce new sentences from existing ones.

An adjective phrase is a phrase whose head is an adjective. Almost any grammar or syntax textbook or dictionary of linguistics terminology defines the adjective phrase in a similar way, e.g. Kesner Bland (1996:499), Crystal (1996:9), Greenbaum (1996:288ff.), Haegeman and Guéron (1999:70f.), Brinton (2000:172f.), Jurafsky and Martin (2000:362). The adjective can initiate the phrase, conclude the phrase, or appear in a medial position. The dependents of the head adjective (i.e. the other words and phrases inside the adjective phrase) are typically adverb or prepositional phrases, but they can also be clauses. Adjectives and adjective phrases function in two basic ways, attributively or predicatively. An attributive adjective (phrase) precedes the noun of a noun phrase. A predicative adjective (phrase) follows a linking verb and serves to describe the preceding subject, e.g. "The man is very happy."

Lexical semantics, as a subfield of linguistic semantics, is the study of word meanings. It includes the study of how words structure their meaning, how they act in grammar and compositionality, and the relationships between the distinct senses and uses of a word.

In linguistics, X-bar theory is a model of phrase-structure grammar and a theory of syntactic category formation. It was first proposed by Noam Chomsky in 1970, reformulating the ideas of Zellig Harris (1951), and was further developed by Ray Jackendoff along the lines of the theory of generative grammar put forth in the 1950s by Chomsky. It attempts to capture the structure of phrasal categories with a single uniform structure called the X-bar schema, on the assumption that any phrase in natural language is an XP headed by a given syntactic category X. It played a significant role in resolving problems with phrase structure rules, most notably the proliferation of grammatical rules, which runs counter to the thesis of generative grammar.
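The idea of one uniform template for every category can be illustrated with a small sketch. It assumes the commonly cited form of the schema (XP → (Specifier) X′, X′ → X (Complement)), leaves out adjuncts, and represents trees as nested tuples; the function name `project` and the example words are hypothetical choices made for illustration only.

```python
def project(category, word, complement=None, specifier=None):
    """Build an XP around a head of the given category using one shared template."""
    head = (category, word)
    # X' -> X (Complement): the head combines with an optional complement.
    if complement is None:
        x_bar = (category + "'", head)
    else:
        x_bar = (category + "'", head, complement)
    # XP -> (Specifier) X': the intermediate projection combines with an optional specifier.
    if specifier is None:
        return (category + "P", x_bar)
    return (category + "P", specifier, x_bar)


# The same function builds phrases of any category; no category-specific rules are needed.
np = project("N", "cake")
vp = project("V", "likes", complement=np)
pp = project("P", "with", complement=project("N", "sugar"))
```

Because `project` is parameterized by the category, adding a new kind of phrase requires no new rule, which is the economy the schema is meant to achieve.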

Generative grammar is a research tradition in linguistics that aims to explain the cognitive basis of language by formulating and testing explicit models of humans' subconscious grammatical knowledge. Generative linguists tend to share certain working assumptions such as the competence-performance distinction and the notion that some domain-specific aspects of grammar are partly innate. These assumptions are rejected in non-generative approaches such as usage-based models of language. Generative linguistics includes work in core areas such as syntax, semantics, phonology, psycholinguistics, and language acquisition, with additional extensions to topics including biolinguistics and music cognition.

In linguistics, the minimalist program is a major line of inquiry that has been developing inside generative grammar since the early 1990s, starting with a 1993 paper by Noam Chomsky.

Montague grammar is an approach to natural language semantics, named after American logician Richard Montague. Montague grammar is based on mathematical logic, especially higher-order predicate logic and lambda calculus, and makes use of the notions of intensional logic, via Kripke models. Montague pioneered this approach in the 1960s and early 1970s.

In generative grammar and related frameworks, a node in a parse tree c-commands its sister node and all of its sister's descendants. In these frameworks, c-command plays a central role in defining and constraining operations such as syntactic movement, binding, and scope. Tanya Reinhart introduced c-command in 1976 as a key component of her theory of anaphora. The term is short for "constituent command".
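The definition above (a node c-commands its sister and all of its sister's descendants) can be sketched as a small tree computation. The `Node` class, the helper names, and the flat analysis of "Frank likes cake" are illustrative assumptions, not part of any particular framework.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Node:
    label: str
    children: List[Node] = field(default_factory=list)
    parent: Optional[Node] = field(default=None, repr=False)

    def __post_init__(self):
        # Record the parent link so sisters can be found later.
        for child in self.children:
            child.parent = self


def descendants(node):
    """Yield every node properly dominated by `node`, in preorder."""
    for child in node.children:
        yield child
        yield from descendants(child)


def c_commanded_by(node):
    """Return the nodes that `node` c-commands: its sisters and their descendants."""
    if node.parent is None:
        return []  # the root has no sister
    result = []
    for sister in node.parent.children:
        if sister is not node:
            result.append(sister)
            result.extend(descendants(sister))
    return result


# Assumed structure: [S [NP Frank] [VP [V likes] [NP cake]]]
subject = Node("NP:Frank")
verb = Node("V:likes")
obj = Node("NP:cake")
vp = Node("VP", [verb, obj])
s = Node("S", [subject, vp])

subject_domain = [n.label for n in c_commanded_by(subject)]
object_domain = [n.label for n in c_commanded_by(obj)]
```

In this tree the subject c-commands the object, but the object does not c-command the subject; asymmetries of this kind are what accounts of binding and scope typically rely on.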

The term predicate is used in two ways in linguistics and its subfields. The first defines a predicate as everything in a standard declarative sentence except the subject, and the other defines it as only the main content verb or associated predicative expression of a clause. Thus, by the first definition, the predicate of the sentence "Frank likes cake" is "likes cake", while by the second definition, it is only the content verb "likes", and "Frank" and "cake" are the arguments of this predicate. The conflict between these two definitions can lead to confusion.

In linguistics, an argument is an expression that helps complete the meaning of a predicate, the latter referring in this context to a main verb and its auxiliaries. In this regard, the complement is a closely related concept. Most predicates take one, two, or three arguments. A predicate and its arguments form a predicate-argument structure. The discussion of predicates and arguments is associated most with (content) verbs and noun phrases (NPs), although other syntactic categories can also be construed as predicates and as arguments. Arguments must be distinguished from adjuncts. While a predicate needs its arguments to complete its meaning, the adjuncts that appear with a predicate are optional; they are not necessary to complete the meaning of the predicate. Most theories of syntax and semantics acknowledge arguments and adjuncts, although the terminology varies, and the distinction is generally believed to exist in all languages. Dependency grammars sometimes call arguments actants, following Lucien Tesnière (1959).

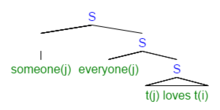

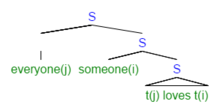

Antecedent-contained deletion (ACD), also called antecedent-contained ellipsis, is a phenomenon whereby an elided verb phrase appears to be contained within its own antecedent. For instance, in the sentence "I read every book that you did", the verb phrase in the main clause appears to license ellipsis inside the relative clause which modifies its object. ACD is a classic puzzle for theories of the syntax-semantics interface, since it threatens to introduce an infinite regress. It is commonly taken as motivation for syntactic transformations such as quantifier raising, though some approaches explain it using semantic composition rules or by adopting more flexible notions of what it means to be a syntactic unit.

In generative grammar, the technical term operator denotes a type of expression that enters into an A-bar movement dependency. One often says that the operator "binds a variable".

The linguistics wars were extended disputes among American theoretical linguists that occurred mostly during the 1960s and 1970s, stemming from a disagreement between Noam Chomsky and several of his associates and students. The debates started in 1967 when linguists Paul Postal, John R. Ross, George Lakoff, and James D. McCawley (self-dubbed the "Four Horsemen of the Apocalypse") proposed an alternative view of the relation between semantics and syntax, one that treated deep structures as meanings rather than syntactic objects. While Chomsky and other generative grammarians argued that meaning is driven by an underlying syntax, generative semanticists posited that syntax is shaped by an underlying meaning. This intellectual divergence led to two competing frameworks: generative semantics and interpretive semantics.

Merge is one of the basic operations in the Minimalist Program, a leading approach to generative syntax. It combines two syntactic objects to form a new syntactic unit. Merge also has the property of recursion in that it may be applied to its own output: the objects combined by Merge are either lexical items or sets that were themselves formed by Merge. This recursive property of Merge has been claimed to be a fundamental characteristic that distinguishes language from other cognitive faculties. As Noam Chomsky (1999) puts it, Merge is "an indispensable operation of a recursive system ... which takes two syntactic objects A and B and forms the new object G={A,B}" (p. 2).
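The set-forming character of Merge and its recursive application can be illustrated with a minimal sketch. It assumes that lexical items can be stood in for by plain strings and that the output is a bare unordered set; labeling and every other detail of actual Minimalist proposals are deliberately left out, and the helper `depth` is a hypothetical addition for inspecting the result.

```python
def merge(a, b):
    """Combine two syntactic objects A and B into the unordered set {A, B}."""
    return frozenset({a, b})


def depth(obj):
    """Count how many nested applications of merge an object contains."""
    if isinstance(obj, str):  # a lexical item (modeled as a string by assumption)
        return 0
    return 1 + max(depth(part) for part in obj)


# Merge two lexical items, then apply Merge again to its own output.
vp = merge("likes", "cake")
clause = merge("Frank", vp)
```

Since the result is a set, `merge("likes", "cake")` and `merge("cake", "likes")` are the same object: in this sketch Merge builds hierarchy but encodes no linear order.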

In semantics, a donkey sentence is a sentence containing a pronoun which is semantically bound but syntactically free. Donkey sentences are a classic puzzle in formal semantics and philosophy of language because they are fully grammatical and yet defy straightforward attempts to generate their formal language equivalents. In order to explain how speakers are able to understand them, semanticists have proposed a variety of formalisms including systems of dynamic semantics such as discourse representation theory. Their name comes from the example sentence "Every farmer who owns a donkey beats it", in which the pronoun "it" acts as a donkey pronoun because it is semantically but not syntactically bound by the indefinite noun phrase "a donkey". The phenomenon is known as donkey anaphora.
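The difficulty can be made concrete with two first-order formulas. This is an illustrative sketch: the predicate names are chosen here for exposition, and the second formula gives the universal reading that is most often assumed for the example sentence.

```latex
% Naive translation: "a donkey" is an existential inside the relative clause,
% so the final occurrence of y falls outside its scope and is left unbound.
\forall x\,\bigl[\bigl(\mathrm{farmer}(x) \land \exists y\,[\mathrm{donkey}(y) \land \mathrm{owns}(x,y)]\bigr) \rightarrow \mathrm{beats}(x,y)\bigr]

% Commonly assumed truth conditions: the indefinite ends up with universal force
% and wide scope, which does not follow from its usual existential meaning.
\forall x\,\forall y\,\bigl[\bigl(\mathrm{farmer}(x) \land \mathrm{donkey}(y) \land \mathrm{owns}(x,y)\bigr) \rightarrow \mathrm{beats}(x,y)\bigr]
```

The first formula mirrors the syntax but leaves the pronoun's variable free; the second captures the understood meaning but cannot be derived by treating "a donkey" as an ordinary existential quantifier in its surface position.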

Formal semantics is the study of grammatical meaning in natural languages using formal tools from logic, mathematics and theoretical computer science. It is an interdisciplinary field, sometimes regarded as a subfield of both linguistics and philosophy of language. It provides accounts of what linguistic expressions mean and how their meanings are composed from the meanings of their parts. The enterprise of formal semantics can be thought of as that of reverse-engineering the semantic components of natural languages' grammars.

In formal semantics, the scope of a semantic operator is the semantic object to which it applies. For instance, in the sentence "Paulina doesn't drink beer but she does drink wine," the proposition that Paulina drinks beer occurs within the scope of negation, but the proposition that Paulina drinks wine does not. Scope can be thought of as the semantic order of operations.
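The "order of operations" analogy can be sketched by evaluating two formulas against small models. The models, the function names, and the representation of a model as a set of (person, beverage) pairs are all assumptions made for illustration.

```python
def drinks(model, person, beverage):
    """True if the model records that `person` drinks `beverage`."""
    return (person, beverage) in model


def narrow_negation(model):
    # "Paulina doesn't drink beer but she does drink wine":
    # negation applies to the beer proposition only.
    return (not drinks(model, "Paulina", "beer")) and drinks(model, "Paulina", "wine")


def wide_negation(model):
    # A different formula, shown for contrast: negation applies to the whole conjunction.
    return not (drinks(model, "Paulina", "beer") and drinks(model, "Paulina", "wine"))


drinks_nothing = set()
drinks_wine_only = {("Paulina", "wine")}
```

In the model where Paulina drinks nothing, `narrow_negation` is false while `wide_negation` is true, so changing what falls inside the scope of negation changes the truth conditions.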

In formal semantics, a type shifter is an interpretation rule that changes an expression's semantic type. For instance, the English expression "John" might ordinarily denote John himself, but a type shifting rule called Lift can raise its denotation to a function which takes a property and returns "true" if John himself has that property. Lift can be seen as mapping an individual onto the principal ultrafilter that it generates.

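- Without type shifting: "John" denotes the individual John himself.

- Type shifting with Lift: "John" denotes a function which takes a property and returns "true" if John himself has that property.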
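The effect of Lift can be sketched with ordinary higher-order functions. This is a minimal illustration: entities are modeled as strings and properties as Boolean-valued functions, and the names `lift`, `is_happy`, and `happy_people` are hypothetical.

```python
from typing import Callable

Entity = str
Property = Callable[[Entity], bool]


def lift(x: Entity) -> Callable[[Property], bool]:
    """Map an individual to the function that tests whether a property holds of it."""
    return lambda prop: prop(x)


# A toy model (assumed for illustration): the set of happy individuals.
happy_people = {"John"}


def is_happy(x: Entity) -> bool:
    return x in happy_people


john = "John"             # without type shifting: an individual
lifted_john = lift(john)  # with Lift: a function over properties

# Either order of application yields the same truth value.
direct = is_happy(john)
shifted = lifted_john(is_happy)
```

After lifting, the subject is the function and the predicate is its argument, yet the resulting truth value is unchanged; this is what lets a lifted name combine in the same way as a quantifier phrase.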

In linguistics, the syntax–semantics interface is the interaction between syntax and semantics. Its study encompasses phenomena that pertain to both syntax and semantics, with the goal of explaining correlations between form and meaning. Specific topics include scope, binding, and lexical semantic properties such as verbal aspect and nominal individuation, semantic macroroles, and unaccusativity.